Incomplete debug results for pipeline with two retrievers

Describe the bug

When a pipeline that contains two retrievers is run with the `debug=True` parameter, the debug results contain the results of only one of the two retrievers. Because there are two retrievers, the pipeline execution is split into two execution streams, as expected, but the aggregation of the debug results drops the debug results of the second retriever.
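To illustrate how one branch can go missing, here is a minimal, self-contained sketch in plain Python. It does not use Haystack and is not its actual `Pipeline.run` code. It assumes, hypothetically, that the executor returns only the output of the last node it runs and that each node's debug info travels inside its output to downstream nodes. Under that assumption, a leaf on the other branch (here `ESRetriever`) disappears from `_debug`. Collecting every node's debug entries into one dict, independent of which output is returned, keeps both branches. `run_dag`, `make_node`, `collect_all` and the toy nodes are hypothetical.

```python
from collections import deque
from typing import Callable, Dict, List

# Toy DAG mirroring the issue: a classifier fans out to two retrievers and the
# reader is only downstream of DPRRetriever. Both branches run, as the issue describes.
EDGES: Dict[str, List[str]] = {
    "Query": ["QueryClassifier"],
    "QueryClassifier": ["DPRRetriever", "ESRetriever"],
    "DPRRetriever": ["QAReader"],
    "ESRetriever": [],
    "QAReader": [],
}


def make_node(name: str) -> Callable[[dict], dict]:
    """Return a hypothetical node that adds a debug entry under its own name."""
    def node(inputs: dict) -> dict:
        debug = dict(inputs.get("_debug", {}))  # inherit debug info from upstream
        debug[name] = {"ran": True}
        return {"_debug": debug}
    return node


def run_dag(collect_all: bool) -> dict:
    """Run nodes breadth-first and return the final output.

    collect_all=False: return only the last node's output (the suspected bug).
    collect_all=True: replace its _debug with the entries of every node that ran.
    """
    queue = deque([("Query", {})])
    merged_debug: Dict[str, dict] = {}
    output: dict = {}
    while queue:
        name, inputs = queue.popleft()
        output = make_node(name)(inputs)
        merged_debug.update(output["_debug"])  # remember every branch's entries
        for child in EDGES[name]:
            queue.append((child, output))
    if collect_all:
        output = {**output, "_debug": merged_debug}
    return output


print(sorted(run_dag(collect_all=False)["_debug"]))
# ['DPRRetriever', 'QAReader', 'Query', 'QueryClassifier']
print(sorted(run_dag(collect_all=True)["_debug"]))
# ['DPRRetriever', 'ESRetriever', 'QAReader', 'Query', 'QueryClassifier']
```

Without merging, the toy reproduces exactly the set of keys reported below, because `QAReader` runs last and only its inherited `_debug` is returned.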

```python
from haystack import Pipeline
from haystack.nodes import TransformersQueryClassifier

# dpr_retriever, es_retriever, reader and labels are set up elsewhere,
# e.g. as in test_multi_retriever_pipeline_with_asymmetric_qa_eval
pipeline = Pipeline()
pipeline.add_node(component=TransformersQueryClassifier(), name="QueryClassifier", inputs=["Query"])
pipeline.add_node(component=dpr_retriever, name="DPRRetriever", inputs=["QueryClassifier.output_1"])
pipeline.add_node(component=es_retriever, name="ESRetriever", inputs=["QueryClassifier.output_2"])
pipeline.add_node(component=reader, name="QAReader", inputs=["DPRRetriever"])

pipeline_output = pipeline.run(
    query=labels[0].query,
    params={"ESRetriever": {"top_k": 5}, "DPRRetriever": {"top_k": 5}},
    debug=True,
)
```

`pipeline_output["_debug"]` contains entries only for `Query`, `QueryClassifier`, `DPRRetriever` and `QAReader`, but not for `ESRetriever`.

Expected behavior

`pipeline_output["_debug"]` should also contain an entry for `ESRetriever`.
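A regression check for this expectation could compare the keys of `_debug` against every node name in the pipeline. Below is a minimal sketch in plain Python; `missing_debug_nodes` is a hypothetical helper, and the node list is typed out by hand rather than read from the pipeline object.

```python
from typing import Iterable, List, Mapping


def missing_debug_nodes(debug: Mapping[str, object], node_names: Iterable[str]) -> List[str]:
    """Return the node names that have no entry in a run's _debug dict."""
    return [name for name in node_names if name not in debug]


# Keys reported in this issue for pipeline_output["_debug"]; the values are elided.
reported_debug = {"Query": {}, "QueryClassifier": {}, "DPRRetriever": {}, "QAReader": {}}
nodes = ["Query", "QueryClassifier", "DPRRetriever", "ESRetriever", "QAReader"]

print(missing_debug_nodes(reported_debug, nodes))  # ['ESRetriever']
```

In a real test, the check would be `assert not missing_debug_nodes(pipeline_output["_debug"], nodes)`, which currently fails on `ESRetriever`.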

Additional context

This issue blocks proper use of `pipeline.eval_batch` as implemented in #2942.

To Reproduce

Run the test case `test_multi_retriever_pipeline_with_asymmetric_qa_eval`, but instead of running

```python
eval_result: EvaluationResult = pipeline.eval(labels=labels, params={"ESRetriever": {"top_k": 5}, "DPRRetriever": {"top_k": 5}})
```

try to run:

```python
pipeline_output = pipeline.run(query=labels[0].query, params={"ESRetriever": {"top_k": 5}, "DPRRetriever": {"top_k": 5}}, debug=True)
```

or, if you have the `eval_batch` implementation from #2942, try to run:

```python
eval_result: EvaluationResult = pipeline.eval_batch(labels=labels, params={"ESRetriever": {"top_k": 5}, "DPRRetriever": {"top_k": 5}})
```

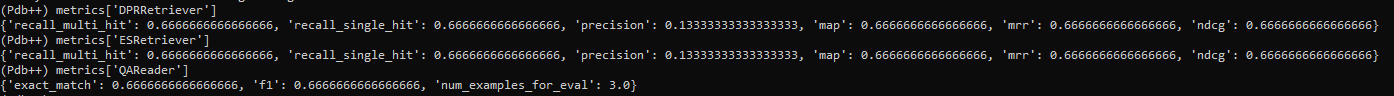

Hello there! I managed to include `ESRetriever` in `pipeline_output["_debug"]` with a fix, but the subsequent metric asserts in both `test_multi_retriever_pipeline_eval` and `test_multi_retriever_pipeline_with_asymmetric_qa_eval` failed due to unexpected metric values. These are the unexpected values for `test_multi_retriever_pipeline_with_asymmetric_qa_eval`:

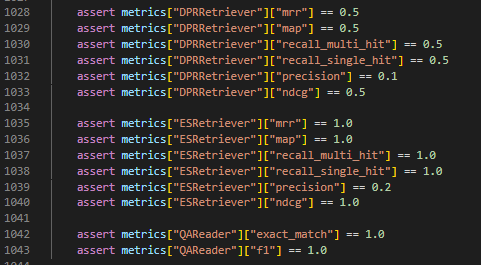

Those are the expected ones for the same test:

I will investigate further, but if this rings a bell, feel free to chime in.

I ran into a similar problem when using a join-type node in the final layer of the pipeline (for instance, `JoinDocuments` after a dual retriever). The only debug information available is that of the join node; the debug information from all prior nodes is lost.
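For the join case, a join step that unions the `_debug` entries of all its incoming streams before adding its own would keep the upstream information. Below is a minimal sketch in plain Python. `join_with_debug` and the input dict layout are hypothetical, and this is not how Haystack's `JoinDocuments` is implemented. It only concatenates documents; deduplication and scoring are left out.

```python
from typing import Dict, List


def join_with_debug(inputs: List[dict], join_name: str = "JoinDocuments") -> dict:
    """Concatenate documents from several streams and keep every upstream _debug entry.

    Each input is assumed to look like {"documents": [...], "_debug": {node_name: info}}.
    """
    documents: List[str] = []
    debug: Dict[str, dict] = {}
    for stream in inputs:
        documents.extend(stream.get("documents", []))
        debug.update(stream.get("_debug", {}))  # later streams win on key clashes
    debug[join_name] = {"num_inputs": len(inputs)}  # the join node's own entry
    return {"documents": documents, "_debug": debug}


dpr_out = {"documents": ["d1", "d2"], "_debug": {"Query": {}, "DPRRetriever": {}}}
es_out = {"documents": ["d3"], "_debug": {"Query": {}, "ESRetriever": {}}}
joined = join_with_debug([dpr_out, es_out])
print(sorted(joined["_debug"]))
# ['DPRRetriever', 'ESRetriever', 'JoinDocuments', 'Query']
```

Merging rather than replacing means shared ancestors such as `Query` appear once, while each branch's own nodes are kept.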

What's the expected time frame for this to be solved?

Thanks