dask-ml

dask-ml copied to clipboard

dask-ml copied to clipboard

Dask faster than Spark with a lot less iterations and better accuracy

Hello,

I'm benchmarking k-means clustering Dask versus Spark.

Right now these are only benchmarks on my laptop, but I've some interesting results and I'm looking for an explanation before I further benchmark this algorithm on a cluster.

I've logged the execution time, model cluster predictions, iterations. Both benchmarks used the same data with 1.6 million rows.

The questions are:

- Why does Spark need a lot more iterations than Dask?

- Why is clustering less accurate in Spark than in Dask?

I'm unclear why those are different, because they both use the same underlying algorithm and have more or less the same standard parameter.

Dask

KMeans(

n_clusters=8,

init='k-means||',

oversampling_factor=2,

max_iter=300,

tol=0.0001,

precompute_distances='auto',

random_state=None,

copy_x=True,

n_jobs=1,

algorithm='full',

init_max_iter=None,

)

Spark I've set maxIter to 300 and reset the seed for every benchmark.

KMeans(

featuresCol='features',

predictionCol='prediction',

k=2,

initMode='k-means||',

initSteps=2,

tol=0.0001,

maxIter=20,

seed=None,

distanceMeasure='euclidean',

)

Here you can see the duration of execution of each k-means clustering together with the iterations used to get a result. Spark is a lot slower than Spark on the overall calculation, but needs also a lot more iterations. Interestingly Spark is faster per iteration (the slope of a regression line) and faster on initialization (the y-intercept of the regression line). For the Spark benchmarks one can also make out a second line which I couldn't yet explain.

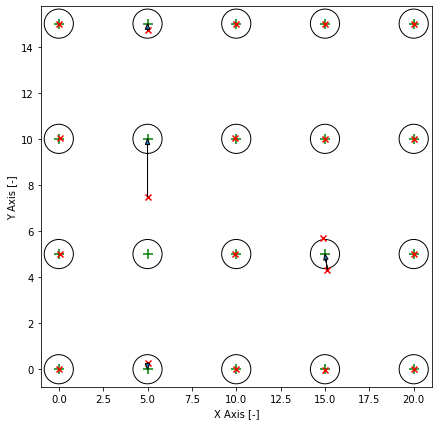

The training data is equally spaced grid. The circles around the cluster centers are the standard deviation. Clusters are overlapping and it is impossible to get a hundred percent accuracy. The red markers are the predicted cluster centers and the arrow shows their correspoding cluster center. In this example the clustering is not correct. One cluster was on the wrong spot and two predicted cluster centers share one cluster center. I can make these plots for all models.

The graph on the right makes everything much weirder. Apperently the Spark implementation is less accurate than the Dask implementation. Also you can see the distribution of the duration and iterations much butter (These are seaborn boxenplots).

I'm using Anaconda for Windows and PySpark 2.4.5 and Dask 2.5.2.

I filed this issue for Dask and Spark.

Best regards Julian

Thanks! This is an interesting benchmark. Is the full code available somewhere?

- Why does Spark need a lot more iterations than Dask?

- Why is clustering less accurate in Spark than in Dask?

Those both sound positive for Dask-ML. Are you wondering why Dask outperforms Spark, or is there an issue with Dask?

Thank you for your comment!

Unfortunately some of the code is proprietary for now, but I'll do my best to publish this and other benchmarks in the future. If you need any information or the benchmark output data, I can provide that.

Those both sound positive for Dask-ML.

Whats not in favor for dask is the slower time per iteration. May be it all comes down to hyperparameters and then Spark is faster.

I think this is also the main issue: I think it is important to align the default parameters across frameworks, so one can more or less exchange imports and use different frameworks interchangeably. For example using the default max iteration setting of Spark produces a faster training for obvious reasons. May be there is some other parameter in Dask which produces the lower iterations per training.

Aside from that I'm looking for answer why everything is behvaing like it is.

Whats not in favor for dask is the slower time per iteration.

I'd recommend looking at the Dask dashboard. That's how I develop with Dask and diagnose performance issues. Here's a some good documentation: https://distributed.dask.org/en/latest/diagnosing-performance.html

align the default parameters across frameworks,

I think discussion of default parameters should go in a separate issue. Let's focus on performance in this benchmarking issue.

@JulianWgs The most important input parameter for K-means algorithm is the number of clusters (i.e. k). The choice of k can quite often be motivated by domain knowledge over the dataset.

From your code snippet, it seems like {n_clusters=8} in Dask example whereas {k=2} in Spark example. Going back to your question:

- Why does Spark need a lot more iterations than Dask?

A smaller data partition in Dask makes each iteration faster. Maybe the original data also supports an 8-cluster outcome, so the convergence is faster. A 2 cluster result makes convergence harder in Spark.

- Why is clustering less accurate in Spark than in Dask?

It would make more sense if both of the algorithms utilize the same number of clusters, otherwise, we cannot arrive at your conclusion given the current evidence. Maybe you can align the parameters in Spark/ Dask for the next benchmarking experiment.

Thank you for your answer! Of course both Dask and Spark should have the same number of clusters as input parameters. As you pointed out this is not the case in my code snippet. I will investigate whether this was a copy paste error or if this is an actual bug in my benchmark code.

Thanks again for taking the time!

Best regards Julian