sdk

sdk copied to clipboard

sdk copied to clipboard

LinkedHashSet<int> is much slower than LinkedHashSet<String>

I noticed a Set<int> performing very slowly when working on a Flutter project. I had about 20k integers in a Set, and checking set.contains() took a very long time. But when I used toString() to convert all items to string, it performed 1000x faster.

It's apparently only reproducible with these particular integers (that I've included in the code), any randomly generated integers performed much faster in my testing (1200 ms vs 1 ms).

I've asked a question on Stack Overflow as well with more details and screenshots, a commenter pointed out that it's only LinkedHashSet<int> that caused the issue.

Dart SDK version: 2.15.1 (stable) Environment: macOS 12.1 (Apple M1) / macOS 12.1 (Intel i5) / Android 12 (Snapdragon)

With the values you have in the list it is possible we are running into hash collisions resulting in the slow down, Let me extract your list into a simple dart program and check it out.

Here's a self-contained way to see this behavior:

import 'dart:collection';

extension<X> on Set<X> {

String measureContains(X value) {

final watch = Stopwatch();

watch.start();

for (int y = 0; y < 100000; y++) contains(value);

watch.stop();

return 'Elapsed time, ${runtimeType}: ${watch.elapsedMilliseconds}';

}

}

void main() {

print({...list}.measureContains(123456789));

print({for (var e in list) e.toString()}.measureContains('123456789'));

print((HashSet<int>()..addAll(list)).measureContains(123456789));

print((HashSet<String>()..addAll(list.map((e) => e.toString())))

.measureContains('123456789'));

}

const list = [...]; // Use the data from https://pastebin.com/raw/4fm2hKQB

The output does indeed demonstrate a large difference in performance:

Elapsed time, _CompactLinkedHashSet<int>: 2162

Elapsed time, _CompactLinkedHashSet<String>: 2

Elapsed time, _HashSet<int>: 2

Elapsed time, _HashSet<String>: 1

[Edit: Now using both the set literals and HashSet.]

IMO the better question is not why LinkedHashSet<int> is much slower than LinkedHashSet<String> but why LinkedHashSet<int> is so much slower than HashSet<int>. The int vs. String difference could be attributed to different hash codes that perhaps resulted in a pathological number of collisions, but the difference between LinkedHashSet vs HashSet seems very surprising to me.

FYI I have made an answer here discussing the problem: https://stackoverflow.com/a/71600284/4619958

Changing next probe to be quadratic improves performance of the example listed above, please see https://dart-review.googlesource.com/c/sdk/+/239004

IMO the better question is not why

LinkedHashSet<int>is much slower thanLinkedHashSet<String>but whyLinkedHashSet<int>is so much slower thanHashSet<int>. Theintvs.Stringdifference could be attributed to different hash codes that perhaps resulted in a pathological number of collisions, but the difference betweenLinkedHashSetvsHashSetseems very surprising to me.

With regards to the different between LinkedHashSet and HashSet, the implementation behind these are different, in the case of LinkedHashSet if there is a collision, the next free entry is probed by going through the entries whereas HashSet creates a _HashSetEntry and if there is a collision it links these entries.

@a-siva I think there is a bigger problem that is not solved by quadratic probing.

Changing the sequence of elements like this:

final list = [

for (int i = 0; i < 14790; i++) (i + 1) * 0x10000000 + 123456789

];

results in terrible performance for both LinkedHashSet and HashSet.

Elapsed time, _CompactLinkedHashSet<int>: 3623

Elapsed time, _CompactLinkedHashSet<String>: 5

Elapsed time, _HashSet<int>: 3091

Elapsed time, _HashSet<String>: 2

Both _CompactLinkedHashSet and _HashSet have reasonable behaviour provided the hash values are nicely distributed with few collisions. alpha ≤ 0.5 for _CompactLinkedHashSet, and _HashSet has chained buckets.

What goes wrong is that not all of the bits of the hashCode are used. The original example has 14790 distinct values, but there are only 377 distinct values of the lower 16 bits. The sequence above is even worse - it has only one value of the lower 16 bits. Both hash table implementations use the low N bits of the hashCode.

What we really need better dispersion of the bits of the provided hashCode.

Good dispersion means that every bit in the output is somewhat sensitive to every bit in the input. Flip any bit in the input and about half of the output bits should change. Our int hashCode is the identity function, so is inherently poorly dispersed.

We could change int.hashCode to be better dispersed, but that does not help for objects that implement hashCode e.g. via a counter. It should be the responsibility of the data structure to make the most of the provided bits.

The trick is to do the dispersion efficiently, since computing the hash bits is on the critical path and Map indexing shows up in the profile on many apps. On x64 dispersion can be achieved efficiently using the 64x64->128 MUL instruction to multiply the given hash code by a magic dispersion constant and then adding the low and high words of the product (similar idea to Murmur hash). We might want compiler support since writing it without support will require a few dozen instructions and might impact a lot of applications.

If we are going to massage the given hashCode, we should also protect against hash table denial of service attacks by adding in a value that is initialized to a (different) random value in each isolate. Accessing this constant should be fast, e.g. via THR.

I think we should change the int.hashCode to be better dispersed.

If the performance of int.hashCode really matters, it's because people are using it in a hash table.

A small overhead on the int.hashCode getter is a very small price to pay. Most hashCode implementations are much more complicated and expensive, and people use them happily. If it makes the HashSet/HashMap work much better, it pays for itself, and it doesn't cost anything for any other class.

Having hashMap/hashSet/etc. post-optimize the hashCode is an option too. I believe the Java SDK HashMap used to do so, but they have since stopped.

Doing so helps every class with a bad hash code, but also costs extra for each class with an already good-enough hash code.

It's a fix that needs to be applied to every hash-table based data structure.

(Well, ideally, every class should have a good hashCode, and every data structure should work well with a bad hashCode, but getting the same amount of improvement at twice the price doesn't seem that great.)

We do have a problem with double, where == allows equality between integers and doubles, and therefore integral doubles need to have the same hash code as the integer. Changing int.hashCode might make double.hashCode more expensive too. Especially on the web.

@lrhn @rakudrama so what is the final conclusion here, one of you picking up the task of changing int.hashCode ?

@a-siva They don't have to happen at the same time. The dart2js versions of {Linked,}Hash{Map,Set} do not use thehashCode for small integers, so I would let someone go ahead with the VM version and I can fix the web version when I return from vacation.

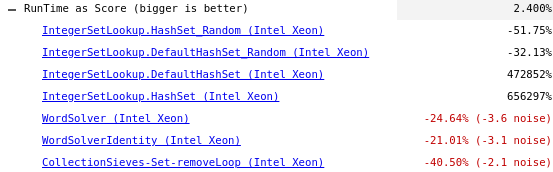

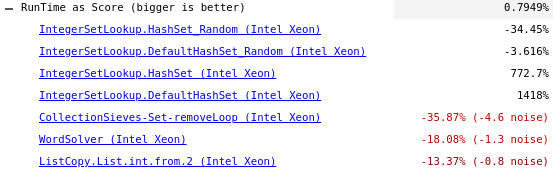

For reference and to check performance impact I put together https://dart-review.googlesource.com/c/sdk/+/258600 which adds 32-bit MurmurHash implementation on ia86/x64 platforms. Running benchmarks shows huge improvements on newly added IntegerSetLookup benchmark built from (bad-behaved) data set referenced above, while regressing at the same time by 30-50% on well-behaved(random) integer sets, 20-40% on WordSolver, CollectionSieves benchmarks that also use sets of (well-behaved) integers(see below).

I believe this is more or less in line with expectations of having better, better distributed hashCode function, but wanted to confirm (@lrhn @rakudrama) (before I extend the assembly implementation of hashCode to arm/arm64).

dart-aot on x64

dart-jit on x64

dart-jit on ia32

I think this slowdown is a higher than I expected, especially on 64-bit.

Do we need int.hashCode to be the same on different architectures?

Murmur hash takes the idea of using the multiplier (and inherent adder carry-ripple) to a point where the dispersion is excellent. I think we just need 'good'.

On x64 something like this would be good enough and quite a bit faster:

movq RAX, operand

movq RDX, 0x1b873593cc9e2d51 // or similar dispersing multiplicand

imulq RDX // single operand imul produces double-width product

addq RAX, RDX // mix in high bits into low bits

andl RAX, 0xffffffff // optional truncation

@aam Consider trying out simpler and faster hash functions, such as Jenkins hash. Multiplication is quite slow compared to shift, addition and bitwise operations.

Maybe @mraleph @mkustermann have better ideas.

@aam Consider trying out simpler and faster hash functions, such as Jenkins hash. Multiplication is quite slow compared to shift, addition and bitwise operations.

x86 imul is typically 3-5 cycle latency and pipelined to enable one result per cycle. While shift/add/bitops can be issued at a rate of several per cycle, the effective issue rate declines when they are data-dependent. Data-dependent simple ops will be worse than imul if you have more than a handful.

The reason I ask 'Do we need int.hashCode to be the same on different architectures?' is that the best answer depends on the architecture. It will be hard to beat the 5 instruction sequence above on x64 that makes use of the 64x64->128 bit multiplier to do the same dispersal as a few dozen simple ops. The story on another architecture might be very different.

Maybe @mraleph @mkustermann have better ideas.

If we are going to massage the given hashCode, we should also protect against hash table denial of service attacks by adding in a value that is initialized to a (different) random value in each isolate.

At the bare minimum we require that const maps can be shared across isolates, thereby forcing all isolates to use same hashing function of the keys. We may allow sharing deeply immutable maps in future - so same requirement there.

We may want to have the option to pre-calculate const maps/sets at AOT compilation time (to avoid cost of re-hashing at AOT runtime). For AppJIT the training run may create the const maps/sets with computed index tables, forcing the later run of AppJIT snapshot to use the same.

All of that requires us to use deterministic hashes for integers/...

For reference and to check performance impact I put together https://dart-review.googlesource.com/c/sdk/+/258600 which adds 32-bit MurmurHash implementation on ia86/x64 platforms.

Consider trying out simpler and faster hash functions, such as Jenkins hash. Multiplication is quite slow compared to shift, addition and bitwise operations.

A good reference point may be the linux kernel code - it has high standards for performance and security, see it's implementation here hash_64 and a relevant lkml discussion from linus himself.

The reason I ask 'Do we need int.hashCode to be the same on different architectures?'

There's value in having the same implementation across architectures: We often measure performance on x64 and assume it will translate to other architectures - using different implementations may lead to significant differences in performance due to more collisions. At least we should strive to be consistent across 64-bit archs and 32-bit archs.

I think we should change the int.hashCode to be better dispersed.

Interestingly enough, in C++ this

#include <unordered_map>

#include <cstdint>

int64_t hashit(std::unordered_map<int64_t, int64_t> map, int64_t a) {

return map.hash_function()(a);

}

compiles to

mov rax, rsi

ret

So the burden in C++ lies on the map implementation to scramble the bits.

For reference and to check performance impact I put together https://dart-review.googlesource.com/c/sdk/+/258600 which adds 32-bit MurmurHash implementation on ia86/x64 platforms.

As a side-note: Assembly intrinsics will - by-default - prevent inlining of a functions IIRC: So it will turn the base price for int.hashCode into box this and call (though may not matter for the benchmarks in question here)

Do we need

int.hashCodeto be the same on different architectures?

I think we do due to vm snapshots (with constants, hash values, but without actual code) potentially shared across platforms. (cc @rmacnak-google )

Tried various constants and hashing sequences, the following seems to produce best results on x64 on existing benchmarks(inlined via graph intrinsics)

__ movq(RDX, compiler::Immediate(0x2d51));

__ imulq(RDX);

__ xorq(RAX, RDX);

__ movq(RDX, RAX);

__ shrq(RDX, compiler::Immediate(32));

__ xorq(RAX, RDX);

__ andq(RAX, compiler::Immediate(0x3fffffff));

CollectionSieves-Set-removeLoop severely penalizes hash collisions for small integers as it continuously deletes elements from initially populated set.