kafka_exporter

kafka_exporter copied to clipboard

kafka_exporter copied to clipboard

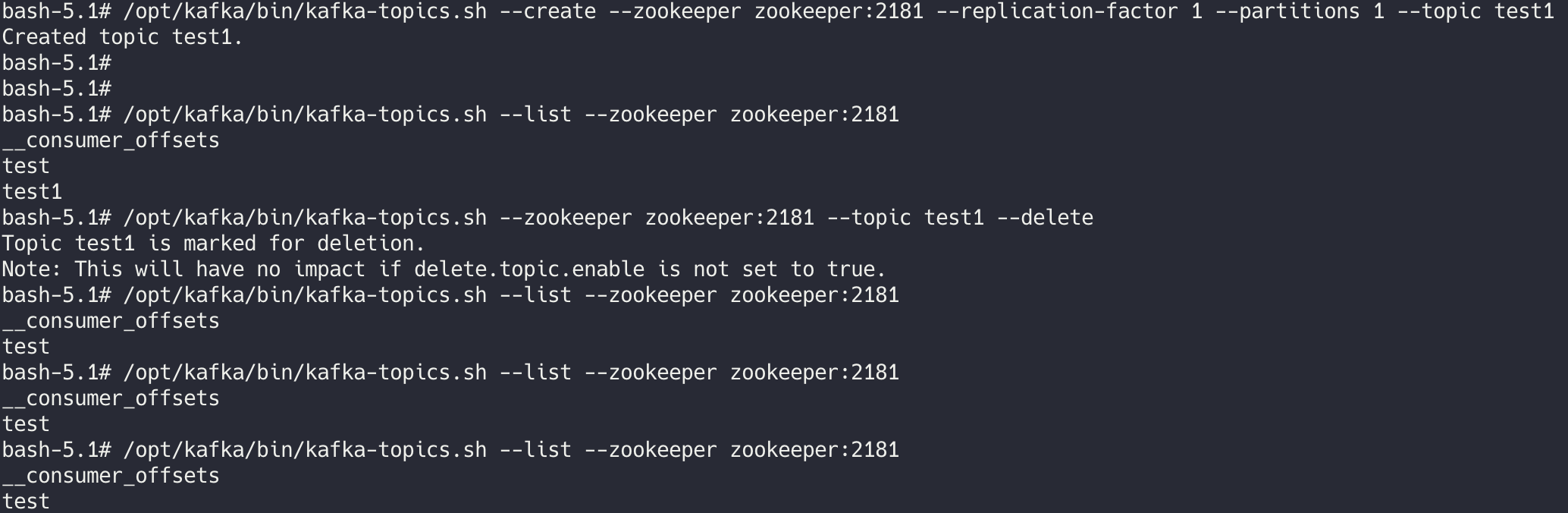

Auto creating kafka topics after remove - v1.3.0 and v1.3.1.

Hi, I noticed that after remove topic is recreating. Checked on v1.3.0 and v1.3.1. v1.2.0 doesn't have this problem. Temporary solution is to stop kafka-exporter pod and then remove given topic. kafka version: 2.7.1

message [KafkaApi-0] Auto creation of topic ams-azure.x-test with 1 partitions and replication factor 3 is successful

No logs related to topic on kafka exporter side

I cannot reproduce this in v1.3.2. Please tell me how to reproduce, thanks.

Hi @danielqsj, I've run into this problem and was able to reproduce it like this:

First, I'm showing that no other topics exist:

$ ./bin/kafka-topics.sh --bootstrap-server localhost:9092 --list

$

Then I'm creating a single topic on the server:

$ ./bin/kafka-topics.sh --bootstrap-server localhost:9092 --create --topic my-test-topic --replication-factor=1 --partitions=2

Created topic my-test-topic.

$ ./bin/kafka-topics.sh --bootstrap-server localhost:9092 --describe --topic my-test-topic

Topic: my-test-topic PartitionCount: 2 ReplicationFactor: 1 Configs:

Topic: my-test-topic Partition: 0 Leader: 1 Replicas: 1 Isr: 1

Topic: my-test-topic Partition: 1 Leader: 1 Replicas: 1 Isr: 1

$

As you can see, this topic has been created with 2 partitions.

Then I'm starting kafka_exporter:

$ ./kafka_exporter --kafka.server=localhost:9092 --verbosity=1 --offset.show-all --concurrent.enable

I0202 14:56:25.701859 1293261 kafka_exporter.go:778] Starting kafka_exporter (version=, branch=, revision=)

I0202 14:56:25.701970 1293261 kafka_exporter.go:779] Build context (go=go1.16.3, user=, date=)

I0202 14:56:25.705241 1293261 kafka_exporter.go:938] Listening on HTTP :9308

...

In another terminal, I'm starting a tight loop of curl executions that will frequently poll /metrics:

$ while true ; do curl -s http://localhost:9308/metrics > /dev/null ; sleep 1 ; done

I'm now deleting the topic I created earlier, wait a few seconds, then describe the topic:

$ ./bin/kafka-topics.sh --bootstrap-server localhost:9092 --delete --topic=my-test-topic ; sleep 3 ; ./bin/kafka-topics.sh --bootstrap-server localhost:9092 --describe --topic my-test-topic

Topic: my-test-topic PartitionCount: 1 ReplicationFactor: 1 Configs:

Topic: my-test-topic Partition: 0 Leader: 1 Replicas: 1 Isr: 1

$

What you can see here is that suddenly, the topic has only a single partition. We use an internal tool to more closely monitor kafka which actually shows that the topic gets deleted and then recreated, but I haven't been able to show this with the normal kafka-topics.sh which seems to be a bit slow.

When looking at the kafka_exporter logs, we also see that from the exporter's point of view, the topic didn't exist at some point:

I0202 15:19:06.807099 1323758 kafka_exporter.go:645] Fetching consumer group metrics

I0202 15:19:07.824354 1323758 kafka_exporter.go:645] Fetching consumer group metrics

I0202 15:19:08.837300 1323758 kafka_exporter.go:645] Fetching consumer group metrics

E0202 15:19:10.138413 1323758 kafka_exporter.go:408] Cannot get leader of topic my-test-topic partition 1: kafka server: Request was for a topic or partition that does not exist on this broker.

E0202 15:19:10.142092 1323758 kafka_exporter.go:417] Cannot get current offset of topic my-test-topic partition 1: kafka server: Request was for a topic or partition that does not exist on this broker.

E0202 15:19:10.146084 1323758 kafka_exporter.go:429] Cannot get oldest offset of topic my-test-topic partition 1: kafka server: Request was for a topic or partition that does not exist on this broker.

E0202 15:19:10.147423 1323758 kafka_exporter.go:438] Cannot get replicas of topic my-test-topic partition 1: kafka server: Request was for a topic or partition that does not exist on this broker.

E0202 15:19:10.148769 1323758 kafka_exporter.go:447] Cannot get in-sync replicas of topic my-test-topic partition 1: kafka server: Request was for a topic or partition that does not exist on this broker.

I0202 15:19:10.148879 1323758 kafka_exporter.go:645] Fetching consumer group metrics

I0202 15:19:11.192781 1323758 kafka_exporter.go:645] Fetching consumer group metrics

I0202 15:19:12.233610 1323758 kafka_exporter.go:645] Fetching consumer group metrics

I0202 15:19:13.260190 1323758 kafka_exporter.go:645] Fetching consumer group metrics

In the kafka logs, this looks like this (the timestamps in the kafka log are UTC, while in the kafka_exporter log they're CET):

2-02-02 14:19:09,423] INFO [ReplicaFetcherManager on broker 1] Removed fetcher for partitions my-test-topic-0,my-test-topic-1 (kafka.server.ReplicaFetcherManager)

[2022-02-02 14:19:09,423] INFO [ReplicaFetcherManager on broker 1] Removed fetcher for partitions (kafka.server.ReplicaFetcherManager)

[2022-02-02 14:19:09,424] INFO [ReplicaFetcherManager on broker 1] Removed fetcher for partitions my-test-topic-1 (kafka.server.ReplicaFetcherManager)

[2022-02-02 14:19:09,430] INFO Log for partition my-test-topic-1 is renamed to /kafka/kafka-logs-dff5fd8d7b17/my-test-topic-1.12bc58943d004e44b8a41322a20693c2-delete and is scheduled for deletion (kafka.log.LogManager)

[2022-02-02 14:19:09,431] INFO [ReplicaFetcherManager on broker 1] Removed fetcher for partitions my-test-topic-0 (kafka.server.ReplicaFetcherManager)

[2022-02-02 14:19:09,435] INFO Log for partition my-test-topic-0 is renamed to /kafka/kafka-logs-dff5fd8d7b17/my-test-topic-0.3a7aced711714e2fa7d4a1716bd2568a-delete and is scheduled for deletion (kafka.log.LogManager)

[2022-02-02 14:19:09,881] INFO Topic creation {"version":1,"partitions":{"0":[1]}} (kafka.admin.AdminUtils$)

[2022-02-02 14:19:09,882] INFO [KafkaApi-1] Auto creation of topic my-test-topic with 1 partitions and replication factor 1 is successful (kafka.server.KafkaApis)

[2022-02-02 14:19:09,891] INFO [ReplicaFetcherManager on broker 1] Removed fetcher for partitions my-test-topic-0 (kafka.server.ReplicaFetcherManager)

[2022-02-02 14:19:09,892] INFO Loading producer state from offset 0 for partition my-test-topic-0 with message format version 2 (kafka.log.Log)

[2022-02-02 14:19:09,892] INFO Completed load of log my-test-topic-0 with 1 log segments, log start offset 0 and log end offset 0 in 1 ms (kafka.log.Log)

[2022-02-02 14:19:09,893] INFO Created log for partition [my-test-topic,0] in /kafka/kafka-logs-dff5fd8d7b17 with properties {compression.type -> producer, message.format.version -> 1.0-IV0, file.delete.delay.ms -> 60000, max.message.bytes -> 104857600, min.compaction.lag.ms -> 0, message.timestamp.type -> CreateTime, min.insync.replicas -> 1, segment.jitter.ms -> 0, preallocate -> false, min.cleanable.dirty.ratio -> 0.5, index.interval.bytes -> 4096, unclean.leader.election.enable -> false, retention.bytes -> -1, delete.retention.ms -> 86400000, cleanup.policy -> [delete], flush.ms -> 9223372036854775807, segment.ms -> 604800000, segment.bytes -> 1073741824, retention.ms -> 604800000, message.timestamp.difference.max.ms -> 9223372036854775807, segment.index.bytes -> 10485760, flush.messages -> 9223372036854775807}. (kafka.log.LogManager)

As you can see, kafka deleted partitions 0 and 1 for my-test-topic, and then shortly afterwards, auto creation for my-test-topic is triggered, with 1 partition.

Given this behaviour, it very much looks like kafka_exporter's metric collection seems to trigger topic auto creation.

(N.B.: reproducing the problem like this doesn't always 100% work reliably. If you can't get it to run the first time, try re-running the delete topic + wait + describe topic command a few times)

Hi. Are there any updates? We also face this issue with v1.4.2 which comes together with the strimzi-kafka-operator v.0.28. Is it maybe possible to prevent this using the consumer config allow.auto.create.topics?

Best regards David

@davidpiendl turning off topic auto-creation is the only workaround we were able to find. Lots of experimentation and a review or the kafka_exporter source code led us to the conclusion that it is merely triggering a deeper bug in Kafka itself (or maybe that's exactly Kafka's intended behaviour, who knows).

@akrennmair thanks for the quick response. I saw that the used Samara client allows to configure AllowAutoCreateTopics for consumers and metadata refreshs. See https://github.com/Shopify/sarama/blob/main/config.go#L158 . It defaults to true, so consumers and metadata refreshes cause recreation.

I can not find the setting of this property to false in kafka_exporter, which should definitly be done. Did I overlook something?

@davidpiendl sorry for not being clearer: we disabled topic auto-creation on the Kafka server side. That way we managed to contain the issue. It required a review of our whole codebase whether anything implicitly depends on topic auto-creation, and after fixing these, we were safe to switch off the flag on the servers.

@akrennmair @davidpiendl Thanks for reporting this issue.

I add a new option kafka.allow-auto-topic-creation to control it in https://github.com/danielqsj/kafka_exporter/commit/5cb32d4bfeb48636ef1b5d5ebbb5856c6bc776d4, you can set --no-kafka.allow-auto-topic-creation to have a try.

The image is building in https://github.com/danielqsj/kafka_exporter/actions/runs/2912169325, after this workflow finished, you can have try the danielqsj/kafka-exporter:latest

Waiting for your response :)

@danielqsj Maybe a stupid question ... but since the new option defaults the true -> what would be the cases when it is useful for the Kafka Exporter to auto.create the topics? I'm not entirely sure I understand when would the auto-creation be useful here. Is it just for backwards compatibility? Or do you see some value in it?

@danielqsj Maybe a stupid question ... but since the new option defaults the

true-> what would be the cases when it is useful for the Kafka Exporter to auto.create the topics? I'm not entirely sure I understand when would the auto-creation be useful here. Is it just for backwards compatibility? Or do you see some value in it?

@scholzj In the previous kafka_exporter, we do not set this option, so the default value true is used in sarama. I wanna to keep the forward compatibility, and set the default value as true here.

But I'm open to change the default value.

After much thought, I agree that the default value of false seems better.

v1.6.0 has been released, please try it.

Gret, thanks for the new release 👍

@danielqsj it seems that this has fixed our problem. I can reproduce the error with v1.5.0, but not with 1.6.0 anymore.

@davidpiendl cool, thanks for reporting issue again. :)