dcache

dcache copied to clipboard

dcache copied to clipboard

Logging for rejected connections on Webdav door

Hello,

We are currently doing some performance tests with LSST use case on dCache and we are facing some issues when 5000+ jobs are accessing dCache for read/write access.

First of all, I think the Webdav logs for this kind of situation is really difficult to understand [1]. I see some rejected exceptions, stacktraces so I can imagine it concerns rejected connections because of full Webdav queues.

I'm trying to understand this STARTED,1<=2048<=2048,i=0,r=-1,q=4096

According to our webdav conf [2], the first numbers correspond to the max and active threads and the last to the queue length?

It can be helpful if we can see those numbers in the cell info from the admindoor like what we have for HTTP-TPC transfers :

--- remote-transfer-handler (HTTP-TPC transfers) ---

Current transfers:

Finaliser:

Max threads: 1000

Current threads: 0

Queued tasks: 0

What's the reasonable tuning I can set on the Webdav door conf to avoid this situation? I already tried to increase the limits default values but even with this current configuration [2], the doors start to reject connections and became unresponsive. Or shall I add more webdav doors to support this kind of workload? LSST goals are up to 10k jobs on dCache. The pools are already high on CPU load (because of HTTPS I think), I limited the max active webdav requests to 100 / pool.

[1]

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: 08 Mar 2023 10:27:49 (webdav-ccdcacli449) []

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: java.util.concurrent.RejectedExecutionException: CEP:SocketChannelEndPoint@e5843eb{l=/134.158.227.2:2880,r=/134.158.168.29:42170,OSHUT,fill=FI,flush=-,to=1/300000}{io=1/0,kio=1,kro=1}->SslConnection@2e5f82fd{NOT_HANDSHAKING,eio=-1/-1,di=-1,fill=INTERESTED,flush=IDLE}~>DecryptedEndPoint@2fadf7c9{l=/134.158.227.2:2880,r=/134.158.168.29:42170,OSHUT,fill=FI,flush=-,to=1/300000}=>HttpConnection@6151f835[p=HttpParser{s=CLOSE,0 of 0},g=HttpGenerator@2e780212{s=START}]=>HttpChannelOverHttp@7ce2254a{s=HttpChannelState@3cb196a0{s=IDLE rs=BLOCKING os=OPEN is=IDLE awp=false se=false i=true al=0},r=1,c=false/false,a=IDLE,uri=null,age=0}:runFillable:BLOCKING

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.QueuedThreadPool.execute(QueuedThreadPool.java:716)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.execute(EatWhatYouKill.java:375)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:310)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:173)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:131)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:409)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:883)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.QueuedThreadPool$Runner.run(QueuedThreadPool.java:1034)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at java.base/java.lang.Thread.run(Thread.java:829)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: 08 Mar 2023 10:27:49 (webdav-ccdcacli449) [] QueuedThreadPool[jetty]@2cc144f8{STARTED,1<=2048<=2048,i=0,r=-1,q=4096}[ReservedThreadExecutor@4f8a141{reserved=0/20,pending=20}] rejected org.eclipse.jetty.io.ManagedSelector$DestroyEndPoint@52cf4153

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: 08 Mar 2023 10:27:49 (webdav-ccdcacli449) [] QueuedThreadPool[jetty]@2cc144f8{STARTED,1<=2048<=2048,i=0,r=-1,q=4096}[ReservedThreadExecutor@4f8a141{reserved=0/20,pending=20}] rejected CEP:SocketChannelEndPoint@758051c9{l=/134.158.227.2:2880,r=/134.158.170.160:38618,OPEN,fill=FI,flush=-,to=3/300000}{io=1/0,kio=1,kro=1}->SslConnection@3c49b18a{NOT_HANDSHAKING,eio=-1/-1,di=-1,fill=INTERESTED,flush=IDLE}~>DecryptedEndPoint@516c1b3c{l=/134.158.227.2:2880,r=/134.158.170.160:38618,OPEN,fill=FI,flush=-,to=3/300000}=>HttpConnection@61588327[p=HttpParser{s=START,0 of -1},g=HttpGenerator@49b59386{s=START}]=>HttpChannelOverHttp@3881bedb{s=HttpChannelState@905e70c{s=IDLE rs=BLOCKING os=OPEN is=IDLE awp=false se=false i=true al=0},r=1,c=false/false,a=IDLE,uri=null,age=0}:runFillable:BLOCKING

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: 08 Mar 2023 10:27:49 (webdav-ccdcacli449) []

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: java.util.concurrent.RejectedExecutionException: CEP:SocketChannelEndPoint@758051c9{l=/134.158.227.2:2880,r=/134.158.170.160:38618,OPEN,fill=FI,flush=-,to=4/300000}{io=1/0,kio=1,kro=1}->SslConnection@3c49b18a{NOT_HANDSHAKING,eio=-1/-1,di=-1,fill=INTERESTED,flush=IDLE}~>DecryptedEndPoint@516c1b3c{l=/134.158.227.2:2880,r=/134.158.170.160:38618,OPEN,fill=FI,flush=-,to=4/300000}=>HttpConnection@61588327[p=HttpParser{s=START,0 of -1},g=HttpGenerator@49b59386{s=START}]=>HttpChannelOverHttp@3881bedb{s=HttpChannelState@905e70c{s=IDLE rs=BLOCKING os=OPEN is=IDLE awp=false se=false i=true al=0},r=1,c=false/false,a=IDLE,uri=null,age=0}:runFillable:BLOCKING

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.QueuedThreadPool.execute(QueuedThreadPool.java:716)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.execute(EatWhatYouKill.java:375)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:310)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:173)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:131)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:409)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:883)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at org.eclipse.jetty.util.thread.QueuedThreadPool$Runner.run(QueuedThreadPool.java:1034)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: at java.base/java.lang.Thread.run(Thread.java:829)

Mar 08 10:27:49 ccdcacli449 dcache@webdav-ccdcacli449Domain[99658]: 08 Mar 2023 10:27:49 (webdav-ccdcacli449) [] QueuedThreadPool[jetty]@2cc144f8{STARTED,1<=2048<=2048,i=0,r=-1,q=4096}[ReservedThreadExecutor@4f8a141{reserved=1/20,pending=20}] rejected org.eclipse.jetty.io.ManagedSelector$DestroyEndPoint@2ce10cb0

The webdav doors started to reject connections quickly even after increasing the limits of the door

[2]

webdav.authn.protocol=https

webdav.redirect.allow-https=true

webdav.limits.queue-length=4096

webdav.limits.threads.max=2048

webdav.limits.backlog=8192

webdav.limits.acceptors=4

webdav.mover.timeout = 3600000

webdav.service.pool.timeout = 20000

The workload context:

5000 jobs (mono CPU) Each job sends Webdav requests to the door (PROPFIND, DELETE, etc.) and keep the connection opened. For GET et PUT requests, the connection is closed when the upload/download is done. The average transfer size is around 40MB.

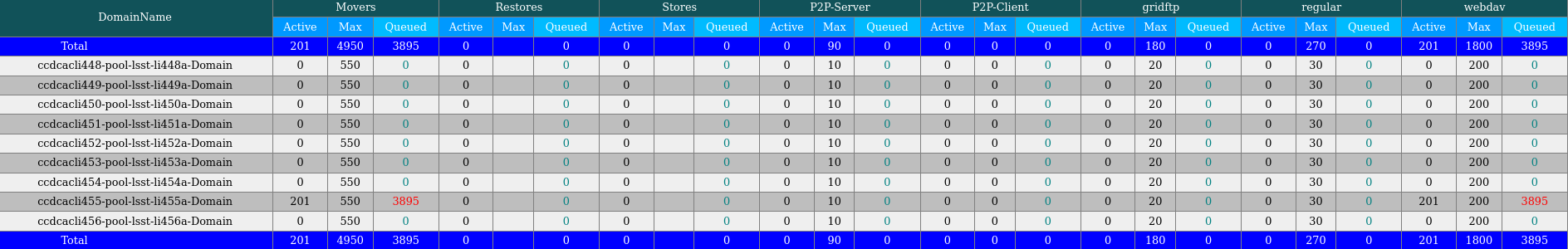

I also have to check why pool-lsst-li455a has so many queue connections...

I also have to check why pool-lsst-li455a has so many queue connections...

Any help will be appreciated :)

Cheers, Adrien

Hi Adrien,

It looks like the door simply cannot keep up with the number of clients as-is.

There are a few reasons why this might be happening:

- the door is proxying many transfers (for whatever reason),

- the door is accepting transfer requests, but blocking because pool-manager cannot find a suitable pool or the pool is queuing the request.

- the door is receiving requests (transfer or namespace) and is blocking because pnfs-manager is slow.

There is, perhaps, another effect where the client may be disconnecting (in effect, aborting the request) if the door takes too long to reply. The door may not notice that the client has disconnected, so will keep processing the request. If the client reconnects (perhaps retrying the request) then there could be an avalanche-like effect.

It would be interesting to learn the concurrency of requests from the client. This might indicate which request is causing the problem. This information is available from the access log file. I wrote a script to generate concurrency information for HTTP-TPC requests. This could be extended to support arbitrary HTTP requests.

As practical steps, you could add more pools if you see the pools struggling, otherwise try adding more WebDAV doors.

However, I think this issue really needs someone to spend some time investigating what is going on, which is (unfortunately) not something I can do right now.

HTH, Paul.