Example Colab notebook

I've been trying to make use of this repo for a couple of days now, but I can't get it to work. Here is what I've tried. Can you please provide a simple Colab notebook example that actually works, or point out why my attempt isn't working?

Edit: The error I currently get in the notebook is:

  File "test.py", line 186, in main
    checkpoint = load_checkpoint(model, args.checkpoint, map_location='cpu')
  File "/usr/local/lib/python3.7/dist-packages/mmcv/runner/checkpoint.py", line 581, in load_checkpoint
    checkpoint = _load_checkpoint(filename, map_location, logger)
  File "/usr/local/lib/python3.7/dist-packages/mmcv/runner/checkpoint.py", line 520, in _load_checkpoint
    return CheckpointLoader.load_checkpoint(filename, map_location, logger)
  File "/usr/local/lib/python3.7/dist-packages/mmcv/runner/checkpoint.py", line 285, in load_checkpoint
    return checkpoint_loader(filename, map_location)
  File "/usr/local/lib/python3.7/dist-packages/mmcv/runner/checkpoint.py", line 302, in load_from_local
    checkpoint = torch.load(filename, map_location=map_location)
  File "/usr/local/lib/python3.7/dist-packages/torch/serialization.py", line 713, in load
    return _legacy_load(opened_file, map_location, pickle_module, **pickle_load_args)
  File "/usr/local/lib/python3.7/dist-packages/torch/serialization.py", line 920, in _legacy_load
    magic_number = pickle_module.load(f, **pickle_load_args)
_pickle.UnpicklingError: invalid load key, '\x0a'.

Hello, thanks for your attention. I read the Colab notebook you wrote. The bug is that it can't find the pre-trained model. You need to download the pre-trained model first and put it in the pretrained/ folder.
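A minimal sketch of that download step in Python, assuming you copy the real checkpoint URL from the repository README (the `fetch_pretrained` helper and any URL you pass to it are hypothetical, not part of ViT-Adapter). It streams into a temporary `.part` file first, so an interrupted download never leaves a truncated file under the final name for `torch.load` to choke on later.

```python
import shutil
import urllib.request
from pathlib import Path


def fetch_pretrained(url: str, dest_dir: str = "pretrained") -> Path:
    """Download `url` into `dest_dir` unless a non-empty file of that name exists.

    Hypothetical helper: the URL must come from the repository README.
    """
    target_dir = Path(dest_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    # Use the last path component of the URL as the local file name.
    name = url.rstrip("/").rsplit("/", 1)[-1]
    target = target_dir / name
    if target.exists() and target.stat().st_size > 0:
        return target  # already downloaded, skip the network round trip
    tmp = target.with_name(target.name + ".part")
    # Write to a temporary file first so a failed download never leaves
    # a truncated file under the final name.
    with urllib.request.urlopen(url) as response, open(tmp, "wb") as out:
        shutil.copyfileobj(response, out)
    tmp.replace(target)
    return target
```

In the notebook you would call it once per checkpoint, e.g. `fetch_pretrained("<checkpoint URL from the README>")`, before running `test.py`.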

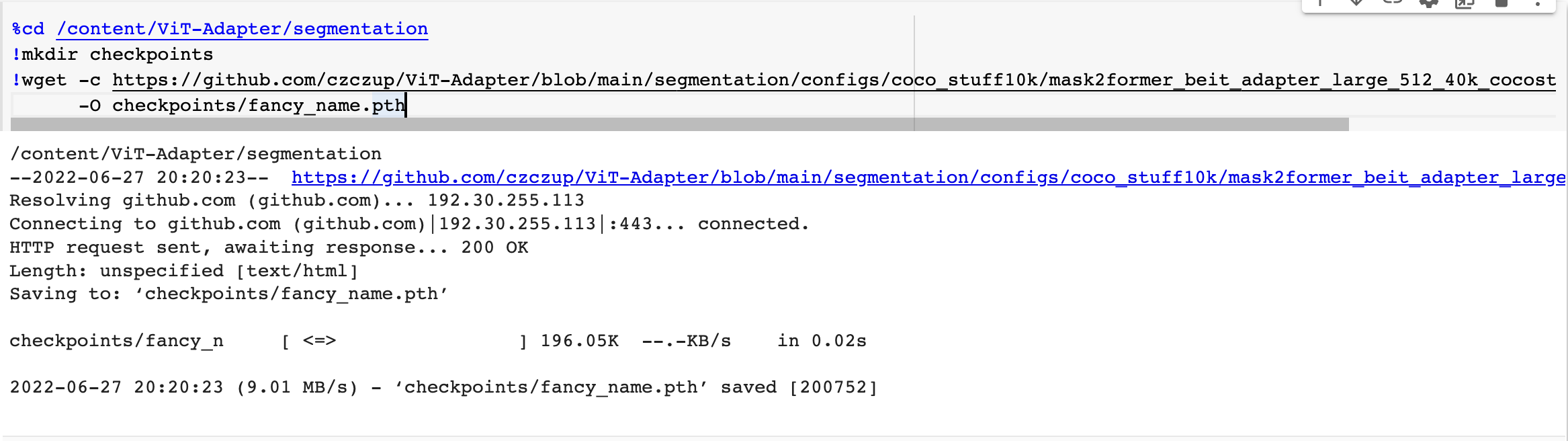

Also, there is a bug in this code: it downloads the config file and renames it to .pth. You should instead download the model checkpoint (a .zip file) from its link and unzip it.
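A renamed text file is consistent with the error in the first post: `torch.load` falls back to unpickling the file, and a file whose first byte is a newline fails with exactly `invalid load key, '\x0a'`. The sketch below (a hypothetical `sniff_checkpoint` helper, standard library only) checks a file before it reaches `test.py`. It assumes the two formats `torch.save` is known to write: a zip archive containing a `data.pkl` entry (the default since PyTorch 1.6), and the older pickle format, whose stream starts with the pickle PROTO opcode `0x80`.

```python
import zipfile
from pathlib import Path


def sniff_checkpoint(path: str) -> str:
    """Guess what kind of file `path` is before handing it to torch.load.

    Returns one of:
      "torch_zip"        - zip-based checkpoint written by torch.save
      "zip_archive"      - an ordinary .zip that must be extracted first
      "legacy_pickle"    - an older pickle-based checkpoint
      "not_a_checkpoint" - anything else, e.g. a text config or an HTML page
    """
    p = Path(path)
    if zipfile.is_zipfile(p):
        with zipfile.ZipFile(p) as zf:
            names = zf.namelist()
        # torch.save's zip format stores the pickled object as <root>/data.pkl.
        if any(n == "data.pkl" or n.endswith("/data.pkl") for n in names):
            return "torch_zip"
        return "zip_archive"
    with open(p, "rb") as f:
        head = f.read(1)
    # Pickle protocol 2+ streams begin with the PROTO opcode 0x80.
    if head == b"\x80":
        return "legacy_pickle"
    return "not_a_checkpoint"
```

If it reports `zip_archive`, extract it first (for example with `zipfile.ZipFile(path).extractall(some_dir)`) and point `test.py` at the extracted `.pth`; `not_a_checkpoint` means the download fetched the wrong file.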

Hey @dudifrid @czczup

I tried running the notebook with the updated checkpoint link, but now I'm facing a different issue. Link to notebook

Here is the error stack trace:

/content/ViT-Adapter/segmentation

Traceback (most recent call last):
  File "test.py", line 11, in <module>
    import mmseg_custom # noqa: F401,F403
  File "/content/ViT-Adapter/segmentation/mmseg_custom/__init__.py", line 3, in <module>
    from .models import * # noqa: F401,F403
  File "/content/ViT-Adapter/segmentation/mmseg_custom/models/__init__.py", line 2, in <module>
    from .backbones import * # noqa: F401,F403
  File "/content/ViT-Adapter/segmentation/mmseg_custom/models/backbones/__init__.py", line 2, in <module>
    from .beit_adapter import BEiTAdapter
  File "/content/ViT-Adapter/segmentation/mmseg_custom/models/backbones/beit_adapter.py", line 9, in <module>
    from mmseg.models.builder import BACKBONES
  File "/usr/local/lib/python3.8/dist-packages/mmseg/models/__init__.py", line 2, in <module>
    from .backbones import * # noqa: F401,F403
  File "/usr/local/lib/python3.8/dist-packages/mmseg/models/backbones/__init__.py", line 6, in <module>
    from .fast_scnn import FastSCNN
  File "/usr/local/lib/python3.8/dist-packages/mmseg/models/backbones/fast_scnn.py", line 7, in <module>
    from mmseg.models.decode_heads.psp_head import PPM
  File "/usr/local/lib/python3.8/dist-packages/mmseg/models/decode_heads/__init__.py", line 2, in <module>
    from .ann_head import ANNHead
  File "/usr/local/lib/python3.8/dist-packages/mmseg/models/decode_heads/ann_head.py", line 8, in <module>
    from .decode_head import BaseDecodeHead
  File "/usr/local/lib/python3.8/dist-packages/mmseg/models/decode_heads/decode_head.py", line 11, in <module>
    from ..losses import accuracy
  File "/usr/local/lib/python3.8/dist-packages/mmseg/models/losses/__init__.py", line 6, in <module>
    from .focal_loss import FocalLoss
  File "/usr/local/lib/python3.8/dist-packages/mmseg/models/losses/focal_loss.py", line 6, in <module>
    from mmcv.ops import sigmoid_focal_loss as _sigmoid_focal_loss
  File "/usr/local/lib/python3.8/dist-packages/mmcv/ops/__init__.py", line 2, in <module>
    from .active_rotated_filter import active_rotated_filter
  File "/usr/local/lib/python3.8/dist-packages/mmcv/ops/active_rotated_filter.py", line 8, in <module>
    ext_module = ext_loader.load_ext(
  File "/usr/local/lib/python3.8/dist-packages/mmcv/utils/ext_loader.py", line 13, in load_ext
    ext = importlib.import_module('mmcv.' + name)
  File "/usr/lib/python3.8/importlib/__init__.py", line 127, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
ImportError: /usr/local/lib/python3.8/dist-packages/mmcv/_ext.cpython-38-x86_64-linux-gnu.so: undefined symbol: _ZN2at4_ops7resize_4callERKNS_6TensorEN3c108ArrayRefIlEENS5_8optionalINS5_12MemoryFormatEEE

As far as I understand, it is related to the mmcv-full installation, but the notebook gave no errors during installation. I tried restarting the runtime after installing all the dependencies and then running only the code-execution cells, but that made no difference.

Update: I changed the version of mmcv-full, followed the README.md, and everything works well!

The link to the notebook is in the previous comment. I'll be cleaning it up and making it more presentable.
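An `undefined symbol` error from `mmcv/_ext...so` usually means the prebuilt mmcv-full extension was compiled against a different PyTorch version than the one installed in the runtime, which fits with the fix here being a different mmcv-full version. A minimal sketch for picking a matching wheel index, assuming the mmcv 1.x install pattern `https://download.openmmlab.com/mmcv/dist/{cu_version}/{torch_version}/index.html` (ViT-Adapter uses mmcv 1.x, as the `mmcv/runner` paths in the first traceback show). The helper name is hypothetical, and which torch/CUDA pairs actually have wheels should be checked against the mmcv installation docs.

```python
from typing import Optional


def mmcv_find_links(torch_version: str, cuda_version: Optional[str]) -> str:
    """Build an OpenMMLab wheel index URL for mmcv-full 1.x (hypothetical helper).

    torch_version: e.g. torch.__version__, such as "1.13.0+cu116"
    cuda_version:  e.g. torch.version.cuda, such as "11.6", or None for CPU-only
    """
    # Drop the local build tag ("+cu116") and keep only major.minor.
    major, minor = torch_version.split("+", 1)[0].split(".")[:2]
    # Assumption: mmcv 1.x indexes its wheels by torch x.y.0 and reuses them
    # for later patch releases of the same x.y.
    torch_tag = f"torch{major}.{minor}.0"
    cuda_tag = "cpu" if cuda_version is None else "cu" + cuda_version.replace(".", "")
    return f"https://download.openmmlab.com/mmcv/dist/{cuda_tag}/{torch_tag}/index.html"


# In the notebook (requires torch):
#   import torch
#   print(mmcv_find_links(torch.__version__, torch.version.cuda))
# then install the mmcv-full version pinned in the README with pip's -f option.
```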

Your work is really excellent! Could I share your Colab notebook link in the README?

It would be an honor!! Let me know how else I can help! 🥳

I have added some text prompts to make it clear what the code is doing. I will be improving it more after work/classes!

@czczup

I got a report of an error in the Colab notebook; it seems to be an issue with the torch installation.

I will be addressing it soon. Until then, let me know if you find a fix; it seems straightforward.

The issue should be fixed. The author of the report opened issue #103.

I'll be tracking it over there.