FaceJam

FaceJam (HAMR 2018)

The goal of this 2-day hackathon project was, given a song and an image with a face in it, to make a program that automatically detects the face and makes a music video in which the face's eyebrows move to the beat and the face changes its expression depending on where we are in the song (e.g. verse vs. chorus). Click on the thumbnail below to see an example animating the face of Dwayne Johnson ("The Rock") to go along with Alien Ant Farm's cover of "Smooth Criminal":

And here is an example of animating Paul McCartney's face to "Come Together":

Dependencies

This requires that you have numpy/scipy installed, as well as ffmpeg for video loading and saving, dlib for facial landmark detection (pip install dlib), librosa for audio features (pip install librosa), and madmom for beat tracking (pip install madmom).

Usage

To run this program on your own songs, first check it out as follows:

git clone --recursive https://github.com/ctralie/FaceJam.git

You will also need to download a dlib facial landmarks file, shape_predictor_68_face_landmarks.dat, and place it at the root of the repository.

Then, type the following at the root of the FaceJam directory:

python FaceJam.py --songfilename (path to your song) --imgfilename (path to image with a face in it) --videoname (output name for the resulting music video, e.g. "myvideo.avi")

To see more options, including the number of threads to use (to make it faster on a multicore machine), type

python FaceJam.py --help

Algorithm / Development

Below I describe some of the key steps of the algorithm.

Piecewise Affine Face Warping (Main Code: FaceTools.py, GeometryTools.py)

We use the Delaunay triangulation on the facial landmarks to create a set of triangles. We then define a piecewise affine (triangle to triangle) warp that extends the map f, which sends facial landmarks in one position to facial landmarks in another position, to a map g that sends all pixels in the face bounding box in one position to the pixels in the bounding box of the face in the other position. Below is an example of a Delaunay triangulation on The Rock's face:

And below is an example using this Delaunay triangulation to construct piecewise affine maps for randomly perturbed landmarks:

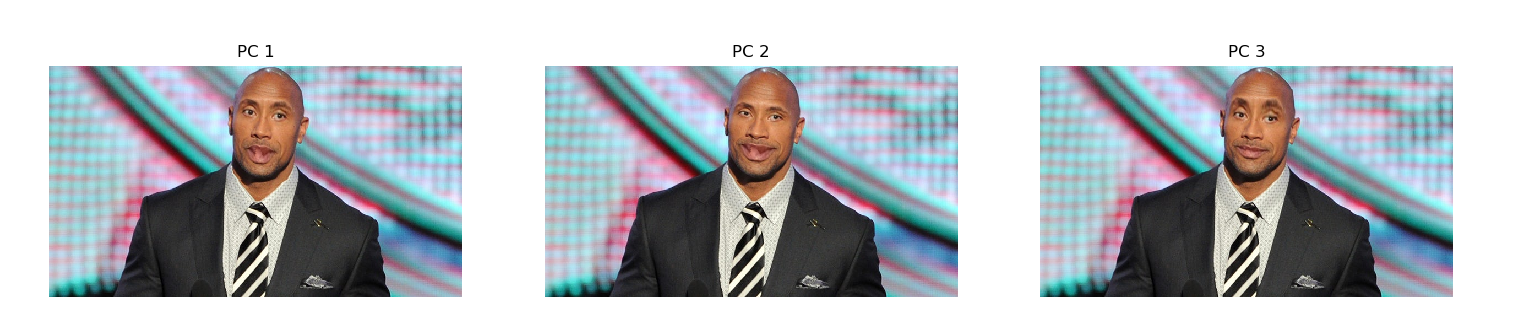
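As a rough illustration of the idea (not the code in FaceTools.py or GeometryTools.py), here is a minimal sketch of a piecewise affine map built with `scipy.spatial.Delaunay`. The function name `piecewise_affine_map` and its arguments are hypothetical. Each point is expressed in barycentric coordinates of the source triangle that contains it, and those same weights are then applied to the corresponding destination triangle. Points outside the triangulation are returned as NaN in this sketch.

```python
import numpy as np
from scipy.spatial import Delaunay


def piecewise_affine_map(src_landmarks, dst_landmarks, points):
    """Send 2D points through the triangle-to-triangle warp that moves
    src_landmarks (N x 2) onto dst_landmarks (N x 2).
    Hypothetical helper for illustration only."""
    tri = Delaunay(src_landmarks)
    # Index of the source triangle containing each point (-1 if outside)
    simplex = tri.find_simplex(points)
    inside = simplex >= 0
    # tri.transform[s] holds the affine map from xy to barycentric coords
    T = tri.transform[simplex[inside]]            # shape (n, 3, 2)
    delta = points[inside] - T[:, 2]              # offset from reference vertex
    b = np.einsum('nij,nj->ni', T[:, :2], delta)  # first two barycentric coords
    bary = np.c_[b, 1 - b.sum(axis=1)]            # all three, summing to 1
    # Landmark indices of each triangle's three corners
    verts = tri.simplices[simplex[inside]]        # shape (n, 3)
    out = np.full(points.shape, np.nan)
    # Same barycentric weights, applied to the moved triangle corners
    out[inside] = np.einsum('ni,nij->nj', bary, dst_landmarks[verts])
    return out
```

To warp an image rather than a handful of points, one would typically run this map in the reverse direction (from output pixel locations back to source locations) and then sample the source image there; that step is left out here.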

Facial Expressions PCA / Barycentric Face Expression Cloning (Main Code: ExpressionsModel.py)

Next, I took a video of myself making a bunch of facial expressions to make a "facial expressions dictionary" of sorts. I perform a Procrustes alignment of all of the facial landmarks to the first frame to control for rigid motions of my head. Then, considering the collection of xy positions of all of my facial landmarks in each frame as one big vector, I perform PCA on these vectors to learn a lower dimensional coordinate system for the space of my expressions.
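The two steps above can be sketched with plain numpy as follows. This is only an illustration, not the code in ExpressionsModel.py: the function names are hypothetical, and the alignment is assumed to be rotation plus translation without scaling, since the text only mentions rigid motions.

```python
import numpy as np


def procrustes_align(X, ref):
    """Rigidly align landmarks X (N x 2) to ref (N x 2).
    Assumption: rotation + translation only, no scaling."""
    Xc = X - X.mean(axis=0)
    Rc = ref - ref.mean(axis=0)
    # Best rotation in the least squares sense (Kabsch / orthogonal Procrustes)
    U, _, Vt = np.linalg.svd(Xc.T @ Rc)
    d = np.sign(np.linalg.det(U @ Vt))  # guard against a reflection
    R = U @ np.diag([1.0, d]) @ Vt
    return Xc @ R + ref.mean(axis=0)


def fit_pca(frames, n_components):
    """PCA on aligned landmarks.
    frames: (F, N, 2) array, one set of landmarks per video frame.
    Returns the mean vector (2N,), the principal axes (k x 2N),
    and the per-frame coordinates (F x k)."""
    X = frames.reshape(len(frames), -1)  # each frame becomes one big vector
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    comps = Vt[:n_components]
    coords = (X - mean) @ comps.T
    return mean, comps, coords
```

Given low dimensional coordinates `c`, a set of landmarks is recovered as `(mean + c @ comps).reshape(-1, 2)`.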

I then use barycentric coordinates of my facial landmarks relative to triangles drawn on The Rock's face to define a piecewise affine warp on his face.

Here's an entire video showing me "cloning" my facial expressions onto The Rock's face this way:

Song Structure Diffusion Maps + Beat Tracking = FaceJam! (Main Code: GraphDitty submodule, FaceJam.py)

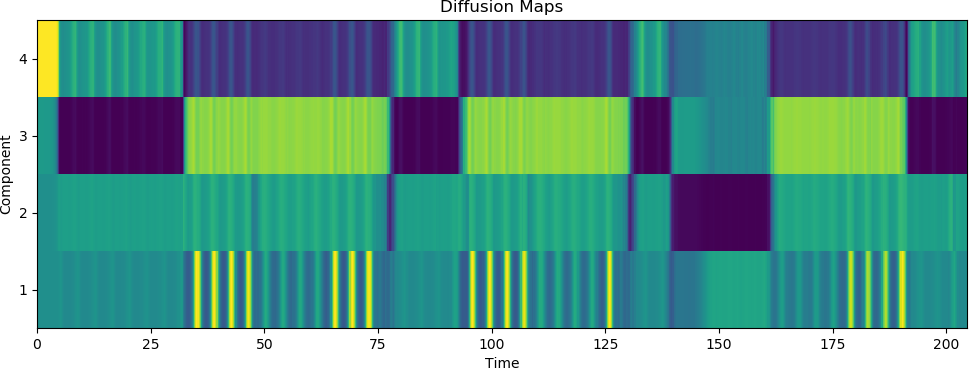

Finally, given a song, I perform beat tracking using madmom, the best beat tracking software I'm aware of, and I also perform diffusion maps on song structure. This is my ISMIR late breaking demo this year; please see my GraphDitty demo code and abstract, and note that GraphDitty is a submodule of this repo. Below is a plot of the coordinates of diffusion maps for Alien Ant Farm's "Smooth Criminal" cover:

Verse/chorus separation is clearly visible in the second component, and there is some finer structure in the other components. I put the diffusion maps coordinates in the PCA space of facial landmarks, add on an additional vertical eyebrow displacement depending on how close we are to a beat onset, do the warp, and synchronize the result to music. And that's it!
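For a concrete picture of that last step, here is a minimal numpy sketch. It is not the code in FaceJam.py. The function names, the Gaussian pulse shape, and the `width`, `lift`, and `scale` parameters are all assumptions made for illustration. The beat times are assumed to come from a beat tracker such as madmom, and the structure coordinates are assumed to be already resampled to one row per video frame. With dlib's 68-point model, the eyebrow landmarks are commonly indices 17 through 26.

```python
import numpy as np


def beat_pulse(frame_times, beat_times, width=0.1):
    """Value in (0, 1] per video frame: 1 exactly on a beat, falling off
    as a Gaussian of the time to the nearest beat.
    Assumption: the pulse shape and width (seconds) are illustrative."""
    frame_times = np.asarray(frame_times, dtype=float)
    beat_times = np.asarray(beat_times, dtype=float)
    dist = np.min(np.abs(frame_times[:, None] - beat_times[None, :]), axis=1)
    return np.exp(-0.5 * (dist / width) ** 2)


def animate_landmarks(mean, comps, structure_coords, pulse,
                      eyebrow_idx, lift=5.0, scale=1.0):
    """Build per-frame landmarks of shape (F, N, 2).
    mean: (2N,) PCA mean, comps: (k, 2N) PCA axes,
    structure_coords: (F, k) song structure coordinates per frame,
    pulse: (F,) beat pulse, eyebrow_idx: list of eyebrow landmark indices.
    lift (pixels) and scale are hypothetical tuning knobs."""
    # Treat the structure coordinates as coordinates in expression PCA space
    flat = mean + scale * structure_coords @ comps
    frames = flat.reshape(len(flat), -1, 2).copy()
    # Image y points down, so subtracting raises the eyebrows on the beat
    frames[:, eyebrow_idx, 1] -= lift * pulse[:, None]
    return frames
```

Each row of the result can then be used as the target landmarks for the face warp on that frame.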