adm-zip



Adding content to an existing archive corrupts data

Overview

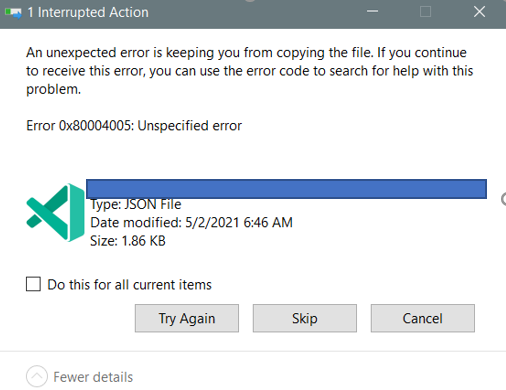

We are trying to add files and folders to an existing archive of about 206 KB. When we add content to it, some of the existing content becomes corrupted, and extracting the files fails with the error shown above.

Package Information

"adm-zip": "^0.5.1"

Code snippet

I pulled some code from our repo and combined it into a single snippet to show what we are doing: we add files to the archive, overwriting any existing entries with the same name.

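```javascript
const AdmZip = require("adm-zip");

// `fullPath` (path to the existing archive) and `files` (objects with
// `path` and `content`) come from our application code.
const admZip = new AdmZip(fullPath);

files.forEach(file => {
  // Overwrite: remove any existing entry with the same name first.
  if (admZip.getEntry(file.path)) {
    admZip.deleteFile(file.path);
  }
  admZip.addFile(file.path, Buffer.from(file.content));
});

admZip.writeZip(fullPath);
```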

Additional Information

I looked into the error code shown in the overview, and it looks like there is a difference in the compression format. According to this page: "The above error messages appear if the .zip file is compressed or encrypted using an algorithm that Windows doesn’t support. Windows supports Deflate, Deflate64 compression methods."
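To compare the methods an archive actually uses against the ones named in that quote, a small lookup like the one below may help. It is a sketch: the method codes are taken from the PKWARE ZIP APPNOTE, `describeMethod` is a hypothetical helper, and treating Store (0) as Explorer-compatible is an assumption, since the quote only names Deflate and Deflate64.

```javascript
// Subset of ZIP compression method codes from the PKWARE APPNOTE.
const METHOD_NAMES = {
  0: "Store",
  8: "Deflate",
  9: "Deflate64",
  12: "BZip2",
  14: "LZMA",
  93: "Zstandard",
  95: "XZ",
  98: "PPMd",
  99: "AES encryption (WinZip AE-x)",
};

// Assumption: Explorer also extracts uncompressed (Store) entries, in
// addition to the Deflate and Deflate64 methods named in the quote.
const EXPLORER_METHODS = new Set([0, 8, 9]);

// Hypothetical helper: name a method code and flag Explorer support.
function describeMethod(method) {
  const name = METHOD_NAMES[method] ?? `unknown (${method})`;
  return { method, name, explorerSupported: EXPLORER_METHODS.has(method) };
}
```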

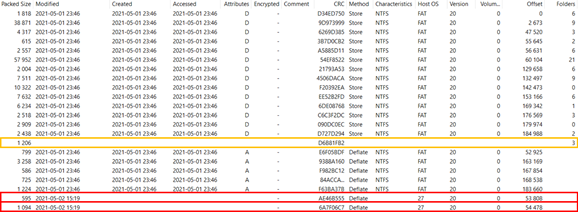

I used 7-Zip to inspect the archive and could see that a compression method is listed for the added files, but not for any of the added directories or subdirectories:

The added content is highlighted. The orange entry is a directory whose method should be Store. The red entry correctly uses Deflate, but shows 27 under Host OS, while the original content shows FAT.
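The Method and Host OS columns that 7-Zip shows can also be read straight from the archive's central directory, which makes it easier to compare original and added entries in a script. This is a minimal sketch assuming a single-disk, non-ZIP64 archive; `listCentralDirectory` and `HOST_NAMES` are hypothetical names, and the field offsets follow the PKWARE APPNOTE. Host OS is the upper byte of the "version made by" field, and the APPNOTE only assigns codes up to 19, which would explain why 7-Zip prints a bare 27 instead of a name.

```javascript
// Host-system codes (upper byte of "version made by") per the PKWARE APPNOTE.
// Codes 20-255 are unassigned, so tools typically show just the number.
const HOST_NAMES = { 0: "FAT", 3: "Unix", 10: "NTFS", 19: "OS X" };

function findEndOfCentralDirectory(buf) {
  // The EOCD record is 22 bytes plus an optional comment of up to 65535
  // bytes, so scan backwards from the end for its signature.
  const minPos = Math.max(0, buf.length - 22 - 0xffff);
  for (let pos = buf.length - 22; pos >= minPos; pos--) {
    if (buf.readUInt32LE(pos) === 0x06054b50) return pos;
  }
  throw new Error("End of central directory record not found");
}

// Hypothetical helper: list each central directory entry with its
// compression method and host OS code.
function listCentralDirectory(buf) {
  const eocd = findEndOfCentralDirectory(buf);
  const count = buf.readUInt16LE(eocd + 10); // total number of entries
  let pos = buf.readUInt32LE(eocd + 16);     // offset of the central directory
  const entries = [];
  for (let i = 0; i < count; i++) {
    if (buf.readUInt32LE(pos) !== 0x02014b50) {
      throw new Error(`Bad central directory signature at offset ${pos}`);
    }
    const madeBy = buf.readUInt16LE(pos + 4);
    const method = buf.readUInt16LE(pos + 10);
    const nameLen = buf.readUInt16LE(pos + 28);
    const extraLen = buf.readUInt16LE(pos + 30);
    const commentLen = buf.readUInt16LE(pos + 32);
    const localOffset = buf.readUInt32LE(pos + 42);
    const name = buf.toString("utf8", pos + 46, pos + 46 + nameLen);
    const hostOS = madeBy >> 8;
    entries.push({
      name,
      method,
      hostOS,
      hostName: HOST_NAMES[hostOS] ?? String(hostOS),
      localOffset,
      isDirectory: name.endsWith("/"),
    });
    pos += 46 + nameLen + extraLen + commentLen; // advance to the next record
  }
  return entries;
}
```

For example, `listCentralDirectory(require("fs").readFileSync(fullPath))` would list every entry of the archive at `fullPath` with its method and host code, so the original and added entries can be diffed side by side.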

I've worked around the issue by reading each file out of the existing archive and adding it to a new AdmZip instance:

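```javascript
const AdmZip = require("adm-zip");

// `rebuildArchive` is just a name for this snippet: copy every file entry
// into a fresh AdmZip instance. Directory entries are skipped, so empty
// directories are not preserved.
function rebuildArchive(fullPath) {
  const temp = new AdmZip(fullPath);
  const newZip = new AdmZip();

  temp.getEntries().forEach(entry => {
    if (!entry.isDirectory) {
      newZip.addFile(entry.entryName, entry.getData());
    }
  });

  return newZip;
}
```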

It seems the issue comes from a difference between how the entries in the target archive were originally added and how new ones are added through the API.

- Could this be caused by the offset of the new entries conflicting with the existing archive?

- Or is it more likely caused by a difference in compression methods?
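The first question can be checked directly on the written file: each central directory record stores the offset of its entry's local header, so a bad offset shows up as a missing local header signature, a name or method that disagrees between the two headers, or entries whose data overlaps. The sketch below assumes a single-disk, non-ZIP64 archive and uses a hypothetical `checkLocalHeaders` helper; an empty result only means these structural checks passed (CRCs are not verified).

```javascript
const EOCD_SIG = 0x06054b50; // end of central directory
const CEN_SIG = 0x02014b50;  // central directory file header
const LOC_SIG = 0x04034b50;  // local file header

// Hypothetical helper: returns a list of problems (empty if none found).
function checkLocalHeaders(buf) {
  // Locate the EOCD record, scanning backwards past any archive comment.
  let eocd = -1;
  for (let p = buf.length - 22; p >= Math.max(0, buf.length - 22 - 0xffff); p--) {
    if (buf.readUInt32LE(p) === EOCD_SIG) { eocd = p; break; }
  }
  if (eocd < 0) return ["end of central directory record not found"];

  const count = buf.readUInt16LE(eocd + 10);
  const cdOffset = buf.readUInt32LE(eocd + 16);
  const problems = [];
  const spans = [];
  let pos = cdOffset;
  for (let i = 0; i < count; i++) {
    if (pos + 46 > buf.length || buf.readUInt32LE(pos) !== CEN_SIG) {
      problems.push(`central directory entry ${i}: bad signature at ${pos}`);
      break;
    }
    const method = buf.readUInt16LE(pos + 10);
    const compSize = buf.readUInt32LE(pos + 20);
    const nameLen = buf.readUInt16LE(pos + 28);
    const extraLen = buf.readUInt16LE(pos + 30);
    const commentLen = buf.readUInt16LE(pos + 32);
    const local = buf.readUInt32LE(pos + 42);
    const name = buf.toString("utf8", pos + 46, pos + 46 + nameLen);
    pos += 46 + nameLen + extraLen + commentLen;

    // The stored offset must point at a local header for the same entry.
    if (local + 30 > buf.length || buf.readUInt32LE(local) !== LOC_SIG) {
      problems.push(`${name}: no local header at offset ${local}`);
      continue;
    }
    const localMethod = buf.readUInt16LE(local + 8);
    const localNameLen = buf.readUInt16LE(local + 26);
    const localExtraLen = buf.readUInt16LE(local + 28);
    const localName = buf.toString("utf8", local + 30, local + 30 + localNameLen);
    if (localName !== name) problems.push(`${name}: local header names "${localName}"`);
    if (localMethod !== method) {
      problems.push(`${name}: method ${localMethod} (local) vs ${method} (central)`);
    }
    // End of the compressed data; a data descriptor would only add bytes after it.
    spans.push({ name, start: local, end: local + 30 + localNameLen + localExtraLen + compSize });
  }

  // Entries must not overlap each other or run into the central directory.
  spans.sort((a, b) => a.start - b.start);
  for (let i = 0; i < spans.length; i++) {
    const limit = i + 1 < spans.length ? spans[i + 1].start : cdOffset;
    if (spans[i].end > limit) problems.push(`${spans[i].name}: data overlaps offset ${limit}`);
  }
  return problems;
}
```

Running `checkLocalHeaders(require("fs").readFileSync(fullPath))` on the archive after `writeZip` would show whether the added entries point at the wrong place, which helps separate an offset problem from a compression-method problem.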

Thanks for reporting, I will look into it.