polis

polis copied to clipboard

polis copied to clipboard

Feature/convict with config volume

@patcon (cc: @tamas-soos-toptal) This is a replacement of https://github.com/compdemocracy/polis/pull/1275. It doesn't require a makefile.

I tried moving the FROM polis_config AS config statement later in the docker build process, but I was unsuccessful with my first try.

@patcon, we think that this resolves your concerns with my original pull request without needed a makefile.

My next step is to see how it works in practice with secrets kept outside of the repo and to see whether it is compatible with docker or aws secrets.

-Chris

# Gulp v3 stops us from upgrading beyond Node v11

FROM polis_config AS config

FROM node:11.15.0-alpine

WORKDIR /app

RUN apk add git --no-cache

COPY package*.json ./

RUN npm ci

COPY --from=config /config/config.js /app/config/

COPY --from=config /config/*.yaml /app/config/

COPY . .

ARG GIT_HASH

RUN npm run deploy:prod

Thanks! Will review the rest later, and see about those failing tests

One minor thing to note is the depends_on key is for the start order of containers, and not build order. This could lead to unexpected behavior when COPY'ing from local images. We already kinda hit wierd stuff with file-server. I believe using multistage builds from a prebuilt local image (as opposed to from a stage built earlier in the dockerfile) means we have to be manual about how we start/build. Otherwise, I believe we sometimes get setups that aren't actually up-to-date until the second (or now third) rerun.

But we're already hitting this with file-server, so it's no worse off than before.

Worst case, the fix is just a startup script that runs like:

docker-compose up --build --detach config

docker-compose up --build --detach client-admin client-participation client-report math server nginx-proxy

docker-compose up --build --detach file-server

Instead of docker-compose up --build --detach

@patcon,

- The E2E test failures were similar to those I saw on my build machine. However, I got the same errors with dev.

- The bundlewatch error is because of the missing

polis.config.template.jsfile. I've pushed a change to the bundlewatch config file that should fix that particular error.

The new bundlewatch error is also related to the config volume. Client-admin works, but client-participation does not.

Can you explain why the bundlewatch code uses npm run build:webpack for client-admin and npm run deploy:prod for client-participation?

Thanks!

- name: "Install & Build: client-admin"

working-directory: client-admin

run: |

npm install

npm run build:webpack

- name: "Install & Build: client-participation"

working-directory: client-participation

run: |

npm install

npm run deploy:prod

# So directory stays consistent between builds for comparison.

mv dist/cached/* dist/cached/xxxxxxxxx

On another note, I found some information on using docker secrets with node convict.

In the meantime, a user can place all of their secrets in a yaml file that is kept out of version control.

The E2E test failures were similar to those I saw on my build machine. However, I got the same errors with dev.

I suspect the errors in CI and the ones you're seeing locally might look similar but have different origins...? embed and integration specs always fail locally when make e2e-prepare isn't run before build, which is commonly overlooked (and poorly documented 😬 ):

https://github.com/compdemocracy/polis/blob/f33d0b0471fe2d31d08321b58c74b5e59bb4fe77/Makefile#L17-L19

But the failures in CI, this wouldn't have that issue, because we do that here:

https://github.com/compdemocracy/polis/blob/f33d0b0471fe2d31d08321b58c74b5e59bb4fe77/.github/workflows/cypress-tests.yml#L59-L60

So it's likely something related to domainWhitelist or dev_mode being set incorrectly. These config only come into play for embeds/integrations.

Can you explain why the bundlewatch code uses npm run build:webpack for client-admin and npm run deploy:prod for client-participation?

Heh, just arcane reasons :) client-participation just has the original script name, but they're functionally the same :) I think for client-admin, I snuck a better name for the script (build:webpack) into the package.json, but client-participation hasn't been updated much at all, so no opportunity

@patcon, thanks for the explanations above!

And to confirm, @tamas-soos-toptal and I are are trying to modify our pull request so that it passes all of the github actions.

Is this the right thing to do next?

Is this the right thing to do next?

Definitely. Happy to poke around before, but getting tests passing is usually a good first step before seeking review/feedback. It's possible that maintainers haven't really looked at this while they're failing

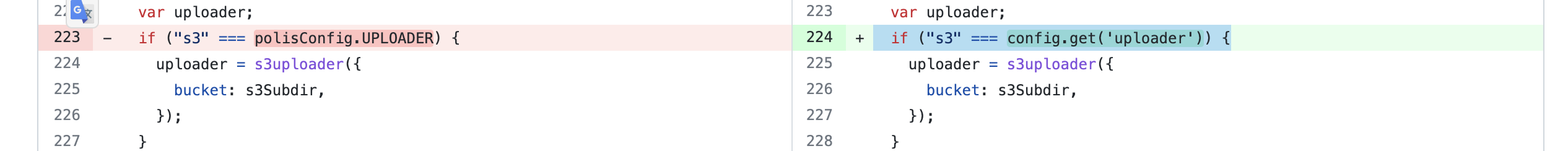

Small personal suggestion based on how I think about things when progress stalls on a big contribution: if you wanted to make this PR seem less intimidating to review for the team, I would consider looking for a way to wrap convict's method calls to allow polisConfig.DISABLE_PLANS to run config.get('fb_app_id'). It might not be the most elegant thing architecturally, but making changesets appear smaller is sometimes helpful. You could basically just instantiate polisConfig object at the top of each file with polis.config.js instead of polis.config.json (since var polisConfig = require("./polis.config"); won't need any change), so the diff would be very minimal

Note, I'm still pretty on-the-fence about building config into a new container image and them pulling those files from an image into client-* during build. This strikes me as docker gymnastics just to avoid copying 3 files on the host system before running docker. Add it leads to unexpected side-effects imho (needing to pre-build one container first), which I think will bite us later (e.g., I've been exploring using Helm as a deploy tool to get Polis managed using the Kubernetes orchestration tool, and I don't think it would understand this thing we're doing)

So personally, I think I'm unfortunately still a -1 on what's happening in client-* containers. But again, it's really easily resolved with just copying a file into each build context.

@patcon, we are getting a message "4 workflows awaiting approval. First-time contributors need a maintainer to approve running workflows. Learn more." We did not need this before. Is there a quota that we have passed?

@tamas-soos-toptal, I want your priority to still be understanding and fixing the workflow errors.

@patcon, I think that an advantage of Tamas's docker solution is that deploying new configs would be faster and easier. The config volume would be recreated and the nodes would just need a rolling restart. The slow part of polis-client-admin is building and distributing the new static code, so think that the docker volume and makefile solutions are comparable.

@tamas-soos-toptal & @patcon,

I think that Patrick's suggestion below makes sense. But, can polisConfig.FB_APP_ID really be wired to run config.get('fb_app_id'), or would it need to be written polisConfig.FB_APP_ID()` (with brackets)? If the brackets are needed, all of the diffs would still show up. (I'm not a js/ts expert.)

Small personal suggestion based on how I think about things when progress stalls on a big contribution: if you wanted to make this PR seem less intimidating to review for the team, I would consider looking for a way to wrap convict's method calls to allow polisConfig.FB_APP_ID to run config.get('fb_app_id'). It might not be the most elegant thing architecturally, but making changesets appear smaller is sometimes helpful. You could basically just instantiate polisConfig object at the top of each file with polis.config.js instead of polis.config.json (since var polisConfig = require("./polis.config"); won't need any change), so the diff would be very minimal

Is there a quota that we have passed?

Ah yeah, I hate it, but that's something that arrived due to GitHub abuse (it's platform-wide): https://github.blog/2021-04-22-github-actions-update-helping-maintainers-combat-bad-actors/

I usually just create a PR from my feature branch to the mainline of my own repo, and test there. The workflows should still run on PRs completely within your own repo.

Rushing out door, but will read the rest of your msg later :)

would it need to be written

polisConfig.FB_APP_ID()(with brackets)?

Should be able to exec the config.get() commands in polis.config.js and export an object with a bunch of const keys export {FB_APP_ID: config.get('FB_APP_ID'), ... } (but more programmatically :) ).

config volume would be recreated and the nodes would just need a rolling restart. The slow part of polis-client-admin is building and distributing the new static code,

Agree with config volume for server-side stuff, but for the react builds, I'm still hearing "faster and easier" as a reference to avoiding running 3 copy operations before running docker, which I can't understand.

Maybe this suggestion has been lost to the sands of time, but for client-*, there is much more merit in just replacing tokens on-place as the files are being served through file-server or server, as the codebase does for other places it needed dynamic data, like so:

https://create-react-app.dev/docs/title-and-meta-tags/#generating-dynamic-meta-tags-on-the-server

https://github.com/compdemocracy/polis/blob/f33d0b0471fe2d31d08321b58c74b5e59bb4fe77/client-participation/index.html#L24

https://github.com/compdemocracy/polis/blob/f33d0b0471fe2d31d08321b58c74b5e59bb4fe77/server/src/server.ts#L16804-L16865

And in makeFileFetcher:

https://github.com/compdemocracy/polis/blob/f33d0b0471fe2d31d08321b58c74b5e59bb4fe77/server/src/server.ts#L16644-L16651

The perk of the above is that it even allows public images to be configurable (without hardcoding things into built images)

@patcon and @tamas-soos-toptal,

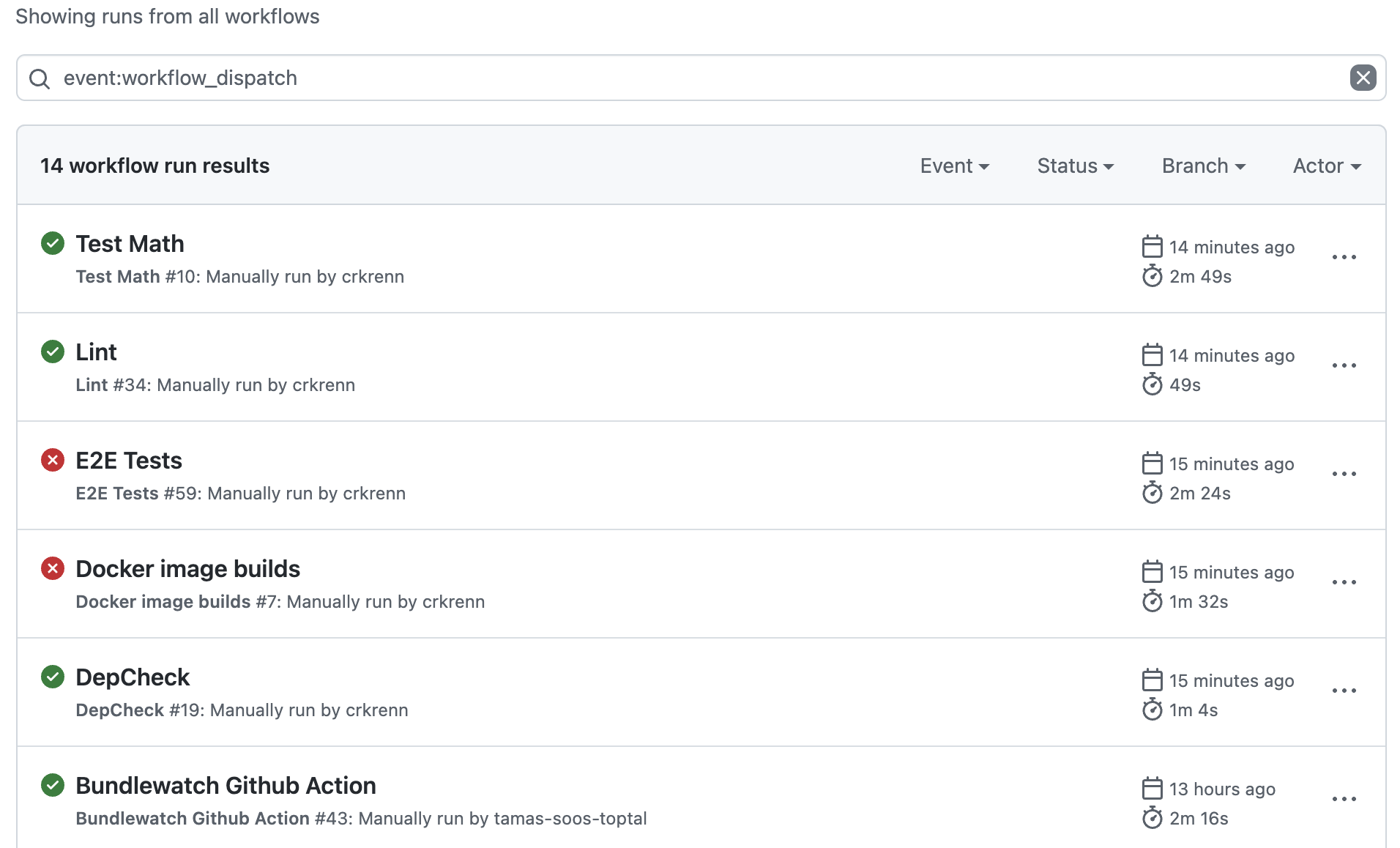

I added "workflow_dispatch" to all of the workflow files so that we can run the tests manually. There may be a more elegant way, but this works for me. Tamas may need to work from his fork of my repo so that this works.

I've made some incremental changes, but I still get an error:

Error: There is no matching file for client-participation/dist/cached/*/js/polis.js

Error: There is no matching file for client-participation/dist/cached/*/js/vis_bundle.js

[INFO] Retrieving comparison

@patcon & @tamas-soos-toptal,

When I'm in a hurry, I tend to be terse and not use a lot of words ;).

Below are some more details on where the bundlewatch error for client-participation is right now in the feature/convict-with-config-volume (current default) branch of https://github.com/crkrenn/polis.

The error is:

Error: There is no matching file for client-participation/dist/cached/*/js/polis.js

Error: There is no matching file for client-participation/dist/cached/*/js/vis_bundle.js

This requirement comes from the .bundlewatch.config.js file:

"files": [

{

"path": "client-admin/dist/*.js",

"maxSize": "180 kB",

},

{

"path": "client-participation/dist/cached/*/js/polis.js",

"maxSize": "150 kB",

},

{

"path": "client-participation/dist/cached/*/js/vis_bundle.js",

"maxSize": "200 kB",

},

]

The client-admin files seem to be copied successfully with the client-admin/webpack.config.js configuration file:

module.exports = {

entry: ["./src/index"],

output: {

path: path.join(__dirname, "dist"),

filename: "admin_bundle.js",

publicPath: "/dist/",

},

I've copied this coding into the client-participation/webpack.config.js file:

module.exports = {

entry: [

"./vis2/vis2"

],

output: {

path: path.join(__dirname, "dist"),

filename: "vis_bundle.js",

publicPath: "/dist/",

},

but it does not work. Any suggestions on updating the client-participation/webpack.config.js file?

What should entry point to?

I was only able to trigger the bundlewatch action manually in the crkrenn:feature/convict-with-config-volume repo but by changing the .bundlewatch.config.js file, now it runs successfully. You can see the results here. I changed the client-participation part to be like the client-admin since the webpack config is essentially the same, too:

"files": [

{

"path": "client-admin/dist/*.js",

"maxSize": "180 kB",

},

{

"path": "client-participation/dist/*.js",

"maxSize": "200 kB",

},

]

I'm glad you disabled some stuff to advance with the work you care about. For correction before the eventual merge, I can explain why it was there and the purpose.

You're hitting this issue on CI because this was disabled: https://github.com/crkrenn/polis/blob/8ea15243c8bd0a77a5f2007f531e978b55178c96/.github/workflows/bundlewatch.yml#L59-L65

The point of that was to rename the filenames (which have hex hashes in them, for cachebusting on production), so that the bundlewatch is monitoring consistent filenames that don't change each time. This was required to give real feedback on the change in size, instead of saying in one PR that admin/<whatever>/bundle.abcdef1245.js got from 120kb to 0kb, and admin/<whatever>/bundle.feccaf23.js went from 0kb to 121kb.

There's prob merit it putting this little "move the file" one-liner into the root Makefile with some mention in the README, so that bundlewatch can work better locally (without spying on a single line in a github action workflow). I've only used bundlewatch on CI, so I missed this poor developer experience of trying to run it locally.

@tamas-soos-toptal (cc: @patcon),

Thanks for getting the bundlewatch test to work! "Lint", "DepCheck", and "Math" tests are also working.

Can you take a look at the e2e errors next?

One blocking error (see below) is because I updated the package.json file but did not update package-lock.json. Do you know how to fix this? (A simple npm install on our dev machine failed... Feel free to install any needed packages to fix this.)

Thanks!

-Chris

npm ERR! cipm can only install packages when your package.json and package-lock.json or npm-shrinkwrap.json are in sync. Please update your lock file with `npm install` before continuing.

npm ERR!

npm ERR!

npm ERR! Missing: compression-webpack-plugin@^7.1.2

npm ERR! Missing: lodash-webpack-plugin@^0.11.6

npm ERR!

npm ERR! A complete log of this run can be found in:

npm ERR! /root/.npm/_logs/2022-01-27T06_09_04_113Z-debug.log

The command '/bin/sh -c npm ci' returned a non-zero code: 1

ERROR: Service 'client-participation' failed to build : Build failed

crkrenn_gmail_com@polis-dev-4:~/dev/crk/polis$ cd client-participation/

crkrenn_gmail_com@polis-dev-4:~/dev/crk/polis/client-participation$ npm install

npm WARN npm npm does not support Node.js v10.24.0

npm WARN npm You should probably upgrade to a newer version of node as we

npm WARN npm can't make any promises that npm will work with this version.

npm WARN npm Supported releases of Node.js are the latest release of 4, 6, 7, 8, 9.

npm WARN npm You can find the latest version at https://nodejs.org/

npm ERR! code EUNSUPPORTEDPROTOCOL

npm ERR! Unsupported URL Type "npm:": npm:[email protected]

npm ERR! A complete log of this run can be found in:

npm ERR! /home/crkrenn_gmail_com/.npm/_logs/2022-01-27T06_09_20_292Z-debug.log

@crkrenn I was able to resolve the issue with the package-lock.json file so now the docker-compose build process in successful within the e2e tests. However, there are some issues with the cypress tests so that will be the next step, I think. @patcon I am not sure if it's only me but I wasn't able to make the build process run with the current LTS version of node (16.13.2 with npm version 8.1.2) so I had to downgrade node and npm to 14.18.3 and 6.14.15, respectively. Is that a known issue ?

That's awesome!

I had to downgrade node and npm to 14.18.3 and 6.14.15, respectively. Is that a known issue ?

I actually didn't know about this constraint for running cypress, so thanks for mentioning! Hm, we could make this more explicit by adding a setup-node action to the e2e workflow, like we have in the bundlewatch workflow:

https://github.com/compdemocracy/polis/blob/f33d0b0471fe2d31d08321b58c74b5e59bb4fe77/.github/workflows/bundlewatch.yml#L27-L31

Re: the workflows not running in this PR.

Sorry that your ability to run workflows hasn't been approved. I feel like this makes things a bit more tedious on your end due to having to run locally.

We could move the PR over to a fork at https://github.com/g0v-network/polis, where I have more ability to resolve this stuff. Would that work? (We wouldn't be aiming to actually merge over there, but when we're done review, we could push the changes back here for the final decision)

@patcon, @tamas-soos-toptal, and I had a google meet this morning.

A current issue is that the e2e tests don't pass on the feature/convict-with-config-volume branch branch.

To test whether this is a repository issue or a branch issue, I created a dev-for-ci-test branch that is an exact clone of the polis dev branch. The only change was that I added the workflow_dispatch option to the github workflows.

e2e tests on the dev-for-ci-test are currently running.

One reason we are doing this is that we get the same failures when we npm run e2e:headless on dev-for-ci-test and feature/convict-with-config-volume branch:

"e2e:headless": "cypress run --spec 'cypress/integration/polis/**'"

OK:

- Good news: e2e tests ran on

dev-for-ci-test(we can use github.com/crkrenn for testing) - Bad news: e2e tests ran on

dev-for-ci-test(we need to track down some errors)

Failed test on feature/convict-with-config-volume branch: https://github.com/crkrenn/polis/actions/runs/1761901222 Successful test on dev-for-ci-test: https://github.com/crkrenn/polis/actions/runs/1762076733

My guess is that it is not a file directory structure issue. One difference that may be important is the "e2e:headless" line that I added to the e2e/package.json file. I'm running without that change here: https://github.com/crkrenn/polis/actions/runs/1762719078

@patcon, I've invited you to my branch of polis, but I'd prefer if you don't push changes (that might conflict with what Tamas is doing.

@patcon, I've made a branch for you: https://github.com/crkrenn/polis/tree/feature/convict-with-config-volume-patcon. You should be able to push and pull and run tests.

- bad news: my

e2e:headlesshypothesis was incorrect.

https://github.com/crkrenn/polis/actions/workflows/cypress-tests.yml Failure: E2E Tests #64: Manually run by crkrenn Success: E2E Tests #63: Manually run by crkrenn

Below are screen shots of the differences. There are some differences in the first two screen shots that don't make sense, but which are hopefully unimportant.

The third screen shot shows that the convict branch "does not respond properly to being closed".

I am almost certain this is because of a miscommunication between Tamas and myself.

All of the configuration settings the config/env_all.yaml and config/schema.yaml files in the convict branch need to be synchronized with the polis.config.template.js files in the dev branch.

Before we do this, I think that we need to consolidate all of the variables into the schema.yaml file, remove any duplicates, and to delete the env_all.yaml file.

It also might be best to wait until Monday to discuss.

(base) crkrenn@admins-MBP polis % git branch

* dev

origin

(base) crkrenn@admins-MBP polis % find . -name "polis*config*js" -exec ls -ltr {} \;

-rw-r--r--@ 1 crkrenn staff 391 Jan 15 07:43 ./client-report/polis.config.template.js

-rw-r--r--@ 1 crkrenn staff 806 Jan 15 07:43 ./client-admin/polis.config.template.js

-rw-r--r--@ 1 crkrenn staff 1245 Jan 15 07:43 ./client-participation/polis.config.template.js

@patcon & @tamas-soos-toptal,

I started the merging process. schema.yaml is alphabetized. I don't think there are any missing variables. There are some differences between variable values in the schema and in the dev branch. These should be easy to update, but I haven't done so yet.

@patcon & @tamas-soos-toptal,

Hopefully, we can solve our e2e test problem quickly.

If not (and for future applications), I think it would be useful to document how to:

- run

polis-admin, etc. in docker containers (instead of compiling to a static file hosted by the file server), and - connect a chrome debugger to an instance running in docker

- To make this process even easier, I think it would be very valuable to create a makefile in the root polis directory for common operations:

- Bring docker images up

- Bring docker images down

- Rebuild docker images

- Remove all docker artifacts

- Bring polis up without the file server

- Bring polis up in

--inspectmode so that we can attach a chrome debugger to all of the polis pieces.

Does this all make sense?

👍 Very in favour of (3)!

Def eager for (1) and (2), though (1) is perhaps a bit of a task. (1) and (2) also require a strategy of having a prod and dev container environment, which is unfortunately a larger conversation (related: https://github.com/compdemocracy/polis/issues/411#issuecomment-988385951)

Had another call today, and made some progress on this. Notes here: https://hackmd.io/bE9uUQ6HQFSDiHlbbJ0Bgg?view#2022-01-31

Will be checking back with one another in maybe a week. @tamas-soos-toptal and @crkrenn will continue to work through it. Same timeslot next week, for simplicity?

@patcon & @tamas-soos-toptal,

Thanks again for the call today!

I reviewed the differences between config settings in the dev and convict branches and did not see anything obvious to change. I did change the "port" config variable from '5000' to 'undefined'. I am testing that now at: https://github.com/crkrenn/polis/runs/5014739245?check_suite_focus=true. It failed in the usual place.

Conversation: Configure

Closing

1) responds properly to being closed

@tamas-soos-toptal, I'd like you to start with https://github.com/crkrenn/polis/tree/feature/convict-with-config-volume-debug (which is currently identical to https://github.com/crkrenn/polis/tree/dev-for-ci-test) and to systematically add and test the changes necessary to make this similar to https://github.com/crkrenn/polis/tree/feature/convict-with-config-volume.

I'll let you decide what order to do things in, but I think you need to do the convict and dockerfile changes first. It will be interesting to see if everything works with only these changes but before you replace any process.env code.

Please let me know if you have any questions!

Very gratefully,

-Chris

@crkrenn,

I started the process of redoing all the changes on (https://github.com/crkrenn/polis/tree/feature/convict-with-config-volume-debug). As a first step, I created the config container and added it to the Dockerfiles of all the necessary containers (and for now, I also left the previous config file in them). With these changes, the E2E tests are running successfully so the next step is to start using the convict type of config in the containers. I am planning to do it one by one if that's okay for you.

PS: @crkrenn & @patcon thanks for the call yesterday! I think it was really helpful!

Best, Tamas

@tamas-soos-toptal This sounds great! Please send an email to my democracygps.org account if you have any questions as I will see that message more quickly.

@tamas-soos-toptal, can you give us a status update? Thanks! Chris

@crkrenn, sorry for the late follow-up. I am currently working on locating the issue behind the failing E2E tests. So far I have the following results:

- I reapplied the changes made to the client-admin container and the tests ran successfully

- after some trial and error kind of work, I figured that it seems like there is an issue with the gulpfile.js in client-participation. Specifically, on line 291.

So this works:

template({

polisHostName: polisConfig.SERVICE_HOSTNAME || "pol.is",

})

And if I replace it with the following, I get the well-known E2E test failures:

template({

polisHostName: yaml_config.get('service_hostname') || "pol.is",

})

I am currently trying to figure out this issue and I will check if there is anything else what can cause and failing test.

Best, Tamas

@crkrenn, quick update: it seems like I found the issue. The previous version used the polis.config.template.js file, in which the value for the SERVICE_HOSTNAME is "localhost". However, the new convict approach uses the schema.yaml file and there the default value was set to undefined so I changed it to "localhost" and now the test runs successfully. I will check if there is any issue with any of the other files changed and will get back to you with the results.

Tamas

@crkrenn, it seems like with this change the client-participation container is clean. I reintroduced all the changes and the E2E tests are running successfully. I will continue with the remaining containers tomorrow and will let you know once I have the results.

Tamas