`test_bump_pre_commit_changelog` is failing on `master` (afa0d93) for some environments

Description

I am seeing some tests failing:

test_bump_pre_commit_changelog_fails_always[True]test_bump_pre_commit_changelog[True]test_bump_pre_commit_changelog_fails_always[False]

See resolution (pre-commit was silently failing): https://github.com/commitizen-tools/commitizen/issues/520#issuecomment-1139207915

Steps to reproduce

- cloned repo

- poetry install

- set up hooks

./scripts/test

Current behavior

./scripts/test:

FAILED tests/test_bump_create_commit_message.py::test_bump_pre_commit_changelog_fails_always[True] - Failed: DID NOT RAISE <class 'commitizen.exceptions.BumpCommitFailedError'>

FAILED tests/test_bump_create_commit_message.py::test_bump_pre_commit_changelog[True] - AssertionError: assert '## 0.1.1 (20...\n\n- _test\n' == '## 0.1.1 (20...\n- \\_test\n'

FAILED tests/test_bump_create_commit_message.py::test_bump_pre_commit_changelog_fails_always[False] - Failed: DID NOT RAISE <class 'commitizen.exceptions.BumpCommitFailedError'>

I was able to alter this assert in test_bump_pre_commit_changelog to get that test to work.

What could be different about my machine? I assume the \\ had purpose otherwise the CI would have failed a couple of days ago when this test was created.

Desired behavior

test pass

Screenshots

No response

Environment

commit hash: afa0d93

platform linux -- Python 3.6.8, pytest-6.2.5, py-1.11.0, pluggy-1.0.0

plugins: regressions-2.3.1, xdist-2.5.0, mock-2.0.0, freezegun-0.4.2, forked-1.4.0, cov-2.12.1, datadir-1.3.1

@yajo sorry to bother you over something probably really straightforward.

Any guess what the purpose of the \\ was?

Any guess what the purpose of the

\\was?

The first \ is to escape the 2nd. Prettier would add a leading \ to some text that starts with _ to avoid markdown treating that _ as the start of a italic marker.

This test exercises the case that https://github.com/commitizen-tools/commitizen/pull/505 is meant to fix.

Thank you. Avoiding the italic markdown makes a lot of sense.

Cause:

/tmp directory, used by pytest, was mounted with noexec option. (CIS 1.1.2)

No execution was allowed within /tmp but the test had generated .git/hooks/pre-commit within that mount and so the test failed.

Workaround:

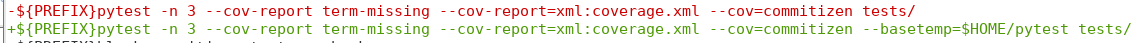

Edited scripts/test locally:

Want a PR to improve git logging?

It appears git has a zero return code even when commit hooks fail to run.

This is what was hidden in the unlogged c.err (with c.return_code == 0):

hint: The \'.git/hooks/pre-commit\' hook was ignored because it\'s not set as executable.

hint: You can disable this warning with `git config advice.ignoredHook false`.

(#518 is sort of related.)

Wondering will this on be solved by #540 as well?

sort of. #540 improves the logging so that the tests raise this warning before failing:

hint: The \'.git/hooks/pre-commit\' hook was ignored because it\'s not set as executable.

hint: You can disable this warning with `git config advice.ignoredHook false`.

The actual "workaround" is shown in my last comment.

Sorry for the later reply. After reading through the whole discussion again, I'm a bit confused. What is the issue we're facing now? Is it failed test_bump_pre_commit_changelog_fails_always test_bump_pre_commit_changelog and test_bump_pre_commit_changelog_fails_always? If so, how does No execution was allowed within /tmp affected it?

the test generates it's own temporary hook via cmd.run("pre-commit install") normally that's not a problem but my sys-admin seem to have removed execute permissions from tmp/.

git returns an exit code of zero when the hook is missing execute permission. So commitizen isn't at fault.

I have create a PR to log git commit during the bump, but no worries if you aren't interested.

@bhelgs as the logging message is further improved through #571, do you think we still have anything to track on this one?

I think it can be closed now. thanks a lot!

El jue, 26 de may de 2022 a las 19:00:15 PM, bhelgs @.***> escribió:

/tmp directory, used by pytest, was mounted noexec option. ([CIS 1.1.2])(https://paper.bobylive.com/Security/CIS/CIS_Debian_Linux_8_Benchmark_v2_0_1.pdf)

I understand. Probably you should just decorate the test with xfail in those situations.