CommandNotFoundException error when running

    F:\home\dalai\llama\build\Release\main : The term 'F:\home\dalai\llama\build\Release\main' is not recognized as the name of a cmdlet, function, script file, or operable program. Check the spelling of the name, or if a path was included, verify that the path is correct and try again.
    At line:1 char:96
    + ... .Text.Encoding]::UTF8; F:\home\dalai\llama\build\Release\main --seed ...
    +                            ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        + CategoryInfo          : ObjectNotFound: (F:\home\dalai\llama\build\Release\main:String) [], CommandNotFoundException
        + FullyQualifiedErrorId : CommandNotFoundException

Getting the same issue here. I assume it's a recent bug from the latest release?

Edit: a quick examination shows something should have been built and wasn't

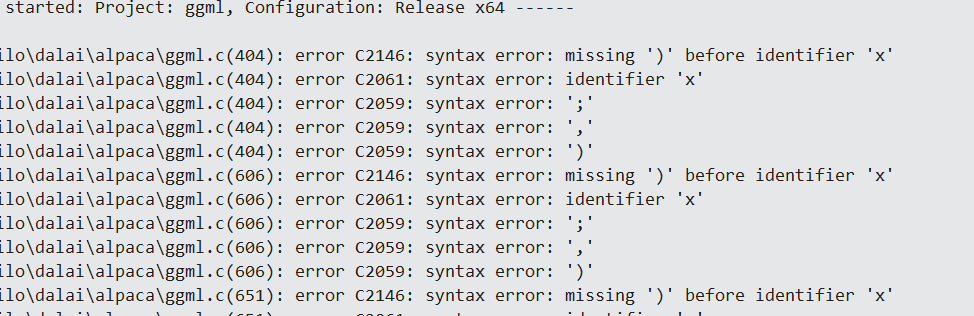

Edit 2: tried just opening in VS and compiling it but... that doesn't look right

The file itself looks right if I open it but I don't know enough C/C++ to figure out what's wrong

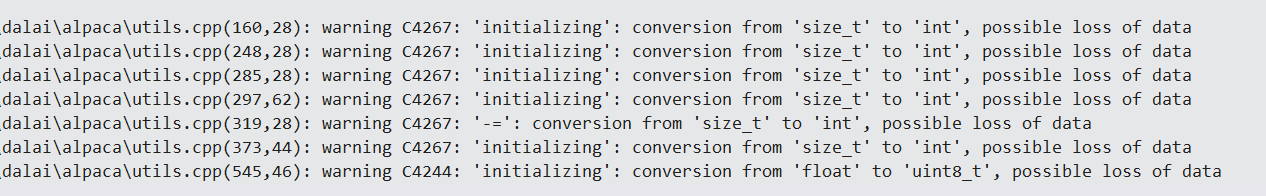

Edit 3: Solved it, you just gotta make sure it's compiled with Visual Studio 2022. Open the solution file in VS 2022 and hit "Build Solution". There are a bunch of warnings like these:

But that's par for the course whenever you compile somebody's C++. If something doesn't throw a bunch of warnings, it's probably a blank file or a hello world sample. Once the build finishes, just check that there are 3 files in dalai/alpaca/build/Release.
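If you'd rather not click through Explorer, the check can be scripted. This is only a rough sketch: the folder is the one named above (assumed to sit under your home directory), `main.exe` is assumed from the error message, which looks for `main` in a `build\Release` folder, and `list_build_outputs` / `has_main_binary` are made-up helper names, not part of dalai.

```python
from pathlib import Path


def list_build_outputs(release_dir):
    """Return the sorted names of regular files in release_dir (empty list if the folder is missing)."""
    path = Path(release_dir)
    if not path.is_dir():
        return []
    return sorted(p.name for p in path.iterdir() if p.is_file())


def has_main_binary(release_dir):
    """True if a file named main.exe (assumed name) exists in release_dir."""
    return "main.exe" in list_build_outputs(release_dir)


if __name__ == "__main__":
    # Assumed location, taken from the post above; adjust to wherever dalai lives on your machine.
    release = Path.home() / "dalai" / "alpaca" / "build" / "Release"
    files = list_build_outputs(release)
    print(f"{len(files)} file(s) in {release}: {files}")
    if not has_main_binary(release):
        print("main.exe is missing, so the build probably did not finish")
```

An empty list means the Release folder is missing or empty, which matches the "something should have been built and wasn't" situation from the first edit.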

I tried compiling the project, but unfortunately, it didn't work for me

Can you send me your main file?

Get these here:

https://github.com/antimatter15/alpaca.cpp/releases

Rename chat.exe to main.exe. If you just run it from the command line with the right parameters (e.g., --model "30B/ggml-model-q4_0.bin"), you'll get a chat, too! I found that even more convenient.
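For anyone who wants to script that, here is a rough sketch of the rename-and-run step. Treat it as an illustration only: `install_prebuilt`, `chat_command` and `run_chat` are hypothetical helper names, the destination folder is an assumption about where dalai looks for the binary, and `--model` is the only flag used because it's the only one mentioned above. It copies instead of renaming so the downloaded chat.exe stays in place.

```python
import shutil
import subprocess
from pathlib import Path


def install_prebuilt(chat_exe, release_dir):
    """Copy the downloaded chat.exe into release_dir under the name main.exe."""
    target_dir = Path(release_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "main.exe"
    shutil.copy2(chat_exe, target)
    return target


def chat_command(main_exe, model_path):
    """Build the argument list for starting a chat straight from the command line."""
    return [str(main_exe), "--model", str(model_path)]


def run_chat(main_exe, model_path):
    """Start the chat and wait until it exits; raises if the program returns an error code."""
    return subprocess.run(chat_command(main_exe, model_path), check=True)


# Example with placeholder paths (adjust to your own download and dalai folders):
#   main_exe = install_prebuilt("chat.exe", Path.home() / "dalai" / "alpaca" / "build" / "Release")
#   run_chat(main_exe, "30B/ggml-model-q4_0.bin")
```

Passing the arguments as a list avoids quoting problems if the model path contains spaces.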

If you have 32GB of system RAM you should be able to run the 30B parameter model. It's slow, but damn impressive for being a local ChatGPT!