cockpit


storage: Btrfs PoC

This is a PoC to see what exactly we need from UDisks2. First demo: https://www.youtube.com/watch?v=0b-Ov4Gbcr8

- [x] #16991

- [x] #16518

- [x] make block devices with btrfs on it point to the volume details page

- [x] move subvol polling into client, we need subvols for the overview already

- [x] btrfs entries in filesystem panel

- [x] handle subvol mounting option in Filesystem tab

- [x] creation dialog for subvols

- [x] root subvol is named "/", not "" as our code pretends.

- [x] mount/unmount without UDisks2

- [x] list subvolumes without UDisks2

- [x] create subvolumes without UDisks2

- [x] delete for volume

- [x] delete for subvolumes

- [x] poll immediately after mounting temporarily and after creating new subvol

- [x] sizes and usage

- [x] adding/removing disks

- [x] screencast

- [x] usage and teardown

- [x] subvolumes need to be deleted recursively, point out in teardown info

- [x] use "mount-monitor" always for mounting and unmounting

- [x] keep usage numbers updated

- [ ] mismounting help

- [ ] handle default subvol

- [ ] more actions for subvols (snapshot, rename, ...)

- [ ] rename for volume

- [ ] top-level "Create" button like we have for LVM etc?

- [ ] delay "page initialized" event until after first btrfs poll

- [ ] unify is_mounted and get_fstab_config calls, they both do the same thing basically

- [ ] qgroups

- [ ] ...

For UDisks2:

- [ ] MountPoints per subvol, or MountPointsWithOptions

- [ ] Fix result of GetSubvolumes, make libblockdev use "-a" with "btrfs subvol list".

- [ ] Expose default subvolume

- [ ] Targeted mounting and unmounting, "multi mounting": https://github.com/storaged-project/udisks/pull/938

- [ ] Fix subvolume creation, make UDisks2 find a suitable parent and create within that with a suitably adjusted relative path

- [ ] Same for deletion, find a mounted parent and delete within that.

- [ ] AddDevice and RemoveDevice need a udev trigger afterwards

- [ ] Retrieve qgroup usage numbers, if available.

- [ ] jobs for device removal? those can take a long time.

Other:

- [ ] systemd sometimes unmounts subvolumes when a device is removed from btrfs. Seems to be racy and probably has to do with some udev properties changing...

UDisks2 could fix this by temporarily mounting the "/" subvol somewhere and work with that. I think that's acceptable for modifications such as creating or removing subvolumes.

Another idea is that we only allow creation of subvols that are under subvols that are currently mounted, and explain that clearly to the user. Thus, UDisks2 would find a suitable mountpoint to use for the creation request, and fail if it can't find any. Cockpit would guide the user through this and make sure that the parent subvol is mounted when creating new ones.
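The "find a suitable mountpoint, or fail" idea could look roughly like the sketch below. It is only an illustration, not UDisks2 or Cockpit code: the function name `find_creation_path` and the `mounted` mapping (subvolume path to mount point) are hypothetical, and it assumes subvolume paths are given relative to the top-level subvolume and that no other mount shadows the chosen location.

```python
import posixpath
from typing import Optional


def find_creation_path(new_subvol: str, mounted: dict[str, str]) -> Optional[str]:
    """Return a filesystem path where `new_subvol` could be created, or None.

    `new_subvol` is relative to the top-level subvolume, e.g. "home/alice".
    `mounted` maps mounted subvolume paths ("/" for the top level) to their
    mount points. Both are assumptions made for this sketch.
    """
    target = new_subvol.strip("/")
    best = None  # (length of ancestor path, mount point, remaining relative path)
    for subvol, mount_point in mounted.items():
        prefix = subvol.strip("/")
        if prefix == "":
            rest = target  # the top-level subvolume contains everything
        elif target.startswith(prefix + "/"):
            rest = target[len(prefix) + 1:]
        else:
            continue  # this mounted subvolume is not an ancestor
        # Prefer the deepest mounted ancestor.
        if best is None or len(prefix) > best[0]:
            best = (len(prefix), mount_point, rest)
    if best is None:
        return None  # nothing suitable is mounted; the caller has to report that
    return posixpath.join(best[1], best[2])
```

With `{"root": "/", "home": "/home"}` mounted, a request for `home/alice` resolves to `/home/alice`, while `var/data` yields `None` because none of its ancestors is mounted.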

There doesn't seem to be any per-subvolume usage statistics unless quotas are enabled, so we can only show the stats for the whole volume.

We could guide people into enabling quotas if they are interested in per-subvolume usage data, but quotas seem to come with some caveats, so I am not sure how much we should make them look like a good idea.

PERFORMANCE IMPLICATIONS

When quotas are activated, they affect all extent processing, which takes a performance hit. Activation of qgroups is not recommended unless the user intends to actually use them.

STABILITY STATUS

The qgroup implementation has turned out to be quite difficult as it affects the core of the filesystem operation. Qgroup users have hit various corner cases over time, such as incorrect accounting or system instability. The situation is gradually improving and issues found and fixed.

I am starting to think that maybe we need an almost general filesystem browser here. Initially, only the root volume would be visible, but you can mount it and start browsing around. Any subvolume you find while browsing can be acted upon, and can also be mounted in its own right, at which point it would get its own top-level entry in the UI. Once some subvolumes have been mounted, the root can be unmounted if that is desired.

In other words, the details page would list "Mounted subvolumes", and each entry in that list can be expanded to reveal all contained subvolumes. (We wouldn't attempt to show the whole filesystem, but would cut off below subvolumes. But there might be directories, it's not just a flat list of subvolumes.)

"Mounted subvolumes" is a bit of a misnomer: we would list all subvolumes at the top-level that have an entry in fstab, regardless of whether they are mounted or not. "Mountable"? Also bad, hmm.

We could then use "btrfs subvol list -o", but we might still want to avoid making redundant calls (i.e. not list a subvol that was already included in the listing of a parent.)

One odd thing about this would be that a "Mounted" subvolume on the top-level can still not be deleted there. One would need to find it below its parent and delete it there.
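As a rough illustration of the expandable, hierarchical listing discussed above, the following sketch groups subvolume records by parent and renders them as an indented list. The record layout (`id`, `parent`, `path`) and the function names are assumptions for this example only. It also assumes that `parent` is the ID of the containing subvolume, with ID 5 being the top-level subvolume, and that paths are relative to the top level.

```python
from collections import defaultdict

TOP_LEVEL_ID = 5  # ID of the top-level subvolume created at mkfs time


def build_tree(subvols):
    """Group subvolume records (dicts with id, parent, path) by parent ID."""
    children = defaultdict(list)
    for sv in subvols:
        children[sv["parent"]].append(sv)
    return children


def render(children, parent=TOP_LEVEL_ID, depth=0):
    """Return indented lines, each subvolume followed by its nested subvolumes."""
    lines = []
    for sv in sorted(children.get(parent, []), key=lambda s: s["path"]):
        lines.append("  " * depth + sv["path"])
        lines.extend(render(children, sv["id"], depth + 1))
    return lines


# Hypothetical sample data, not taken from a real system.
sample = [
    {"id": 256, "parent": 5, "path": "root"},
    {"id": 257, "parent": 5, "path": "home"},
    {"id": 258, "parent": 256, "path": "root/var/lib/portables"},
]
print("\n".join(render(build_tree(sample))))
```

Because every subvolume appears exactly once under its parent, a single listing is enough to build the whole view, which is one way to avoid the redundant calls mentioned above.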

@mvollmer: Nice demo video, thanks! Some ramblings (sent on IRC, but you are probably already AFK)

There is no individual per-subvolume usage -- all of them share the same space. That's the very thing which makes btrfs so much better than partitions or even (non-thin) LVs. You don't need to think about/allocate sizes in advance (you can with quotas, but for almost all desktop/small server use cases you won't).

I.e. a subvol is meant to be much more like a magic directory than a partition.

The main reason for creating a subvolume instead of a directory is subvolumes can have qgroups enabled on them and can be snapshot.

Btrfs snapshots are open-ended compared to LVM (each LV is a peer, snapshots aren't mounted or active by default) and ZFS (all snapshots can be navigated with a clear hierarchy relative to the dataset). A btrfs snapshot can be located anywhere. It can be nested within the parent subvolume, or located in some completely different part of the file system. Location can be arbitrary. And they are read-write by default.

The GUI might consider multiple ways of organizing the same layout. Literal organization as it appears on disk is a given. But another view might show snapshots as (generation?) ordered children under their parent subvolume. Another view might show snapshots as clones ordered by how much or how recently they've changed from the original (e.g. docker/moby/podman using the btrfs graphdriver make prolific use of read-write snapshots). A common problem for container usage I'm seeing is the lack of autopruning in the docker/moby/podman world, and it could be useful for folks to see their container's subvolumes ordered by how stale they are. This is a fairly cheap thing to estimate based on various generations or transid types.

The main reason for creating a subvolume instead of a directory is subvolumes can have qgroups enabled on them and can be snapshot.

Also, they can be individually mounted via the "subvol=" mount option, and directories can't, right?

The GUI might consider multiple ways of organizing the same layout. Literal organization as it appears on disk is a given.

Yep.

But another view [...]

I don't think I am ready yet to think beyond the natural hierarchical view, but those seem like some good ideas, thanks!

Storage display in Cockpit is only very mildly hierarchical right now, the most we have are thin volumes within a thin pool within a volume group, I think. I tried to just have a list of subvolumes (and the user has to figure out the hierarchy by looking at the names), but that's clearly not sufficient.

I guess it's very obvious now how naive I am, so please let the ideas come, and please keep reminding me if I seem to forget about them. :-)

There is no individual per-subvolume usage -- all of them share the same space.

Yeah, but there still is the notion of exclusive vs shared usage, and it makes sense to show that (btrfs qgroup show). I think this makes sense even if we don't support qgroups themselves.

Something to consider is that libbtrfsutil exposes concepts and functionality that aren't necessarily fully accessible via the btrfs(8) CLI, which might be useful for libblockdev-btrfs and udisks-btrfs.

Also, they can be individually mounted via the "subvol=" mount option, and directories can't, right?

Yep; I can confirm: I'm actually using this feature (mounting a subvolume directly) on my Raspberry Pi 4 NAS.

And Fedora does this by default too, right?

Also, they can be individually mounted via the "subvol=" mount option, and directories can't, right?

Yep; I can confirm: I'm actually using this feature (mounting a subvolume directly) on my Raspberry Pi 4 NAS.

And Fedora does this by default too, right?

We do, yes.

Also, [subvolumes] can be individually mounted via the "subvol=" mount option, and directories can't, right?

Correct. Although it is in effect a bind mount which you can also do with directories. There is a bit of magic involved that permits subvol/subvolid mount options to locate the subvolume without "line of sight" to the subvolume; whereas with a bind mount, you need an absolute path for $olddir $newdir.

I would also like to see better support when you have btrfs on LVM. You cannot grow/shrink from Cockpit.

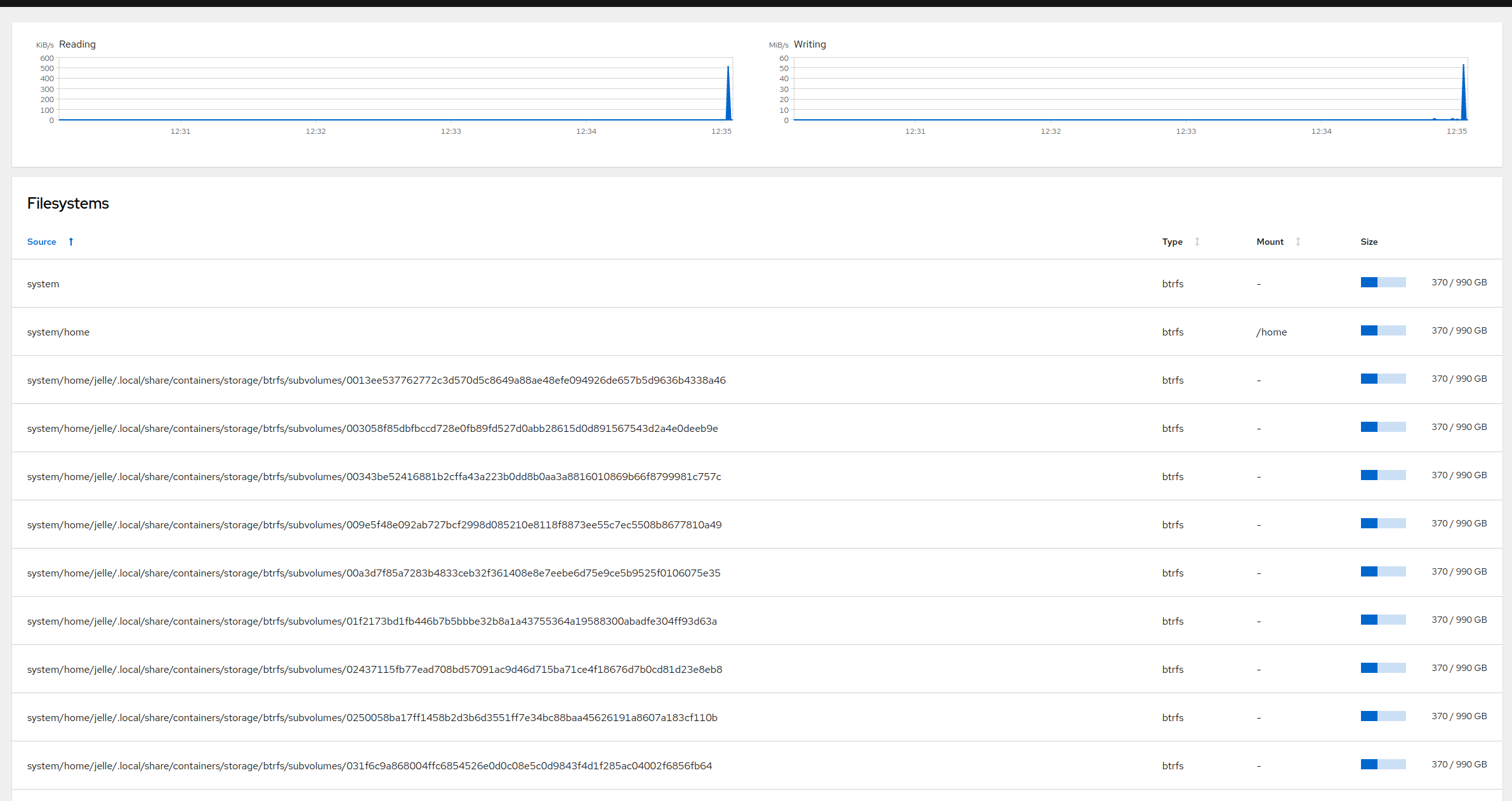

On a system with user podman containers the storage page is kinda overloaded with subvolumes:


I think we had a design for this, with the Storage page unified redesign, which I mocked up due to btrfs (and RAID in general).

We probably want to group them somehow, and possibly make them collapsible (depending on how they're shown). First recommendation is to work on the storage redesign, however, as that will "automatically" solve a lot of these kinds of problems.

I don't think it makes sense to show the mount options for btrfs, subvolumes are more like directories. Sure we should allow users to mount a subvolume but it's not something which happens a lot.

We should hide these by default:

ID 258 gen 113 top level 256 path var/lib/portables

ID 259 gen 113 top level 256 path var/lib/machines
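A minimal sketch of how lines in this format could be parsed and the two subvolumes above hidden by default. This is not Cockpit's actual code: the names `parse_subvol_list`, `visible` and `HIDDEN_BY_DEFAULT` are made up for illustration, and the parser only handles the plain `ID ... gen ... top level ... path ...` format shown here, not other output variants.

```python
import re

# Matches lines such as "ID 258 gen 113 top level 256 path var/lib/portables".
LINE_RE = re.compile(r"^ID (\d+) gen (\d+) top level (\d+) path (.+)$")

# Assumption for this sketch: the subvolumes named in the comment above.
HIDDEN_BY_DEFAULT = {"var/lib/portables", "var/lib/machines"}


def parse_subvol_list(output):
    """Turn subvolume listing text into a list of dicts, skipping other lines."""
    records = []
    for line in output.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            records.append({
                "id": int(match.group(1)),
                "gen": int(match.group(2)),
                "parent": int(match.group(3)),
                "path": match.group(4),
            })
    return records


def visible(records, show_hidden=False):
    """Filter out the subvolumes that are hidden by default."""
    return [r for r in records if show_hidden or r["path"] not in HIDDEN_BY_DEFAULT]
```

A "show all" toggle in the UI would then simply pass `show_hidden=True`.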

My rebase broke creating a FS:

SUBVOLS {"a280b604-6023-4ba5-bb9e-80d612f84b0d":null} [client.js:531:28](https://127.0.0.2:9091/cockpit/pkg/storaged/client.js)

Uncaught TypeError: block is undefined

Missed this comment from ~2 years ago

Also, they can be individually mounted via the "subvol=" mount option, and directories can't, right?

Behind the scenes this is a bind mount. So you could do the same thing as the subvol/subvolid mount option by doing a bind mount of a directory. In effect, all btrfs mounts are bind mounts, because without subvol/subvolid one is implied: the default subvolume. And there is always one: even if we never create subvolumes, there is one unnamed, unmovable subvolume created at mkfs time, a.k.a. the "top-level" subvolume, a.k.a. "subvolume id 5", aliased to "subvolume id 0".

I don't think it makes sense to show the mount options for btrfs, subvolumes are more like directories. Sure we should allow users to mount a subvolume but it's not something which happens a lot.

Related: https://btrfs.readthedocs.io/en/latest/Subvolumes.html#nested-subvolumes The flat case tends to call for explicit mounting of subvolumes (via fstab or some kind of as yet uninvented systemd discoverable subvolumes idea). The nested case tends to call for treating subvolumes as directories. I tend to see the nested style as a way of preventing (stopping) the recursion of snapshotting, but this is not a requirement. Anyway, the open-endedness of Btrfs does mean opinions on layouts are just that, opinions. Btrfs itself doesn't care that much.

I've found an issue with the way we discover subvolumes when you have multiple btrfs disks

Label: 'fedora' uuid: a280b604-6023-4ba5-bb9e-80d612f84b0d

Total devices 3 FS bytes used 2.28GiB

devid 1 size 11.92GiB used 5.92GiB path /dev/vda5

devid 2 size 10.00GiB used 0.00B path /dev/sda

devid 3 size 10.00GiB used 0.00B path /dev/sdb

In Cockpit we pick a block from uuids_btrfs_blocks which turns out to be /dev/sdb and has no mountpoint. Thus we only show the / and root and /home. (Those probably come from fstab)

This is probably a question of: what should udisks do?

/dev/vda5 has MountPoints ["/", "/home"]

/dev/sdb has MountPoints []

/dev/sda has MountPoints []
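One possible workaround on the Cockpit side, sketched here under assumptions: instead of relying on whichever block device happens to be picked, look at all devices of the volume. The record layout (`device`, `mount_points`) and both function names are hypothetical and only mirror the values listed above.

```python
def volume_mount_points(blocks):
    """Collect the mount points reported by any device of a btrfs volume."""
    seen = []
    for block in blocks:
        for mount_point in block["mount_points"]:
            if mount_point not in seen:
                seen.append(mount_point)
    return seen


def pick_block(blocks):
    """Prefer a device that reports mount points; fall back to the first one."""
    for block in blocks:
        if block["mount_points"]:
            return block
    return blocks[0] if blocks else None


# Values as reported above, in an order that puts an unmounted device first.
blocks = [
    {"device": "/dev/sdb", "mount_points": []},
    {"device": "/dev/sda", "mount_points": []},
    {"device": "/dev/vda5", "mount_points": ["/", "/home"]},
]
print(pick_block(blocks)["device"], volume_mount_points(blocks))
```

Whether this belongs in Cockpit or should be fixed in udisks is exactly the open question; the sketch only shows that the information is recoverable from the per-device data.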

Multidisk support has more issues, I would suggest not supporting it at all, at the start.

For example I add a new disk to my btrfs volume:

[root@fedora-38-127-0-0-2-2201 ~]# btrfs filesystem show

Label: 'fedora' uuid: a280b604-6023-4ba5-bb9e-80d612f84b0d

Total devices 3 FS bytes used 2.28GiB

devid 2 size 10.00GiB used 2.00GiB path /dev/sda

devid 3 size 10.00GiB used 2.56GiB path /dev/sdb

devid 4 size 10.00GiB used 0.00B path /dev/sdd

The disk is added, so I have more space now. But my data and metadata are not spread over the disks yet; I will need to balance the filesystem.

btrfs balance start /

[root@fedora-38-127-0-0-2-2201 ~]# btrfs filesystem show

Label: 'fedora' uuid: a280b604-6023-4ba5-bb9e-80d612f84b0d

Total devices 3 FS bytes used 2.28GiB

devid 2 size 10.00GiB used 576.00MiB path /dev/sda

devid 3 size 10.00GiB used 1.00GiB path /dev/sdb

devid 4 size 10.00GiB used 3.00GiB path /dev/sdd

Now my data is spread across disks. This is probably especially important for RAID setups.

Note: Udisks does not offer any balancing as of yet.
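Until balancing is available through an API, a UI could at least detect the situation from the `btrfs filesystem show` output above. The following is a minimal sketch that parses the `devid` lines and reports devices with nothing allocated on them yet. The function names are hypothetical, and the parser assumes the human-readable units seen in the output above.

```python
import re

UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}

# Matches e.g. "devid 2 size 10.00GiB used 576.00MiB path /dev/sda".
DEVID_RE = re.compile(
    r"devid\s+(\d+)\s+size\s+([\d.]+)(\w+)\s+used\s+([\d.]+)(\w+)\s+path\s+(\S+)"
)


def to_bytes(number, unit):
    """Convert a value like ("576.00", "MiB") to a byte count."""
    return int(float(number) * UNITS[unit])


def parse_devices(output):
    """Extract per-device size and allocation from `btrfs filesystem show` text."""
    devices = []
    for m in DEVID_RE.finditer(output):
        devices.append({
            "devid": int(m.group(1)),
            "size": to_bytes(m.group(2), m.group(3)),
            "used": to_bytes(m.group(4), m.group(5)),
            "path": m.group(6),
        })
    return devices


def unused_devices(devices):
    """Return paths of devices that have no space allocated on them yet."""
    return [d["path"] for d in devices if d["used"] == 0]
```

For the output taken right after adding the disk, `unused_devices` would report `/dev/sdd`, which could be used to hint that a balance is advisable.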

Partially implemented in https://github.com/cockpit-project/cockpit/pull/19690