cloudflared


Many tunnels on different servers are getting repeatedly disconnected: `Connection terminated error="connection with edge closed"`

Describe the bug

We're a software consultancy that uses many different hosting providers and platforms for a wide variety of client projects (AWS, DigitalOcean, Vultr, etc.). We use cloudflared tunnels for ingress for many of our apps, with probably 30+ tunnels running at any given time on servers around the world.

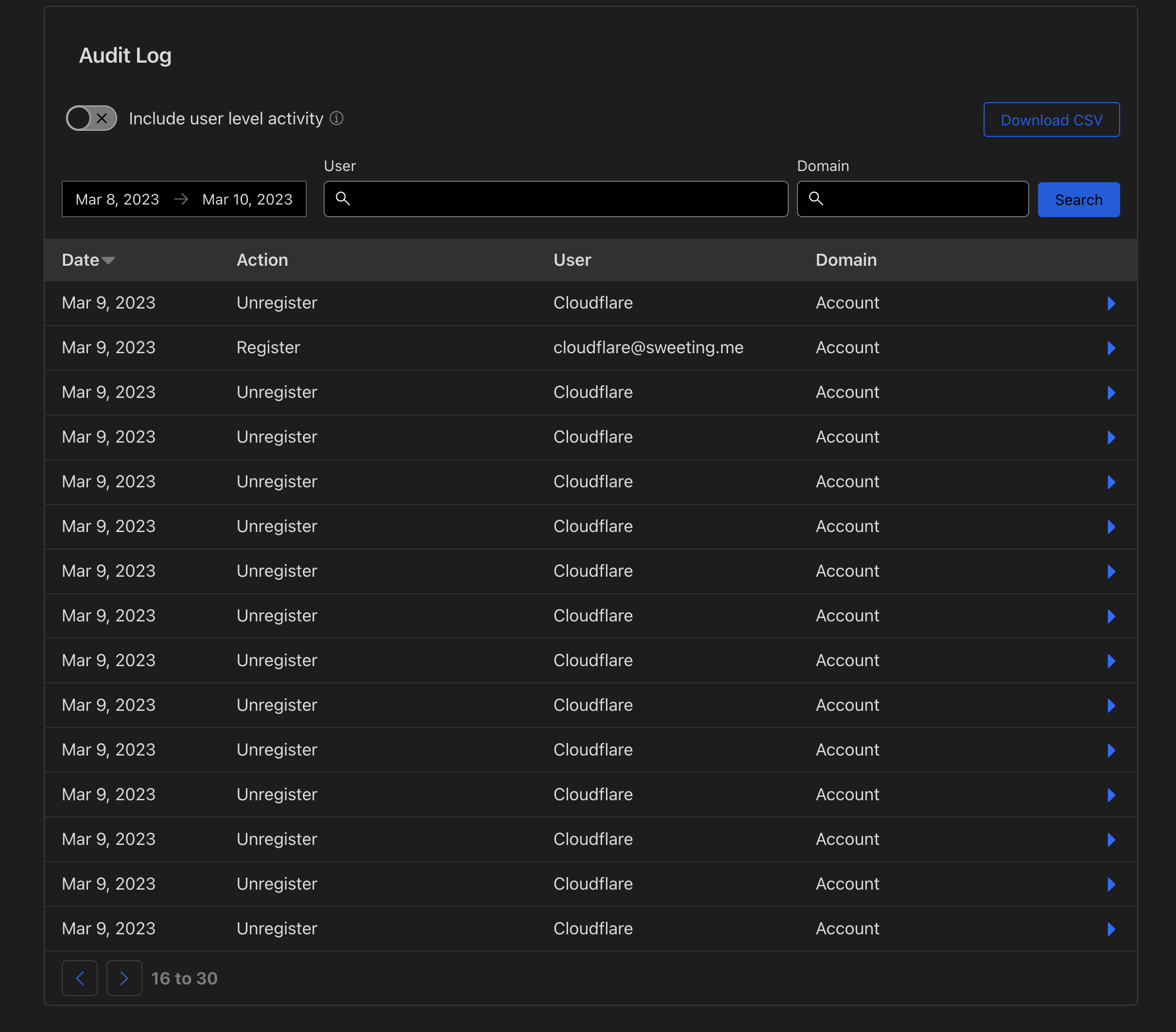

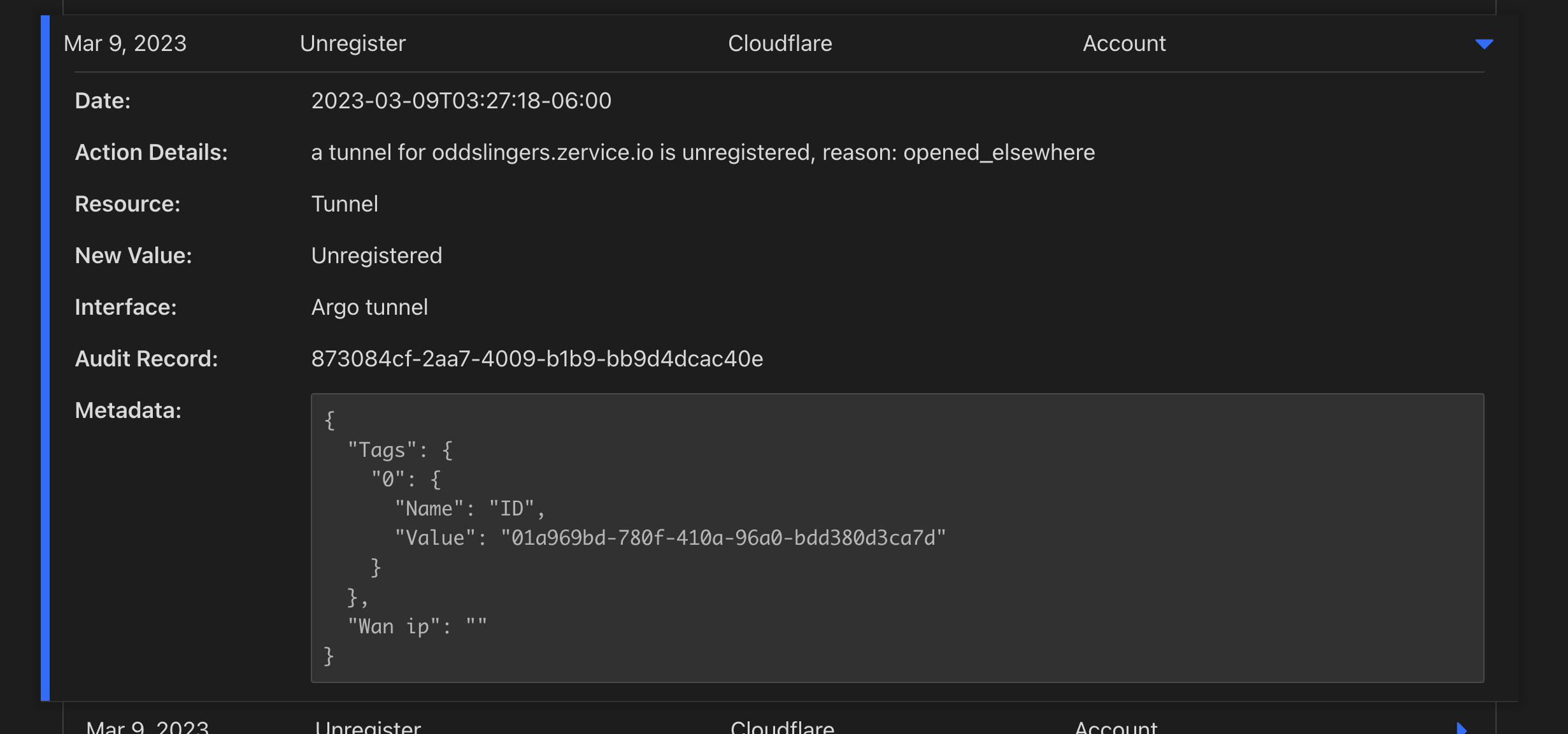

On March 9th, 2023, all of our Cloudflare tunnels disconnected at around the same time. According to the audit log in the Cloudflare admin dashboard (see screenshot below), all of the tunnels were de-registered due to "origin server went away" or "opened elsewhere" and then re-registered. Most came back up on their own after the outage, but a few never reconnected until we SSH'd in and restarted the cloudflared container. They all showed lots of this error in the console:

`Connection terminated error="connection with edge closed"`

(This may be a red herring or may be related; there are so many of these lines that it's hard to tell whether they're a cause or a symptom.)

No one had changed anything in our Cloudflare config on March 9th (or even logged into the admin dash prior to the incident), and no one had logged into the affected servers via SSH either, so we're 99% sure we didn't do anything to trigger this. It also occurred across multiple hosting providers, so we don't think it was an outage at DigitalOcean or any other single VPS provider.

...

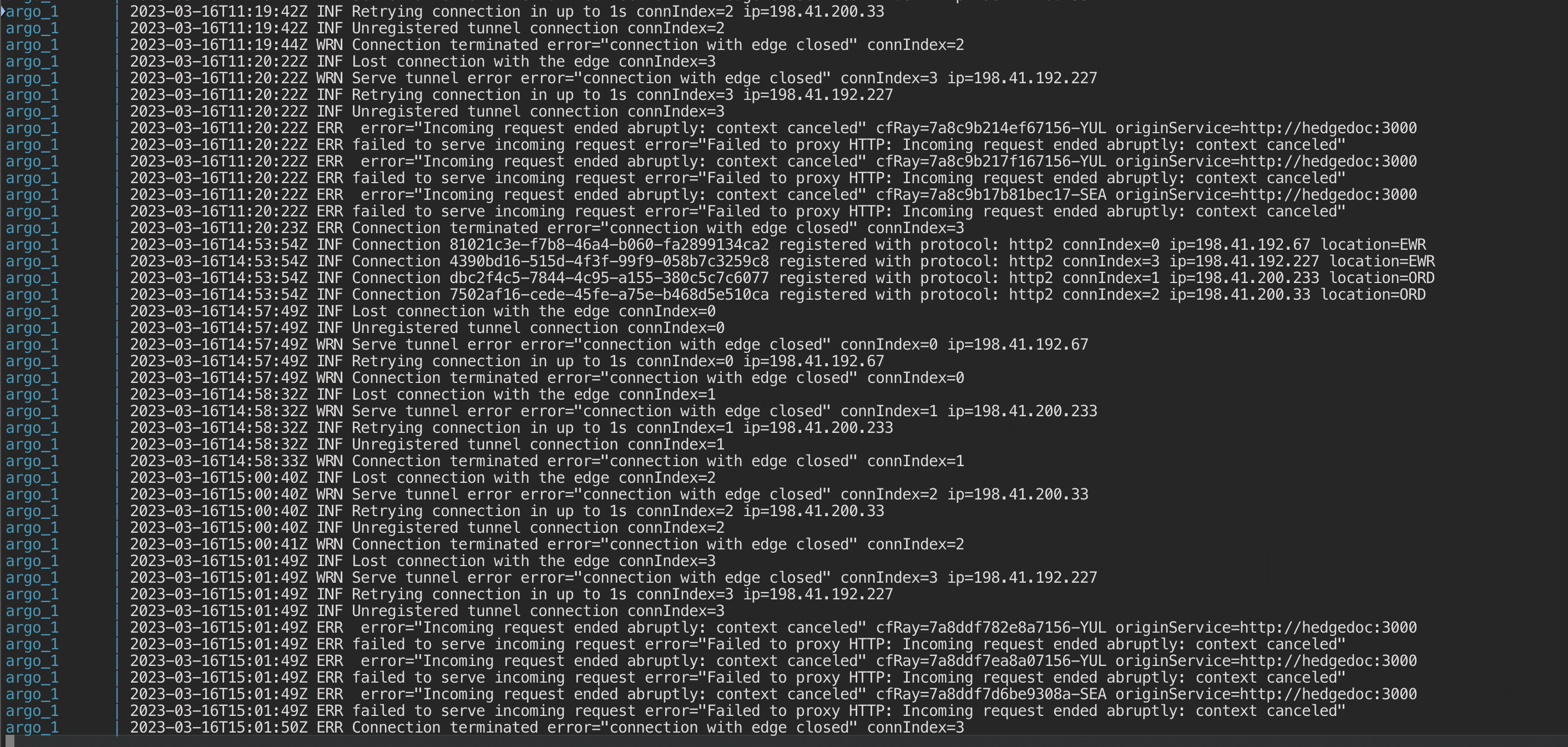

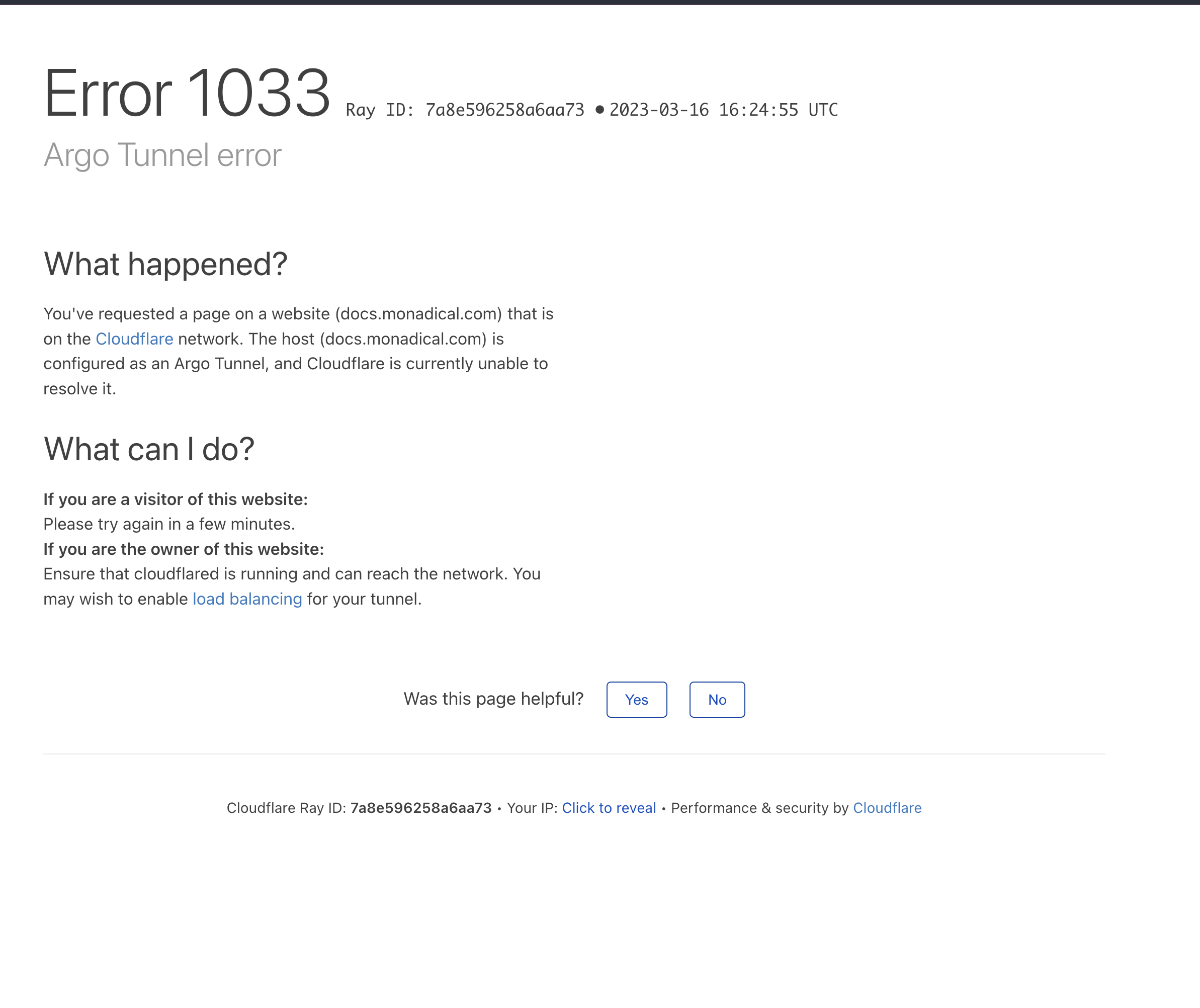

On March 15th (edit: and again on March 16th), one of these tunnels randomly started experiencing this error again, causing a critical service to go down for several hours. Nothing had changed on this server in that time, and restarting the tunnel fixed it, with no apparent underlying cause and no other applications on the server affected. The server has plenty of memory, CPU, and bandwidth, and none of the other services on the server experienced packet loss when the tunnel went down.

We're worried this will keep happening and we'll have to move off Cloudflare entirely, but would really like to avoid that as tunnels are quite nice for one-line docker-based deployment without needing config files/admin dash setup/persistent state/etc.

To Reproduce

Steps to reproduce the behavior:

- Run `cloudflare/cloudflared:2023.3.1` in Docker on a DigitalOcean VPS

- Wait (for an unpredictable amount of time)

- The tunnel randomly disconnects; restarting the tunnel container fixes it instantly

If it's an issue with Cloudflare Tunnel:

4. Tunnel ID: 2b2bd7dc-633f-4fae-b385-24423f0fbb9d

5. cloudflared config:

argo:
  image: cloudflare/cloudflared:
  command: tunnel --no-autoupdate --overwrite-dns --retries 15 --protocol http2 --url http://hedgedoc:3000 --hostname docs.zervice.io --name docs.zervice.io
  volumes:
    # cert.pem in this folder from https://dash.cloudflare.com/argotunnel
    - ./etc/cloudflared:/etc/cloudflared

Environment and versions

- OS: Ubuntu 22.04.2

- Architecture: amd64

- Version: 2023.3.1

Logs and errors

argo_1 | 2023-03-16T05:08:01Z INF Connection 94991c9c-8a41-497e-897a-360734fd564c registered with protocol: http2 connIndex=0 ip=198.41.192.67 location=EWR

argo_1 | 2023-03-16T05:12:07Z INF Lost connection with the edge connIndex=0

argo_1 | 2023-03-16T05:12:07Z WRN Serve tunnel error error="connection with edge closed" connIndex=0 ip=198.41.192.67

argo_1 | 2023-03-16T05:12:07Z INF Unregistered tunnel connection connIndex=0

argo_1 | 2023-03-16T05:12:07Z INF Retrying connection in up to 1s connIndex=0 ip=198.41.192.67

argo_1 | 2023-03-16T05:12:08Z WRN Connection terminated error="connection with edge closed" connIndex=0

argo_1 | 2023-03-16T05:12:09Z INF Connection 3163ea84-9031-4033-8e65-a00e72f17759 registered with protocol: http2 connIndex=0 ip=198.41.192.67 location=EWR

argo_1 | 2023-03-16T05:14:03Z INF Unregistered tunnel connection connIndex=0

argo_1 | 2023-03-16T05:14:03Z INF Lost connection with the edge connIndex=0

argo_1 | 2023-03-16T05:14:03Z WRN Serve tunnel error error="connection with edge closed" connIndex=0 ip=198.41.192.67

argo_1 | 2023-03-16T05:14:03Z INF Retrying connection in up to 1s connIndex=0 ip=198.41.192.67

argo_1 | 2023-03-16T05:14:04Z WRN Connection terminated error="connection with edge closed" connIndex=0

argo_1 | 2023-03-16T05:14:12Z INF Connection 914121fc-04f4-4634-922e-3c464328c9dd registered with protocol: http2 connIndex=0 ip=198.41.192.67 location=EWR

argo_1 | 2023-03-16T05:14:34Z INF Lost connection with the edge connIndex=0

argo_1 | 2023-03-16T05:14:34Z WRN Serve tunnel error error="connection with edge closed" connIndex=0 ip=198.41.192.67

argo_1 | 2023-03-16T05:14:34Z INF Retrying connection in up to 1s connIndex=0 ip=198.41.192.67

argo_1 | 2023-03-16T05:14:34Z INF Unregistered tunnel connection connIndex=0

argo_1 | 2023-03-16T05:14:35Z WRN Connection terminated error="connection with edge closed" connIndex=0

argo_1 | 2023-03-16T05:14:44Z INF Connection 17872c64-60d8-43dc-bdfd-8e2b54a1034a registered with protocol: http2 connIndex=0 ip=198.41.192.67 location=EWR

I have had similar experiences. Cloudflare Tunnel has been getting worse and worse, and I don't know what I could do about it other than migrate away from it. Today it has been a nightmare.

I have 5 servers with tunnels. Only 2 of them are dropping connections currently: one is dropping constantly and the other "only" every 15 minutes or so.

Server "A" tunnels the service www.example.com:80 (name changed), and that tunnel is constantly losing its connection. I tried moving the tunnel for this service to server "B" (on the same network, sending HTTP requests to server A). After that, the tunnel on B started to drop connections and the tunnel on A was fine. Then I switched back, and A started to drop connections while B was fine. So the issue followed the service/domain.

I tried pinging some Cloudflare IP addresses and got 0% packet loss. I also can't find any other problem on my servers; it's only cloudflared that is dropping connections, with "Lost connection with the edge" and 'Connection terminated error="connection with edge closed"'.

If this is just my server/service configuration, is there any information on what I should do to make cloudflared work better?

This is what happens all the time:

2023-03-16T14:51:58Z ERR Connection terminated error="connection with edge closed" connIndex=2

2023-03-16T14:54:35Z INF Connection dc1d923f-e423-4dd9-8980-458918aad271 registered with protocol: http2 connIndex=1 ip=198.41.200.63 location=HEL

2023-03-16T14:54:35Z INF Connection 0bc9e2bf-b6c1-4c3f-afb0-591089abfac9 registered with protocol: http2 connIndex=0 ip=198.41.192.27 location=FRA

2023-03-16T14:54:35Z INF Connection f78b3bf6-3ed2-4e42-8f8f-a1d26bc97e4e registered with protocol: http2 connIndex=2 ip=198.41.192.167 location=FRA

2023-03-16T14:54:35Z INF Connection 8b7e659d-9825-4055-acf5-b9c77d9f4600 registered with protocol: http2 connIndex=3 ip=198.41.200.113 location=HEL

2023-03-16T14:54:45Z INF Lost connection with the edge connIndex=3

2023-03-16T14:54:45Z WRN Serve tunnel error error="connection with edge closed" connIndex=3 ip=198.41.200.113

2023-03-16T14:54:45Z INF Retrying connection in up to 1s connIndex=3 ip=198.41.200.113

2023-03-16T14:54:45Z INF Unregistered tunnel connection connIndex=3

2023-03-16T14:54:46Z WRN Connection terminated error="connection with edge closed" connIndex=3

2023-03-16T14:55:37Z ERR error="Incoming request ended abruptly: context canceled" cfRay=7a8dd57ee81a0a44-ARN ingressRule=0 originService=http://www.example.com:80

2023-03-16T14:55:37Z ERR failed to serve incoming request error="Failed to proxy HTTP: Incoming request ended abruptly: context canceled"

2023-03-16T14:55:40Z ERR error="Incoming request ended abruptly: context canceled" cfRay=7a8dd5eb8f94c7da-TLL ingressRule=0 originService=http://www.example.com:80
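Since it is hard to tell from raw output like this which errors dominate, a small Python sketch can tally the distinct `error="..."` messages in a saved log. It assumes only the `error="..."` format visible in the lines above; the name `tally_errors` is hypothetical.

```python
import re
import sys
from collections import Counter

# Captures the quoted value of error="..." as printed in the cloudflared lines above.
ERROR_RE = re.compile(r'error="([^"]*)"')


def tally_errors(lines):
    """Count each distinct error="..." message across the given log lines."""
    counts = Counter()
    for line in lines:
        for message in ERROR_RE.findall(line):
            counts[message] += 1
    return counts


if __name__ == "__main__":
    # Usage: python tally_errors.py < cloudflared.log
    for message, count in tally_errors(sys.stdin).most_common():
        print(f"{count:6d}  {message}")
```

Grouping by message text makes it easier to see whether request-level errors such as `context canceled` only appear right after a `connection with edge closed`, or independently of it.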

Here you can see how often this happens:

2023-03-16T14:00:17Z INF Lost connection with the edge connIndex=1

2023-03-16T14:00:41Z INF Lost connection with the edge connIndex=0

2023-03-16T14:01:08Z INF Lost connection with the edge connIndex=2

2023-03-16T14:02:13Z INF Lost connection with the edge connIndex=0

2023-03-16T14:02:14Z INF Lost connection with the edge connIndex=1

2023-03-16T14:07:06Z INF Lost connection with the edge connIndex=3

2023-03-16T14:08:09Z INF Lost connection with the edge connIndex=2

2023-03-16T14:08:18Z INF Lost connection with the edge connIndex=1

2023-03-16T14:08:48Z INF Lost connection with the edge connIndex=0

2023-03-16T14:08:56Z INF Lost connection with the edge connIndex=3

2023-03-16T14:09:25Z INF Lost connection with the edge connIndex=2

2023-03-16T14:10:32Z INF Lost connection with the edge connIndex=1

2023-03-16T14:10:36Z INF Lost connection with the edge connIndex=0

2023-03-16T14:11:27Z INF Lost connection with the edge connIndex=1

2023-03-16T14:11:42Z INF Lost connection with the edge connIndex=0

2023-03-16T14:12:15Z INF Lost connection with the edge connIndex=3

2023-03-16T14:12:44Z INF Lost connection with the edge connIndex=2

2023-03-16T14:15:47Z INF Lost connection with the edge connIndex=3

2023-03-16T14:17:08Z INF Lost connection with the edge connIndex=3

2023-03-16T14:17:46Z INF Lost connection with the edge connIndex=2

2023-03-16T14:17:52Z INF Lost connection with the edge connIndex=0

2023-03-16T14:18:13Z INF Lost connection with the edge connIndex=3

2023-03-16T14:19:22Z INF Lost connection with the edge connIndex=3

2023-03-16T14:20:29Z INF Lost connection with the edge connIndex=2

2023-03-16T14:20:35Z INF Lost connection with the edge connIndex=0

2023-03-16T14:21:40Z INF Lost connection with the edge connIndex=1

2023-03-16T14:22:38Z INF Lost connection with the edge connIndex=0

2023-03-16T14:23:10Z INF Lost connection with the edge connIndex=2

2023-03-16T14:26:10Z INF Lost connection with the edge connIndex=3

2023-03-16T14:27:12Z INF Lost connection with the edge connIndex=2

2023-03-16T14:29:34Z INF Lost connection with the edge connIndex=1

2023-03-16T14:30:12Z INF Lost connection with the edge connIndex=0

...
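To put numbers on "how often this happens", here is a Python sketch that parses lines like the ones above and reports, for each `connIndex`, how many "Lost connection with the edge" events occurred and the mean gap between consecutive ones. It assumes the exact timestamp and message format shown in these logs (optionally with an `argo_1 |` prefix, as in docker-compose output); `disconnect_stats` is a hypothetical name.

```python
import re
import sys
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Matches e.g. "2023-03-16T14:00:17Z INF Lost connection with the edge connIndex=1",
# with or without a docker-compose prefix such as "argo_1 | ".
LOST_RE = re.compile(
    r"(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}Z) INF Lost connection with the edge connIndex=(\d+)"
)


def disconnect_stats(lines):
    """Return {connIndex: (event_count, mean_gap_seconds or None)}."""
    times = defaultdict(list)
    for line in lines:
        match = LOST_RE.search(line)
        if match:
            stamp = datetime.strptime(match.group(1), "%Y-%m-%dT%H:%M:%SZ")
            times[int(match.group(2))].append(stamp)
    stats = {}
    for index, stamps in sorted(times.items()):
        stamps.sort()
        # Seconds between consecutive disconnects on the same connection.
        gaps = [(later - earlier).total_seconds() for earlier, later in zip(stamps, stamps[1:])]
        stats[index] = (len(stamps), mean(gaps) if gaps else None)
    return stats


if __name__ == "__main__":
    # Usage: python disconnect_stats.py < cloudflared.log
    for index, (count, gap) in disconnect_stats(sys.stdin).items():
        gap_text = f"{gap:.0f}s" if gap is not None else "n/a"
        print(f"connIndex={index}: {count} disconnects, mean gap {gap_text}")
```

Running it over the same time window on an affected and an unaffected tunnel gives a like-for-like comparison instead of eyeballing the log.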

I have also come across this issue today. We have 4 tunnels set up, with 2 tunnels per Cloudflare Load Balancer. This morning at 02:13:17 UTC, both of the tunnels connected to the same Load Balancer started dropping connections. Restarting cloudflared whenever it got stuck during the day solved the problem for a while. However, we are still getting disconnections, whilst the other 2 tunnels are running perfectly well.

2023-03-16T15:38:45Z INF Connection 3964d3a4-9a49-41ea-8c60-0b69f1d5eb3d registered connIndex=2 location=MAN

2023-03-16T15:38:49Z INF Lost connection with the edge connIndex=1

2023-03-16T15:38:50Z ERR Serve tunnel error error="connection with edge closed" connIndex=1

2023-03-16T15:38:50Z INF Retrying connection in up to 1s seconds connIndex=1

2023-03-16T15:38:50Z INF Unregistered tunnel connection connIndex=1

2023-03-16T15:38:51Z INF Connection 6c6001a4-e4c6-48df-83c8-c34f1f27061c registered connIndex=1 location=LHR

2023-03-16T15:39:31Z INF Lost connection with the edge connIndex=1

2023-03-16T15:39:31Z ERR Serve tunnel error error="connection with edge closed" connIndex=1

2023-03-16T15:39:31Z INF Retrying connection in up to 1s seconds connIndex=1

2023-03-16T15:39:31Z INF Unregistered tunnel connection connIndex=1

2023-03-16T15:39:31Z INF Connection 7639cecc-309c-4c3f-90bb-79f071705a19 registered connIndex=1 location=LHR

2023-03-16T15:41:35Z INF Lost connection with the edge connIndex=2

2023-03-16T15:41:35Z ERR Serve tunnel error error="connection with edge closed" connIndex=2

2023-03-16T15:41:35Z INF Retrying connection in up to 1s seconds connIndex=2

2023-03-16T15:41:35Z INF Unregistered tunnel connection connIndex=2

2023-03-16T15:41:36Z INF Connection 712bd754-a544-4278-980c-294211355e51 registered connIndex=2 location=MAN

2023-03-16T15:41:54Z INF Lost connection with the edge connIndex=0

2023-03-16T15:41:54Z INF Unregistered tunnel connection connIndex=0

2023-03-16T15:41:54Z ERR Serve tunnel error error="connection with edge closed" connIndex=0

2023-03-16T15:41:54Z INF Retrying connection in up to 1s seconds connIndex=0

2023-03-16T15:41:56Z INF Connection 2074d8a7-5085-4a3a-9cb8-a5027904ac1e registered connIndex=0 location=MAN

2023-03-16T15:42:21Z INF Lost connection with the edge connIndex=0

2023-03-16T15:42:21Z ERR Serve tunnel error error="connection with edge closed" connIndex=0

2023-03-16T15:42:21Z INF Retrying connection in up to 1s seconds connIndex=0

2023-03-16T15:42:21Z INF Unregistered tunnel connection connIndex=0

2023-03-16T15:42:23Z INF Connection 2f7b16ed-3923-4acf-a311-f7db26f1b74f registered connIndex=0 location=MAN

2023-03-16T15:42:35Z INF Lost connection with the edge connIndex=1

2023-03-16T15:42:35Z ERR Serve tunnel error error="connection with edge closed" connIndex=1

2023-03-16T15:42:35Z INF Unregistered tunnel connection connIndex=1

2023-03-16T15:42:35Z INF Retrying connection in up to 1s seconds connIndex=1

2023-03-16T15:42:37Z INF Connection 05d19c44-4f4b-49e5-af09-258aff52ca54 registered connIndex=1 location=LHR

2023-03-16T15:42:38Z INF Lost connection with the edge connIndex=2

2023-03-16T15:42:38Z ERR Serve tunnel error error="connection with edge closed" connIndex=2

2023-03-16T15:42:38Z INF Retrying connection in up to 1s seconds connIndex=2

2023-03-16T15:42:38Z INF Unregistered tunnel connection connIndex=2

2023-03-16T15:42:40Z INF Connection 21ef6af2-e65c-4e34-ade5-07e5d4b1f723 registered connIndex=2 location=MAN

2023-03-16T15:43:07Z INF Lost connection with the edge connIndex=1

2023-03-16T15:43:07Z ERR Serve tunnel error error="connection with edge closed" connIndex=1

2023-03-16T15:43:07Z INF Retrying connection in up to 1s seconds connIndex=1

2023-03-16T15:43:07Z INF Unregistered tunnel connection connIndex=1

2023-03-16T15:43:07Z INF Connection 9f30e60d-ef85-4b57-a17f-44a371e443a5 registered connIndex=1 location=LHR

2023-03-16T15:44:26Z INF Lost connection with the edge connIndex=1

2023-03-16T15:44:26Z ERR Serve tunnel error error="connection with edge closed" connIndex=1

2023-03-16T15:44:26Z INF Retrying connection in up to 1s seconds connIndex=1

2023-03-16T15:44:26Z INF Unregistered tunnel connection connIndex=1

2023-03-16T15:44:26Z INF Lost connection with the edge connIndex=0

2023-03-16T15:44:26Z ERR Serve tunnel error error="connection with edge closed" connIndex=0

2023-03-16T15:44:26Z INF Retrying connection in up to 1s seconds connIndex=0

2023-03-16T15:44:26Z INF Unregistered tunnel connection connIndex=0

2023-03-16T15:44:27Z INF Connection cd3e7c11-d9d4-48a2-9ae7-62e030f16414 registered connIndex=0 location=MAN

2023-03-16T15:44:28Z INF Connection 0659fcdc-f962-416f-b219-a9a0fe4a0501 registered connIndex=1 location=LHR

2023-03-16T15:44:35Z INF Lost connection with the edge connIndex=0

2023-03-16T15:44:35Z ERR Serve tunnel error error="connection with edge closed" connIndex=0

2023-03-16T15:44:35Z INF Unregistered tunnel connection connIndex=0

2023-03-16T15:44:35Z INF Retrying connection in up to 1s seconds connIndex=0

2023-03-16T15:44:37Z INF Connection d95c356e-c03f-4a7b-a821-0306ba8d8cd9 registered connIndex=0 location=MAN

2023-03-16T15:45:06Z INF Lost connection with the edge connIndex=2

2023-03-16T15:45:06Z ERR Serve tunnel error error="connection with edge closed" connIndex=2

2023-03-16T15:45:06Z INF Retrying connection in up to 1s seconds connIndex=2

2023-03-16T15:45:06Z INF Unregistered tunnel connection connIndex=2

2023-03-16T15:45:08Z INF Connection e987ac3a-a92a-4e5b-81cc-65ac23dac43a registered connIndex=2 location=MAN

2023-03-16T15:45:15Z INF Lost connection with the edge connIndex=1

2023-03-16T15:45:15Z ERR Serve tunnel error error="connection with edge closed" connIndex=1

2023-03-16T15:45:15Z INF Unregistered tunnel connection connIndex=1

2023-03-16T15:45:15Z INF Retrying connection in up to 1s seconds connIndex=1

2023-03-16T15:45:15Z INF Connection 7f250869-5660-4171-8479-f864e0c09a33 registered connIndex=1 location=LHR

Confirming that this issue is persisting for us today as well: our tunnels are dropping constantly, and the only thing that works is restarting cloudflared in a loop every hour.

Hey guys... So sorry that you are having these problems. I am looking at this right now and trying to get to the bottom of this.

For quick remediation: I notice that 2 of the 3 reports use the http2 protocol (I'm not sure what @crackalak is connecting with). Can I recommend you change the protocol to quic and see whether this alleviates your problems for now?

If you could also share your tunnel IDs, that would help us look them up faster. (I have @pirate's, and I do see that tunnel disconnecting from our servers in the last 30-45 mins. At the moment I cannot see anything on our side causing this, but I'm still investigating.)

Also, having a timeline would help me zone in on what is causing this. @pirate, am I right that you have only been seeing this problem since yesterday, or have you had it before?

Others, feel free to chime in as well.

QUIC has never worked for us since it was introduced; it never even connects (we've always used HTTP2 for this reason). Possibly the upstream service doesn't support QUIC, or maybe a middlebox is blocking it?

The first disconnection was on March 9th across all tunnels; then everything worked from March 9th to March 15th. On the night of March 15th (EST) it dropped, and it has continued dropping repeatedly through the 16th, 17th, 18th, 19th, etc.

Thanks for the rapid help! We love tunnels in general, props to the whole team that builds/maintains them. Hope we can get this resolved :)

I've tried downgrading to 2022.12.1 and it doesn't appear to help; we're still getting `Lost connection with the edge connIndex=1`, `Unregistered tunnel connection connIndex=1`, and `Serve tunnel error error="connection with edge closed" connIndex=1 ip=198.41.200.53` errors.

@sudarshan-reddy I was also connecting with http2. I have now updated my 2 affected tunnels to 2023.3.1 and connected using quic. All is working well, with no errors logged in the 15 minutes it has been running.

@sudarshan-reddy Thank you for looking into this issue. I actually saw these problems as early as 2023-02-22 (about 1 drop per hour), but the frequency has increased since then. Now it's about 1 drop per minute. I have never managed to connect with quic: "failed to dial to edge with quic: timeout: no recent network activity"

Tunnel ID: 0ad3c7c4-71f7-4384-b788-50ea5100c2b0

Hey friends... I looked for anomalies on our servers to see whether we were dropping these connections, and it does not seem so. I'd also note that Tunnels are designed to connect to different locations precisely to guard against network partitions.

- We haven't made any releases in the past week that would suddenly cause our systems to behave this way.

- @pirate also kindly tested this on an older version of cloudflared to confirm they were still having the issue.

Can we also check whether something on the network on your side may be causing this? I only ask because we have 1000s of tunnels connected right now that are not seeing these disconnects (something I just verified thanks to these reports).

I am especially concerned with @terabitti seeing these reconnects in increasing frequency.

Where are y'all running your Tunnels? (I see @pirate runs them on DigitalOcean. Are those the only tunnels having trouble connecting right now?)

We are also seeing connections dropping increasingly from a few Vultr servers.

I have a question though: in theory tunnels are resilient to intermittent connections though right? Even if we did have packet loss (which I think is unlikely across multiple providers), wouldn't it reconnect automatically?

Because restarting the cloudflared tunnel fixes it instantly, shouldn't the retry mechanism within cloudflared already be able to restart connections after interruption?
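For reference, the `Retrying connection in up to 1s` lines are consistent with a randomized (jittered) delay between reconnect attempts. The Python sketch below shows the general pattern of a capped, full-jitter exponential backoff retry loop. It is not cloudflared's implementation: `connect` is a hypothetical callable standing in for one connection attempt, the `base`/`cap` values are assumptions, and `retries=15` merely mirrors the `--retries 15` flag used in the config.

```python
import random
import time


def backoff_delays(attempts, base=1.0, cap=60.0, rng=random.random):
    """Full-jitter exponential backoff: delay n is drawn from [0, min(cap, base * 2**n))."""
    return [rng() * min(cap, base * 2 ** n) for n in range(attempts)]


def connect_with_retries(connect, retries=15, base=1.0, cap=60.0,
                         sleep=time.sleep, rng=random.random):
    """Call connect() once, then retry up to `retries` times on ConnectionError.

    `connect` is a hypothetical callable standing in for one connection attempt.
    Returns whatever connect() returns; re-raises the last error if every attempt fails.
    """
    last_error = None
    # The first attempt happens immediately; each retry waits for its backoff delay.
    for delay in [0.0] + backoff_delays(retries, base, cap, rng):
        if delay > 0:
            sleep(delay)
        try:
            return connect()
        except ConnectionError as err:
            last_error = err
    raise last_error
```

A loop like this only re-establishes the connection from inside the same process, so any condition that persists in that process survives every retry, whereas restarting the container discards it. That difference is one possible reading of why a restart helps when the built-in retries do not.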

@sudarshan-reddy you can see from my logs that we were having disconnections from both MAN and LHR locations. We are hosting on Azure Kubernetes Service.

I'm using UpCloud. I have fully restarted my VMs and still have the same problem. There’s actually another tunnel (731acbcf-4a8d-41a0-8f1f-7b326c6e2015) having connection drops, but not as frequently. I also have three other servers/tunnels without these problems (one of them is very similar to the 2 problematic ones).

I made some gateway changes to my server(s), and that allowed me to connect using QUIC. It certainly looks like this helped with the connection issues.

I can say that I haven't made any server/network changes recently (before the changes mentioned above), so I still have no idea why this started to happen now (and so badly).

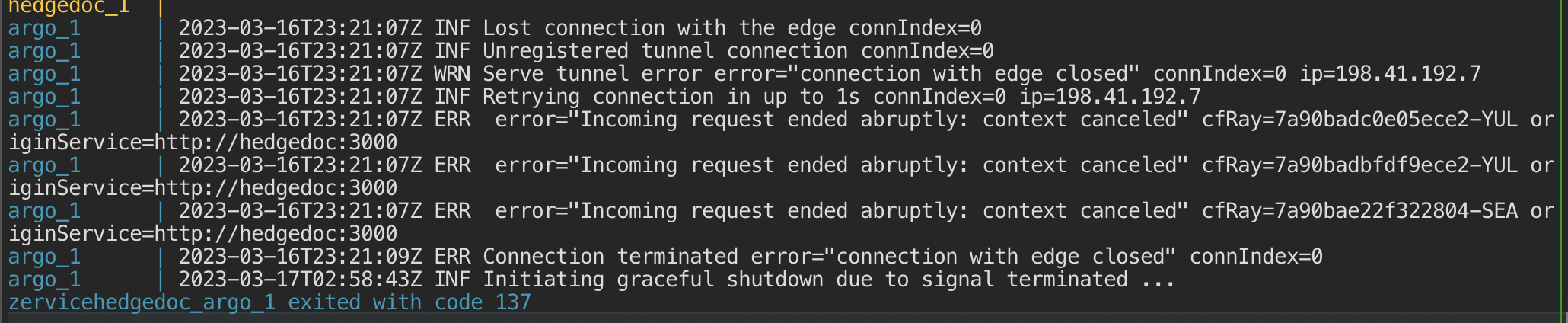

Unfortunately QUIC doesn't work for our server (it never manages to connect), and we're still seeing the disconnections every few hours here (ignore the last line about it exiting; that was me restarting it to get it working again).

Before switching to QUIC I was getting ”Lost connection with the edge” about every 2 minutes. After switching to QUIC I have seen ”timeout: no recent network activity” only a few times in 6-7 hours. That’s a huge change, and I'm not worried about those infrequent errors.

I still have one http2 tunnel (not migrated to QUIC yet) throwing ”Lost connection with the edge” about 10 times per hour (increased from yesterday).

It wasn’t very straightforward to get QUIC working on our end (had to change networks/gateways), but fortunately it was possible. I have to migrate other servers to this too.

I have a question though: in theory tunnels are resilient to intermittent connections though right? Even if we did have packet loss (which I think is unlikely across multiple providers), wouldn't it reconnect automatically?

Indeed, this is the expectation. I see some re-registers in your initial logs:

argo_1 | 2023-03-16T05:14:44Z INF Connection 17872c64-60d8-43dc-bdfd-8e2b54a1034a registered with protocol: http2 connIndex=0 ip=198.41.192.67 location=EWR

Do you not see this behaviour anymore? Or are you saying this registered connection doesn't help?

If it's the latter, I'm inclined to investigate in the direction of something on the client side stopping this traffic.

I noticed you mentioned you are seeing this on your Vultr services. Do you see it on other deployments too, or is it isolated to those?

Thanks @terabitti . That really helps me zone in on the blast radius.

Just circling back here to let you know we are still investigating this issue.

The challenge on our side is that it's isolated to a few reporters (and to http2 protocol based tunnels). That said, if you are willing to experiment, can you try:

- Creating and connecting a tunnel that doesn't see any traffic on the same machine where you see tunnel disconnects. Do both tunnels restart or is it only tunnels that see traffic?

- Also, what are your traffic patterns like? Did you see a sudden increase in traffic around this time?

Also @terabitti

After switching to QUIC I have seen ”timeout: no recent network activity” only a few times in 6-7 hours.

did this cause downtime or was it just one of our redundant connections?

@sudarshan-reddy No downtime. These connection timeouts happen occasionally with QUIC, but there was no complete downtime, at least none long enough for our monitoring system to catch.

@pirate can you try running cloudflared in host level and not docker? just to check whether it's a docker issue or not.

Speaking of tunnels, I have no issues running http2 on DigitalOcean, Linode, Vultr, or even dedicated server providers.

Especially with cloud providers that have peering/PNI with Cloudflare, connections should be fine.

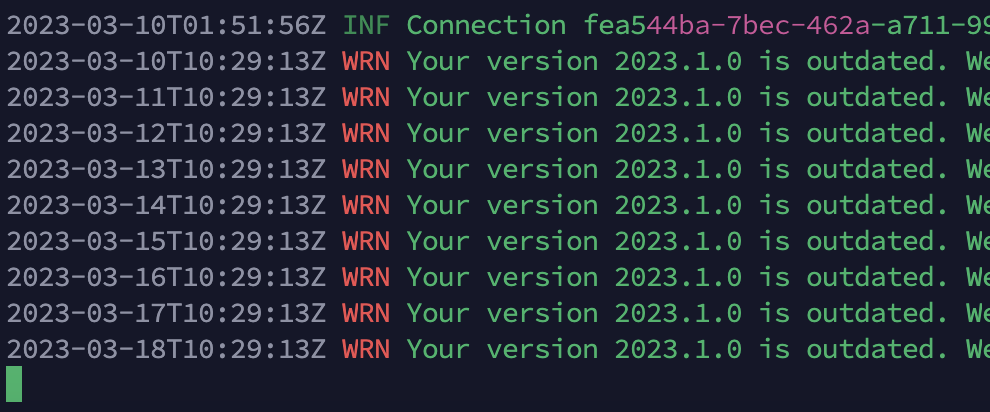

We are also experiencing this with Docker-based tunnels running http2. We are on 2023.3.1. It started occurring on 17 March, when we were on 2023.1.0.

- Creating and connecting a tunnel that doesn't see any traffic on the same machine where you see tunnel disconnects. Do both tunnels restart or is it only tunnels that see traffic?

~~Haven't been able to reproduce it like this with a fresh tunnel~~. I re-created one of the failing tunnels by deleting its <uuid>.json file and running it with a new --name and the same parameters, and it started failing in the same way again.

- Also, what are your traffic patterns like? Did you see a sudden increase in traffic around this time?

We have not seen any sudden increase in traffic as far as I can tell, and we are also getting disconnects on some fairly low-traffic tunnels. I also confirmed that our http2 traffic patterns didn't change around when the tunnels started failing, and we didn't update any of our apps around that time. I really don't think this is a traffic-pattern issue or a peering/connection issue, as restarting a failing tunnel fixes it immediately. It takes anywhere from minutes to an hour or more for it to fail again after being restarted, which feels like a memory leak, an upstream/middlebox issue, or some other transient condition in the runtime (rather than on the network).

Is there any advanced debug output or logging I can enable to see exactly why it's losing connection with the edge and failing?

@pirate can you try running cloudflared in host level and not docker? just to check whether it's a docker issue or not.

I tried just to test and wasn't able to reproduce it without docker, but I wasn't able to reproduce it with docker in some cases either. Practically speaking we can't run our deployments in prod without docker so it would be a dealbreaker for us to remove it :/

Here are docker-compose.yml instructions to reproduce exactly the setup we have (that's failing consistently):

(replace docs.example.com with a domain you control on cloudflare)

$ mkdir -p cloudflared_failing_test/data_cloudflared && cd cloudflared_failing_test

# create ./docker-compose.yml & insert yaml from below

# open https://dash.cloudflare.com/argotunnel and download docs.example.com cert to `./data_cloudflared/cert.pem`

$ docker-compose pull

$ docker-compose up

# ... after some time you see this in the argo output:

argo_1 | 2023-03-16T16:51:20Z INF Lost connection with the edge connIndex=2

argo_1 | 2023-03-16T16:51:20Z WRN Serve tunnel error error="connection with edge closed" connIndex=2 ip=198.41.200.23

argo_1 | 2023-03-16T16:51:20Z INF Retrying connection in up to 1s connIndex=2 ip=198.41.200.23

argo_1 | 2023-03-16T16:51:20Z ERR error="Incoming request ended abruptly: context canceled" cfRay=7a8e7ffdd807e8ed-DFW originService=http://hedgedoc:3000

argo_1 | 2023-03-16T16:51:20Z INF Unregistered tunnel connection connIndex=2

argo_1 | 2023-03-16T16:51:21Z WRN Connection terminated error="connection with edge closed" connIndex=2

# restarting cloudflared fixes it instantly

$ docker-compose restart argo

# after 30~60min it fails again, so we solved it for now like this...

$ while true; do sleep 3600; docker-compose restart argo; done

docker-compose.yml:

version: '3.9'

services:
  postgres:
    image: postgres:13-alpine
    expose:
      - 5432
    environment:
      - POSTGRES_DB=hedgedoc
      - POSTGRES_USER=hedgedoc
      - POSTGRES_PASSWORD=hedgedoc
    volumes:
      - ./data_postgres:/var/lib/postgresql/data
    cpus: 2
    mem_limit: 4096m
    restart: on-failure

  hedgedoc:
    # https://docs.hedgedoc.org/setup/docker/
    image: quay.io/hedgedoc/hedgedoc:1.9.6
    expose:
      - 3000
    environment:
      - CMD_DB_URL=postgres://hedgedoc:hedgedoc@postgres:5432/hedgedoc
      - CMD_IMAGE_UPLOAD_TYPE=filesystem
      - CMD_DEFAULT_PERMISSION=locked
      - CMD_ALLOW_FREEURL=true
      - CMD_ALLOW_ANONYMOUS=true
      - CMD_ALLOW_ANONYMOUS_EDITS=true
      - CMD_REQUIRE_FREEURL_AUTHENTICATION=true
      - CMD_DOMAIN=docs.example.com
      - CMD_ALLOW_ORIGIN=docs.example.com,localhost
      - CMD_PROTOCOL_USESSL=true
      - CMD_URL_ADDPORT=false
      - CMD_CSP_ENABLE=false
      - CMD_COOKIE_POLICY=lax
      - CMD_USECDN=false
      - CMD_ALLOW_GRAVATAR=true
      - CMD_SESSION_SECRET=1234deadbeefdeadbeefdeadbeefdead1234
    volumes:
      - ./data_uploads:/hedgedoc/public/uploads
    depends_on:
      - postgres
    healthcheck:
      test: ["CMD", "node", "-e", "require('https').get('https://docs.example.com/slide-example', (resp) => process.exit(resp.statusCode != 200))"]
    cpus: 2
    mem_limit: 4096m
    restart: on-failure

  argo:
    # https://developers.cloudflare.com/cloudflare-one/connections/connect-apps/install-and-setup/tunnel-guide/local/local-management/arguments/
    # :2022.12.1 also fails
    image: cloudflare/cloudflared:2023.3.1
    command: tunnel --no-autoupdate --overwrite-dns --retries 15 --protocol http2 --url http://hedgedoc:3000 --hostname docs.example.com --name docs.example.com
    volumes:
      # download a cert.pem into this folder from https://dash.cloudflare.com/argotunnel
      - ./data_cloudflared:/etc/cloudflared
    cpus: 2
    mem_limit: 4096m
    restart: on-failure
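As an alternative to the blind hourly restart loop in the steps above, a small watchdog could restart the `argo` service only when disconnects cluster. The Python sketch below follows `docker-compose logs` for that service and runs `docker-compose restart argo` when more than a threshold number of "Lost connection with the edge" lines appear within a sliding window. The threshold of 10 events in 10 minutes is an arbitrary assumption, and `RestartPolicy`/`watch` are hypothetical names; tune them to whatever a stuck tunnel actually looks like in your logs.

```python
import re
import subprocess
import time
from collections import deque
from datetime import datetime, timedelta

LOST = "Lost connection with the edge"
TS_RE = re.compile(r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}Z")


class RestartPolicy:
    """Trip when more than `threshold` disconnects fall inside a sliding `window`."""

    def __init__(self, threshold=10, window=timedelta(minutes=10)):
        self.threshold = threshold  # assumed value, not taken from cloudflared
        self.window = window        # assumed value, not taken from cloudflared
        self.events = deque()

    def observe(self, line):
        """Feed one log line; return True if a restart looks warranted."""
        match = TS_RE.search(line)
        if LOST not in line or not match:
            return False
        now = datetime.strptime(match.group(0), "%Y-%m-%dT%H:%M:%SZ")
        self.events.append(now)
        # Forget disconnects that are older than the window.
        while now - self.events[0] > self.window:
            self.events.popleft()
        if len(self.events) > self.threshold:
            self.events.clear()  # start counting afresh after triggering a restart
            return True
        return False


def watch(service="argo"):
    policy = RestartPolicy()
    while True:  # re-attach if the log stream ends (for example after a restart)
        logs = subprocess.Popen(
            ["docker-compose", "logs", "--no-color", "--tail=0", "-f", service],
            stdout=subprocess.PIPE, text=True,
        )
        for line in logs.stdout:
            if policy.observe(line):
                subprocess.run(["docker-compose", "restart", service], check=False)
        logs.wait()
        time.sleep(5)  # avoid a tight loop if docker-compose exits immediately


if __name__ == "__main__":
    watch()
```

`--tail=0` keeps the watchdog from re-reading old disconnects every time it re-attaches, so only fresh events count toward the threshold.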

FWIW: I am seeing this running cloudflared in AWS ECS Fargate containers in ap-southeast-2.

QUIC is much more stable for me than HTTP2; the tunnels go down every few days. But HTTP2 is much faster in terms of latency and bandwidth (between 5-10x), despite failing almost daily. This is what an HTTP2 tunnels failure looks like:

2023-03-20T13:29:17Z WRN Connection terminated error="DialContext error: dial tcp 198.41.200.13:7844: i/o timeout" connIndex=2

2023-03-20T13:29:34Z WRN Connection terminated error="DialContext error: dial tcp 198.41.192.227:7844: i/o timeout" connIndex=0

2023-03-20T13:29:40Z WRN Connection terminated error="DialContext error: dial tcp 198.41.192.167:7844: i/o timeout" connIndex=3

2023-03-20T13:29:59Z INF Lost connection with the edge connIndex=1

2023-03-20T13:29:59Z INF Unregistered tunnel connection connIndex=1

2023-03-20T13:29:59Z WRN Serve tunnel error error="connection with edge closed" connIndex=1 ip=198.41.200.43

2023-03-20T13:29:59Z INF Retrying connection in up to 1s connIndex=1 ip=198.41.200.43

2023-03-20T13:30:00Z ERR Connection terminated error="connection with edge closed" connIndex=1

2023-03-20T13:29:17Z WRN Connection terminated error="DialContext error: dial tcp 198.41.200.13:7844: i/o timeout" connIndex=2

2023-03-20T13:29:34Z WRN Connection terminated error="DialContext error: dial tcp 198.41.192.227:7844: i/o timeout" connIndex=0

Is that running under Docker? It's timing out to 2 different regions, which is weird...

@morpig, a Docker container, yes. There's a QUIC container running side by side with the HTTP2 one serving another tunnel and it has not had any issues.

Mine was also timing out to multiple regions; I only copied enough log output previously to show one failure at a time, sorry. I can post more failing lines if that's helpful.
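The `DialContext error: dial tcp ...:7844: i/o timeout` lines mean a plain TCP connect to the edge on port 7844 did not complete in time. One way to separate network reachability from cloudflared itself is to run an independent TCP probe from the same host (or another container on the same network) while the tunnel is failing. A minimal Python sketch, assuming Python is available there; `probe` is a hypothetical helper and the 5-second timeout is an arbitrary choice. It only exercises TCP, which is what the http2 transport uses; QUIC runs over UDP on the same port and is not covered by this check.

```python
import socket
import time


def probe(host, port=7844, timeout=5.0):
    """Attempt one TCP connection; return (ok, elapsed_seconds, error_text)."""
    start = time.monotonic()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, time.monotonic() - start, ""
    except OSError as err:  # covers timeouts as well as refused or unreachable connections
        return False, time.monotonic() - start, str(err) or type(err).__name__


if __name__ == "__main__":
    # Edge addresses copied from the DialContext errors in the log above.
    for ip in ("198.41.200.13", "198.41.192.227", "198.41.192.167"):
        ok, elapsed, error = probe(ip)
        print(f"{ip}:7844 ok={ok} elapsed={elapsed:.2f}s {error}")
```

If these probes succeed at the same moment cloudflared logs i/o timeouts to the same addresses, that points away from basic network reachability; if they time out too, the problem is more likely on the path between the host and the edge.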

I have also found relief by upgrading to QUIC.

One tip for doing this on AWS infrastructure: the AWS NAT gateways appear to rewrite the source address of inbound QUIC packets so that they appear to come from the NAT gateway rather than from the Cloudflare edge servers. As a result, I had to add additional ACL ingress rules to cope with this. I have no idea why the NAT gateways do this, but it seems they do (our tunnel servers are in a different subnet from the NAT gateway).

QUIC is much more stable for me than HTTP2; the tunnels go down every few days. But HTTP2 is much faster in terms of latency and bandwidth (between 5-10x), despite failing almost daily. This is what an HTTP2 tunnels failure looks like:

To add more data to this: see https://github.com/cloudflare/cloudflared/issues/895 for why we are not able to use QUIC.

We too are seeing an uptick in `Connection terminated error="connection with edge closed"` in our tunnels. FWIW, our tunnels are connecting to the AMS and MAN data centers.

Hi

I have 2 tunnels running with version 2023.2.2

Default protocol: auto

So 2 days ago, I had to change the protocol to quic for a tunnel.

Over the 2 days I've been monitoring since then, I've seen unstable connections to the tunnels.

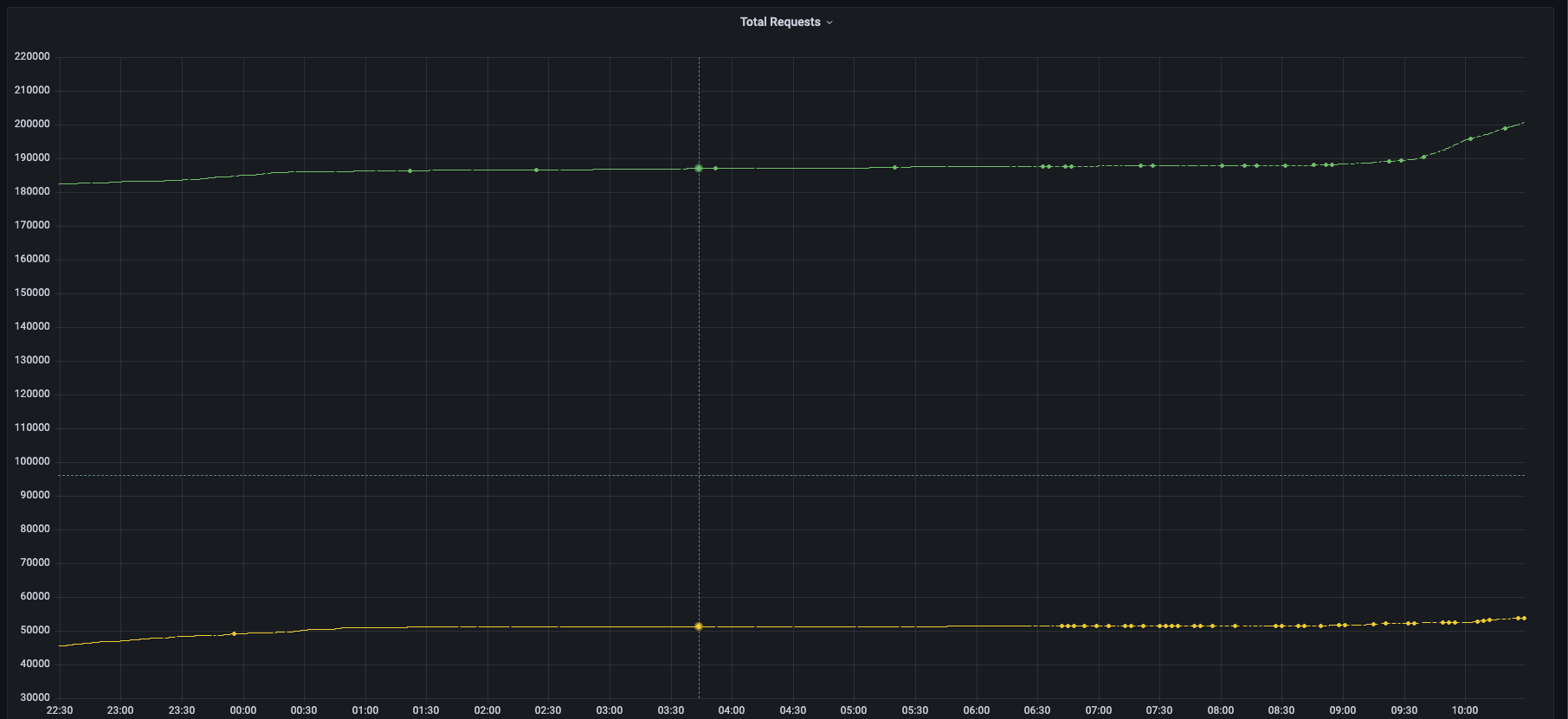

This is the monitoring for the tunnel whose protocol I changed to quic.

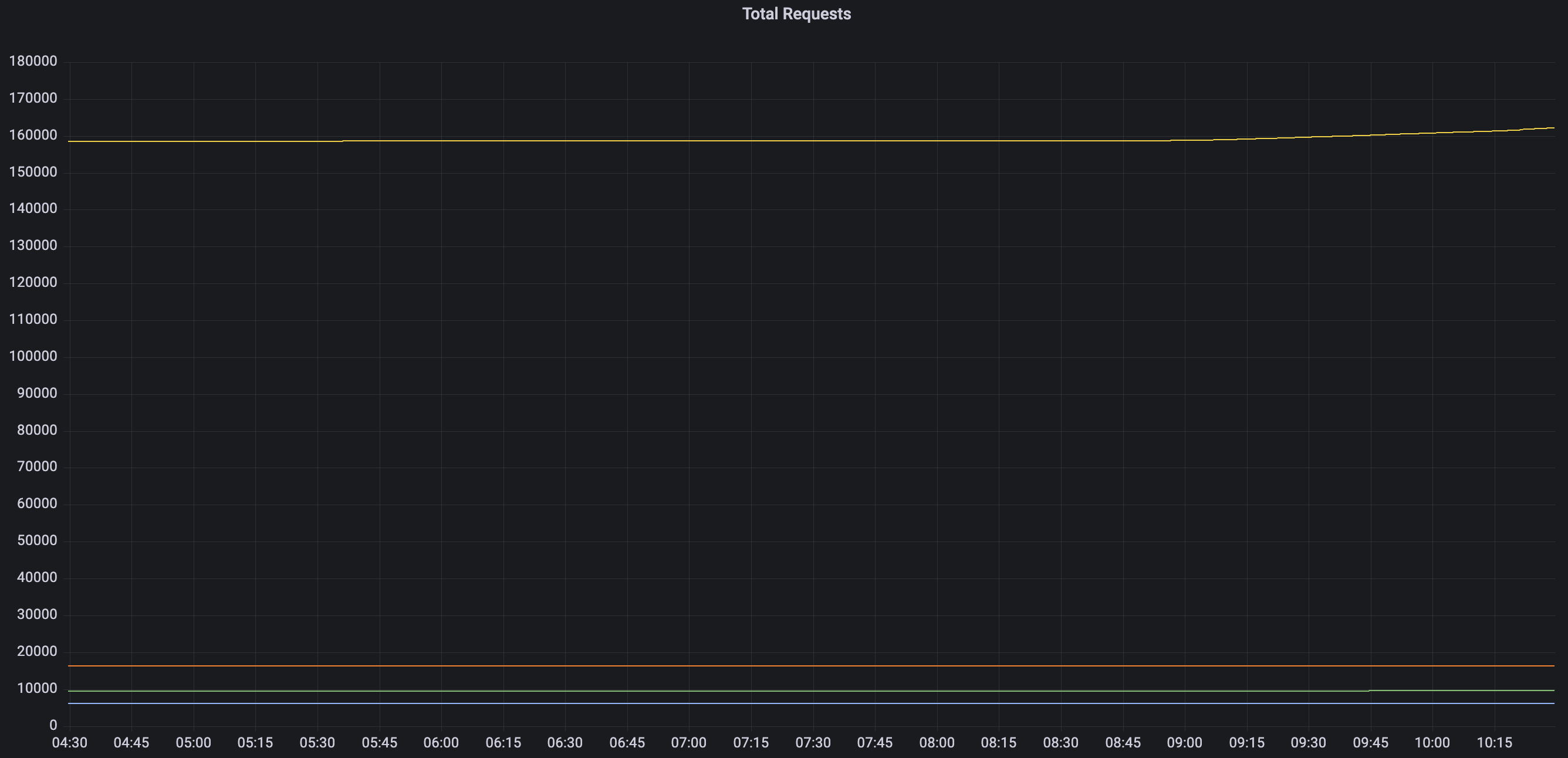

This is the monitoring for the tunnel with the protocol set to auto.

Any update on this problem? Thanks, all.