Un-inject values from Unet

Right now, if we call save_pretrained with the unet modified for LoRA training, the diffusers pipeline refuses to load the unet later because of some "Linear" values.

Is there a way to un-inject the LoRA values from the unet before calling pipeline.save_pretrained?

Hmm... as you might have seen in my code, there is an extract_lora_ups_down function that you can use to extract the LoRA weights. You can then load a fresh model and merge the LoRA into it with weight_apply_lora, although that would take an extra 10 seconds or so...

Not sure if this is the solution you were looking for, as it just extracts the LoRA and reloads a new, raw model.

Not sure if extract_lora_ups_down is what I need.

At the start of training, you call inject_trainable_lora on the unet, which seems to add some new values to it.

If I do pipeline.save_pretrained on this unet, I can't load it again later with pipeline.from_pretrained(), it doesn't like the new Linear() stuff that's in it...which makes sense.

So, is there a way to "finalize" LoRA training on the unet, where we would remove the injected Linear stuff, collect the weights, and apply them to the unet...and then, ideally, save them to a checkpoint file?
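To illustrate what "finalizing" could mean, here is a minimal sketch. It assumes the injected layer keeps its original weight under `<name>.linear.weight` and the low-rank factors under `<name>.lora_up.weight` and `<name>.lora_down.weight`. Those key names, the `finalize_state_dict` helper, and the nested lists standing in for tensors are all assumptions for illustration, not the repo's API.

```python
# Toy "finalize" step: fold LoRA factors into the base weight and restore
# the key names a plain Linear layer would have. Nested lists stand in for
# tensors; the key suffixes are assumed, not taken from the library.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def finalize_state_dict(state, alpha=1.0):
    """Return a new dict with LoRA-injected layers collapsed to plain weights."""
    out = {}
    for key, value in state.items():
        if key.endswith(".linear.weight"):
            prefix = key[: -len(".linear.weight")]
            up = state[prefix + ".lora_up.weight"]      # shape: out x rank
            down = state[prefix + ".lora_down.weight"]  # shape: rank x in
            delta = matmul(up, down)
            out[prefix + ".weight"] = [
                [w + alpha * d for w, d in zip(w_row, d_row)]
                for w_row, d_row in zip(value, delta)
            ]
        elif key.endswith(".linear.bias"):
            out[key[: -len(".linear.bias")] + ".bias"] = value
        elif ".lora_up." in key or ".lora_down." in key:
            continue  # factors are folded in above, so drop them
        else:
            out[key] = value  # untouched layers pass through
    return out


injected = {
    "to_q.linear.weight": [[1.0, 0.0], [0.0, 1.0]],
    "to_q.lora_up.weight": [[1.0], [2.0]],
    "to_q.lora_down.weight": [[0.5, 0.5]],
    "norm.weight": [[1.0, 1.0]],
}
clean = finalize_state_dict(injected, alpha=1.0)
print(sorted(clean))
```

After this step the dict only contains keys that an un-injected model would expect, which is presumably what from_pretrained needs in order to load it again.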

The .pt file size is incredibly useful, but at the end of the day, I need to be able to take the trained lora data and stick it back into a checkpoint. I messaged you on reddit about this as well.

So there is a CLI for this, lora_add, which you've probably looked at.

There is also weight_apply_lora, which "doesn't add anything", and simply updates the weight in the unet.
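In terms of the weights, an in-place merge like this is presumably the standard LoRA update. Assuming `W` is a Linear layer's weight, `U` and `D` are the trained up and down matrices of rank `r`, and `\alpha` is the scale passed as `alpha`:

```latex
W' = W + \alpha \, U D,
\qquad U \in \mathbb{R}^{d_{\text{out}} \times r},
\quad D \in \mathbb{R}^{r \times d_{\text{in}}}
```

Since `W'` has the same shape as `W`, the merged layer is still an ordinary Linear and no extra parameters need to be stored.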

So I'm just suggesting you discard the pipeline after injection, instantiate a new one, and use weight_apply_lora on it.

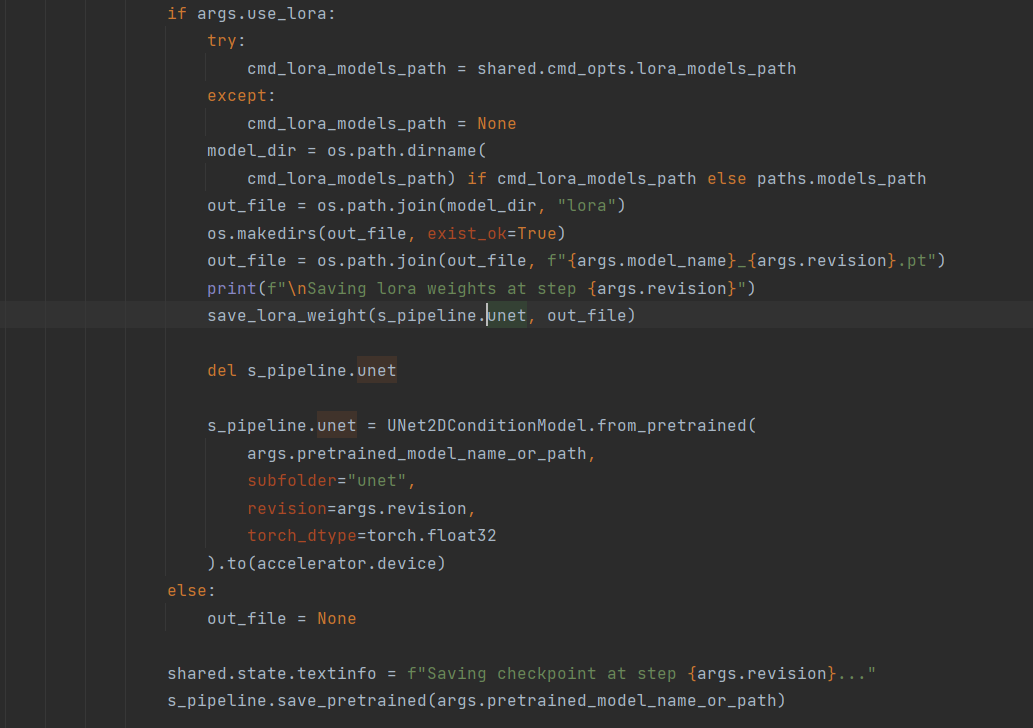

This is what I'm doing presently:

In the above, I extract the LoRA weights, save them to a .pt file, reload the unet from file, and save the whole pipeline with the "clean" unet...

Then, I pass the path to my "compile checkpoint" method, which, when a .pt file is specified, will try to load the weights to the unet, save it separately, and then convert this new unet before compiling to a checkpoint.

But, as I said above...images generated with the pt + diffusers seem to work properly. Converting the same pipeline to a checkpoint results in images that have nothing to do with the trained subject...
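One way to narrow down where the subject gets lost is to compare the unet weights at each stage (after merging, after saving, after conversion) against the base model. If nothing differs after a given step, the merge likely did not survive it. A minimal sketch with a hypothetical `changed_keys` helper, using flat lists of floats in place of tensors:

```python
# Compare two weight snapshots and report which entries moved.
# Values are flat lists of floats here; with real tensors the same idea
# applies using the tensor library's own comparison.

def changed_keys(base, merged, tol=1e-6):
    """Return (keys differing by more than tol, keys present on only one side)."""
    changed, missing = [], []
    for key in sorted(set(base) | set(merged)):
        if key not in base or key not in merged:
            missing.append(key)
            continue
        diff = max(abs(a - b) for a, b in zip(base[key], merged[key]))
        if diff > tol:
            changed.append(key)
    return changed, missing


base = {"attn.to_q.weight": [1.0, 0.0], "conv_in.weight": [0.3, 0.3]}
merged = {"attn.to_q.weight": [1.5, 0.5], "conv_in.weight": [0.3, 0.3]}
changed, missing = changed_keys(base, merged)
print("changed:", changed, "missing:", missing)
```

Keys that show up as missing would point at a naming mismatch between formats rather than at the merge itself.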

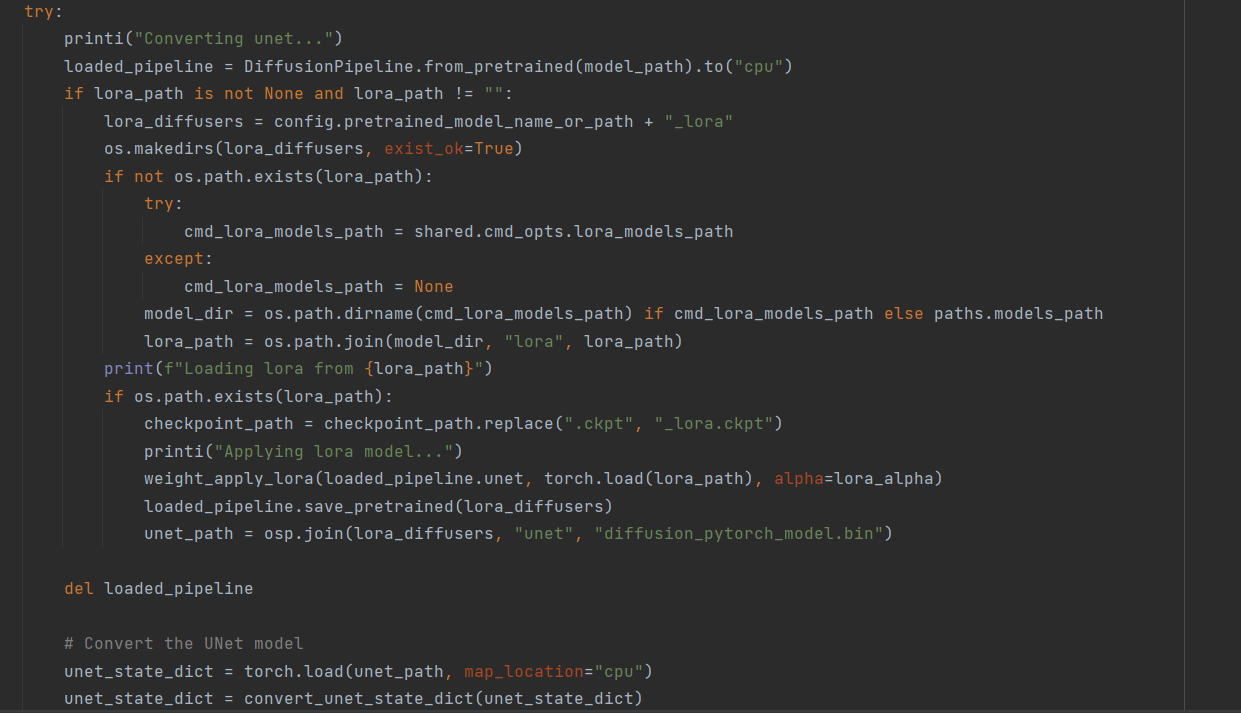

So what you are doing is essentially what I'm doing in the lora_add CLI, in mode upl (unet + lora). Can you check if that works for you?

```python
loaded_pipeline = StableDiffusionPipeline.from_pretrained(
    path_1,
).to("cpu")

weight_apply_lora(loaded_pipeline.unet, torch.load(path_2), alpha=alpha)

if output_path.endswith(".pt"):
    output_path = output_path[:-3]

loaded_pipeline.save_pretrained(output_path)
```

Seems like pretty much the same logic to me, not sure why yours wouldn't work.

By the way, nice work integrating this into automatic's webui. I appreciate your work!

Thanks!

Do you think it could be something in the conversion from diffusers to sd that's causing issues?

I'll try the CLI, but as you said, I'm literally just using a slightly modified version of that function to save the unet...


So, I think this issue is resolved via #18 ?

@d8ahazard @anotherjesse I have added some code to support the AUTOMATIC1111 WebUI. Just run 'train_lora_dreambooth.py', and you will find an additional lora_weight_webui.safetensors file in the output dir. That is the right weight file for the WebUI. See my project at https://github.com/tengshaofeng/lora_tbq