hubble-ui

Unable to inspect L7 info with hubble by configuring io.cilium.proxy-visibility

Hello folks, I'm trying to set up a simple environment for inspecting HTTP (L7) traffic with Cilium and Hubble, using minikube as a single-node cluster.

My environment:

- Linux: CentOS 7 (inside a VMware virtual machine) with Linux kernel 5.4 (5.4.210-1.el7.elrepo.x86_64, kernel-lt)
- Kubernetes version: v1.16.2
- kubectl version: v1.16.2
- minikube version: v1.26.1

Creating the Kubernetes cluster with minikube:

minikube start \
  --vm-driver=none \
  --kubernetes-version=v1.16.2 \
  --network-plugin=cni \
  --cni=false \
  --image-mirror-country='cn'

Deploying Cilium with Helm:

helm install cilium cilium/cilium \
  --version 1.12.0 \
  --namespace=kube-system \
  --set endpointStatus.enabled=true \
  --set endpointStatus.status="policy" \
  --set operator.replicas=1 \
  --set hubble.relay.enabled=true \
  --set hubble.ui.enabled=true

The cluster status looks fine:

[root@control-plane ~]# kubectl cluster-info
Kubernetes master is running at https://192.168.59.131:8443
KubeDNS is running at https://192.168.59.131:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@control-plane ~]# kubectl get pods -n kube-system
NAME                                    READY   STATUS    RESTARTS   AGE
cilium-f6std                            1/1     Running   0          13m
cilium-operator-58b84657f-ljqq7         1/1     Running   0          13m
coredns-67c766df46-t55vg                1/1     Running   0          13m
etcd-control-plane                      1/1     Running   0          12m
hubble-relay-85bfc97ddb-vvlgv           1/1     Running   0          13m
hubble-ui-5fb54dc4db-d4676              2/2     Running   0          13m
kube-apiserver-control-plane            1/1     Running   0          12m
kube-controller-manager-control-plane   1/1     Running   0          12m
kube-proxy-jzjvh                        1/1     Running   0          13m
kube-scheduler-control-plane            1/1     Running   0          12m
storage-provisioner                     1/1     Running   0          13m

[root@control-plane ~]# cilium status
    /¯¯\
 /¯¯\__/¯¯\    Cilium:         OK
 \__/¯¯\__/    Operator:       OK
 /¯¯\__/¯¯\    Hubble:         OK
 \__/¯¯\__/    ClusterMesh:    disabled
    \__/

DaemonSet         cilium             Desired: 1, Ready: 1/1, Available: 1/1
Deployment        cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
Deployment        hubble-relay       Desired: 1, Ready: 1/1, Available: 1/1
Deployment        hubble-ui          Desired: 1, Ready: 1/1, Available: 1/1
Containers:       hubble-relay       Running: 1
                  hubble-ui          Running: 1
                  cilium-operator    Running: 1
                  cilium             Running: 1
Cluster Pods:     4/4 managed by Cilium
Image versions    cilium             quay.io/cilium/cilium:v1.12.0@sha256:079baa4fa1b9fe638f96084f4e0297c84dd4fb215d29d2321dcbe54273f63ade: 1
                  hubble-relay       quay.io/cilium/hubble-relay:v1.12.0@sha256:ca8033ea8a3112d838f958862fa76c8d895e3c8d0f5590de849b91745af5ac4d: 1
                  hubble-ui          quay.io/cilium/hubble-ui:v0.9.0@sha256:0ef04e9a29212925da6bdfd0ba5b581765e41a01f1cc30563cef9b30b457fea0: 1
                  hubble-ui          quay.io/cilium/hubble-ui-backend:v0.9.0@sha256:000df6b76719f607a9edefb9af94dfd1811a6f1b6a8a9c537cba90bf12df474b: 1
                  cilium-operator    quay.io/cilium/operator-generic:v1.12.0@sha256:bb2a42eda766e5d4a87ee8a5433f089db81b72dd04acf6b59fcbb445a95f9410: 1

For testing, I created an nginx deployment and exposed it as a NodePort service. I can access the website successfully:

[root@control-plane ~]# kubectl get deployment
NAME    READY   UP-TO-DATE   AVAILABLE   AGE
nginx   1/1     1            1           15m
[root@control-plane ~]# kubectl get service
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        17m
nginx        NodePort    10.109.79.91   <none>        80:30219/TCP   15m
[root@control-plane ~]# minikube service nginx --url
http://192.168.59.131:30219

# (In my Windows host PowerShell)
curl http://192.168.59.131:32233/
StatusCode        : 200
StatusDescription : OK
Content           : ...

I can observe L3/L4 flows in the Hubble UI after running:

cilium hubble port-forward &
cilium hubble ui &

Following [Layer 7 Protocol Visibility](https://docs.cilium.io/en/stable/policy/visibility/), I annotated the pod:

[root@control-plane ~]# kubectl get pods
NAME                     READY   STATUS    RESTARTS   AGE
nginx-86c57db685-fxv2v   1/1     Running   0          74s
[root@control-plane ~]# kubectl annotate pod nginx-86c57db685-fxv2v io.cilium.proxy-visibility="<Ingress/80/TCP/HTTP>,<Egress/80/TCP/HTTP>"
pod/nginx-86c57db685-fxv2v annotated
[root@control-plane ~]# kubectl get cep
NAME                     ENDPOINT ID   IDENTITY ID   INGRESS ENFORCEMENT   EGRESS ENFORCEMENT   VISIBILITY POLICY   ENDPOINT STATE   IPV4        IPV6
nginx-86c57db685-fxv2v   345           10688         false                 false                                    ready            10.0.0.38
[root@control-plane ~]# kubectl annotate pod nginx-86c57db685-fxv2v io.cilium.proxy-visibility="<Ingress/80/TCP/HTTP>,<Egress/80/TCP/HTTP>"
error: --overwrite is false but found the following declared annotation(s): 'io.cilium.proxy-visibility' already has a value (<Ingress/80/TCP/HTTP>,<Egress/80/TCP/HTTP>)
[root@control-plane ~]# kubectl get cep
NAME                     ENDPOINT ID   IDENTITY ID   INGRESS ENFORCEMENT   EGRESS ENFORCEMENT   VISIBILITY POLICY   ENDPOINT STATE   IPV4        IPV6
nginx-86c57db685-fxv2v   345           10688         false                 false                OK                  ready            10.0.0.38
[root@control-plane ~]#
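The `io.cilium.proxy-visibility` value is a comma-separated list of `<Direction/Port/L4Protocol/L7Protocol>` entries, per the Layer 7 Protocol Visibility page linked above, so a malformed entry is a cheap thing to rule out first. Below is a minimal bash sketch of such a syntax check. The function name `valid_visibility` is hypothetical, and the accepted values (Ingress/Egress, TCP/UDP, HTTP/Kafka/DNS) are an assumption based on that page, not an exhaustive list of what Cilium accepts.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check the syntax of an io.cilium.proxy-visibility value.
# Assumed entry format: <Ingress|Egress/PORT/TCP|UDP/HTTP|Kafka|DNS>
valid_visibility() {
  local value="$1" entry
  local re='^<(Ingress|Egress)/[0-9]+/(TCP|UDP)/(HTTP|Kafka|DNS)>$'
  local -a entries
  [[ -n $value ]] || return 1             # an empty annotation is rejected
  IFS=',' read -ra entries <<< "$value"   # split on commas without globbing
  (( ${#entries[@]} > 0 )) || return 1    # there must be at least one entry
  for entry in "${entries[@]}"; do
    [[ $entry =~ $re ]] || return 1       # every entry must match the pattern
  done
}

# The value used in this issue passes the check.
if valid_visibility '<Ingress/80/TCP/HTTP>,<Egress/80/TCP/HTTP>'; then
  echo "syntax OK"
fi
```

If the syntax is fine and you need to change the value, `kubectl annotate` refuses to replace an existing annotation unless you pass `--overwrite`, as the error in the transcript shows.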

After that, I can no longer access my nginx service, and I still can't inspect any L7 info.

I'm stuck here. Any suggestions on how to debug this?

@rolinh I don't think this issue is specific to hubble-ui, because I can't see L7 info with the hubble CLI client either.

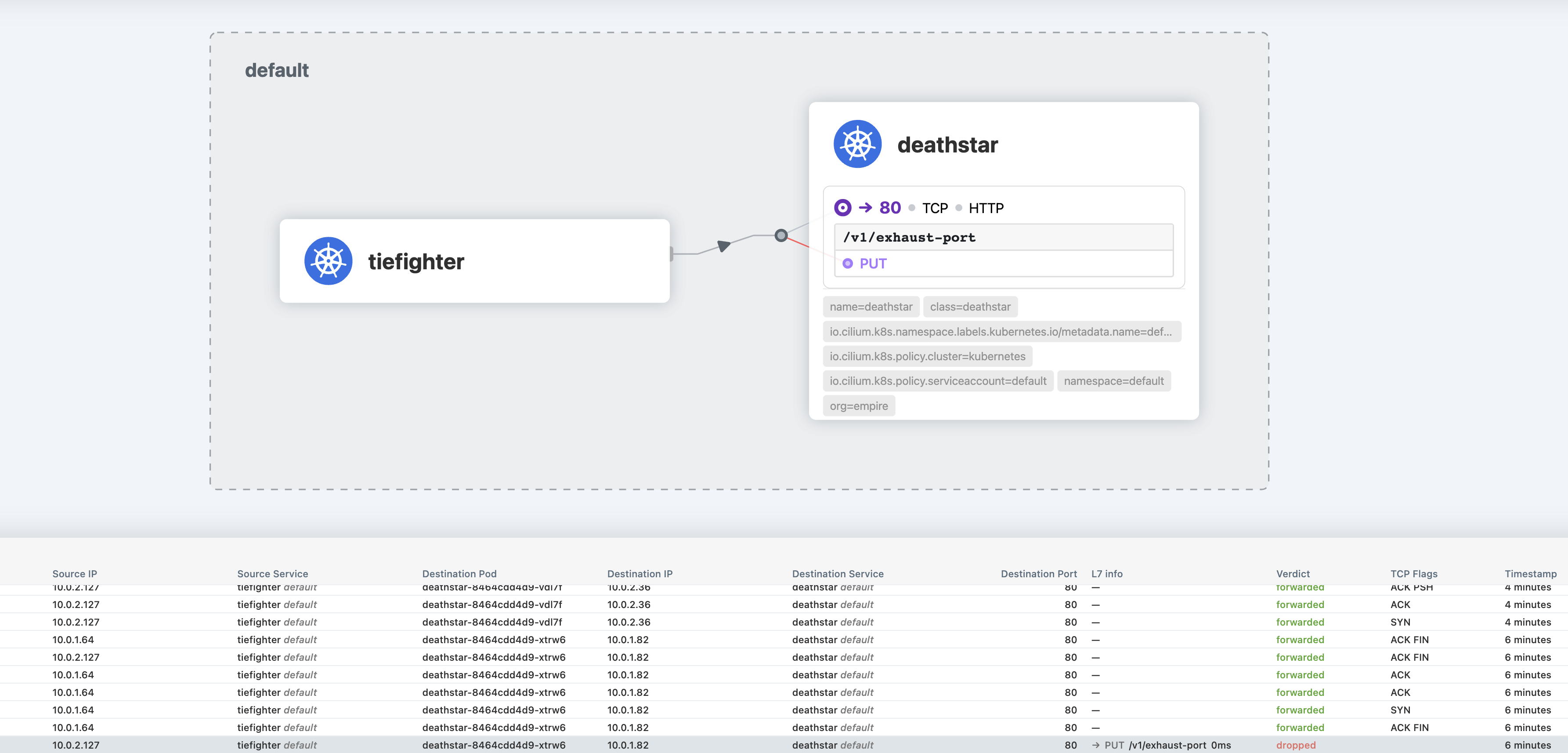

L7 information will be shown once you apply a Layer 7 policy.
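As a minimal sketch of what such a policy could look like for this setup: the bash function below (hypothetical name `render_l7_policy`) prints a `CiliumNetworkPolicy` with an HTTP rule on port 80 that you can pipe into `kubectl apply -f -`. It assumes the nginx pods carry the label `app=nginx` (typical for `kubectl create deployment nginx`; verify with `kubectl get pods --show-labels`). Keep in mind that once an ingress policy selects the pod, other ingress traffic is denied, and this particular rule only allows `GET` requests.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a CiliumNetworkPolicy with an L7 (HTTP) ingress
# rule on PORT for pods labelled app=APP. The label is an assumption.
render_l7_policy() {
  local app="$1" port="${2:-80}"
  cat <<EOF
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: ${app}-l7-http
spec:
  endpointSelector:
    matchLabels:
      app: ${app}
  ingress:
  - toPorts:
    - ports:
      - port: "${port}"
        protocol: TCP
      rules:
        http:
        - method: "GET"
EOF
}

# Print the policy; to apply it to the cluster instead:
#   render_l7_policy nginx 80 | kubectl apply -f -
render_l7_policy nginx 80
```

With the policy in place, HTTP requests to nginx go through Cilium's L7 proxy, so `hubble observe` and the Hubble UI should start listing HTTP request/response flows for the pod.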

Closing as stale, feel free to re-open.