Proposal / RFE: Change to use the value of `external-dns.alpha.kubernetes.io/hostname` instead of `${ClusterName}.mesh.cilium.io` when `external-dns.alpha.kubernetes.io/hostname` is set on the `clustermesh-apiserver` LoadBalancer Service

Proposal / RFE

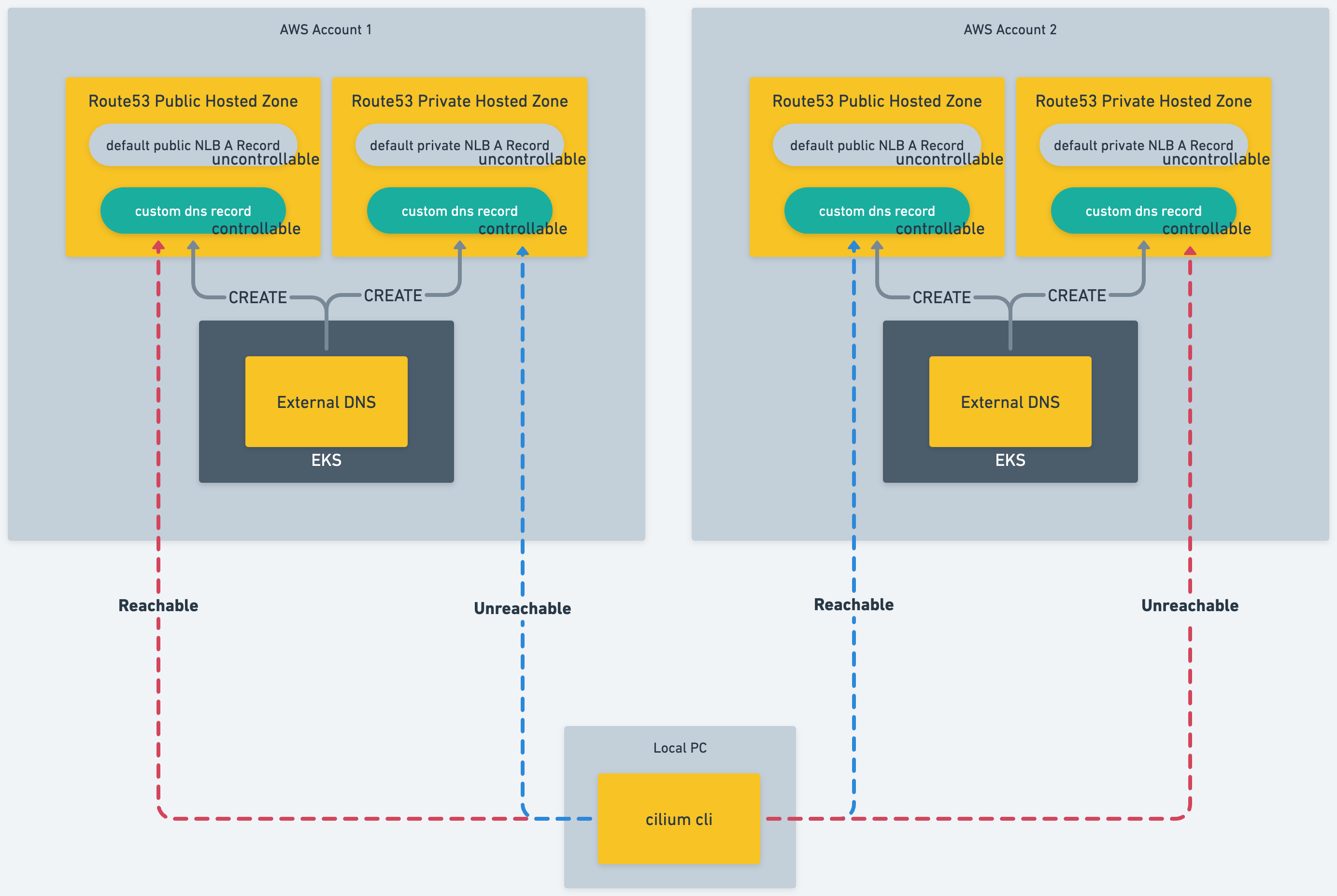

In the current implementation, it is necessary to perform a DNS lookup for all target clusters in the environment running cilium clustermesh connect. This can be difficult depending on network configurations.

As a security best practice, the clustermesh-apiserver NLB should be created in a private subnet. However, the default hostname's A record for an NLB in a private subnet is registered in the Route53 Private Hosted Zone. As a result, lookup from a local PC will fail.

The primary solution is to create a custom A record for the clustermesh-apiserver NLB using tools like External DNS, and register it in a Public Hosted Zone.

However, the primary reason for performing the DNS lookup is to set the IP for hostAliases of ${ClusterName}.mesh.cilium.io. The initial motivation for wanting to perform a DNS lookup during the cilium clustermesh connect process is when the DNS hostname of the clustermesh-apiserver LoadBalancer Service is unknown, right?

Implementation Pattern 1

As a solution, how about adding the following flags?

--use-status-hostname- Referencing

status.loadBalancer.ingress[].hostname

- Referencing

--use-destination-status-hostname- Referencing

status.loadBalancer.ingress[].hostname

- Referencing

--use-externaldns-hostname- Referencing

metadata.annotations."external-dns.alpha.kubernetes.io/hostname"

- Referencing

--use-destination-externaldns-hostname- Referencing

metadata.annotations."external-dns.alpha.kubernetes.io/hostname"

- Referencing

In this case, there will be no need for hostAliases and they will not be set.

There is also a rollOutCiliumPods option in the helm values, so we think that those who want to restart the system should activate it.

Implementation Pattern 2

-

If

external-dns.alpha.kubernetes.io/hostnameis set onclustermesh-apiserver, use that hostname as the targetclustermesh-apiserverfor the connection. Do not set hostAliases. https://github.com/cilium/cilium-cli/blob/9c3ea4c5e55ee7b6d466c57ade34091e0314b13a/clustermesh/clustermesh.go#L648 -

If

metadata.annotations."external-dns.alpha.kubernetes.io/hostname"is not set onclustermesh-apiserver, follow the existing process. -

If the DNS lookup fails, reference

status.loadBalancer.ingress[].hostnameand do not set hostAliases.

There is also a rollOutCiliumPods option in the helm values, so we think that those who want to restart the system should activate it.

Hi, thanks for your proposal. I do think that it's important that we support LoadBalancers in private subnets for cilium clustermesh connect. In some cases, the user of cilium clustermesh connect will be on a VPN which is capable of using the cloud provider's private DNS resolvers such that the CLI will be able to resolve such hostnames. However, that is certainly not possible in all cases.

I am currently working on a reimplementation of cilium clustermesh connect that will replace much of the templating logic that you have come across with usage of the recently expanded-upon Helm template functions related to Clustermesh configuration and configuration of the clustermesh-apiserver etcd servers. For example, here is the template function that allows us to specify an arbitrary set of endpoint names or endpoint IPs for the clustermesh. There is this function to template the value of the endpoints: array, which is invoked for each configured cluster here

Once this has been implemented, one way to achieve the behavior that you are after would be to specify the cluster endpoints in a minimal helm values file when using the clustermesh connect command. For example:

cat << EOF >clustermesh-values.yaml

clustermesh:

config:

enabled: true

clusters:

- name: cluster-1

address: cluster1.mesh.cilium.io

- name: cluster-2

address: cluster2.mesh.cilium.io

EOF

cilium clustermesh connect --helm-values custermesh-values.yaml

In this case we are using helm values to provide the addresses, and other values such as the port numbers and PKI information will be autoconfigured as usual.

In your proposal, you have two implementation paths where ExternalDNS annotations would be used. Would the user provide the value of these annotations at "connect" time, or would we expect a multi-pass workflow, where the user attempts to have these LoadBalancers created, and then annotates them?

Either way, I think the new implementation will support your intended use case, as you will be able to annotate your LoadBalancers as desired, and then use an address configuration like the above.

My current feeling is that the Cilium CLI should not attempt to write or read ExternalDNS annotations until we find that is absolutely necessary to provide a smooth configuration experienc here.

If you wouldn't mind waitig until I put up the new implementation of clustermesh connect, I would be thrilled to have you test it out and ensure that it supports this use case.

@asauber

In your proposal, you have two implementation paths where ExternalDNS annotations would be used. Would the user provide the value of these annotations at "connect" time, or would we expect a multi-pass workflow, where the user attempts to have these LoadBalancers created, and then annotates them?

This is neither of the options mentioned. Annotations are applied simultaneously during the initial creation process. For example, I am deploying Cilium in an EKS environment using the helm install command. The values related to the clustermesh-apiserver I'm using during this process are as follows:

clustermesh:

apiserver:

affinity: null

podDisruptionBudget:

enabled: true

priorityClassName: system-cluster-critical

replicas: 3

resources:

limits:

cpu: 1000m

memory: 1024M

requests:

cpu: 100m

memory: 64Mi

service:

annotations:

service.beta.kubernetes.io/aws-load-balancer-internal: "true"

service.beta.kubernetes.io/aws-load-balancer-type: nlb

external-dns.alpha.kubernetes.io/hostname: "cilium-clustermesh-apiserver.example.com"

externalTrafficPolicy: Local

type: LoadBalancer

topologySpreadConstraints:

- maxSkew: 1

topologyKey: topology.kubernetes.io/zone

whenUnsatisfiable: DoNotSchedule

useAPIServer: true

Once this has been implemented, one way to achieve the behavior that you are after would be to specify the cluster endpoints in a minimal helm values file when using the clustermesh connect command.

Indeed, I agree that this could be a good solution, considering that it would be quite difficult to cover all cases.

However, I am aware that ExternalDNS has a significant market share, and many users would appreciate the convenience brought by auto-lookup capabilities. For that reason, I believe it would be most beneficial to support both options.

If you wouldn't mind waitig until I put up the new implementation of clustermesh connect, I would be thrilled to have you test it out and ensure that it supports this use case.

Of course, let me help you.

Hello @kahirokunn, are you still interested in working on this?

The Helm mode CLI is merged now (and is also the default mode in 0.15.0), and this may be a matter of including the appropriate addresses around this area of the Helm configuration. https://github.com/cilium/cilium-cli/blob/main/clustermesh/clustermesh.go#L2266

If you would like to work on an implementation, I would be interested in reviewing it and helping it move along.

@asauber Thank you! I am interested in improving the cilium project. It certainly seems to work well when applied to that part of the project.

I need to decide on the specifications so that I can proceed with the implementation. Am I correct in assuming that you want to be able to pass the following structure that you suggested earlier? In that case, clustermesh.config.clusters would be copied as is.

cat << EOF >clustermesh-values.yaml

clustermesh:

config:

clusters:

- name: cluster-1

address: cluster1.mesh.cilium.io

- name: cluster-2

address: cluster2.mesh.cilium.io

EOF

cilium clustermesh connect --helm-values custermesh-values.yaml

@kahirokunn correct, you mentioned that you want to do some DNS lookup validation as well before the configuration. I think that this is a good idea. Please go ahead with an implementation, when you have a chance, and we can iterate on it.

@asauber Thanks for the suggestion! Let me ask a few more questions! 🙇♂️ I just want to confirm, what is the reason for conducting DNS lookup validation? Is it just to add the IP address to the daemonset's hostAliases? So, my understanding is:

- If the DNS lookup is successful, add the IP address to the daemonset's hostAliases.

- Merge with the values passed with

cilium clustermesh connect --helm-values custermesh-values.yaml.

Is that correct?

My concern, independent of the issue with the private subnet, is that when making the clustermesh connect, doing a DNS lookup and adding the IP address to the daemonset's hostAliases will cause all daemonsets to restart, which could temporarily disconnect the network. I see that as a potential problem. Of course, it can be mitigated by carefully scheduling system maintenance times, but I would prefer not to change the Daemonset's spec unless necessary. I think those who want the daemonset to restart every time can achieve this by setting operator.rollOutPods=true.

I see your point. If we can avoid DNS lookups entirely, then that would be ideal. Providing .address for each cluster should be sufficient, given the clustermesh config implementation in the Helm chart.

Therefore, for the purpose of this Issue let's not use host aliases. It seems that we can achieve the type of configuration that you are interested in by setting clustermesh.config.clusters and clustermesh.apiserver.service.annotations in a helm-values file to be used with CLI. Can you test this, and note any issues?

Then, going back to your original suggestion, what you could implement is logic within the CLI to do this type of configuration in a more automated way. The current minimal set of parameters needed to establish a Clustermesh with the CLI can be found in the latest documentation updates.

However, if you feel that the "helm-values way" is sufficiently simple for an NLB setup on AWS, then what we can do is add your procedure to the documentation and not make any changes to the CLI.

As for the question of the DaemonSet restart, I think this is a possibility with a variety of upgrade scenarios, so let's consider that a separate issue.

@asauber Okay. Let me try. I will do a minimal implementation and verification first, and once I am sure, I will request a review to make sure I am going in the right direction. Thx 🙏

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs.

This issue has not seen any activity since it was marked stale. Closing.