Memory leak on Linux

Hi, I discovered a strange memory leak on Linux that happens only when the application is overloaded. I created a UDP server that forwards data to the clients connected to it. With a large number of connected clients sending a lot of data, it is normal for the CPU to reach 100% and for the application to use more memory. But stopping and disconnecting the clients does not make the server release that memory. A Windows machine with the same hardware does not show the problem.

I created a multi-threaded client/server application that reproduces the issue and attached a zip containing the code. Inside _readme.txt you will find more detailed information about my test.

The application can be built on both Linux and Windows, and its only dependency is Boost.Asio. I'm using Boost 1.78.

Can you help me and tell me whether the problem really exists or whether it is due to my wrong usage of the library? Also, can you suggest how to debug asio on Linux? Which tools should I use to dig deeper into asio?

Thank you in advance for your help on this.

Emanuele asio_udp_sfu_standalone.zip

Compiling with clang instead of gcc, I get this memory leak report. Maybe it can help to understand the problem. leaks.txt

I created a smaller standalone example that reproduces the memory leak in a single application without sockets, using only timers and strands. It should be easier to use, and it has no external dependencies.

@emabiz, @chriskohlhoff, I think the problem is synchronization. I can't reproduce the problem with "-fsanitize=leak" enabled.

I modified my memory_leak example slightly so that it can call malloc_trim and malloc_stats. It seems that the memory is no longer in use but is not released by the allocator. So technically this is not a memory leak.
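For anyone who wants to repeat this check outside the attached example, here is a minimal sketch. It assumes Linux with glibc; the helper name `burst_then_trim` and the block sizes are made up for illustration and are not taken from the memory_leak example. It allocates and frees a burst of small blocks, prints the allocator statistics, asks glibc to give free memory back to the OS, and prints the statistics again.

```cpp
#include <malloc.h>   // glibc-specific: malloc_stats, malloc_trim
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <cstring>
#include <vector>

// Hypothetical helper (not part of the attached example).
// Allocates and frees `block_count` blocks of `block_size` bytes, then asks
// glibc to return free heap memory to the OS.
// Returns the result of malloc_trim(0): 1 if memory was released, 0 if not.
int burst_then_trim(std::size_t block_count, std::size_t block_size) {
    assert(block_size > 0);

    std::vector<void*> blocks;
    blocks.reserve(block_count);
    for (std::size_t i = 0; i < block_count; ++i) {
        void* p = std::malloc(block_size);
        if (p == nullptr) break;
        std::memset(p, 1, block_size);  // touch the pages so they are really mapped
        blocks.push_back(p);
    }
    for (void* p : blocks) std::free(p);

    malloc_stats();                 // statistics go to stderr: state after free()
    int released = malloc_trim(0);  // ask glibc to give free memory back to the OS
    malloc_stats();                 // state after the explicit trim
    return released;
}
```

Calling `burst_then_trim(10000, 512)` from `main` and comparing the two reports on stderr shows how much memory the allocator was still holding after the blocks were freed. Whether `malloc_trim` actually releases anything depends on the heap layout at that moment, so both return values are possible.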

My feeling is that this effect appears when too much is asked of asio/epoll, and that asio performs a bit worse on Linux (epoll) than on Windows (IOCP) with the same hardware. Is that possible? Is it mostly due to epoll itself or to asio? Is it possible to configure asio in some way to get better performance under Linux?

@emabiz

> Is it possible to configure asio in some way to get better performance under Linux?

On Linux, use a dedicated io_context per thread instead of one io_context shared by multiple threads.

@emabiz

After calling the malloc_trim function, htop shows 0% memory usage.

This is not an asio issue. This is the default behaviour of the glibc allocator: by default it does not necessarily release freed memory back to the OS.
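If the retained memory is a concern, glibc exposes some tuning knobs through `mallopt`. The sketch below assumes Linux with glibc; the helper name `tune_glibc_allocator` and the values passed to it are illustrative assumptions, not recommendations for this application. It lowers the trim threshold, so free memory at the top of the heap is returned sooner, and caps the number of arenas that threads can create.

```cpp
#include <malloc.h>   // glibc-specific: mallopt, M_TRIM_THRESHOLD, M_ARENA_MAX
#include <cassert>

// Hypothetical helper: applies two glibc allocator settings.
// Call it early in main(), before worker threads start allocating.
// Returns true if both mallopt calls reported success.
bool tune_glibc_allocator(int trim_threshold_bytes, int max_arenas) {
    assert(trim_threshold_bytes >= 0);
    assert(max_arenas > 0);

    // Free memory at the top of the heap above this size is returned to the OS.
    int ok_trim = mallopt(M_TRIM_THRESHOLD, trim_threshold_bytes);

    // Upper bound on the number of malloc arenas used by threads.
    int ok_arenas = mallopt(M_ARENA_MAX, max_arenas);

    return ok_trim == 1 && ok_arenas == 1;
}
```

For example, `tune_glibc_allocator(64 * 1024, 2)` would use a 64 KiB trim threshold and at most two arenas. Whether this reduces the resident memory seen in htop depends on fragmentation, so it is worth comparing `malloc_stats` output before and after.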

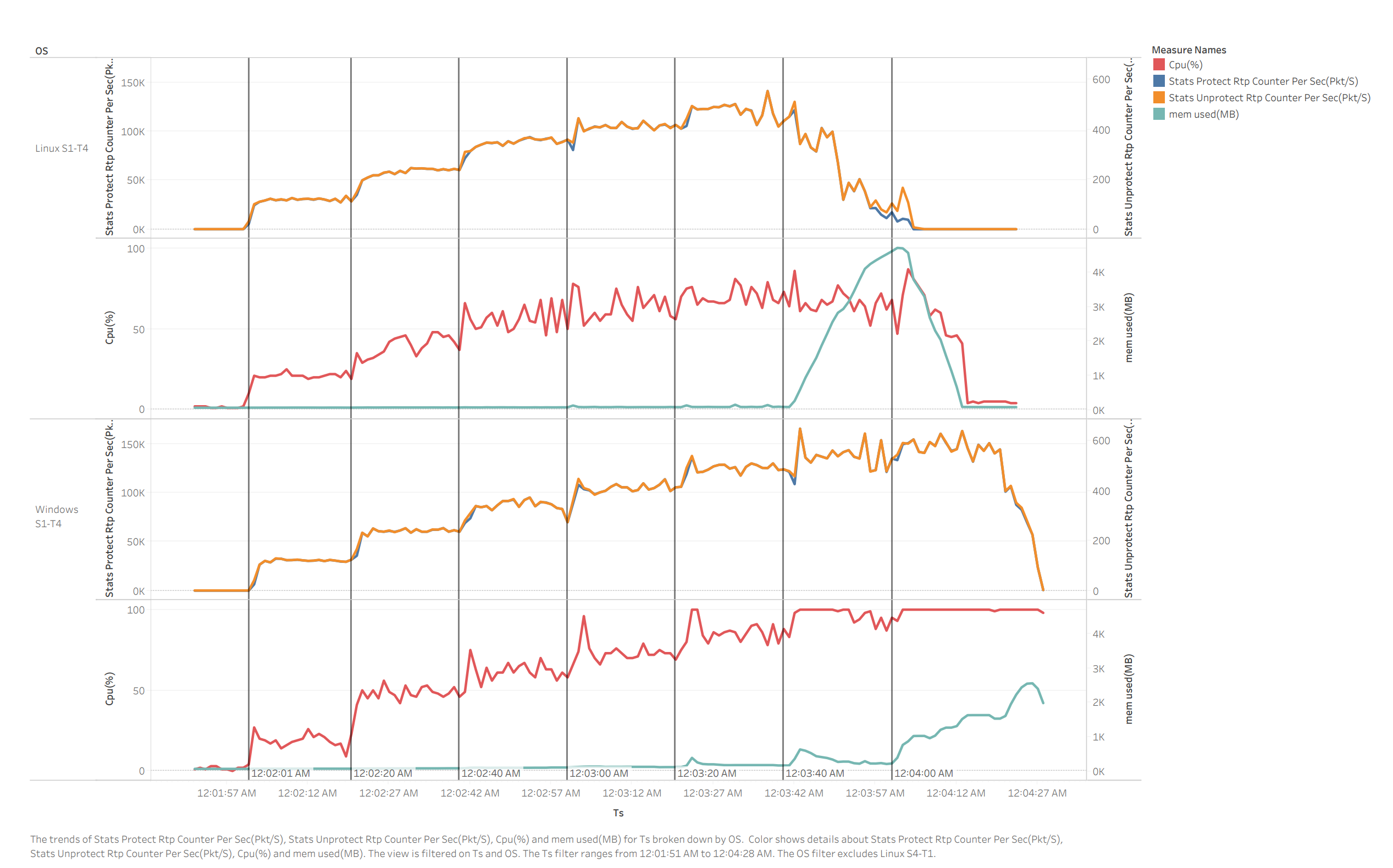

This is the result of a test with a real application. The server forwards UDP RTP video packets coming from 1/6 transmitters to 256 receivers. The test was run on the Google Cloud Platform LAN using Google virtual machines: one Windows and one Linux machine for the server, and 256 Windows machines for the clients. Both servers have 4 cores and 16 GB of RAM. Obviously the hypervisor could affect the results, but they are extremely reproducible. Asio is configured to use 1 io_context with 4 threads in both cases (I also tested 4 io_contexts with 1 thread each, but the results on Linux are even worse). Every 20 seconds one new client starts to transmit.

Both servers reached their limit at almost the same level, receiving about 600 packets per second from the transmitters and forwarding about 150K packets per second to the receivers. What I notice is that Windows uses the CPU better: it stays at 100% on all cores and the video on the receiver side continues to be smooth, even when the memory on the server starts growing because the application cannot keep up with the amount of data. On Linux the limit is reached earlier, with one fewer transmitter, and once it is reached the memory starts to grow immediately and the packet forwarding performance drops dramatically. The video on the receivers freezes immediately.

If I use multiple io_contexts with 1 thread each, as suggested, what I notice is that the CPU cores are used at 100% only intermittently and the overall result is not good.

I understand that with a complex real application the problem can be anywhere. That is why, with my example above, I am trying to reproduce this scenario using only one application and no sockets (I use timers and strands to reproduce the socket rx/tx behavior of the real application). The goal is to understand why the performance on Linux is different and to improve this part, if possible. It seems to me that the problem I get in the real world is very similar to the one reproduced with the example.

I totally agree that there is no memory leak in asio, but the memory growth seems to be a symptom of something that could be improved in some area of the code.