Why is the disk usage much larger than the available space displayed by the `df` command after disabling the ext4 journal?

Describe the bug

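After the ext4 journal is disabled on a volume provisioned by Ceph CSI, `df` reports a size and usage of 64Z for a 50G RBD image, while the available space is still shown as 50G.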

Environment details

- Image/version of Ceph CSI driver: cephcsi:v3.5.1

- Helm chart version:

- Kernel version:

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) uname -a

Linux k1 3.10.0-1127.el7.x86_64 #1 SMP Tue Mar 31 23:36:51 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

- Mounter used for mounting PVC (for cephFS it's `fuse` or `kernel`; for rbd it's `krbd` or `rbd-nbd`): krbd

- Kubernetes cluster version:

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) kubectl version

Client Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.7", GitCommit:"b56e432f2191419647a6a13b9f5867801850f969", GitTreeState:"clean", BuildDate:"2022-02-16T11:50:27Z", GoVersion:"go1.16.14", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.7", GitCommit:"b56e432f2191419647a6a13b9f5867801850f969", GitTreeState:"clean", BuildDate:"2022-02-16T11:43:55Z", GoVersion:"go1.16.14", Compiler:"gc", Platform:"linux/amd64"}

- Ceph cluster version:

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) ceph --version

ceph version 15.2.16 (d46a73d6d0a67a79558054a3a5a72cb561724974) octopus (stable)

Steps to reproduce

Steps to reproduce the behavior:

- Create a StorageClass (`csi-rbd-sc`, referenced by the PVC below).

- Then create a PVC and a test pod like the ones below:

➜ /root ☞ cat csi-rbd/examples/pvc.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 50Gi
  storageClassName: csi-rbd-sc

🍺 /root ☞ cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: csi-rbd-demo-pod
spec:
  containers:
    - name: web-server
      image: docker.io/library/nginx:latest
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc
        readOnly: false

- The following steps are executed on the Ceph cluster:

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) rbd ls -p pool-51312494-44b2-43bc-8ba1-9c4f5eda3287

csi-vol-ad0bba2a-49fc-11ed-8ab9-3a534777138b

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) rbd map pool-51312494-44b2-43bc-8ba1-9c4f5eda3287/csi-vol-ad0bba2a-49fc-11ed-8ab9-3a534777138b

/dev/rbd0

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sr0

vda

├─vda1 xfs a080444c-7927-49f7-b94f-e20f823bbc95 /boot

├─vda2 LVM2_member jDjk4o-AaZU-He1S-8t56-4YEY-ujTp-ozFrK5

│ ├─centos-root xfs 5e322b94-4141-4a15-ae29-4136ae9c2e15 /

│ └─centos-swap swap d59f7992-9027-407a-84b3-ec69c3dadd4e

└─vda3 LVM2_member Qn0c4t-Sf93-oIDr-e57o-XQ73-DsyG-pGI8X0

└─centos-root xfs 5e322b94-4141-4a15-ae29-4136ae9c2e15 /

vdb

vdc

rbd0 ext4 e381fa9f-9f94-43d1-8f3a-c2d90bc8de27

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) mount /dev/rbd0 /mnt/ext4

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) df -hT | egrep 'rbd|Type'

Filesystem Type Size Used Avail Use% Mounted on

/dev/rbd0 ext4 49G 53M 49G 1% /mnt/ext4

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) umount /mnt/ext4

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) tune2fs -o journal_data_writeback /dev/rbd0

tune2fs 1.46.5 (30-Dec-2021)

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) tune2fs -O "^has_journal" /dev/rbd0

tune2fs 1.46.5 (30-Dec-2021)

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) e2fsck -f /dev/rbd0

e2fsck 1.46.5 (30-Dec-2021)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/rbd0: 11/3276800 files (0.0% non-contiguous), 219022/13107200 blocks

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) mount /dev/rbd0 /mnt/ext4

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) df -hT | egrep 'rbd|Type'

Filesystem Type Size Used Avail Use% Mounted on

/dev/rbd0 ext4 64Z 64Z 50G 100% /mnt/ext4

Actual results

After disabling the ext4 journal, `df` reports disk usage far larger than the available space (and larger than the device itself):

🍺 /root/go/src/ceph/ceph-csi ☞ git:(devel) df -T | egrep 'rbd|Type'

Filesystem Type 1K-blocks Used Available Use% Mounted on

/dev/rbd0 ext4 73786976277711028224 73786976277659475512 51536328 100% /mnt/ext4
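These numbers are not random. The worked decoding below assumes the 4 KiB block size that mke2fs reported for the identical 13107200-block loop-device filesystem later in this thread, and that `df` computes Used as total minus free blocks. Under those assumptions, the total wrapped around an unsigned 64-bit counter while the free-block count stayed intact:

```math
\begin{aligned}
73786976277711028224\ \text{KiB} / 4 &= 18446744069427757056\ \text{blocks} \\
2^{64} - 18446744069427757056 &= 4281794560 = 2^{32} - 13172736 \\
13172736 &= 13107200 + 65536 \\
(73786976277711028224 - 73786976277659475512)\ \text{KiB} / 4 &= 12888178 = 13107200 - 219022 \\
2^{66}\ \text{KiB} = 73786976294838206464\ \text{KiB} &= 64\ \text{ZiB}
\end{aligned}
```

So the total equals 13107200 - (2^32 - 65536) taken modulo 2^64. That is the block count minus an overhead of 0xFFFF0000 blocks, and 65536 matches the journal size mke2fs reported (`Creating journal (65536 blocks)`). The free count matches what `e2fsck` printed, which is why Avail still looks sane. One plausible but unconfirmed reading is that a metadata-overhead figure went negative by exactly the journal size when the journal was removed. The thread does not establish where that value comes from. Because the total is just under 2^66 KiB, `df -h` prints 64Z.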

Expected behavior

`df` should report the disk usage normally, as it does before the journal is removed.
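To detect this condition automatically, you can compare the size `df` reports with the size of the backing block device. Below is a minimal bash sketch that assumes GNU `df` and util-linux `blockdev` (which usually needs root). The function names are hypothetical:

```shell
# Requires bash (process substitution), GNU df, and util-linux blockdev.

# size_exceeds_device KIB BYTES: succeeds if KIB kibibytes > BYTES bytes.
# awk does the math because values like 73786976277711028224 do not fit in
# bash's signed 64-bit integers; double precision is enough for this check.
size_exceeds_device() {
  awk -v kib="$1" -v bytes="$2" 'BEGIN { exit !(kib * 1024 > bytes) }'
}

# check_mount MOUNTPOINT: compare df's size with the backing device size.
check_mount() {
  local mnt=$1 dev size_kib dev_bytes
  # -P keeps each filesystem on one line, -k reports 1K blocks;
  # NR == 2 skips the header line.
  read -r dev size_kib < <(df -Pk "$mnt" | awk 'NR == 2 { print $1, $2 }')
  dev_bytes=$(blockdev --getsize64 "$dev") || return 2
  if size_exceeds_device "$size_kib" "$dev_bytes"; then
    echo "$mnt: df size ${size_kib}K exceeds $dev (${dev_bytes} bytes)"
    return 1
  fi
  echo "$mnt: ok (${size_kib}K on a ${dev_bytes}-byte device)"
}
```

For example, running `check_mount /mnt/ext4` after the last `mount` in the reproduction should flag the 73786976277711028224K size as larger than a 50 GiB (53687091200-byte) image.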

@microyahoo it looks like you are not doing anything with the cephcsi driver apart from creating the image; it would be better to ask this on the Ceph mailing list instead of in cephcsi.

cc @idryomov

OK, thanks.

This doesn't seem to have anything to do with RBD either. I suspect you would see the same behavior with a loop device (or any other device) on that kernel.

I tried it with a loop device on the local node, and it behaves normally. I also found something very strange: if I only create the PVC and then do the above operations, everything is normal, but if I use this PVC in a pod, the problem above occurs. I suspect that filesystem options set up by Kubernetes or Ceph CSI cause this problem. @idryomov

Did you supply the mkfs options that Ceph CSI hard-codes (`-m0 -Enodiscard,lazy_itable_init=1,lazy_journal_init=1`) when trying with a loop device?

> if I only create pvc, and then do the above operations, it is normal, but if I apply this pvc in a pod, the above problem will occur. I suspect that the file system options setup by k8s or csi caused this problem

Did you mount the filesystem before doing these operations when trying with a loop device? Applying the PVC does more than just mount of course, but that's the most obvious difference. Note that Ceph CSI may be passing some mount options as well.

> Did you supply mkfs options that Ceph CSI hard-codes: `-m0 -Enodiscard,lazy_itable_init=1,lazy_journal_init=1` when trying with a loop device?

Yes, I followed your commands, and it is normal, as shown below. @idryomov

[root@k1 ~]# truncate -s 50G backingfile

[root@k1 ~]# losetup -f --show backingfile

/dev/loop0

[root@k1 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 1024M 0 rom

vda 252:0 0 100G 0 disk

├─vda1 252:1 0 1G 0 part /boot

├─vda2 252:2 0 59G 0 part

│ ├─centos-root 253:0 0 93G 0 lvm /

│ └─centos-swap 253:1 0 6G 0 lvm

└─vda3 252:3 0 40G 0 part

└─centos-root 253:0 0 93G 0 lvm /

vdb 252:16 0 50G 0 disk

vdc 252:32 0 50G 0 disk

loop0 7:0 0 50G 0 loop

[root@k1 ~]# mkfs.ext4 -m0 -Enodiscard,lazy_itable_init=1,lazy_journal_init=1 /dev/loop0

mke2fs 1.46.5 (30-Dec-2021)

Creating filesystem with 13107200 4k blocks and 3276800 inodes

Filesystem UUID: 1a89454f-38c6-445a-a2b4-762ec64cbbd9

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624, 11239424

Allocating group tables: done

Writing inode tables: done

Creating journal (65536 blocks): done

Writing superblocks and filesystem accounting information: done

[root@k1 ~]# mount -o rw,relatime,discard,stripe=1024,data=ordered,_netdev /dev/loop0 /mnt/ext4/

[root@k1 ~]# df -hT | egrep "Type|ext"

Filesystem Type Size Used Avail Use% Mounted on

/dev/loop0 ext4 49G 24K 49G 1% /mnt/ext4

[root@k1 ~]# umount /mnt/ext4

[root@k1 ~]# tune2fs -o journal_data_writeback /dev/loop0

tune2fs 1.46.5 (30-Dec-2021)

[root@k1 ~]# tune2fs -O "^has_journal" /dev/loop0

tune2fs 1.46.5 (30-Dec-2021)

[root@k1 ~]# e2fsck /dev/loop0

e2fsck 1.46.5 (30-Dec-2021)

/dev/loop0: clean, 11/3276800 files, 219022/13107200 blocks

[root@k1 ~]# mount -o rw,relatime,discard,stripe=1024,data=ordered,_netdev /dev/loop0 /mnt/ext4/

[root@k1 ~]# df -hT | egrep "Type|ext"

Filesystem Type Size Used Avail Use% Mounted on

/dev/loop0 ext4 50G 24K 50G 1% /mnt/ext4
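You can capture the loop-device experiment above as a reusable script, which makes it easier to compare a host where the problem reproduces with one where it does not. Below is a bash sketch that assumes root, an existing mount point, and a scratch backing file (the default paths are illustrative). With `DRY_RUN=1`, it only prints the commands:

```shell
# Requires bash. Source this file, then call repro_loop.
# Set DRY_RUN=1 to print each command instead of running it.
run() {
  if [[ -n "${DRY_RUN:-}" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# repro_loop [BACKING_FILE] [MOUNT_POINT]
# The body is a subshell, so set -euo pipefail and the variables below
# stay confined to this call.
repro_loop() (
  set -euo pipefail
  backing=${1:-backingfile}
  mnt=${2:-/mnt/ext4}
  # Mount options copied from the transcript above.
  opts=rw,relatime,discard,stripe=1024,data=ordered,_netdev

  run truncate -s 50G "$backing"
  if [[ -n "${DRY_RUN:-}" ]]; then
    dev=/dev/loopN   # placeholder: losetup is skipped in dry-run mode
  else
    dev=$(losetup -f --show "$backing")
  fi

  # The mkfs options that Ceph CSI hard-codes, per the discussion above.
  run mkfs.ext4 -m0 -Enodiscard,lazy_itable_init=1,lazy_journal_init=1 "$dev"
  run mount -o "$opts" "$dev" "$mnt"
  run df -hT "$mnt"
  run umount "$mnt"

  # Drop the journal, then force a full check (exit code 1 = errors fixed).
  run tune2fs -o journal_data_writeback "$dev"
  run tune2fs -O '^has_journal' "$dev"
  run e2fsck -f "$dev" || [[ $? -le 1 ]]

  run mount -o "$opts" "$dev" "$mnt"
  run df -hT "$mnt"
)
```

Source the file, then run `DRY_RUN=1 repro_loop` to review the commands, or `repro_loop /tmp/backingfile /mnt/ext4` to execute them. The loop device stays attached afterwards; detach it with `losetup -d` when done.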

I created an RBD image through a Kubernetes PVC, then mapped, formatted, and mounted it manually. This also works normally:

[root@k1 ~]# rbd ls -p pool-51312494-44b2-43bc-8ba1-9c4f5eda3287

csi-vol-da7f0cdc-4a3a-11ed-8ab9-3a534777138b

[root@k1 ~]# lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sr0

vda

├─vda1 xfs a080444c-7927-49f7-b94f-e20f823bbc95 /boot

├─vda2 LVM2_member jDjk4o-AaZU-He1S-8t56-4YEY-ujTp-ozFrK5

│ ├─centos-root xfs 5e322b94-4141-4a15-ae29-4136ae9c2e15 /

│ └─centos-swap swap d59f7992-9027-407a-84b3-ec69c3dadd4e

└─vda3 LVM2_member Qn0c4t-Sf93-oIDr-e57o-XQ73-DsyG-pGI8X0

└─centos-root xfs 5e322b94-4141-4a15-ae29-4136ae9c2e15 /

vdb

vdc

[root@k1 ~]# rbd map pool-51312494-44b2-43bc-8ba1-9c4f5eda3287/csi-vol-da7f0cdc-4a3a-11ed-8ab9-3a534777138b

/dev/rbd0

[root@k1 ~]# mkfs.ext4 -m0 -Enodiscard,lazy_itable_init=1,lazy_journal_init=1 /dev/rbd0

mke2fs 1.46.5 (30-Dec-2021)

Creating filesystem with 13107200 4k blocks and 3276800 inodes

Filesystem UUID: 33ca621a-1da3-4997-9acc-f0f41d063e81

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624, 11239424

Allocating group tables: done

Writing inode tables: done

Creating journal (65536 blocks): done

Writing superblocks and filesystem accounting information: done

[root@k1 ~]# lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sr0

vda

├─vda1 xfs a080444c-7927-49f7-b94f-e20f823bbc95 /boot

├─vda2 LVM2_member jDjk4o-AaZU-He1S-8t56-4YEY-ujTp-ozFrK5

│ ├─centos-root xfs 5e322b94-4141-4a15-ae29-4136ae9c2e15 /

│ └─centos-swap swap d59f7992-9027-407a-84b3-ec69c3dadd4e

└─vda3 LVM2_member Qn0c4t-Sf93-oIDr-e57o-XQ73-DsyG-pGI8X0

└─centos-root xfs 5e322b94-4141-4a15-ae29-4136ae9c2e15 /

vdb

vdc

rbd0 ext4 33ca621a-1da3-4997-9acc-f0f41d063e81

[root@k1 ~]# mount -o rw,relatime,discard,stripe=1024,data=ordered,_netdev /dev/rbd0 /mnt/ext4/

[root@k1 ~]# df -hT | egrep "Type|ext"

Filesystem Type Size Used Avail Use% Mounted on

/dev/rbd0 ext4 49G 24K 49G 1% /mnt/ext4

[root@k1 ~]# umount /mnt/ext4

[root@k1 ~]# tune2fs -o journal_data_writeback /dev/rbd0

tune2fs 1.46.5 (30-Dec-2021)

[root@k1 ~]# tune2fs -O "^has_journal" /dev/rbd0

tune2fs 1.46.5 (30-Dec-2021)

[root@k1 ~]# e2fsck -f /dev/rbd0

e2fsck 1.46.5 (30-Dec-2021)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/rbd0: 11/3276800 files (0.0% non-contiguous), 219022/13107200 blocks

[root@k1 ~]# mount -o rw,relatime,discard,stripe=1024,data=ordered,_netdev /dev/rbd0 /mnt/ext4/

[root@k1 ~]# df -hT | egrep 'rbd|Type'

Filesystem Type Size Used Avail Use% Mounted on

/dev/rbd0 ext4 50G 24K 50G 1% /mnt/ext4

OK, it sounds like the issue is caused by something else that happens as part of "applying the PVC", not the mount itself. @Madhu-1 can you investigate?

@idryomov Thanks for checking, I will look into it.

This problem can be reproduced reliably when the PVC is used in a pod:

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) rbd map pool-51312494-44b2-43bc-8ba1-9c4f5eda3287/csi-vol-8a32112e-4a3e-11ed-8ab9-3a534777138b

/dev/rbd0

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sr0

vda

├─vda1 xfs a080444c-7927-49f7-b94f-e20f823bbc95 /boot

├─vda2 LVM2_member jDjk4o-AaZU-He1S-8t56-4YEY-ujTp-ozFrK5

│ ├─centos-root xfs 5e322b94-4141-4a15-ae29-4136ae9c2e15 /

│ └─centos-swap swap d59f7992-9027-407a-84b3-ec69c3dadd4e

└─vda3 LVM2_member Qn0c4t-Sf93-oIDr-e57o-XQ73-DsyG-pGI8X0

└─centos-root xfs 5e322b94-4141-4a15-ae29-4136ae9c2e15 /

vdb

vdc

rbd0 ext4 618f048e-a7f6-47fb-90e5-fb3afd9248a3

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) mount /dev/rbd0 /mnt/ext4

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) df -hT | egrep 'rbd|Type'

Filesystem Type Size Used Avail Use% Mounted on

/dev/rbd0 ext4 49G 53M 49G 1% /mnt/ext4

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) umount /mnt/ext4

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) tune2fs -o journal_data_writeback /dev/rbd0

tune2fs 1.46.5 (30-Dec-2021)

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) tune2fs -O "^has_journal" /dev/rbd0

tune2fs 1.46.5 (30-Dec-2021)

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) e2fsck -f /dev/rbd0

e2fsck 1.46.5 (30-Dec-2021)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/rbd0: 11/3276800 files (0.0% non-contiguous), 219022/13107200 blocks

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) df -hT | egrep 'rbd|Type'

Filesystem Type Size Used Avail Use% Mounted on

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) mount /dev/rbd0 /mnt/ext4

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) df -hT | egrep 'rbd|Type'

Filesystem Type Size Used Avail Use% Mounted on

/dev/rbd0 ext4 64Z 64Z 50G 100% /mnt/ext4

Here is the relevant part of dmesg:

🍺 /root/go/src/deeproute.ai/smd ☞ git:(pool) dmesg -T | grep rbd

[Wed Oct 12 23:02:08 2022] rbd: rbd0: capacity 53687091200 features 0x1

[Wed Oct 12 23:02:17 2022] EXT4-fs (rbd0): mounted filesystem with ordered data mode. Opts: (null)

[Wed Oct 12 23:03:06 2022] EXT4-fs (rbd0): mounted filesystem without journal. Opts: (null)

Thanks for your advice and help @idryomov @Madhu-1

[🎩︎]mrajanna@fedora rbd $]kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

rbd-pvc Bound pvc-3e8c8292-da02-4ddd-8112-186811b9c992 1Gi RWO rook-ceph-block 2s

[🎩︎]mrajanna@fedora rbd $]kubectl create -f pod.yaml

pod/csirbd-demo-pod created

[🎩︎]mrajanna@fedora rbd $]kubectl exec -it csirbd-demo-pod -- sh

# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 28G 4.3G 22G 17% /

tmpfs 64M 0 64M 0% /dev

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/vda1 28G 4.3G 22G 17% /etc/hosts

shm 64M 0 64M 0% /dev/shm

/dev/rbd0 976M 2.6M 958M 1% /var/lib/www/html

tmpfs 3.9G 12K 3.9G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 2.0G 0 2.0G 0% /proc/acpi

tmpfs 2.0G 0 2.0G 0% /proc/scsi

tmpfs 2.0G 0 2.0G 0% /sys/firmware

#

I tested with cephcsi 3.7.1 and don't see any problem; I have never seen this issue in cephcsi.

For a 50Gi PVC:

[🎩︎]mrajanna@fedora rbd $]kubectl exec -it csirbd-demo-pod -- sh

# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 28G 4.3G 22G 17% /

tmpfs 64M 0 64M 0% /dev

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/vda1 28G 4.3G 22G 17% /etc/hosts

shm 64M 0 64M 0% /dev/shm

/dev/rbd0 49G 53M 49G 1% /var/lib/www/html

tmpfs 3.9G 12K 3.9G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 2.0G 0 2.0G 0% /proc/acpi

tmpfs 2.0G 0 2.0G 0% /proc/scsi

tmpfs 2.0G 0 2.0G 0% /sys/firmware

# exit

[🎩︎]mrajanna@fedora rbd $]kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

rbd-pvc Bound pvc-b75cdad1-01fc-40f5-9ed0-a7a8e0e89fe7 50Gi RWO rook-ceph-block 68s

Hi @Madhu-1, to reproduce it, disable the ext4 journal after the pod has been created:

- Create the PVC and the pod.

- Delete the pod, because `e2fsck` needs the disk not to be in use.

- Map the RBD image on the Ceph cluster.

- Disable the ext4 journal, then run `e2fsck`.

- Mount the block device on the Ceph cluster, or recreate the pod.

- Check the output of `df`.
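You can sketch the steps above as bash functions. This sketch assumes `kubectl` access and a Ceph node with the `rbd` CLI and e2fsprogs (run as root). It also assumes the pod has been deleted before the image is mapped. The image, manifest, and pod names passed in are placeholders. The `tune2fs -o journal_data_writeback` call mirrors the reporter's transcripts; the later test in this thread ran only `tune2fs -O "^has_journal"`.

```shell
# Requires bash. Set DRY_RUN=1 to print each command instead of running it.
run() {
  if [[ -n "${DRY_RUN:-}" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Step 2 (kubectl host): delete the pod so the volume is unmounted.
stop_pod() {
  run kubectl delete pod "$1" --wait=true
}

# Steps 3-4 (Ceph node, as root): map IMAGE (POOL/NAME), drop the journal,
# run a forced fsck, and unmap again. The subshell body keeps set -e local.
remove_journal() (
  set -euo pipefail
  image=$1
  if [[ -n "${DRY_RUN:-}" ]]; then
    dev=/dev/rbdN   # placeholder: rbd map is skipped in dry-run mode
  else
    dev=$(rbd map "$image")   # rbd map prints the new device path
  fi
  run tune2fs -o journal_data_writeback "$dev"   # as in the reporter's runs
  run tune2fs -O '^has_journal' "$dev"
  run e2fsck -f "$dev" || [[ $? -le 1 ]]         # 1 = errors corrected
  run rbd unmap "$dev"
)

# Steps 5-6 (kubectl host): recreate the pod from MANIFEST, check df in POD.
check_in_pod() {
  run kubectl create -f "$1"
  run kubectl wait --for=condition=Ready "pod/$2" --timeout=120s
  run kubectl exec "$2" -- df -h /var/lib/www/html
}
```

For example, `DRY_RUN=1 remove_journal POOL/IMAGE` prints the sequence without touching the image. This makes it easy to compare against the transcripts in this thread before running it for real.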

@microyahoo why does the ext4 journal need to be disabled?

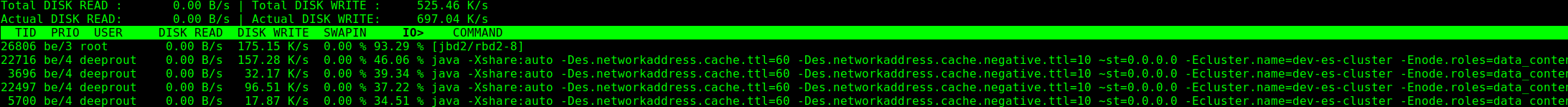

Because the jbd2 process causes high I/O, I want to disable the ext4 journal to reduce it.

Below is the test I did; I hope these are the steps you follow to disable journaling:

[🎩︎]mrajanna@fedora rbd $]kubectl exec -it csirbd-demo-pod -- sh

# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 28G 4.3G 22G 17% /

tmpfs 64M 0 64M 0% /dev

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/vda1 28G 4.3G 22G 17% /etc/hosts

shm 64M 0 64M 0% /dev/shm

/dev/rbd0 49G 53M 49G 1% /var/lib/www/html

tmpfs 3.9G 12K 3.9G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 2.0G 0 2.0G 0% /proc/acpi

tmpfs 2.0G 0 2.0G 0% /proc/scsi

tmpfs 2.0G 0 2.0G 0% /sys/firmware

# exit

[🎩︎]mrajanna@fedora rbd $]kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

rbd-pvc Bound pvc-b75cdad1-01fc-40f5-9ed0-a7a8e0e89fe7 50Gi RWO rook-ceph-block 68s

[🎩︎]mrajanna@fedora ceph-csi $]kubectl delete po csirbd-demo-pod

pod "csirbd-demo-pod" deleted

sh-4.4# rbd ls replicapool

csi-vol-ab596f94-4a44-11ed-8af4-d2297377c19c

sh-4.4# rbd map replicapool/csi-vol-ab596f94-4a44-11ed-8af4-d2297377c19c

/dev/rbd0

sh-4.4# tune2fs -O "^has_journal" /dev/rbd0

tune2fs 1.45.6 (20-Mar-2020)

sh-4.4# e2fsck -f /dev/rbd0

e2fsck 1.45.6 (20-Mar-2020)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/rbd0: 11/3276800 files (0.0% non-contiguous), 219022/13107200 blocks

sh-4.4#

sh-4.4# rbd unmap /dev/rbd0

sh-4.4# exit

exit

[🎩︎]mrajanna@fedora rook $]kubectl create -f deploy/examples/csi/rbd/pod.yaml

pod/csirbd-demo-pod created

[🎩︎]mrajanna@fedora rook $]kubectl get po

NAME READY STATUS RESTARTS AGE

csirbd-demo-pod 1/1 Running 0 5s

[🎩︎]mrajanna@fedora rook $]kubectl exec -it csirbd-demo-pod -- sh

# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 28G 4.4G 22G 17% /

tmpfs 64M 0 64M 0% /dev

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/vda1 28G 4.4G 22G 17% /etc/hosts

shm 64M 0 64M 0% /dev/shm

/dev/rbd0 50G 53M 50G 1% /var/lib/www/html

tmpfs 3.9G 12K 3.9G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 2.0G 0 2.0G 0% /proc/acpi

tmpfs 2.0G 0 2.0G 0% /proc/scsi

tmpfs 2.0G 0 2.0G 0% /sys/firmware

#

sh-4.4# dmesg | grep EXT4

[ 4.682295] EXT4-fs (vda1): mounted filesystem with ordered data mode. Opts: (null)

[ 449.667910] EXT4-fs (rbd0): mounted filesystem with ordered data mode. Opts: (null)

[ 623.732915] EXT4-fs (rbd0): mounted filesystem with ordered data mode. Opts: (null)

[58541.333193] EXT4-fs (rbd0): mounted filesystem without journal. Opts: (null)

Isn't the journal used to write data to the ext4 filesystem? If you disabled the journal, wouldn't another process do the same I/O? Or maybe the workload is very metadata-intensive; in that case the app might benefit from some storage optimizations...

If you really want to disable the journal, you could consider using ext2 as the filesystem type instead. ext2 does not have journalling at all.

We might want to support a parameter in the StorageClass that contains options for mkfs; that would be an alternative way to disable journalling for certain volumes.
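Either alternative amounts to creating the filesystem without a journal, instead of removing it afterwards with `tune2fs`. Below is a bash sketch for trying this outside Ceph CSI (for example, on a loop device). The function name and the `DRY_RUN` switch are hypothetical, and whether Ceph CSI can pass such options is exactly the open question raised above:

```shell
# Requires bash. DEV must be an unused block device or image file; this
# destroys any data on it. Set DRY_RUN=1 to print the command instead.
run() {
  if [[ -n "${DRY_RUN:-}" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# mkfs_no_journal FSTYPE DEV
mkfs_no_journal() {
  local fstype=$1 dev=$2
  case $fstype in
    ext4)
      # The Ceph CSI mkfs options, but with the has_journal feature cleared;
      # lazy_journal_init is dropped because there is no journal to init.
      run mkfs.ext4 -m0 -O '^has_journal' -Enodiscard,lazy_itable_init=1 "$dev" ;;
    ext2)
      # ext2 has no journal at all.
      run mkfs.ext2 -m0 -Enodiscard "$dev" ;;
    *)
      echo "unsupported filesystem type: $fstype" >&2
      return 2 ;;
  esac
}

# Afterwards, "has_journal" should be absent from the feature list:
#   dumpe2fs -h "$dev" | grep 'Filesystem features'
```

This avoids the offline `tune2fs`/`e2fsck` step entirely. However, it would only help in a CSI context if the driver exposed mkfs options, which it did not do at the time of this discussion.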

Thank you for your investigation. I have two environments where this reproduces reliably. I am not sure whether it is related to the system. @Madhu-1

[root@node01 smd]# uname -a

Linux node01 3.10.0-1160.45.1.el7.x86_64 #1 SMP Wed Oct 13 17:20:51 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

🍺 /root ☞ uname -a

Linux k1 3.10.0-1127.el7.x86_64 #1 SMP Tue Mar 31 23:36:51 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

Thanks for your advice, I will try it. @nixpanic

This issue has been automatically marked as stale because it has not had recent activity. It will be closed in a week if no further activity occurs. Thank you for your contributions.

This issue has been automatically closed due to inactivity. Please re-open if this still requires investigation.