elastic-ci-stack-for-aws

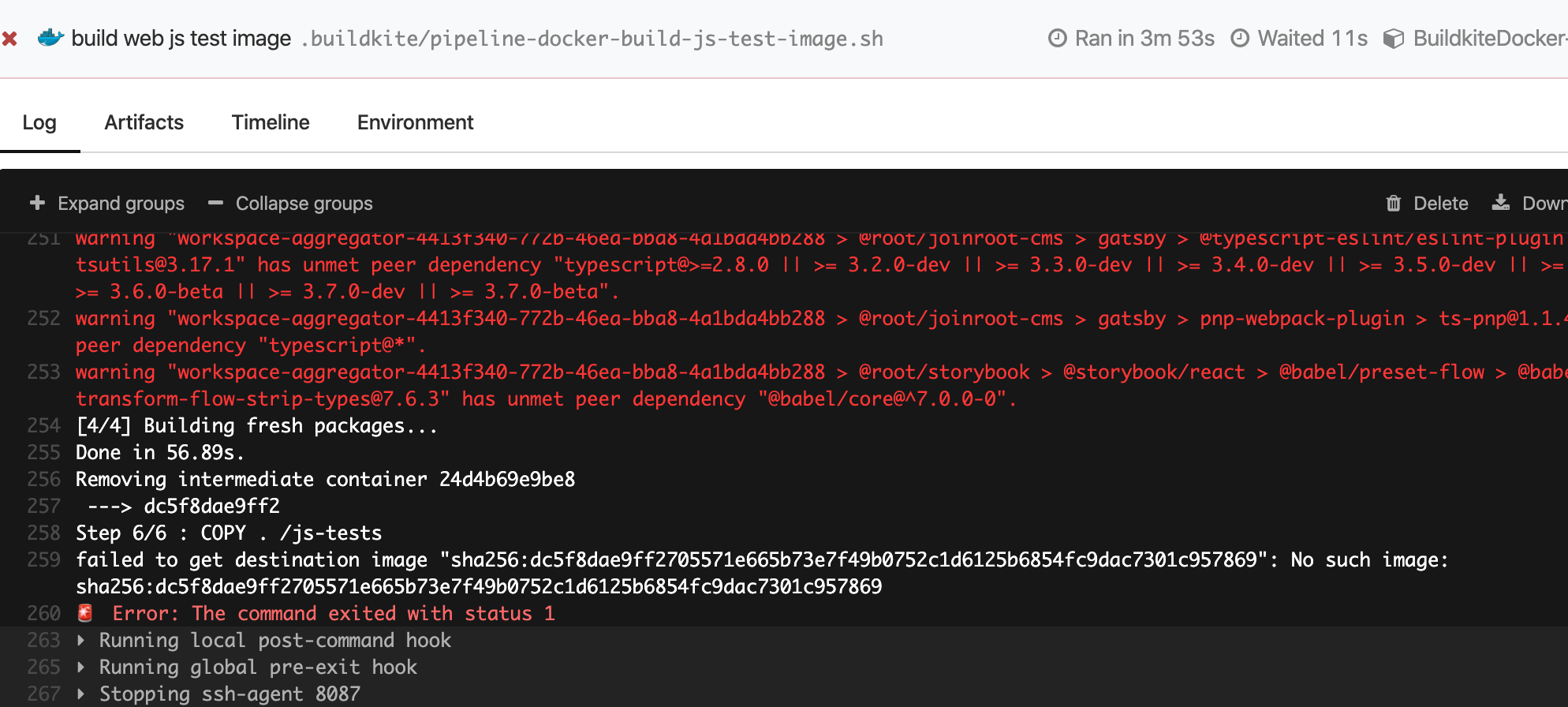

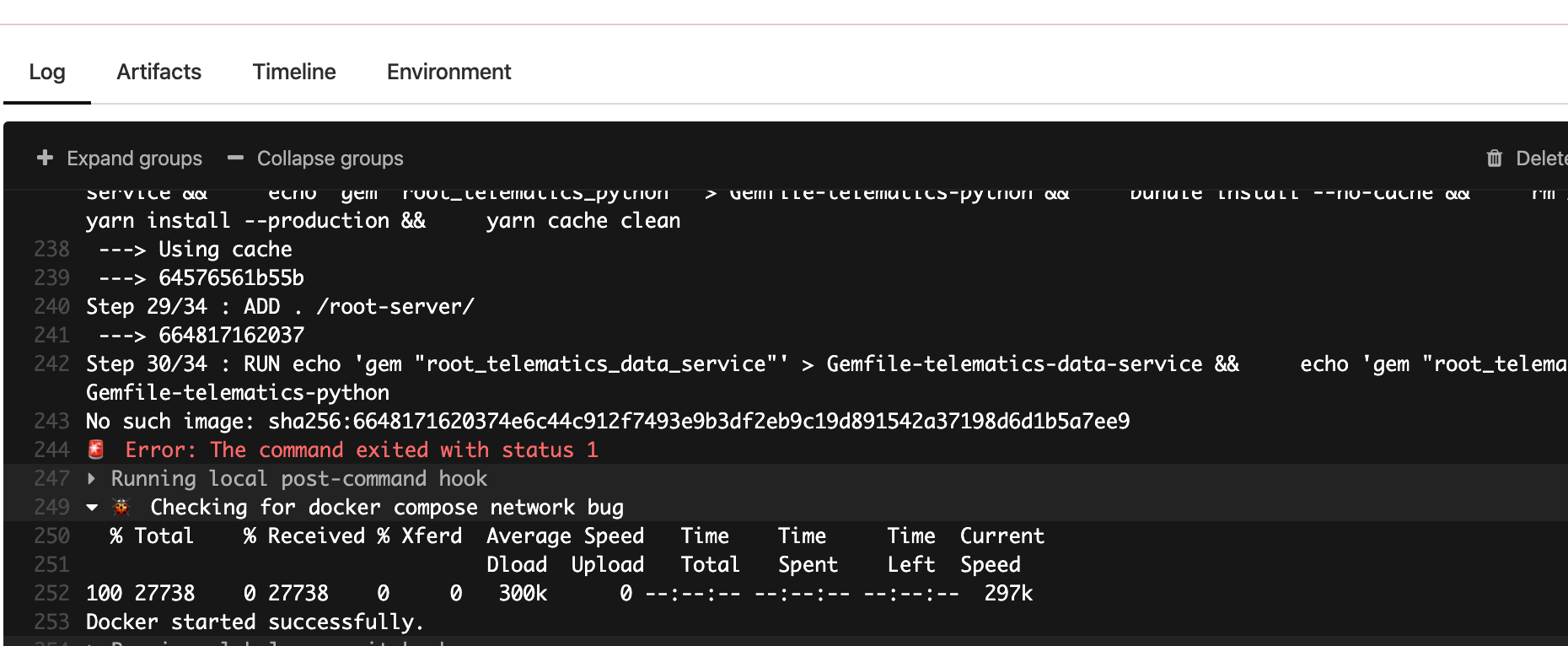

Docker images pruned during builds cause failures with `No such image: sha256:`, `failed to get digest sha256:`, `failed to get destination image`, and `unable to find image sha256`

Docker images pruned during build

After some initial research, we found that the hourly cron job that prunes Docker images was causing our builds to fail with "No such image: sha256:" and related errors.

We gathered the job_failed_at timestamps of the failed jobs and found that they were all exactly one hour apart and fell at the start of the hour. When we retry a failed job, it passes without any issue.
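The correlation described above can be checked with a small script. This is only a sketch: the sample timestamps are made up for illustration, the five-minute tolerance is an assumption, and the function names are hypothetical.

```python
from datetime import datetime, timedelta


def near_top_of_hour(ts: datetime, tolerance: timedelta = timedelta(minutes=5)) -> bool:
    """Return True if ts falls within `tolerance` after the start of its hour."""
    top = ts.replace(minute=0, second=0, microsecond=0)
    return ts - top <= tolerance


def hourly_pattern(timestamps: list[str]) -> bool:
    """Return True if every ISO 8601 timestamp is near the top of an hour."""
    parsed = [datetime.fromisoformat(t) for t in timestamps]
    return bool(parsed) and all(near_top_of_hour(t) for t in parsed)


# Hypothetical job_failed_at values, for illustration only.
sample = ["2023-08-01T10:00:41", "2023-08-01T11:01:12", "2023-08-01T12:00:07"]
print(hourly_pattern(sample))
```

If failures from many builds all pass this check, a job scheduled at the top of the hour is a likely suspect.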

Possible solution

If there is a way to set DOCKER_PRUNE_UNTIL to 2h, it might be a good stopgap for now.
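To illustrate what a longer DOCKER_PRUNE_UNTIL would change, here is a minimal sketch of how a cleanup job might turn that variable into a `docker image prune` invocation. It is not the stack's actual script: the `build_prune_command` helper and the `1h` fallback are assumptions made for the example. Only the `--all`, `--force`, and `--filter until=...` flags are standard Docker CLI options.

```python
import os


def build_prune_command(env=None, default_until="1h"):
    """Build the argv for an image prune limited to images older than `until`.

    `default_until` is a placeholder value, not the stack's real default.
    """
    env = os.environ if env is None else env
    until = env.get("DOCKER_PRUNE_UNTIL", default_until)
    return ["docker", "image", "prune", "--all", "--force", "--filter", f"until={until}"]


# Print the command instead of running it, so the sketch has no side effects.
print(" ".join(build_prune_command({"DOCKER_PRUNE_UNTIL": "2h"})))
# prints: docker image prune --all --force --filter until=2h
```

With `until=2h`, only images created more than two hours ago are eligible for removal, which widens the safety window for builds that are in progress when the cleanup runs.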

Alternatively, the enhancement suggested here would also work. Is there a timeline for that enhancement, and would it fix the above issue for us? Overall, this is costing us 2 minutes of our total build time, and we would like to find a resolution as soon as possible. We would be willing to discuss a more permanent solution to the problem as well.

Buildkite logs

| failed to get destination image "sha256:" | No such image: sha256: |
|---|---|

@lox Is this something you are aware of? We would like to work with you in resolving this issue.

@TejuGupta I have put up with this for a long time, and I finally discovered that the `docker build` command generates temporary layers while it runs, and these layers are cleaned up by the `docker image prune` command. Even when the filter parameter is specified, it does not prevent the temporary layers from being cleaned up.

UPDATE: I have tested docker 24.0.5. The bug seems to be fixed.
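For anyone who wants to check whether their agents already run a Docker version at or above the one tested here, a rough sketch follows. Treating 24.0.5 as the threshold is an assumption based only on the observation above, not a confirmed fix version, and both helper names are hypothetical.

```python
def parse_version(text):
    """Parse a version such as '24.0.5' or 'v24.0.5-rc1' into a tuple of ints."""
    core = text.strip().lstrip("v").split("-")[0].split("+")[0]
    return tuple(int(part) for part in core.split(".")[:3])


def has_reported_fix(version, fixed_in=(24, 0, 5)):
    """Return True if `version` is at least the version reported as working."""
    return parse_version(version) >= fixed_in


print(has_reported_fix("24.0.5"))
print(has_reported_fix("23.0.6"))
```

The version string can be obtained with `docker version --format '{{.Server.Version}}'`.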

Hi, we've considered changing DOCKER_PRUNE_UNTIL, but we think it would not completely solve the issue, and it would make the cleanup less effective at freeing disk space.

We think what is needed is some kind of quarantine mode for the agent, so that the cleanup does not occur while jobs are running. We are considering building such a feature.
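As a rough illustration of the idea, and not a description of any planned implementation, the sketch below skips the cleanup whenever a job has left a marker file in a shared directory. The marker-directory convention and the names `jobs_running` and `maybe_prune` are hypothetical.

```python
import tempfile
from pathlib import Path


def jobs_running(marker_dir: Path) -> bool:
    """Return True if any job marker file exists in marker_dir."""
    return marker_dir.is_dir() and any(marker_dir.iterdir())


def maybe_prune(marker_dir: Path, prune) -> bool:
    """Call prune() only when no job is running; return True if it ran."""
    if jobs_running(marker_dir):
        return False
    prune()
    return True


# Demonstration with a temporary directory and a stand-in prune function.
with tempfile.TemporaryDirectory() as tmp:
    markers = Path(tmp)
    calls = []
    (markers / "job-123").touch()  # a job starts and creates its marker
    print(maybe_prune(markers, lambda: calls.append("pruned")))  # skipped
    (markers / "job-123").unlink()  # the job finishes and removes its marker
    print(maybe_prune(markers, lambda: calls.append("pruned")))  # runs
```

This check is racy: a job could start between the check and the prune, so a real feature would need a lock shared by the agent and the cleanup job, or would have to stop the agent from accepting work while the cleanup runs.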