Optimize "Best" Snapshot

Describe what you are trying to accomplish and why in non-technical terms

I want the ability to disable snapshot updates, so that the snapshot displayed for an event is the one that triggered that event. This would have two benefits. First, the image that triggered the event would be easy to view in the "Events" tab of the UI. Second, it would avoid confusion when an object triggers an event and then leaves all required zones. Today the snapshot can be updated to an image of that object outside the required zones, which makes it unclear why the event was triggered unless you look at the clip to see what happened.

Describe the solution you'd like

I would like a Boolean (true/false) property in the Frigate snapshots config (something like keep_best or keep_first) that chooses between keeping the best snapshot (the current functionality) and keeping the snapshot of the image that triggered the event.
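A minimal sketch of how such an option could choose between the two behaviors. The `keep_first` flag, the `Frame` fields, and the "best" stand-in (highest score, larger area breaking ties) are hypothetical illustrations, not Frigate's actual configuration or scoring. The frames passed in are assumed to start at the frame that triggered the event.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Frame:
    frame_time: float  # seconds
    score: float       # detection confidence, 0..1
    area: int          # bounding-box area in pixels


@dataclass
class SnapshotConfig:
    # Hypothetical option: True keeps the frame that created the event,
    # False keeps the current "best" behavior.
    keep_first: bool = False


def choose_snapshot(frames: list[Frame], config: SnapshotConfig) -> Optional[Frame]:
    """Pick the snapshot for one tracked object from its frames in time order."""
    if not frames:
        return None
    if config.keep_first:
        # Freeze the snapshot on the frame that triggered the event.
        return frames[0]
    # Stand-in for "best": highest score, larger area breaks ties.
    return max(frames, key=lambda f: (f.score, f.area))
```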

I think this is what you are getting at:

As a user, I need to be able to determine where bounding boxes were located during the event so that I can adjust my filters and zones to avoid false positives.

I am suggesting a feature that improves the user experience by making it quick and easy to identify, in the UI, the object that triggered an event. Frigate currently uses a "Best" image approach, selecting either the image with the highest score or the one that reaches the "best_image_timeout". However, I believe it would be more useful to let the user choose whether the snapshot in the Events tab is the "Best" image or the "First" image, the one that initiated the event. This could be done with a configurable parameter.
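A simplified sketch of the greedy "Best" rule as described in this thread: a frame replaces the current best if it is better, or once the best_image_timeout has passed. The score margin of 0.05 and the 110% area threshold are assumptions, and this approximates rather than reproduces Frigate's code. It shows how the timeout can promote a worse, later frame, such as a vehicle that is already leaving the zone.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    frame_time: float  # seconds
    score: float       # detection confidence, 0..1
    area: int          # bounding-box area in pixels


def is_better(current: Candidate, new: Candidate) -> bool:
    # Assumed thresholds: a score more than 0.05 higher, or an area above
    # 110% of the current best.
    return new.score > current.score + 0.05 or new.area > current.area * 1.1


def track_best(frames: list[Candidate], best_image_timeout: float) -> Candidate:
    """Greedy, frame-by-frame "best" selection (simplified sketch)."""
    best = frames[0]
    for new in frames[1:]:
        if is_better(best, new):
            best = new
        elif new.frame_time - best.frame_time > best_image_timeout:
            # The current best is stale, so the newest frame replaces it
            # even though it is not better.
            best = new
    return best


# In the zone at t=0, still in the zone at t=2, leaving the zone at t=6.
frames = [
    Candidate(0.0, 0.90, 10_000),
    Candidate(2.0, 0.86, 9_500),
    Candidate(6.0, 0.60, 4_000),
]
print(track_best(frames, best_image_timeout=5.0).frame_time)  # 6.0
```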

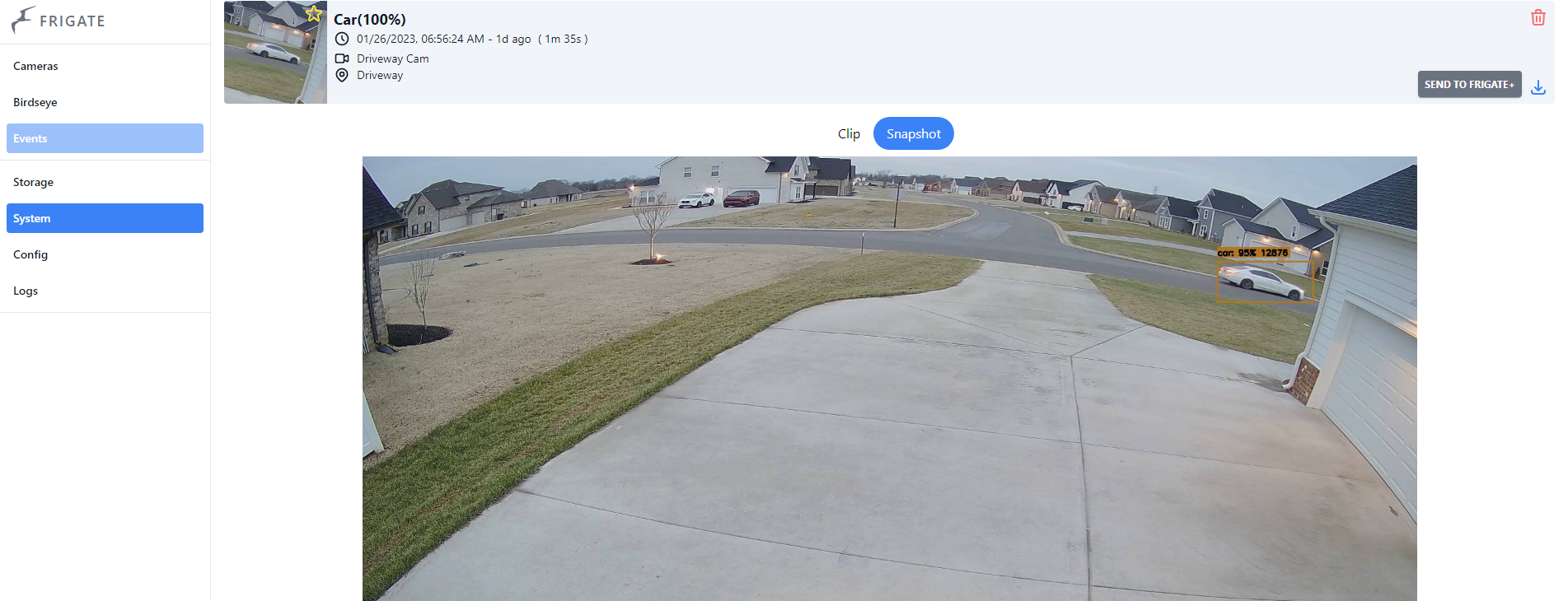

An example:

As depicted above, the vehicle was captured entering my driveway zone, as confirmed by the video clip. However, the snapshot selected as the "Best" image was of the vehicle exiting the zone, already outside my designated areas. This makes it hard for users scrolling through the UI to review events, because the snapshot does not show the object inside the zones. I am suggesting a feature that provides a more relevant snapshot of the event, specifically one that captures the object within the designated zones.

The snapshot that I would have expected to see, based on the event, is the one depicted here:

As the image above, taken from the video clip, shows, a vehicle entering my driveway zone was clearly the triggering event. This image illustrates what happened and makes the event easy to understand.

@jsbowling42 The point being made above is that a single "first" snapshot won't be the best way to solve this problem. Instead, we could create a feature that saves the MQTT payloads sent for each event. Then we could accurately reconstruct the event at those frames with what Frigate saw, rather than the approximation the current approach gives.
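A rough sketch of the payload-recording idea: keep every event message per tracked object so the box at any frame time can be looked up later. The payload shape used here (an "after" object carrying "id", "frame_time" and "box") is an assumption about Frigate's MQTT event messages, and `EventRecorder` and `box_at` are hypothetical names. Connecting `on_message` to an actual MQTT client is left out.

```python
import json
from bisect import bisect_right
from collections import defaultdict
from typing import Optional


class EventRecorder:
    """Keep every event payload so boxes can be replayed later (hypothetical)."""

    def __init__(self) -> None:
        # event id -> list of (frame_time, box), assumed to arrive in time order
        self._history: dict[str, list[tuple[float, list[int]]]] = defaultdict(list)

    def on_message(self, payload: bytes) -> None:
        # Assumed payload shape: {"type": ..., "after": {"id", "frame_time", "box"}}
        msg = json.loads(payload)
        after = msg["after"]
        self._history[after["id"]].append((after["frame_time"], after["box"]))

    def box_at(self, event_id: str, frame_time: float) -> Optional[list[int]]:
        """Return the last box reported at or before frame_time, or None."""
        samples = self._history.get(event_id, [])
        times = [t for t, _ in samples]
        i = bisect_right(times, frame_time)
        return samples[i - 1][1] if i else None
```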

@NickM-27 When I say 'first', I mean capturing the snapshot at the moment the event is triggered, which gives a clear and accurate representation of the event.

@NickM-27 Could you please provide more information about your proposed solution? I am unsure how Frigate's backend processes are involved. Specifically, why wouldn't it be a valid solution to use the initial image at the timestamp of the "new" event, after it has passed Frigate's filtering that determines whether it is a valid event?

"event" actually means "tracked object". The "first" snapshot of a tracked object will be wherever it was first seen regardless of the zones.
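The difference between the "first" frame of a tracked object and the frame where it first satisfies the required zones can be sketched as follows. The `TrackedFrame` class and its `current_zones` field are assumptions for illustration, as is the rule that being inside any one required zone is enough to qualify.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TrackedFrame:
    frame_time: float
    # Zones the object is inside on this frame (assumed field name).
    current_zones: list[str] = field(default_factory=list)


def first_seen_frame(frames: list[TrackedFrame]) -> Optional[TrackedFrame]:
    # The "first" snapshot: wherever the object was first seen, zones ignored.
    return frames[0] if frames else None


def zone_entry_frame(
    frames: list[TrackedFrame], required_zones: list[str]
) -> Optional[TrackedFrame]:
    """First frame on which the object satisfies the required zones."""
    required = set(required_zones)
    for frame in frames:
        # Assumption: no required zones means every frame qualifies, and
        # being inside any one required zone is enough.
        if not required or required & set(frame.current_zones):
            return frame
    return None
```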

The required zones determine whether or not the video related to the tracked object should be retained. I think what you are suggesting is that the moment the tracked object meets the criteria to be saved as an event is actually the "best" snapshot.

Based on that, it's not really about avoiding false positives as I had assumed. Now I think you are saying:

As a user, I want the snapshot to demonstrate how the tracked object entered the zones required to store an event so I can more easily understand why the event was created.

The problem with this approach is it doesn't work well for situations where a person enters my front yard and approaches my door. In that situation, I don't want the snapshot to be the person when they are far away. I want it to be when they are standing in front of the door so I can better recognize them. Similarly for cars entering my driveway, I want the snapshot to be when the car gets closer and the license plate is more readable.

Is the frame the tracked object meets criteria to store an event really the "best" frame? Future versions will be able to select the best frame for a person object as the one with the largest overlapping face detection.
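One way the "largest overlapping face detection" idea could be expressed, as a hypothetical sketch: for each frame, measure how much of each face box lies inside the person box and keep the frame with the largest overlap. The (x1, y1, x2, y2) box format and the function names are assumptions for illustration, not Frigate's implementation.

```python
# Boxes are assumed to be (x1, y1, x2, y2) in pixels.
Box = tuple[int, int, int, int]


def overlap_area(a: Box, b: Box) -> int:
    """Area of the intersection of two boxes, 0 if they do not touch."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(w, 0) * max(h, 0)


def best_face_frame(frames: list[tuple[Box, list[Box]]]) -> int:
    """frames: (person_box, face_boxes) per frame; returns the best frame index."""

    def face_overlap(frame: tuple[Box, list[Box]]) -> int:
        person, faces = frame
        # Largest face overlap with this person; 0 when no face was detected.
        return max((overlap_area(person, f) for f in faces), default=0)

    return max(range(len(frames)), key=lambda i: face_overlap(frames[i]))
```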

> The required zones determine whether or not the video related to the tracked object should be retained. I think what you are suggesting is that the moment the tracked object meets the criteria to be saved as an event is actually the "best" snapshot.
>
> Based on that, it's not really about avoiding false positives as I had assumed. Now I think you are saying:
>
> As a user, I want the snapshot to demonstrate how the tracked object entered the zones required to store an event so I can more easily understand why the event was created.

Yes this is what I'm saying.

> The problem with this approach is it doesn't work well for situations where a person enters my front yard and approaches my door. In that situation, I don't want the snapshot to be the person when they are far away. I want it to be when they are standing in front of the door so I can better recognize them. Similarly for cars entering my driveway, I want the snapshot to be when the car gets closer and the license plate is more readable.

I understand your perspective now; thanks for elaborating. In your example, if a person entered my front yard (assuming it is a required zone), I would want the snapshot to capture that moment. If the event turns out to deserve a closer look, I can always refer to the clip or recording for details such as facial features or license plate numbers.

> Is the frame the tracked object meets criteria to store an event really the "best" frame? Future versions will be able to select the best frame for a person object as the one with the largest overlapping face detection.

I agree this is an intriguing concept. Given that this is where future versions are headed, I suggest a better solution would be a defined window of time, starting when the tracked object satisfies the criteria for storing an event, in which Frigate looks for the "Best" frame. The user could then specify how long after the store criteria are met Frigate should search for the "best" frame. I think this approach would also work for future versions that look for a face: Frigate would simply pick the largest overlapping face detection within that user-defined window. I think this would solve, or come close to solving, my current issue as well.
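A minimal sketch of the proposed window, assuming a hypothetical window_seconds setting and a pluggable score function, so the same code could rank frames by detection confidence now and by face overlap later. Frames are plain dicts with assumed frame_time and score keys.

```python
from typing import Callable, Optional


def best_in_window(
    frames: list[dict],
    criteria_time: float,
    window_seconds: float,
    score: Callable[[dict], float],
) -> Optional[dict]:
    """Pick the best frame inside [criteria_time, criteria_time + window_seconds]."""
    end = criteria_time + window_seconds
    candidates = [f for f in frames if criteria_time <= f["frame_time"] <= end]
    # `score` is pluggable: detection confidence today, face overlap later.
    return max(candidates, key=score) if candidates else None


frames = [
    {"frame_time": 0.0, "score": 0.50},
    {"frame_time": 1.0, "score": 0.70},
    {"frame_time": 2.5, "score": 0.90},
    {"frame_time": 9.0, "score": 0.95},  # higher score, but outside the window
]
print(best_in_window(frames, 1.0, 3.0, lambda f: f["score"])["frame_time"])  # 2.5
```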

I think the right thing to do here is to refine the definition of "best". Specifically, I think you could argue that a frame where the object does not meet the required zones cannot be the best snapshot, even if the best image timeout has elapsed.
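A sketch of the refined rule, assuming each candidate frame records which zones the object is in: frames outside every required zone are rejected before either the "better" test or the timeout test runs. The score margin (0.05), the 110% area threshold, and the name should_replace are assumed simplifications, not Frigate's code.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    frame_time: float
    score: float
    area: int
    zones: set[str] = field(default_factory=set)


def should_replace(
    best: Candidate,
    new: Candidate,
    required_zones: set[str],
    best_image_timeout: float,
) -> bool:
    # Refined rule: a frame outside every required zone can never become the
    # snapshot, not even through the timeout path.
    if required_zones and not (new.zones & required_zones):
        return False
    better = new.score > best.score + 0.05 or new.area > best.area * 1.1
    stale = new.frame_time - best.frame_time > best_image_timeout
    return better or stale
```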

@blakeblackshear I completely agree. However, it's worth mentioning that a frame capturing the object outside the designated area (required zones), or taken after the first few seconds of the event, may not accurately represent the event. The further a frame is from the start, the less it reflects the essence of the event, and a snapshot taken well into the event can make it unclear how it fits into the larger occurrence.

I vote for it. In many of my events the person is moving within the zone, yet the snapshot is taken too late: after the person has disappeared behind a wall (so the snapshot shows just the wall with no person), or when they are so close to the camera that only part of the face is visible.

I would like to be able to tweak snapshot timing as well. In my case, people walk past the doorbell camera, and the snapshot is almost always taken too late, after the person has already passed the camera, so it shows their back.

I have some camera setups where the object is obscured at the point where its area is largest. Almost every time, Frigate chooses a "best" frame in which the object is entirely obstructed and not visible. Here are some examples of camera setups that do that:

The current method, which lets a larger area override a smaller one, makes the best frame depend on the temporal direction of travel. For example, on my driveway camera:

Any car moving left to right in the frame gets bigger. Even if an early frame scores well while the car is in clear view, a later frame whose area exceeds 110% of the current best will override it. If the exact same video were played in reverse, the car would score low while obstructed, then get smaller but score higher as it moved left and further away. That would give a different (and better) result for best_frame.
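The direction dependence can be reproduced with a small, hypothetical three-frame example and a greedy rule that assumes a 0.05 score margin and the 110% area threshold (best_image_timeout is left out for clarity). Running the same frames forward and backward gives different winners.

```python
def greedy_best(frames: list[dict]) -> dict:
    """Walk frames in order, replacing the best only when the new one is "better"."""
    best = frames[0]
    for new in frames[1:]:
        # Assumed rule: clearly higher score, or an area above 110% of the best.
        if new["score"] > best["score"] + 0.05 or new["area"] > best["area"] * 1.1:
            best = new
    return best


# A car driving left to right: clear and small first, large but obstructed last.
frames = [
    {"name": "clear", "score": 0.90, "area": 40_000},
    {"name": "closer", "score": 0.78, "area": 60_000},
    {"name": "obstructed", "score": 0.55, "area": 90_000},
]

print(greedy_best(frames)["name"])                  # obstructed
print(greedy_best(list(reversed(frames)))["name"])  # clear
```

Each comparison is made only against the current best, so a chain of "10% larger" steps can walk the winner away from the clear frame.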

A weighted scoring system could remove the temporal nature of the comparisons.
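A weighted score, sketched under assumed weights (0.7 for detection score, 0.3 for area normalized by the camera frame size), gives each frame a value that depends only on that frame, so the winner no longer depends on the order in which frames arrive. The weights and the 1280x720 frame size are illustrative, not tuned values from Frigate.

```python
def weighted_score(
    frame: dict, frame_area: int, w_score: float = 0.7, w_area: float = 0.3
) -> float:
    # Uses only this frame and the fixed camera frame size, never the current best.
    return w_score * frame["score"] + w_area * frame["area"] / frame_area


def weighted_best(frames: list[dict], frame_area: int) -> dict:
    # Ties go to the earliest frame, so visiting order cannot change the result.
    return max(frames, key=lambda f: (weighted_score(f, frame_area), -f["frame_time"]))


frames = [
    {"name": "clear", "frame_time": 0.0, "score": 0.90, "area": 40_000},
    {"name": "closer", "frame_time": 1.0, "score": 0.78, "area": 60_000},
    {"name": "obstructed", "frame_time": 2.0, "score": 0.55, "area": 90_000},
]
frame_area = 1280 * 720

print(weighted_best(frames, frame_area)["name"])                  # clear
print(weighted_best(list(reversed(frames)), frame_area)["name"])  # clear
```

Because each frame's value is a fixed function of that frame, the same result can be kept online by tracking the running maximum as frames arrive.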

More info/discussion for consideration in this thread: https://github.com/blakeblackshear/frigate/discussions/10868#discussioncomment-9038520

I have the same issue with license plate detection; it keeps the last shot of the car:

snapshot

clip

https://github.com/blakeblackshear/frigate/assets/16291156/7a803917-bac8-4df9-a8de-96ea13457fcd

Never mind the stutter (I don't know how to fix it yet); despite it, there are other valid snapshots besides this one.

This snapshot looks like a fine choice. Unless you're running Frigate+ with the license plate label enabled, Frigate cannot consider license plate location in the best snapshot logic.

> This snapshot looks like a fine choice. Unless you're running Frigate+ with the license plate label enabled, Frigate cannot consider license plate location in the best snapshot logic.

I'm not running Frigate+, but the car's front is blurry in this snapshot. I'm using the platerecognizer API; if you know of a better free alternative that can detect the license plate in this snapshot, I'd be happy to try it.

Neither of those things has anything to do with this issue, though. From the perspective of a car alone (since license plate detection is not being used inside Frigate), the snapshot you are showing is perfectly valid IMO.