[bitnami/redis-cluster] different redis-cluster state when checking from inside and outside pod

Name and Version

bitnami/redis-cluster, 7.1.0

What steps will reproduce the bug?

helm install redis-cluster redis-cluster

Are you using any custom parameters or values?

external.access.enabled=true service.type=nodeport

What is the expected behavior?

The cluster should work fine, and I should be able to set and get keys from all masters and slaves.

What do you see instead?

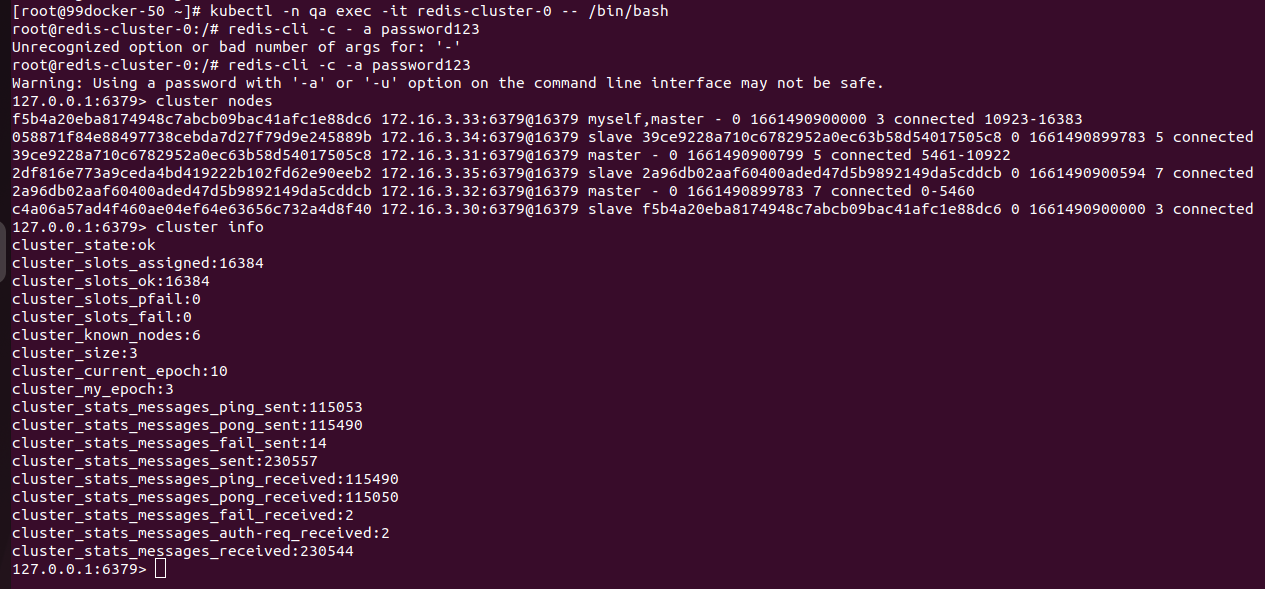

Inside the pod, everything works fine. The cluster state is fine and all masters and slaves are connected. Set and get operations work.

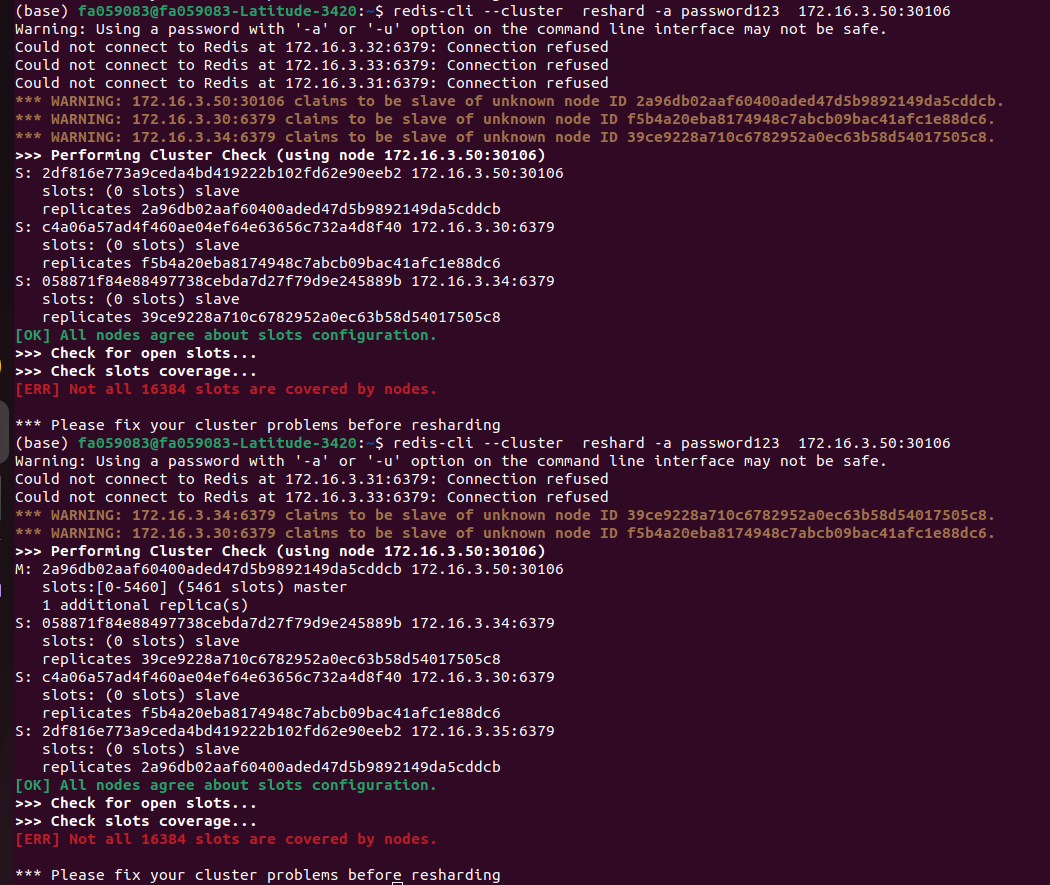

Outside the pod, from redis-cli, the cluster state is fine, but when I check the cluster state it states that some masters and slaves are down. I am only able to set and get keys from some masters.

Additional information

No response

Hi @abhishekgupta2205,

The redis-cluster chart version 7.1.0 was released 8 months ago, on January 5th. Could you please upgrade to at least the latest 7.x.x version (7.6.4) and let us know if the problem persists?

During that time, both the redis-cluster chart and image received changes and improvements that may fix the problem. Also, if we detect a bug while troubleshooting, its fix will be applied to the latest version.

For that reason, we recommend upgrading the charts before we can troubleshoot the issue.

I have checked on the latest version as well, and the same issue is there. Can you please check, @migruiz4?

Could you please provide more information about the values used to deploy the chart and the output of kubectl get service? The information used in the issue description may not be enough.

In the screenshot from the local test, I see IPs 172.16.3.30-35, but in the other screenshots you are using 172.16.3.50.

Actually, I am using a minikube cluster, where external IPs are not available, so I am using the metallb addon to get external IPs. I also want to access my pods from outside the cluster, so I set service.type to nodeport and provided ports to connect to the pods. To access them I am using redis-cli with my minikube IP instead of an external IP. That is why it shows 172.16.3.50, which is my k8s master IP, and 172.16.3.30-35, which are the external IPs created by metallb.

I'm sorry @abhishekgupta2205 but that approach may not work.

Because of how Redis works, if cluster.externalAccess.enabled=true the chart will generate an individual service for each node and the regular service for internal connections.

For external access, connections must be established through the externalAccess services IPs, and using the internal service with NodePort/LoadBalancer type won't work.

So is there any way I can run redis-cluster under the following conditions?

- cluster type = minikube (does not have external IP support)

- external.access=true (I should be able to connect to my cluster from outside through nodeport and cluster IP).

The minikube equivalent of external IPs would be using minikube tunnel: https://minikube.sigs.k8s.io/docs/handbook/accessing/#using-minikube-tunnel

- Run minikube tunnel.

- Run helm install redis bitnami/redis-cluster --set 'cluster.externalAccess.enabled=true'.

- Run kubectl get svc and check the external IP addresses.

- Upgrade the chart providing the external IP addresses: helm upgrade redis bitnami/redis-cluster --set 'cluster.externalAccess.enabled=true,cluster.externalAccess.service.loadBalancerIP[0]=<external-ip-1>,cluster.externalAccess.service.loadBalancerIP[1]=<external-ip-2>,cluster.externalAccess.service.loadBalancerIP[2]=<external-ip-3>'.
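With six nodes the --set value in the upgrade command gets long, so it may be easier to generate it. The following is only a sketch: the helper name and the sample addresses are hypothetical, and only the cluster.externalAccess.* keys are taken from the command above.

```python
def build_external_access_set(external_ips):
    """Build the value for helm's --set flag, one loadBalancerIP entry per node."""
    parts = ["cluster.externalAccess.enabled=true"]
    for index, ip in enumerate(external_ips):
        parts.append(f"cluster.externalAccess.service.loadBalancerIP[{index}]={ip}")
    return ",".join(parts)


if __name__ == "__main__":
    # Placeholder addresses: replace them with the external IPs shown by kubectl get svc.
    ips = ["172.16.3.30", "172.16.3.31", "172.16.3.32"]
    print(f"helm upgrade redis bitnami/redis-cluster --set '{build_external_access_set(ips)}'")
```

The order of the list matters, since each index is meant to correspond to one Redis node.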

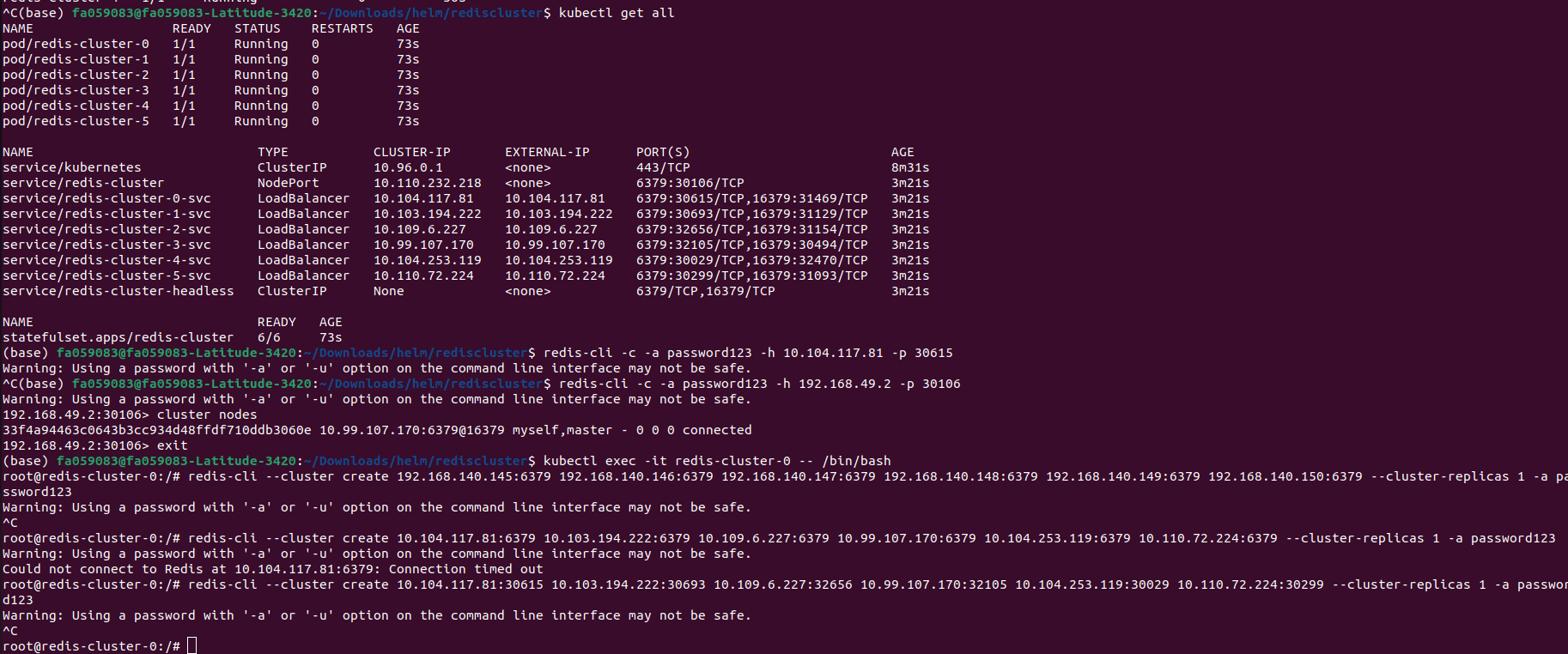

I tried the approach you suggested, but now I am unable to create the cluster at all. Also, when I try to connect to the pods using the external IP and port, the connection is not established.

Also, what is the reason for making loadbalancer services mandatory in this chart? We could make any one pod accessible from outside using a nodeport, and the rest could be reached through it by internal connections.

The reason is that Redis needs every node to be reachable by redis-cli; otherwise externalAccess would not be needed.

More information about how the Redis Cluster and redis-cli work and why each node needs a reachable IP can be found here:

The Redis Cluster API is designed in a way that the clients are smart enough to understand the topology of the cluster and to react to changes (e.g.,slot migrations, failovers). You are typically using a Cluster client library to access Redis Cluster (instead of client library for standalone Redis).

The way how it works is that the client is getting bootstrapped by then knowing which hash slots are mapped to which endpoints. The client will then try to send the command to the correct endpoint. If something changed, then the client would usually get a 'MOVED' response to which it needs to react. Such a reaction would be to follow the redirect to another endpoint, OR to fetch the topology (slots to endpoint mapping) again. Now, adding a simple load balancer on top of a Redis OSS Cluster does typically not work well because the load balancer doesn't know the slots to endpoints mapping. If the load balancer would do a round-robin across the endpoints, then this would lead to a lot of MOVED responses. Such a MOVED response contains the IP address and port of the Redis endpoint that is most likely responsible for the requested key, BUT the load balancer would then not know how to deal with this information.
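To make the slots-to-endpoints mapping more concrete, here is a minimal sketch of how a cluster-aware client derives the hash slot of a key, following the CRC16 (XMODEM) modulo 16384 rule and the hash tag rule from the Redis Cluster specification. The function names are illustrative and are not taken from any client library.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0, as used by Redis Cluster."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: bytes) -> int:
    """Map a key to one of the 16384 hash slots, honoring {hash tags}."""
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        # Only a non-empty tag between the first '{' and the next '}' is hashed.
        if end != -1 and end != start + 1:
            key = key[start + 1:end]
    return crc16_xmodem(key) % 16384


if __name__ == "__main__":
    for name in (b"foo", b"{user1000}.following", b"{user1000}.followers"):
        print(name.decode(), key_hash_slot(name))
```

Each slot is owned by exactly one master, so the client has to open a connection to whichever node owns the slot of the key it is working with. That is why every node needs an address the client can reach.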

> I tried the approach you suggested, but now I am unable to create the cluster at all. Also, when I try to connect to the pods using the external IP and port, the connection is not established.

You are connecting using 192.168.42.2:30106, but you need the redis client somewhere in your topology where it can reach 10.104.117.81:6379.

If we want the client to be able to connect to each of the pods, then we can simply have a nodeport for each pod, and the client can connect to any of the pods (like we have in the redis master-slave architecture helm chart with sentinels). Also, we can use the -c parameter to handle the MOVED error. Why do we need the external IP?

Hi @abhishekgupta2205,

The externalAccess services can't be configured with NodePort service type as mentioned in the values: https://github.com/bitnami/charts/blob/5dc005242b5a974586c520775c45b6b5cfcf08e8/bitnami/redis-cluster/values.yaml#L741-L749

The -c parameter won't work because, as I previously mentioned, the Redis Cluster API is designed so the client needs to be able to establish a direct connection with every node.

By default, the redis-cluster chart will deploy a service (redis-cluster) and a headless service (redis-cluster-headless). That is enough for other Redis clients running in the same Kubernetes cluster to reach the Redis cluster.

If externalAccess is enabled, the chart will also deploy a service for each individual Redis node (redis-cluster-N-svc), each of which has to be assigned an external IP.

The idea is to emulate an on-premise deployment, so clients outside Kubernetes can connect to the Redis cluster.

That workaround is the only way to connect to Redis cluster externally, because Redis is not designed to work behind a load balancer / reverse-proxy. If Redis supported load balancing, then no externalAccess would be needed as both internal and external clients would be able to connect to the Redis cluster using a single service.

I am still unable to understand why we should use external IPs when we can do the same thing by just giving different nodeports to different pods. If we want to connect to any pod, we simply connect to it using the minikube IP and the nodeport. The cluster can also be created using this IP and the different nodeports (redis-cli --cluster create 192.168.49.2:30104 192.168.49.2:30105 192.168.49.2:30106 ...). This architecture would also solve our problem.

Also, if we do not want the -c parameter, the client can connect to any pod if it knows the correct master IP where the hash slot is served.

> I am still unable to understand why we should use external IPs when we can do the same thing by just giving different nodeports to different pods. If we want to connect to any pod, we simply connect to it using the minikube IP and the nodeport. The cluster can also be created using this IP and the different nodeports (redis-cli --cluster create 192.168.49.2:30104 192.168.49.2:30105 192.168.49.2:30106 ...). This architecture would also solve our problem.

It is a Redis limitation: each node publishes the same address for connections from other nodes and from clients.

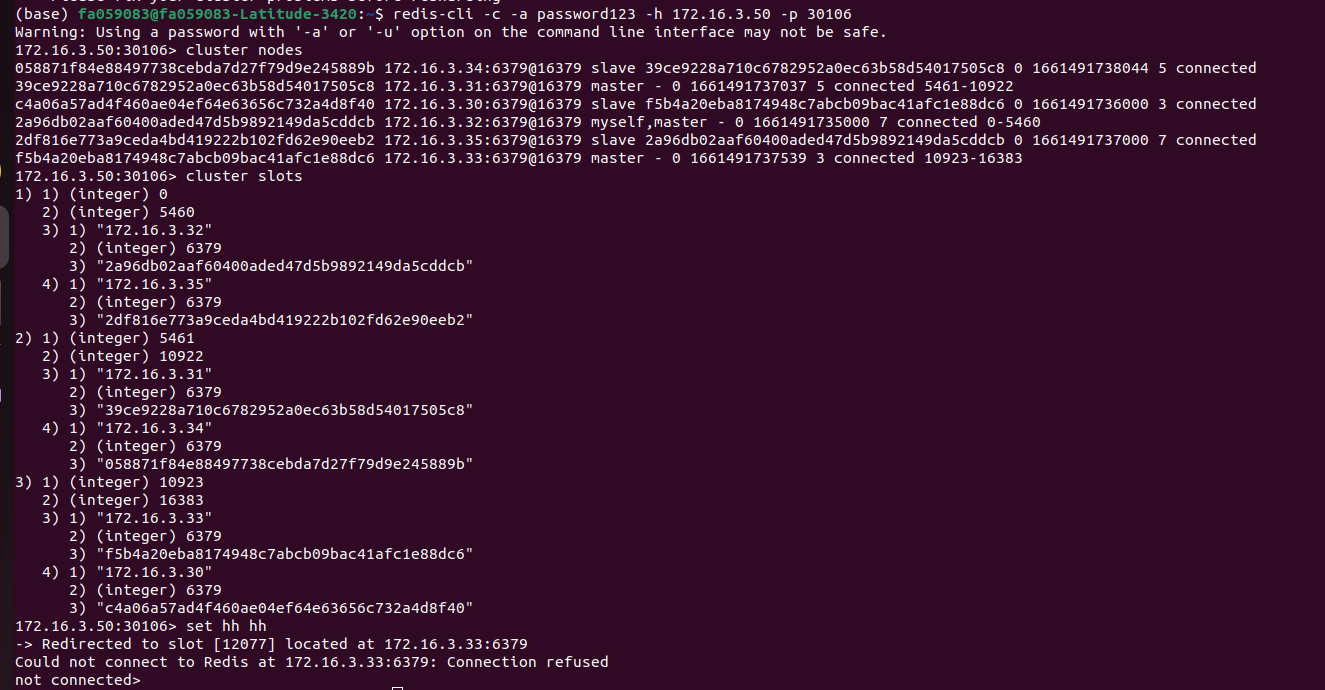

Although you can establish a connection with node 1 via 192.168.49.2:30106, it will reply with the addresses of the other nodes in the cluster (e.g. 10.104.117.81:6379, ...).

The client will attempt to connect to those internal addresses to collect the cluster information, but it won't be able to reach them. In other words, although you use NodePort, Redis is not aware of those IP:PORT addresses, so the client connection won't work.
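The failure described here can be sketched as a small simulation. It opens no real Redis connection: the set of reachable endpoints, the helper names, and the slot number are assumptions for illustration, while the addresses are the ones mentioned in this thread.

```python
def parse_moved(error_message):
    """Parse a 'MOVED <slot> <host>:<port>' redirection into (slot, host, port)."""
    kind, slot, endpoint = error_message.split()
    if kind != "MOVED":
        raise ValueError(f"not a MOVED redirection: {error_message!r}")
    host, _, port = endpoint.rpartition(":")
    return int(slot), host, int(port)


def follow_redirect(error_message, reachable_endpoints):
    """Return the redirect target if the client can reach it, else raise."""
    slot, host, port = parse_moved(error_message)
    if (host, port) not in reachable_endpoints:
        raise ConnectionError(
            f"slot {slot} lives on {host}:{port}, which is not reachable from this client"
        )
    return host, port


if __name__ == "__main__":
    # From outside the cluster, only the NodePort entry point is reachable.
    outside_view = {("192.168.49.2", 30106)}
    try:
        follow_redirect("MOVED 12182 10.104.117.81:6379", outside_view)
    except ConnectionError as exc:
        print(f"redirect failed: {exc}")
```

A client inside the Kubernetes network would have 10.104.117.81:6379 in its reachable set, which matches the observation that everything works from inside the pod.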

Leaving the external IP problem aside, I should at least be able to connect to 192.168.49.2:30106, but I am unable to. Is that due to the external IP problem, or is there some other problem? I have one chart running where I used metallb to get external IPs and created the chart. There the cluster is properly created and things work correctly if I connect from inside the pod, but when I try to establish a connection through the node port, the connection times out. According to you, it should at least connect.

You mentioned that redis should have the same internal and external IP. Could we then use these approaches?

- Use nodeports for every pod and create the cluster with these IPs. Then the pods can communicate through these IPs and can also be reached from outside using these nodeports and IPs.

- Also, when we use redis with sentinels and ask sentinel for the master IP, it returns the internal cluster IP of the pod. If we want to connect to it, we can add an entry for the IP in /etc/hosts and resolve the hostname. Then we would not need an external IP to connect to the pods.

Hi @abhishekgupta2205,

The chart does not support the configuration you suggest, and I'm not sure it could be achieved because of the following reasons:

> Use nodeports for every pod and create the cluster with these IPs. Then the pods can communicate through these IPs and can also be reached from outside using these nodeports and IPs.

As far as I know, NodePort services can't be reached from an internal pod using <k8s_node_ip>:<k8s_node_port>; that only works for connections from the outside.

> Also, when we use redis with sentinels and ask sentinel for the master IP, it returns the internal cluster IP of the pod. If we want to connect to it, we can add an entry for the IP in /etc/hosts and resolve the hostname. Then we would not need an external IP to connect to the pods.

/etc/hosts only supports hostname->IP translations, not ports. To trick the Redis client into thinking it can reach the Redis internal address when it is actually connecting through the nodePort, you may need something in addition to /etc/hosts.
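A tiny sketch of that limitation: a name-to-IP table can change where a hostname points, but the port announced by Redis passes through untouched. The hostname and the helper below are hypothetical; the IP and ports are the ones used earlier in this thread.

```python
def resolve_with_hosts(endpoint, hosts_table):
    """Apply an /etc/hosts-style name-to-IP table to a (host, port) pair."""
    host, port = endpoint
    # Only the host part is translated; there is no way to remap the port here.
    return hosts_table.get(host, host), port


if __name__ == "__main__":
    hosts_table = {"redis-node-0.example": "192.168.49.2"}  # hypothetical entry
    announced = ("redis-node-0.example", 6379)  # what a Redis node would advertise
    node_port_endpoint = ("192.168.49.2", 30106)  # what is actually exposed outside

    resolved = resolve_with_hosts(announced, hosts_table)
    print(resolved, "matches NodePort endpoint:", resolved == node_port_endpoint)
```

The resolved endpoint still carries port 6379, so the client would knock on the wrong port of the Kubernetes node unless something else translates the port as well.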

If you find a solution that fulfills your requirements and would like to contribute to the bitnami/redis chart with your improvements, feel free to send a PR and we will be happy to review it.

So is there a way to announce hostnames instead of IPs in redis-cluster, as we can in redis-sentinel? It would make external access easier, because we could put the hostname entries in /etc/hosts and the client could connect using DNS resolution.

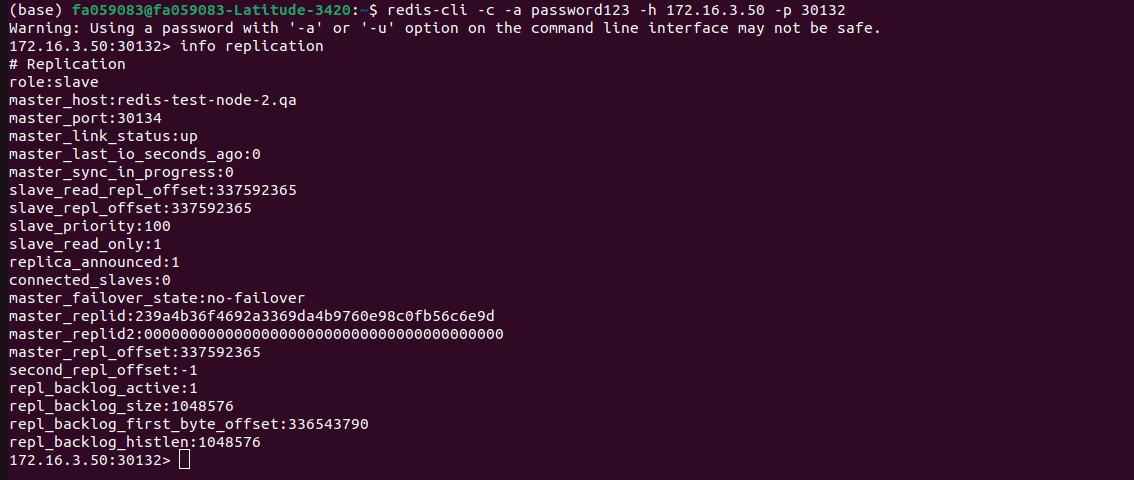

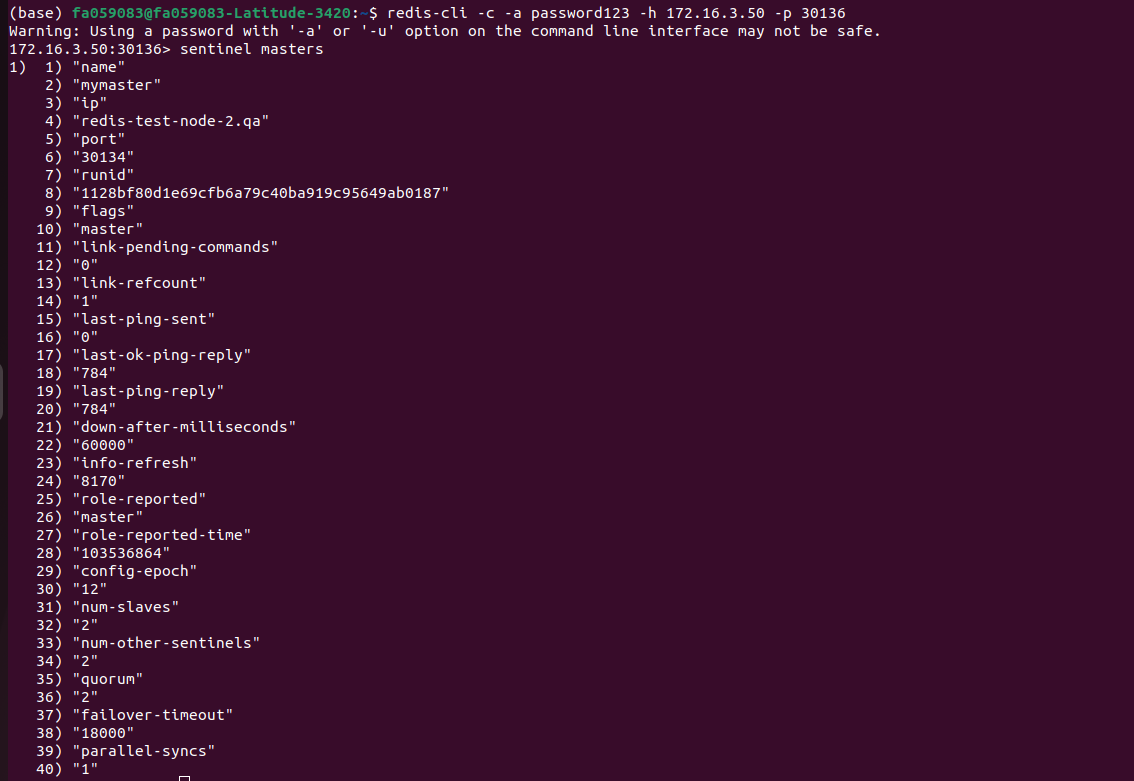

Also, you said that redis publishes the same IP for both internal and external communication. I have deployed a helm chart with the redis-sentinel architecture, and when I list the sentinel masters while connected to sentinel, it shows the internal IP and the nodeport instead of the redis port (6379). Why is that happening there?

Hi @abhishekgupta2205,

What you see there when connecting through 172.16.3.50:30136 is the Redis client's point of view, but the error you reported at the beginning of this thread is the real issue.

Although you can connect to one node using nodePort, when the client requests data stored in a different node, the Redis node will reply with the internal address of that node, and the client will fail to retrieve the information from that node.

That is why, as the title states, you see a 'different redis-cluster state when checking from inside and outside pod' when using NodePort instead of externalAccess services with one IP per node.

Hi @migruiz4, can we make the pods communicate with each other through nodeport and IP? I mean, the pods currently communicate with each other using the generated cluster IP, which changes every time a pod is destroyed and recreated. So instead of the cluster IP, is it possible for the nodes to communicate only through nodeport and IP? That way they would publish the same nodeport and IP for external access.

Hi @abhishekgupta2205,

It is not possible to make pods communicate with each other through NodePort and IP.

That is the main reason why the suggested feature is technically impossible, and the only way Redis Cluster can be accessed externally is using Cluster IP with one external IP per pod.

Hi @migruiz4, can we use the following approach: I create 6 standalone redis pods using the redis helm chart, each with its own nodeport and IP, and then create a cluster out of them?

Feel free to experiment with the chart, but please be aware that we may not be able to provide support for such a custom deployment.

As I previously mentioned, the only supported method for externalAccess at the moment would be using externalAccess.service.type: LoadBalancer.

This Issue has been automatically marked as "stale" because it has not had recent activity (for 15 days). It will be closed if no further activity occurs. Thanks for the feedback.

Due to the lack of activity in the last 5 days since it was marked as "stale", we proceed to close this Issue. Do not hesitate to reopen it later if necessary.