RTX 3070 8 GB

- (1) Describe the bug

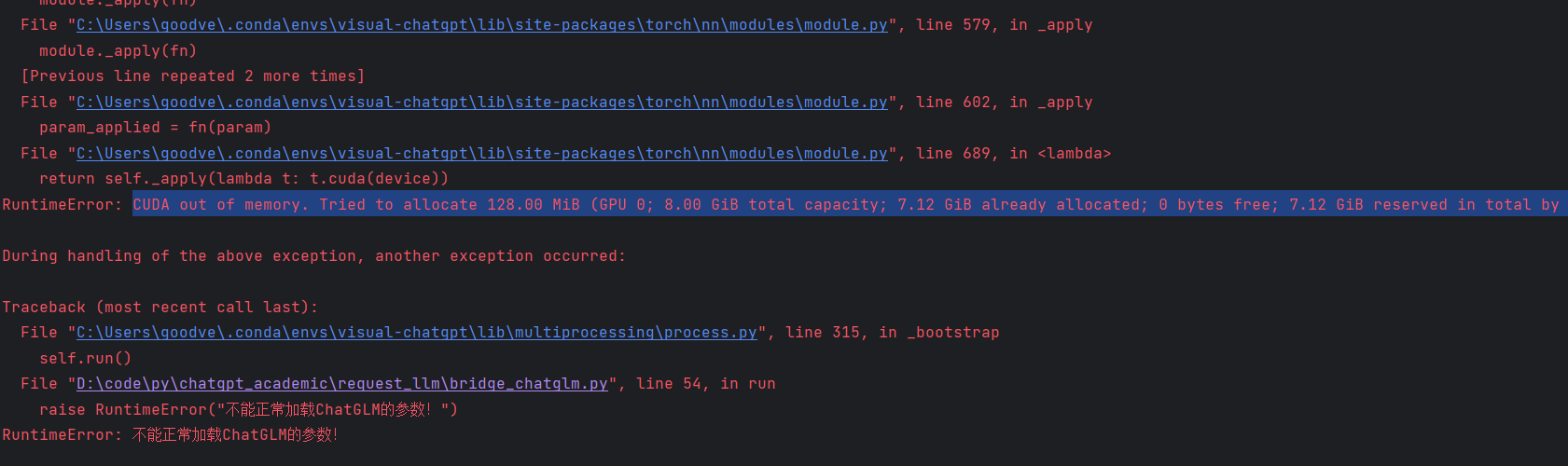

CUDA out of memory. Tried to allocate 128.00 MiB (GPU 0; 8.00 GiB total capacity; 7.12 GiB already allocated; 0 bytes free; 7.12 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
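The numbers in this message already hint at the cause. PyTorch reports 7.12 GiB allocated and 7.12 GiB reserved, so reserved memory is *not* much larger than allocated memory. The `max_split_size_mb` suggestion targets fragmentation, so it probably would not help here: the model itself does not fit in 8 GB. The sketch below parses those figures out of the message and applies that check. `parse_oom` and `looks_fragmented` are hypothetical helpers, not part of PyTorch, and the 1.5× threshold for "reserved >> allocated" is an arbitrary assumption.

```python
import re

# The OOM message quoted above (memory figures copied verbatim).
OOM_MESSAGE = (
    "CUDA out of memory. Tried to allocate 128.00 MiB (GPU 0; 8.00 GiB total "
    "capacity; 7.12 GiB already allocated; 0 bytes free; 7.12 GiB reserved in "
    "total by PyTorch)"
)

_UNITS = {"bytes": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3}


def _to_bytes(value: str, unit: str) -> float:
    """Convert a '<number> <unit>' pair from the message into bytes."""
    return float(value) * _UNITS[unit]


def parse_oom(message: str) -> dict:
    """Hypothetical helper: pull the memory figures out of an OOM message."""
    patterns = {
        "requested": r"Tried to allocate ([\d.]+) (\w+)",
        "total": r"([\d.]+) (\w+) total capacity",
        "allocated": r"([\d.]+) (\w+) already allocated",
        "reserved": r"([\d.]+) (\w+) reserved in total",
    }
    figures = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, message)
        if match is None:
            raise ValueError(f"could not find '{key}' in message")
        figures[key] = _to_bytes(match.group(1), match.group(2))
    return figures


def looks_fragmented(figures: dict, ratio: float = 1.5) -> bool:
    """Heuristic (assumed threshold): reserved >> allocated suggests fragmentation."""
    return figures["reserved"] > ratio * figures["allocated"]


figures = parse_oom(OOM_MESSAGE)
print(f"allocated: {figures['allocated'] / 1024**3:.2f} GiB")
print(f"reserved:  {figures['reserved'] / 1024**3:.2f} GiB")
print("fragmentation suspected:", looks_fragmented(figures))
```

For this message it prints 7.12 GiB for both figures and `fragmentation suspected: False`, which points toward reducing the model's footprint rather than tuning the allocator.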

- (2) Screenshot

-

- (3) Terminal traceback (if any)

-

- (4) Material to help us reproduce the bug (if any)

Before submitting an issue:

- If your code is not the latest version, please try updating it first.

- Please check the project wiki, which has solutions to some common problems.

See the hardware requirements section of the README: https://github.com/THUDM/ChatGLM-6B#%E7%A1%AC%E4%BB%B6%E9%9C%80%E6%B1%82

Switching to INT4 made it work.

In my tests it needed a little over 13 GB of VRAM.
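A rough back-of-the-envelope check shows why INT4 fits on an 8 GB card while the default half-precision model does not. This is a sketch under stated assumptions: it takes ChatGLM-6B to have about 6.2 billion parameters, counts only the weights (activations, the KV cache and the CUDA context come on top), and treats every weight as stored at one uniform precision. The project's INT4 mode may keep some layers at higher precision, so its real footprint is larger than this estimate. `weight_memory_gib` and `fits` are hypothetical helper names.

```python
# Assumption: ChatGLM-6B has roughly 6.2 billion parameters (approximate).
NUM_PARAMS = 6.2e9

# Bytes needed to store one weight at each precision (uniform-precision model).
BYTES_PER_PARAM = {"fp16": 2.0, "int4": 0.5}

GIB = 1024**3


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Lower bound in GiB: memory for the weights alone, nothing else."""
    return num_params * BYTES_PER_PARAM[precision] / GIB


def fits(num_params: float, precision: str, vram_gib: float) -> bool:
    """True if the weights alone fit into the given amount of VRAM."""
    return weight_memory_gib(num_params, precision) < vram_gib


for precision in ("fp16", "int4"):
    need = weight_memory_gib(NUM_PARAMS, precision)
    print(f"{precision}: ~{need:.1f} GiB of weights, "
          f"fits in 8 GiB: {fits(NUM_PARAMS, precision, 8.0)}")
```

This prints about 11.5 GiB for FP16 (does not fit) and about 2.9 GiB for INT4 (fits). The FP16 figure covers weights only, so with runtime overhead added it is consistent with the "a little over 13 GB" observed above.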