bevy

bevy copied to clipboard

bevy copied to clipboard

Maybe pipelined rendering

Objective

- Implement pipelined rendering

- Fixes #5082

User Facing Description

Bevy now implements piplelined rendering! Pipelined rendering allows the app logic and rendering logic to run on different threads leading to large gains in performance.

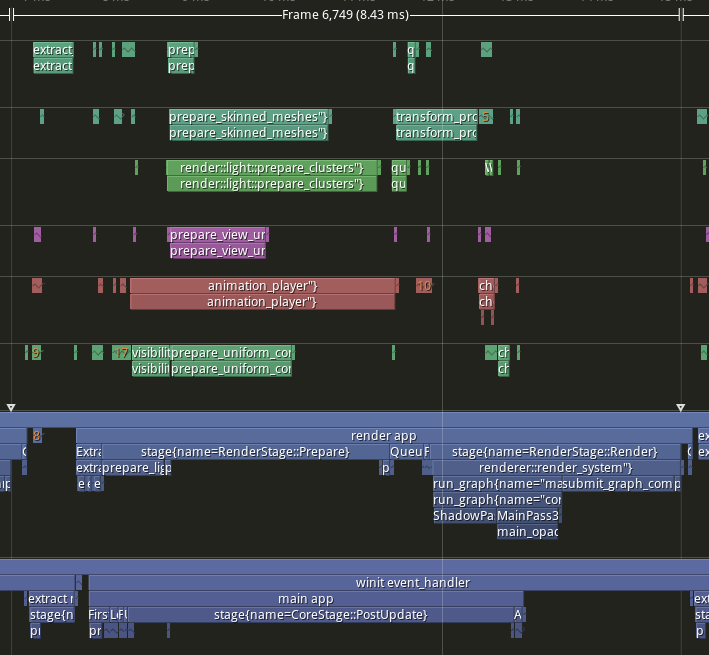

tracy capture of many_foxes example

tracy capture of many_foxes example

To use pipelined rendering, you just need to add the PipelinedRenderingPlugin. If you're using DefaultPlugins then it will automatically be added for you on all platforms except wasm. Bevy does not currently support multithreading on wasm which is needed for this feature to work. If you aren't using DefaultPlugins you can add the plugin manually.

use bevy::prelude::*;

use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {

App::new()

// whatever other plugins you need

.add_plugin(RenderPlugin)

// needs to be added after RenderPlugin

.add_plugin(PipelinedRenderingPlugin)

.run();

}

If for some reason pipelined rendering needs to be removed. You can also disable the plugin the normal way.

use bevy::prelude::*;

use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {

App::new.add_plugins(DefaultPlugins.disable::<PipelinedRenderingPlugin>());

}

A setup function was added to plugins

A optional plugin lifecycle function was added to the Plugin trait. This function is called after all plugins have been built, but before the app runner is called. This allows for some final setup to be done. In the case of pipelined rendering, the function removes the sub app from the main app and sends it to the render thread.

struct MyPlugin;

impl Plugin for MyPlugin {

fn build(&self, app: &mut App) {

}

// optional function

fn setup(&self, app: &mut App) {

// do some final setup before runner is called

}

}

A Stage for Frame Pacing

In the RenderExtractApp there is a stage labelled BeforeIoAfterRenderStart that systems can be added to. The specific use case for this stage is for a frame pacing system that can delay the start of main app processing in render bound apps to reduce input latency i.e. "frame pacing". This is not currently built into bevy, but exists as bevy

|-------------------------------------------------------------------|

| | BeforeIoAfterRenderStart | winit events | main schedule |

| extract |---------------------------------------------------------|

| | extract commands | rendering schedule |

|-------------------------------------------------------------------|

Small API additions

-

Schedule::remove_stage -

App::insert_sub_app -

App::remove_sub_app -

TaskPool::scope_with_executor

Problems and Solutions

Moving render app to another thread

Most of the hard bits for this were done with the render redo. This PR just sends the render app back and forth through channels which seems to work ok. I originally experimented with using a scope to run the render task. It was cuter, but that approach didn't allow render to start before i/o processing. So I switched to using channels. There is much higher complexity in the coordination that needs to be done, but it's worth it. By moving rendering during i/o processing the frame times should be much more consistent in render bound apps. See https://github.com/bevyengine/bevy/issues/4691.

Unsoundness with Sending World with NonSend resources

Dropping !Send things on threads other than the thread they were spawned on is considered unsound. The render world doesn't have any nonsend resources. So if we tell the users to "pretty please don't spawn nonsend resource on the render world", we can avoid this problem.

More seriously there is this https://github.com/bevyengine/bevy/pull/6534 pr, which patches the unsoundness by aborting the app if a nonsend resource is dropped on the wrong thread. That PR should probably be merged before this one. For a longer term solution we have this discussion going https://github.com/bevyengine/bevy/discussions/6552.

NonSend Systems in render world

The render world doesn't have any !Send resources, but it does have a non send system. While Window is Send, winit does have some API's that can only be accessed on the main thread. prepare_windows in the render schedule thus needs to be scheduled on the main thread. Currently we run nonsend systems by running them on the thread the TaskPool::scope runs on. When we move render to another thread this no longer works.

To fix this, a new scope_with_executor method was added that takes a optional TheadExecutor that can only be ticked on the thread it was initialized on. The render world then holds a MainThreadExecutor resource which can be passed to the scope in the parallel executor that it uses to spawn it's non send systems on.

Scopes executors between render and main should not share tasks

Since the render world and the app world share the ComputeTaskPool. Because scope has executors for the ComputeTaskPool a system from the main world could run on the render thread or a render system could run on the main thread. This can cause performance problems because it can delay a stage from finishing. See https://github.com/bevyengine/bevy/pull/6503#issuecomment-1309791442 for more details.

To avoid this problem, TaskPool::scope has been changed to not tick the ComputeTaskPool when it's used by the parallel executor. In the future when we move closer to the 1 thread to 1 logical core model we may want to overprovide threads, because the render and main app threads don't do much when executing the schedule.

Performance

My machine is Windows 11, AMD Ryzen 5600x, RX 6600

Examples

This PR with pipelining vs Main

Seeing a perf gain from 46% on many foxes to 5% on many cubes.

| percent | Diff | Main | PR | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| tracy frame time | mean | median | sigma | mean | median | sigma | mean | median | sigma | mean | median | sigma |

| many foxes | 41.70% | 42.12% | 27.81% | 6.33 | 6.25 | 3.09 | 15.18 | 14.84 | 11.11 | 8.85 | 8.59 | 8.02 |

| many lights | 29.68% | 29.25% | 29.85% | 4.41 | 4.27 | 1.94 | 14.86 | 14.6 | 6.5 | 10.45 | 10.33 | 4.56 |

| many animated sprites | 15.38% | 15.23% | -3.06% | 4.51 | 4.39 | -0.14 | 29.33 | 28.82 | 4.58 | 24.82 | 24.43 | 4.72 |

| 3d scene | 11.64% | 10.69% | 18.21% | 0.78 | 0.7 | 0.59 | 6.7 | 6.55 | 3.24 | 5.92 | 5.85 | 2.65 |

| many cubes | 8.16% | 7.66% | 3.33% | 1.83 | 1.69 | 0.32 | 22.43 | 22.06 | 9.62 | 20.6 | 20.37 | 9.3 |

| many sprites | 5.61% | 6.56% | -11.06% | 1.5 | 1.73 | -0.47 | 26.73 | 26.36 | 4.25 | 25.23 | 24.63 | 4.72 |

This PR with pipelining disabled vs Main

Not sure I 100% understand these changes, but they're possibly due to 2 things. First is that there is 1 less thread for executing systems compute tasks now that the main thread doesn't execute them. Second is that the scope executor changed away from try_tick.

| percent | Diff | Main | PR no pipelining | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| tracy frame time | mean | median | sigma | mean | median | sigma | mean | median | sigma | mean | median | sigma |

| many foxes | 1.78% | 1.62% | -2.25% | 0.27 | 0.24 | -0.25 | 15.18 | 14.84 | 11.11 | 14.91 | 14.6 | 11.36 |

| many lights | -0.61% | -1.03% | 16.92% | -0.09 | -0.15 | 1.1 | 14.86 | 14.6 | 6.5 | 14.95 | 14.75 | 5.4 |

| many animated sprites | 1.77% | 1.60% | -10.48% | 0.52 | 0.46 | -0.48 | 29.33 | 28.82 | 4.58 | 28.81 | 28.36 | 5.06 |

| 3d scene | -1.64% | -2.75% | -19.14% | -0.11 | -0.18 | -0.62 | 6.7 | 6.55 | 3.24 | 6.81 | 6.73 | 3.86 |

| many cubes | -1.29% | -1.63% | -2.70% | -0.29 | -0.36 | -0.26 | 22.43 | 22.06 | 9.62 | 22.72 | 22.42 | 9.88 |

| many sprites | 2.02% | 1.90% | -3.06% | 0.54 | 0.5 | -0.13 | 26.73 | 26.36 | 4.25 | 26.19 | 25.86 | 4.38 |

Benchmarks

TODO

Migration Guide

SubApp runner has conceptually been changed to an extract function.

The runner no longer is in charge of running the sub app schedule. It's only concern is now moving data between the main world and the sub app. The sub_app.app.schedule is now run for you after the provided function is called.

// before

fn main() {

let sub_app = App::empty();

sub_app.add_stage(MyStage, SystemStage::parallel());

App::new().add_sub_app(MySubApp, sub_app, move |main_world, sub_app| {

extract(app_world, render_app);

render_app.app.schedule.run();

});

}

// after

fn main() {

let sub_app = App::empty();

sub_app.add_stage(MyStage, SystemStage::parallel());

App::new().add_sub_app(MySubApp, sub_app, move |main_world, sub_app| {

extract(app_world, render_app);

// schedule is automatically called for you after extract is run

});

}

App runner and SubApp extract functions are now required to be Send

This was done to make App and SubApp Send. If this breaks your use case please report it as these new bounds might be able to be relaxed.

ToDo

- [ ] benchmarking

- [x] check examples perf with pipelining disabled

- [ ] test wasm still works

Fix tests. A bunch of tests are broken because tests don't run on the main thread. Need to come up with a workaround for this.

You should be able to set the number of threads the tests are run with to 1 to force this to work :)

TIL that cfg(test) only works on the current workspace being tested. I tried to revert to the previous behavior of scope when testing (each instance of scope has it's own scope executor), but this only works for bevy_tasks when using cfg[test]. The only solution I've thought of so far is to put the code behind a feature flag instead. This would mean that any time a user wants to run tests on an App or Stage they'll need to enable the feature. This may be confusing as the failure mode currently is that the test deadlocks and times out. Maybe we could add a warning/panic for when test is enabled, but the feature flag is not on.

I think my plan for now is to change the MainThreadExecutor into a resource so it's not global and pass it into scope as an optional argument. A little less ergonomic API wise, but probably better than asking users to turn on a feature flag for testing.

edit: also a step backwards from global task pools, but ¯\(ツ)/¯

Would we still want a task pool scope to run the render world if !Send resources didn't exist?

Or would we just spawn a thread for the render world and park it until some signal arrives? (I would think so since ideally nothing blocks the main thread's event loop. We'd want app world running in another thread too.)

So I changed the approach a good bit. It's now spawning a long running task that is controlled through channels that send the rendering app back and forth. This change allows the rendering app to run during input processing and will help with more consistent frame times when the app is gpu bound.

Would we still want a task pool scope to run the render world if !Send resources didn't exist?

Not sure. Right now prepare_windows is the only thing in the render schedule that needs to run on the main thread. It's not really using a !Send resource other than as a marker to force the system to run on the main thread. I haven't looked into what it's doing to know if this could be changed somehow.

When testing many_foxes I was getting really bad frame times when the animation_player (which is part of PostUpdate) ended up running on the render thread. This delays the render thread from making progress until that system is done running.

As a quick fix I've removed ticking the task stealing executors from scope. Seems to not hurt execution time on many_foxes and makes the tail latency much better. Below is a histogram of frame times. Yellow is before removing executor.run and red is with executor.run removed. You can see that this removes the outer peaks from the distribution with the mean remaining mostly the same.

This probably hurts the micro benchmarks a lot, but I still need to run them and test some other examples

Taking this out of draft as the code is mostly ready. Still need to think about making inserting NonSend Resources unsafe still and investigate perf more.

Holding off on approval until we have a solid grasp on the direction of how we're dealing with

!Sendsoundness inWorld.

Should we prioritize refactoring these winit interactions so they don't use !Send resources?

With the ThreadExecutor here (maybe a simpler, non-async version) and some NonSend container that stores all the !Send resources, we could proxy all !Send data accesses behind them.

Like if NonSend was stored on App, and we left it on the main thread at startup, World can be Send. And we don't have to limit systems to a particular thread, just blocks of code that read NonSend.

The main thread is just running an event loop, so systems in other threads can send FnMut(NonSend) objects to the main thread executor, and they'll be processed at some point in the loop. Doesn't even have to be async. If the main thread is just winit and we kick the "main" and render worlds to their own dedicated threads, then the event loop can run as fast as necessary.

I'd rather us just have a dedicated thread for running each app (main and subapp), and have them all use the ComputeTaskPool for their systems/tasks.

A separate thread doesn't help the problem. The ComputeTaskPool::scope will still run systems from the other app on it's thread.

Removed the render task pool and changed the ComputeTaskPool scope to not tick the global executors when running the parallel executor. Perf is roughly the same.

@hymm can you add Fixes #4718? The shift away from spin-ticking to using Executor::run should resolve it.

FYI some combination of the changes in this PR + the changes to scope on main reintroduced the flaky test problem in bevy_transform.

So the flakiness was very similar to before. It got reintroduced by transform propagation becoming multithreaded and me removing the catch unwind. So the parallel transform propagation in panicking test can poison the scope in another test. I pushed a fix where I tried to keep the executor.run's, but I'm not sure if the AssertUnwindSafe's are sound or not. Need to think about it more. Also should probably bench it just to see if it has much effect. I don't think it should as it's outside the hot loop.

ran a quick test with many_foxes and it seems to have improved the frame time a decent amount. Not sure what that's about.

@alice-i-cecile I would still mark this as blocked until #6534 or something equivalent is merged. This implementation is unsound unless we're 100% certain World: Send.

So more about AssertUnwindSafe. When I remove the AssertUnwindSafe, I get this note with the errors

= note: `UnwindSafe` is implemented for `&[closure@async_executor::Runner<'_>::runnable::{closure#0}::{closure#0}]`, but not for `&mut [closure@async_executor::Runner<'_>::runnable::{closure#0}::{closure#0}]`

but not sure currently what is taking the Runner as &mut instead of &

I'm not sure if the AssertUnwindSafe's are sound or not.

Unwind safety is about not leaving something in a invalid state due to an unwind, not memory safety and undefined behavior. Given that async_executor is entirely safe code (sans the task spawning from async_task), we cannot be left in a state that could trigger UB outside of our own use of unsafe.

This generally seems OK? See https://stackoverflow.com/questions/65762689/how-can-assertunwindsafe-be-used-with-the-catchunwind-future. The issue seems to be from the FnMut closure passed to https://github.com/smol-rs/async-executor/blob/master/src/lib.rs#L659, everything else involved uses immutable references. Sans potential lock poisoning, I don't think it's possible to leave async_executor in an invalid state.

Remember to update the PR title :)

I wanted to check that my assumption that switching to async executor's run was still worth it over try_tick in the scope, since there have been a decent number of changes in this PR and main since I did that.

For the most part there are clear gains to using run over try_tick, except for many_cubes which has a small gain on the mean and a small loss on the median. I'm going to dig a little into the traces to see if I can understand what's happening there, but I don't expect that to change the PR.

Negative numbers means the current PR version was faster.

| percent | Diff | PR run scope | PR try_tick scope | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| tracy frame time | mean | median | sigma | mean | median | sigma | mean | median | sigma | mean | median | sigma |

| many foxes | -5.39% | -6.80% | 37.05% | -0.23 | -0.28 | 2.06 | 4.27 | 4.12 | 5.56 | 4.5 | 4.4 | 3.5 |

| many lights | -5.91% | -6.77% | 25.90% | -0.43 | -0.48 | 1.51 | 7.27 | 7.09 | 5.83 | 7.7 | 7.57 | 4.32 |

| many animated sprites | -4.11% | -2.23% | 4.34% | -0.96 | -0.5 | 0.37 | 23.33 | 22.4 | 8.52 | 24.29 | 22.9 | 8.15 |

| 3d scene | -14.18% | -11.19% | -93.01% | -0.2 | -0.15 | -1.73 | 1.41 | 1.34 | 1.86 | 1.61 | 1.49 | 3.59 |

| many cubes | -0.77% | 0.57% | -19.17% | -0.11 | 0.08 | -1.48 | 14.2 | 14 | 7.72 | 14.31 | 13.92 | 9.2 |

| many sprites | -3.26% | -1.54% | 44.27% | -0.73 | -0.33 | 5.91 | 22.37 | 21.45 | 13.35 | 23.1 | 21.78 | 7.44 |

I still need to redo these for current main and with pipelining disabled. Once I get those numbers I'll update the tables in the description.

Updated the PR description.

except for many_cubes which has a small gain on the mean and a small loss on the median

Most of the loss seems to be in extract and applying the commands from extract. I do get why having another scope active with try_tick is a problem though.

As seen in the above image the scope running on the render thread is running tasks as fast as prepare_systems can make them. This is leading to the other threads being starved of tasks and also slowing down prepare_systems due to increased contention on the global queue (which is mostly empty). Not 100% sure why we we don't see the benefit of this as much on many_cubes. Might just be things not lining up timing wise and extract being a little more single threaded.

(sorry my vscode got overexcited, i'm still going through)

@aevyrie do you see anything in this PR that is strongly blocking your considerations around pacing? If you see that things can be reworked or adjusted later for that kind of work, and I believe that should be possible one way or another, then I feel the pacing concerns do not block this, but I wanted to at least ask.

@cart I feel that you should review this and for no good reason other than it being fun, you should get to push the bors button.

Not critical, no. I find the way BeforeIoAfterRenderStart is added to be a bit confusing. It would make a lot more sense if this was part of the default app event loop. IMO we should remove BeforeIoAfterRenderStart if it doesn't work for non-pipelined apps, and add it in a separate PR instead.

It would make a lot more sense if this was part of the default app event loop. IMO we should remove BeforeIoAfterRenderStart if it doesn't work for non-pipelined apps, and add it in a separate PR instead.

Hmm. Might be better to just let the frame pacing plugin itself add the stages then. It'll need to be different between when pipelined rendering is enabled or not. With pipelined rendering you need to insert after the extract schedule, but with it disabled you need to add it to the end of the rendering schedule.

framepacing would have to do its sleep after extract because that's technically the "beginning" of the next frame (before winit loads new input events).

Yup, this is exactly what I'm doing in bevy_framepace. It would be better if we could do it at the start of the event loop before polling, though I don't know by how much. I can look into how we can do this in another PR, though it will depend on if we intend to keep the winit and bevy update loops linked.

bors r+

Pull request successfully merged into main.

Build succeeded:

- build-and-install-on-iOS

- build-android

- build (macos-latest)

- build (ubuntu-latest)

- build-wasm

- build (windows-latest)

- build-without-default-features (bevy)

- build-without-default-features (bevy_ecs)

- build-without-default-features (bevy_reflect)

- check-compiles

- check-doc

- check-missing-examples-in-docs

- ci

- markdownlint

- msrv

- run-examples

- run-examples-on-wasm

- run-examples-on-windows-dx12