separate tonemapping and upscaling passes

Attempt to make features like bloom https://github.com/bevyengine/bevy/pull/2876 easier to implement.

This PR:

- Moves the tonemapping from

pbr.wgslinto a separate pass - also add a separate upscaling pass after the tonemapping which writes to the swap chain (enables resolution-independant rendering and post-processing after tonemapping)

- adds a

hdrbool to the camera which controls whether the pbr and sprite shaders render into aRgba16Floattexture

Open questions:

- ~should the 2d graph work the same as the 3d one?~ it is the same now

- ~The current solution is a bit inflexible because while you can add a post processing pass that writes to e.g. the

hdr_texture, you can't write to a separateuser_postprocess_texturewhile reading thehdr_textureand tell the tone mapping pass to read from theuser_postprocess_textureinstead. If the tonemapping and upscaling render graph nodes were to take in aTextureViewinstead of the view entity this would almost work, but the bind groups for their respective input textures are already created in theQueuerender stage in the hardcoded order.~ solved by creating bind groups in render node

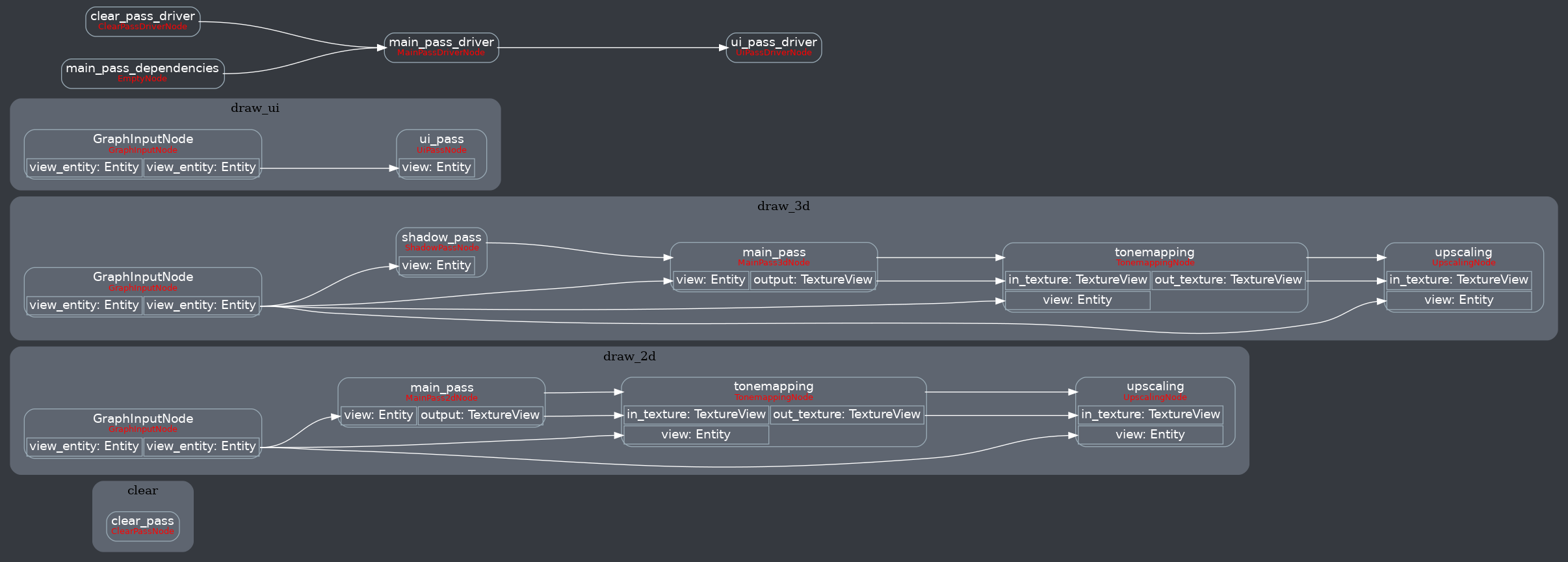

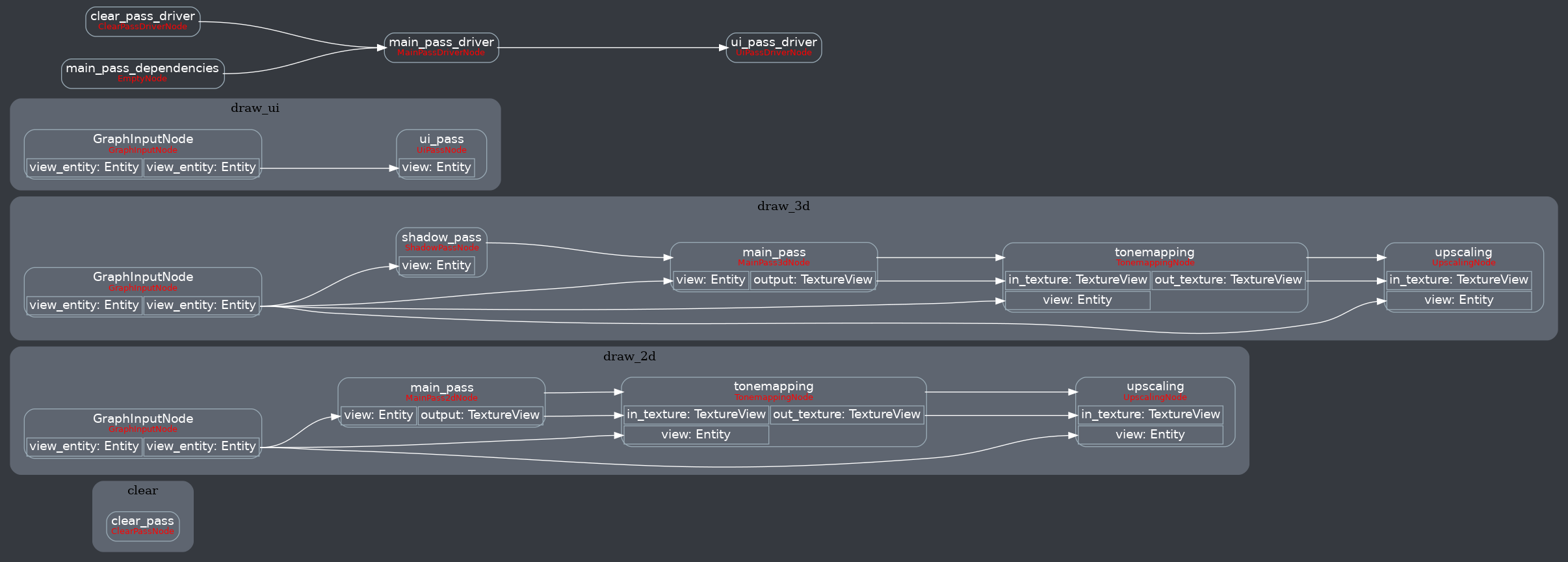

New render graph:

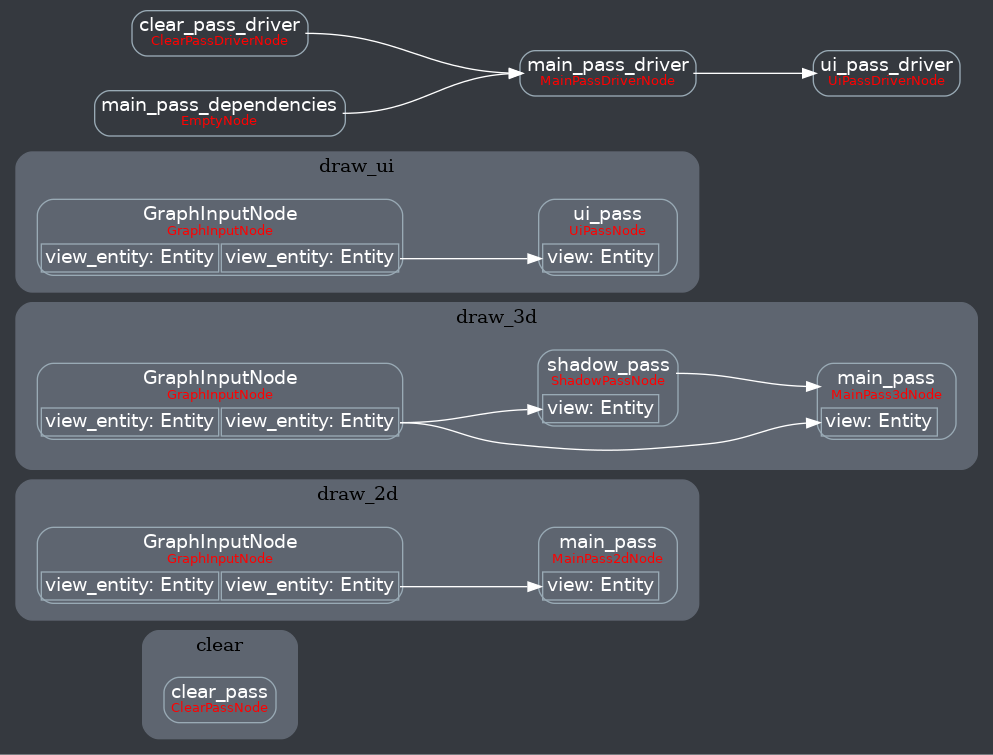

Before

Would it maybe make sense to do post processing after the main pass driver node rather than inside the 3d sub graph?

Would it maybe make sense to do post processing after the main pass driver node rather than inside the 3d sub graph?

I don't think so because I could image situations where the 2d and the 3d cameras would have different post processing settings which wouldn't work if it was after the main_pass_driver.

CI failure is because the breakout example has no 3d camera and doesn't write to the intermediate textures, so wgpu needs COPY_DST to zero the texture. Will be fixed either by using the same post processing in the 2d subgraph or by https://github.com/gfx-rs/wgpu/pull/2307.

New render graph:

The tonemapping node does nothing if hdr isn't enabled, because then the pbr.wgsl or sprite.wgsl shaders already do tone mapping.

hdr can be configured on cameras, but is automatically true for 3d and false for 2d.

Any chance you could address the feedback and update this on top of main @jakobhellermann ? It would be good to get this and bloom in for 0.7. :)

I rebased the code on main.

Open questions:

- where should configuration for hdr and the upscaling mode live? fields on a render target component?

- should we default to using HDR? (currently yes for 3d, no for 2d)

Oh, and either removing the upscaling key, or exposing and implementing it. I am fine with removing it for now and when we have some sensibly-configurable upscaling options, we add it then.

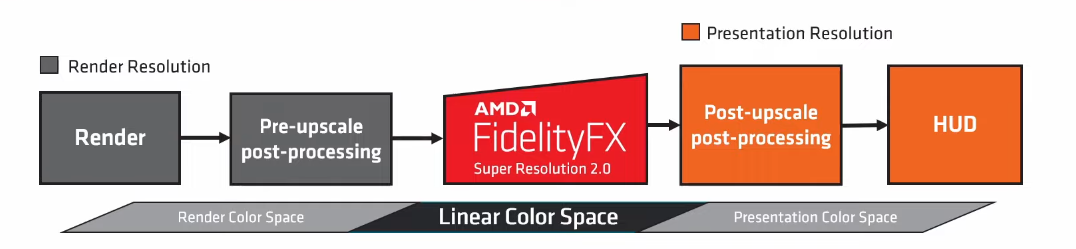

While watching the FSR 2.0 presentation, I noticed that UI rendering should be after the upscaling, which currently isn't the case in this PR.

That should definitely be done eventually, but I don't think that should block merging this.

I'm going to give this a first pass. After that, if this seems like the right approach, @jakobhellermann do you have any interest in adapting this to main (especially the changes in #4745)? Shouldn't require too many adjustments. Merging post-processing work is next on my todo list (and something I'd like to get in by Bevy 0.8). I'm happy to either endorse direction here if you've got time + motivation to do it, pick up work, or some combination of the two.

After a first pass, I'm relatively convinced this is the right approach. I've started updating this to main. I'll push the results here if I get them working.

I'm inclined to simplify some of the graph dataflow by removing the texture passing across nodes. I see why you chose to do that (it makes the nodes more flexible and more accurately models an effect chain), but in the interest of keeping the graph+implementation simple (and the changeset small / easier to port to future higher level post processing apis), I think I'd prefer to just read the texture directly from the view entity. That will also make it easier for users to insert their effects into the "graph based" effect chain, while they wait for a higher level api.

Some progress on the port:

Still more work to be done to make viewports / rendering multiple things to the same target work properly.

Sorry for not responding earlier, I was a little busy the last few days. Thanks for taking a look already. There are some aspects of this PR that I'm not so sure about/don't know what the ideal solution would be. For example the interaction of MSAA, tonemapping and HDR. Doing the MSAA resolve on HDR data apparently leads to pretty rough edges (https://mynameismjp.wordpress.com/2012/10/24/msaa-overview/ "Working with HDR and tone mapping"), which can be mitigated by doing invertible tonemapping at the time of the MSAA resolve and applying the inverse of the tone mapping function afterwards to continue post-processing: http://theagentd.blogspot.com/2013/01/hdr-inverse-tone-mapping-msaa-resolve.html.

Another question is whether this design is the most optimal use of textures and passes. For example say there's two different cameras rendered next to each other, with the current design I think they'd both get rendered and MSAA-resolved separately, driven by the CameraDriverNode. I don't know if it is possible/desirable to do that on one MSAA resolve.

Got viewports / multiple passes working again! Just gotta do a few more tweaks and re-wire up the tonemapping node, then I'll push my changes.

Sorry for not responding earlier

No worries! I'm the one that left this hanging for months :)

There are some aspects of this PR that I'm not so sure about/don't know what the ideal solution would be

Hmm yeah that MSAA interaction does seem pretty nasty. I'll read up and try to form some opinions. At first glance, inverse tone mapping seems pretty nasty to me. Feels like that introduces a lot of room for error / might eliminate some tonemapping algorithms as options.

I don't know if it is possible/desirable to do that on one MSAA resolve.

Yeah this is probably worth experimenting with.

Sorry for not responding earlier, I was a little busy the last few days. Thanks for taking a look already. There are some aspects of this PR that I'm not so sure about/don't know what the ideal solution would be. For example the interaction of MSAA, tonemapping and HDR. Doing the MSAA resolve on HDR data apparently leads to pretty rough edges (https://mynameismjp.wordpress.com/2012/10/24/msaa-overview/ "Working with HDR and tone mapping"), which can be mitigated by doing invertible tonemapping at the time of the MSAA resolve and applying the inverse of the tone mapping function afterwards to continue post-processing: http://theagentd.blogspot.com/2013/01/hdr-inverse-tone-mapping-msaa-resolve.html.

There is also an article by Brian Karis http://graphicrants.blogspot.com/2013/12/tone-mapping.html and Matt Pettineo’s own siggraph 2015 talk http://advances.realtimerendering.com/s2015/rad_siggraph_advances_2015.pptx both linked from the Pettineo article. It seems like the practical way is to do tonemapping or an approximation that focuses on luminance and is easily invertible so that the MSAA resolve happens before post processing and on colours as they would be displayed in screen, or a close approximation, and then convert the resolved values back to HDR by inverting the tonemapping/approximation. This has shipped in games and has support of real time graphics engineers at the top of the field so sounds like a good bet to me.

Another question is whether this design is the most optimal use of textures and passes. For example say there's two different cameras rendered next to each other, with the current design I think they'd both get rendered and MSAA-resolved separately, driven by the

CameraDriverNode. I don't know if it is possible/desirable to do that on one MSAA resolve.

I’ll have to take another look at this with the new perspective of cart’s render target and viewport changes. People have discussed having different msaa for different targets, but I’m not sure if they would intend it for different viewports within a target. That sounds like it would basically require the viewport to use its own msaa colour attachment with the appropriate configuration of msaa samples but resolve into the render target.

Just pushed my changes:

- Merged into main

- Removed "texture passing" from the graph in favor of direct view input access

- Fixed camera driven rendering + viewports. This includes re-adding "texture sharing"

(3) has some problems. Mixing and matching hdr and non-hdr cameras on the same render target doesn't work (at least, with viewports). We might want to revert the "texture sharing" changes and try to make per-camera textures work instead.

Ok I'm all caught up on the MSAA + tonemapping issue and its solutions.

Some thoughts:

- It looks like a "inverse tonemapping" (or approximate) solution is our best option. My initial concern about needing to limit ourselves to "safely invertible" tonemapping algorithms seems unfounded. We don't need to use the same mapping algorithm we use on the final image. We just need to find one that accomplishes the goal of "encoding and decoding" our information. This could still be configurable, but the constraints we're solving for are different than actual tonemapping.

- If we do use "real" tonemapping algorithms, this does limit what options are available to us / puts the burden on us to implement inverses for each one. Precision is also a concern. See the floating point issues here (which were largely resolved for that image, but I worry about subtle losses, especially as we expand our tonemapping options). Doing this seems like we'd be on the fringes of standard practice, which scares me.

- The simpler "luminance based" tone mapping proposed by Brian Karis seems preferable, both because it is cheaper and because it is simpler. It seems less likely to introduce precision issues / it is more easily invertible. The fact that this is used in shipped games also increases my comfort with this approach.

- I'd like to see how / if Unity and Unreal solve this problem.

- MSAA + HDR might want its own "special cased" pipeline config. We need to find the right balance between pipeline consistency, simplicity, and performance.

- We don't need to solve these problems in this PR. We should solve them (and ill try to do that in a follow up pr), but we can just disable "HDR textures" by default while we sort things out, given that currently we have no effects that rely on HDR textures. Godot's new renderer currently suffers from the same problem / hasn't implemented a solution yet, so we'd be in good company here. HDR + SMAA or TSAA is probably going to be a popular alternative anyway (once we support those). I say "hdr textures" because even if we tonemap in the shader, we're still doing HDR lighting. We might want to clarify our language here (or at least, do it in the docs). Checking the

Camera::hdrbox doesn't enable "hdr lighting". It enables "rendering to an HDR texture and deferring tonemapping".

For now, I'm going to focus on resolving the "how / if we share the main textures across cameras" problem. Then I'll resolve the conflicts with the recent "shader imports" changes on main and hopefully we can reach relative consensus on this / get it merged.

Ok I'm all caught up on the MSAA + tonemapping issue and its solutions.

Some thoughts:

- It looks like a "inverse tonemapping" (or approximate) solution is our best option. My initial concern about needing to limit ourselves to "safely invertible" tonemapping algorithms seems unfounded. We don't need to use the same mapping algorithm we use on the final image. We just need to find one that accomplishes the goal of "encoding and decoding" our information. This could still be configurable, but the constraints we're solving for are different than actual tonemapping.

- If we do use "real" tonemapping algorithms, this does limit what options are available to us / puts the burden on us to implement inverses for each one. Precision is also a concern. See the floating point issues here (which were largely resolved for that image, but I worry about subtle losses, especially as we expand our tonemapping options). Doing this seems like we'd be on the fringes of standard practice, which scares me.

- The simpler "luminance based" tone mapping proposed by Brian Karis seems preferable, both because it is cheaper and because it is simpler. It seems less likely to introduce precision issues / it is more easily invertible. The fact that this is used in shipped games also increases my comfort with this approach.

- I'd like to see how / if Unity and Unreal solve this problem.

- MSAA + HDR might want its own "special cased" pipeline config. We need to find the right balance between pipeline consistency, simplicity, and performance.

- We don't need to solve these problems in this PR. We should solve them (and ill try to do that in a follow up pr), but we can just disable "HDR textures" by default while we sort things out, given that currently we have no effects that rely on HDR textures. Godot's new renderer currently suffers from the same problem / hasn't implemented a solution yet, so we'd be in good company here. HDR + SMAA or TSAA is probably going to be a popular alternative anyway (once we support those). I say "hdr textures" because even if we tonemap in the shader, we're still doing HDR lighting. We might want to clarify our language here (or at least, do it in the docs). Checking the

Camera::hdrbox doesn't enable "hdr lighting". It enables "rendering to an HDR texture and deferring tonemapping".

I agree on all points. For point 4 I would think either Unreal is using what Karis proposed or they found something better since then.

Just pushed some changes:

- Merged into main

- Re-added tonemapping and upscaling to 2d

- Separated tonemapping config from hdr, allowing users to "opt out" of tonemapping (both when hdr is enabled and disabled). Specialization keys now specify tonemapping separately too.

- Disabled tonemapping for sprites by default, as this makes their colors "wrong". If users want to build 2d hdr lighting, they can enable tonemapping manually.

I'm seeing panics with 2d meshes, I verified I'm on your latest commit.

mesh2d_manual panic

2022-07-11T23:05:15.173521Z INFO bevy_render::renderer: AdapterInfo { name: "NVIDIA GeForce RTX 3050 Ti Laptop GPU", vendor: 4318, device: 9632, device_type: DiscreteGpu, backend: Vulkan }

2022-07-11T23:05:15.504262Z INFO naga::back::spv::writer: Skip function Some("mesh2d_position_world_to_clip")

2022-07-11T23:05:15.504301Z INFO naga::back::spv::writer: Skip function Some("mesh2d_position_local_to_clip")

2022-07-11T23:05:15.504312Z INFO naga::back::spv::writer: Skip function Some("mesh2d_normal_local_to_world")

2022-07-11T23:05:15.515194Z ERROR wgpu::backend::direct: Handling wgpu errors as fatal by default

thread 'main' panicked at 'wgpu error: Validation Error

Caused by:

In a RenderPass

note: encoder = `<CommandBuffer-(0, 1, Vulkan)>`

In a set_pipeline command

note: render pipeline = `colored_mesh2d_pipeline`

Render pipeline targets are incompatible with render pass

Incompatible color attachment: [Rgba16Float] != [Bgra8UnormSrgb]

mesh2d_vertex_color_texture panic

2022-07-11T23:09:03.717326Z INFO bevy_render::renderer: AdapterInfo { name: "NVIDIA GeForce RTX 3050 Ti Laptop GPU", vendor: 4318, device: 9632, device_type: DiscreteGpu, backend: Vulkan }

2022-07-11T23:09:04.218017Z ERROR wgpu::backend::direct: Handling wgpu errors as fatal by default

thread 'main' panicked at 'wgpu error: Validation Error

Caused by:

In a RenderPass

note: encoder = `<CommandBuffer-(0, 12, Vulkan)>`

In a set_pipeline command

note: render pipeline = `transparent_mesh2d_pipeline`

Render pipeline targets are incompatible with render pass

Incompatible color attachment: [Rgba16Float] != [Bgra8UnormSrgb]

mesh2d

2022-07-11T23:13:32.257005Z INFO bevy_render::renderer: AdapterInfo { name: "NVIDIA GeForce RTX 3050 Ti Laptop GPU", vendor: 4318, device: 9632, device_type: DiscreteGpu, backend: Vulkan }

2022-07-11T23:13:32.569748Z ERROR wgpu::backend::direct: Handling wgpu errors as fatal by default

thread 'main' panicked at 'wgpu error: Validation Error

Caused by:

In a RenderPass

note: encoder = `<CommandBuffer-(0, 1, Vulkan)>`

In a set_pipeline command

note: render pipeline = `transparent_mesh2d_pipeline`

Render pipeline targets are incompatible with render pass

Incompatible color attachment: [Rgba16Float] != [Bgra8UnormSrgb]

This effects more examples, but I'll stop there.

The panics looks like fairly simple pipeline configuration omissions. Giving this a look through in its current state...

Finally got caught up with main / wgpu 0.13 / the various renderer changes. Broken mesh2d pipelines are also fixed.

Is upscaling only enabled if an UpscalingTarget is inserted on the view?

It is technically enabled everywhere. The upscaling node/pass doubles as the ldr/intermediate texture -> swap chain texture write. Also note that we don't really do upscaling atm. @superdump

Is upscaling only enabled if an UpscalingTarget is inserted on the view?

It is technically enabled everywhere. The upscaling node/pass doubles as the ldr/intermediate texture -> swap chain texture write.

Ok. I'd be interested to hear how well things work to necessarily resample from the 'render' texture into the swapchain texture on mobile.

Also note that we don't really do upscaling atm. @superdump

Sure we do. It just uses a poor quality filter. :)

I've merged this with main and reconciled it with the changes in #5413. I've made some changes:

- Renamed RenderTextureFormat to SurfaceTextureFormat for clarity. This is about what the surface supports, not what formats are available for use for rendering generally. The fact that we're currently selecting the "default white texture format" based on what the os surface supports is pretty weird.

- Went back to using

TextureFormat::bevy_default()in every case but the final blit to the surface in UpscalingNode. I personally think this is a good move for a variety of reasons: it simplifies accessing this value and it standardizes format expectations / behavior across OS-es (instead of being random / unpredictable). The biggest downside here is that the blit step becomes "required" as the main pass render pipelines are no longer aware of what the surface supports (by default). Making it possible to skip the blit step would require re-piping the supported surface format into the pipeline.

I think we should probably make a few more changes:

- Remove the "compile time" selection of the

bevy_defaultTextureFormat. Pretty sure the separate UpscalingNode blit will resolve this problem. This was always a bit of a hack. Using the runtime surface format is the better approach. This seems in-line with @superdump's suggestion above. - Supporting one global SurfaceTextureFormat might ultimately bite us if different surfaces on a given OS have different format restrictions. Unlikely, I think, but something to keep in the back of our minds if the error ever shows up in the wild.

Barring additional feedback, I plan on making the following changes:

- Remove compile-time selection of

bevy_defaultTextureFormat and make sure it works correctly on wasm / android. - Disable

hdrby default. This means we will use "tonemap in shader" by default, ensuring MSAA works as expected out of the box. We can revisit this once we have an alternative AA implementation and/or we implement a reversible intermediate tonemapping solution (as discussed above).

Does "using the runtime surface format" for the TextureFormat mean that using a texture as the camera RenderTarget for other formats than TextureFormat::bevy_default() like RGBA16 will work? That would be super cool.

I think disabling hdr by default makes sense. Better to be able to move forward with this than blocking on figuring out the best MSAA solution.

Does "using the runtime surface format" for the TextureFormat mean that using a texture as the camera RenderTarget for other formats than TextureFormat::bevy_default() like RGBA16 will work? That would be super cool.

Doing this would require specializing the pipeline on each camera's RenderTarget format. We could do this, but idk if its worth it.

Ah wait hold on. Actually it should just work! I wasn't thinking properly. The final blit in UpscalingNode will make it work "as expected".