feature: Allow for New relic integration

Feature request

We are using bentoML for our model serving. We found that it is a great library. However when integrating with new relic we are facing issues.

Motivation

We have been using bentoML for our work. It is a great repository thank you for building this. However, when we try to integrate bentoML with new relic monitoring we are getting the following error. We tried mounting a fastapi app/ custom middleware but seems like these efforts are not fruitful. Please help us to integrate newrelic with bentoML

Debug mode from bentoML:

/usr/local/lib/python3.8/site-packages/uvicorn/lifespan/on.py:97: RuntimeWarning: coroutine 'middleware_wrapper' was never awaited

self.logger.error(msg, exc_info=exc)

Object allocated at (most recent call last):

File "/usr/local/lib/python3.8/site-packages/asgiref/compatibility.py", lineno 34

instance = application(scope)

Complete error Message

ERROR: Exception in ASGI application

Traceback (most recent call last):

File "/usr/local/lib/python3.9/site-packages/uvicorn/protocols/http/h11_impl.py", line 407, in run_asgi

result = await app( # type: ignore[func-returns-value]

File "/usr/local/lib/python3.9/site-packages/uvicorn/middleware/proxy_headers.py", line 78, in __call__

return await self.app(scope, receive, send)

File "/usr/local/lib/python3.9/site-packages/newrelic/api/asgi_application.py", line 387, in nr_async_asgi

return await coro

File "/usr/local/lib/python3.9/site-packages/newrelic/common/async_proxy.py", line 148, in __next__

return self.send(None)

File "/usr/local/lib/python3.9/site-packages/newrelic/common/async_proxy.py", line 120, in send

return self.__wrapped__.send(value)

File "/usr/local/lib/python3.9/site-packages/newrelic/api/asgi_application.py", line 70, in __call__

return await self.app(scope, receive, self.send_inject_browser_agent)

File "/usr/local/lib/python3.9/site-packages/newrelic/api/asgi_application.py", line 330, in nr_async_asgi

return await wrapped(scope, receive, send)

File "/usr/local/lib/python3.9/site-packages/starlette/applications.py", line 124, in __call__

await self.middleware_stack(scope, receive, send)

File "<string>", line 5, in wrapper

File "/usr/local/lib/python3.9/site-packages/starlette/middleware/errors.py", line 184, in __call__

raise exc

File "/usr/local/lib/python3.9/site-packages/starlette/middleware/errors.py", line 162, in __call__

await self.app(scope, receive, _send)

File "/usr/local/lib/python3.9/site-packages/newrelic/hooks/framework_starlette.py", line 108, in middleware_wrapper

return await FunctionTraceWrapper(wrapped, name=name)(*args, **kwargs)

File "<string>", line 5, in wrapper

File "/usr/local/lib/python3.9/site-packages/opentelemetry/instrumentation/asgi/__init__.py", line 581, in __call__

await self.app(scope, otel_receive, otel_send)

File "/usr/local/lib/python3.9/site-packages/asgiref/compatibility.py", line 35, in new_application

return await instance(receive, send)

TypeError: 'coroutine' object is not callable

/usr/local/lib/python3.9/site-packages/uvicorn/protocols/http/h11_impl.py:416: RuntimeWarning: coroutine 'middleware_wrapper' was never awaited

self.transport.close()

Object allocated at (most recent call last):

File "/usr/local/lib/python3.9/site-packages/asgiref/compatibility.py", lineno 34

instance = application(scope)

Other

No response

@kkkarthik6 thanks for sharing the use case. Are you using the @asgi_application decorator to integrate New Relic? Upon a quick Google search, it seems like New Relic also offers a Python agent for integrating with ASGI applications, like Uvicorn which BentoML was built on top of. Have you evaluated if that works for your use case?

Update: New Relic's Python Agent turns out to be the incorrect integration point with BentoML. See conversation later in this thread, https://github.com/bentoml/BentoML/issues/3463#issuecomment-1410076941

Hi @ssheng , Thanks for the reply. We have the following setup and also tried mounting fastAPI as our asgi middleware. Seems like it always fails to boot with the above warning.

import newrelic.agent

newrelic.agent.initialize('/app/newrelic.ini')

import numpy as np

import bentoml

from bentoml.io import NumpyNdarray

import time

import tracemalloc

tracemalloc.start()

from bentoml import Service

async def sleep():

time.sleep(1)

class ACVService(Service):

@Service.asgi_app.setter

def asgi_app(self):

from bentoml._internal.server.http_app import HTTPAppFactory

return HTTPAppFactory(self)(enabale_metrics=False)

svc = ACVService("data-bentoml-monitoring")

asgi_bento = svc.asgi_app

@newrelic.agent.asgi_application()

@svc.api(input=NumpyNdarray(), output=NumpyNdarray())

async def classify(input_series: np.ndarray) -> np.ndarray:

await sleep()

return input_series

Start the app using this command

BENTOML_DEBUG=TRUE uvicorn src.service:asgi_bento --port 5000 --host 0.0.0.0 --reload

Error log.

Traceback (most recent call last): data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/uvicorn/protocols/http/h11_impl.py", line 407, in run_asgi data-bento-monitoring-retail-1 | result = await app( # type: ignore[func-returns-value] data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/uvicorn/middleware/proxy_headers.py", line 78, in __call__ data-bento-monitoring-retail-1 | return await self.app(scope, receive, send) data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/newrelic/api/asgi_application.py", line 363, in nr_async_asgi data-bento-monitoring-retail-1 | return await coro data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/newrelic/common/async_proxy.py", line 148, in __next__ data-bento-monitoring-retail-1 | return self.send(None) data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/newrelic/common/async_proxy.py", line 120, in send data-bento-monitoring-retail-1 | return self.__wrapped__.send(value) data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/newrelic/api/asgi_application.py", line 363, in nr_async_asgi data-bento-monitoring-retail-1 | return await coro data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/newrelic/common/async_proxy.py", line 148, in __next__ data-bento-monitoring-retail-1 | return self.send(None) data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/newrelic/common/async_proxy.py", line 120, in send data-bento-monitoring-retail-1 | return self.__wrapped__.send(value) data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/starlette/applications.py", line 124, in __call__ data-bento-monitoring-retail-1 | await self.middleware_stack(scope, receive, send) data-bento-monitoring-retail-1 | File "<string>", line 5, in wrapper data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/starlette/middleware/errors.py", line 184, in __call__ data-bento-monitoring-retail-1 | raise exc data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/starlette/middleware/errors.py", line 162, in __call__ data-bento-monitoring-retail-1 | await self.app(scope, receive, _send) data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/newrelic/hooks/framework_starlette.py", line 112, in middleware_wrapper data-bento-monitoring-retail-1 | return await FunctionTraceWrapper(wrapped, name=name)(*args, **kwargs) data-bento-monitoring-retail-1 | File "<string>", line 5, in wrapper data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/bentoml/_internal/server/http/instruments.py", line 111, in __call__ data-bento-monitoring-retail-1 | self._setup() data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/simple_di/__init__.py", line 139, in _ data-bento-monitoring-retail-1 | return func(*_inject_args(bind.args), **_inject_kwargs(bind.kwargs)) data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/bentoml/_internal/server/http/instruments.py", line 51, in _setup data-bento-monitoring-retail-1 | self.legacy_metrics_request_duration = metrics_client.Histogram( data-bento-monitoring-retail-1 | File "/usr/local/lib/python3.8/site-packages/bentoml/_internal/server/metrics/prometheus.py", line 151, in Histogram data-bento-monitoring-retail-1 | return partial(self.prometheus_client.Histogram, registry=self.registry) File "/usr/local/lib/python3.8/site-packages/bentoml/_internal/server/metrics/prometheus.py", line 59, in prometheus_client assert os.path.isdir(self.multiproc_dir)

I'll share an experiment I ran:

# run-newrelic-instrumented-bento.sh

pip install newrelic

NEW_RELIC_LICENSE_KEY="MY KEY" \

NEW_RELIC_APP_NAME="bento-tensorflow-poc" \

NEW_RELIC_APPLICATION_LOGGING_ENABLED=true \

NEW_RELIC_APPLICATION_LOGGING_LOCAL_DECORATING_ENABLED=true \

NEW_RELIC_APPLICATION_LOGGING_FORWARDING_ENABLED=true \

newrelic-admin run-program uvicorn poc_api.service:svc.asgi_app --host 0.0.0.0 --port 5000 --workers 1

Requirements

bentoml==1.0.13

rich

loguru # the new-relic CLI instruments this logging library with trace ID, etc.

I used the train.py from the BentoML keras example

# src/poc_model/service.py

import numpy as np

from numpy.typing import NDArray

from typing import List

from PIL.Image import Image as PILImage

from textwrap import dedent

import bentoml

from bentoml.io import Image, JSON

from bentoml.io import NumpyNdarray

from bentoml.exceptions import InternalServerError

from pydantic import BaseModel, Field

# endpoint that inputs and outputs a string

from bentoml.io import Text

from loguru import logger as LOGGER

mnist_classifier: bentoml.Model = bentoml.tensorflow.get("tensorflow_mnist:latest")

mnist_classifier_runner: bentoml.Runner = mnist_classifier.to_runner()

svc = bentoml.Service(

name="tensorflow_mnist_service",

runners=[mnist_classifier_runner],

)

from rich import inspect

print(inspect(svc, methods=True))

class ClassifyDigitResponse(BaseModel):

confidences: List[float] = Field(..., description=dedent("""\

Confidence scores for each digit class.

The `0`th element is the confidence score for digit `0`,

the `1`st element is the confidence score for digit `1`,

the `2`st element is the confidence score for digit `2`,

and so on.

"""))

"""

Request:

curl -X 'POST' \

'http://0.0.0.0:5000/classify-digit' \

-H 'accept: application/json' \

-H 'Content-Type: image/jp2' \

--data-binary '@7.png'

Response:

{

"confidences": [

-3.8217179775238037,

1.1907492876052856,

-1.0903446674346924,

-0.28582924604415894,

-3.335996627807617,

-5.478107929229736,

-13.418190956115723,

14.586523056030273,

-1.2501418590545654,

1.6832548379898071

]

}

Response headers:

content-length: 213

content-type: application/json

date: Tue,31 Jan 2023 07:55:31 GMT

server: uvicorn

x-bentoml-request-id: 1925001177826861353

"""

@svc.api(

route="/classify-digit",

input=Image(),

output=JSON(pydantic_model=ClassifyDigitResponse),

)

async def classify_digit(f: PILImage) -> "np.ndarray":

assert isinstance(f, PILImage)

arr = np.array(f) / 255.0

assert arr.shape == (28, 28)

# We are using greyscale image and our PyTorch model expect one extra channel dimension

arr = np.expand_dims(arr, (0, 3)).astype("float32") # reshape to [1, 28, 28, 1]

confidences_arr: NDArray = await mnist_classifier_runner.async_run(arr) # [1, 10]

confidences = list(confidences_arr[0])

return ClassifyDigitResponse(confidences=confidences)

"""

Request:

curl -X 'POST' \

'http://0.0.0.0:5000/newrelic-log' \

-H 'accept: text/plain' \

-H 'Content-Type: text/plain' \

-d 'None'

Response: "None"

Response Headers:

content-length: 4

content-type: text/plain; charset=utf-8

date: Tue,31 Jan 2023 07:50:05 GMT

server: uvicorn

x-bentoml-request-id: 283141936243368829

"""

@svc.api(input=Text(), output=Text(), route="/newrelic-log")

def health(text: str):

LOGGER.info(f"This is an INFO log. {text}")

return text

"""

Request:

curl -X 'POST' \

'http://0.0.0.0:5000/newrelic-error' \

-H 'accept: text/plain' \

-H 'Content-Type: text/plain' \

-d 'None'

Response: error

Response headers:

content-length: 2

content-type: application/json

date: Tue,31 Jan 2023 07:48:40 GMT

server: uvicorn

Note: the bento trace id wasn't returned here ^^^. That'd be bad if I were trying to debug this error.

Also, the NewRelic trace ID header isn't here in both endpoints.

"""

@svc.api(input=Text(), output=Text(), route="/newrelic-error")

def error(text: str):

LOGGER.error(f"This is an ERROR log. {text}")

exc = InternalServerError(message="Fail!")

LOGGER.exception(exc)

raise exc

Here are some of the logs I got. You can see that the instrumentation affected loguru:

6ef6f8a|bento-tensorflow-poc|

2023-01-31 00:48:40.786 | ERROR | poc_api.service:error:76 - Fail! NR-LINKING|MzM1NjA0OHxBUE18QVBQTElDQVRJT058MTIwODc1MDAzOQ|PRVLOERIDDOCH|d1b88a559281729ffa23b7a5fd0cf8d0|9954d0a586ef6f8a|bento-tensorflow-poc|

NoneType: None

Exception on /newrelic-error [POST] NR-LINKING|MzM1NjA0OHxBUE18QVBQTElDQVRJT058MTIwODc1MDAzOQ|PRVLOERIDDOCH|d1b88a559281729ffa23b7a5fd0cf8d0|9954d0a586ef6f8a|bento-tensorflow-poc|

Traceback (most recent call last):

File "/Users/eric/repos/extra/bento-tensorflow-poc/venv/lib/python3.8/site-packages/bentoml/_internal/server/http_app.py", line 336, in api_func

output = await run_in_threadpool(api.func, input_data)

File "/Users/eric/repos/extra/bento-tensorflow-poc/venv/lib/python3.8/site-packages/newrelic/hooks/framework_starlette.py", line 213, in wrap_run_in_threadpool

return await wrapped(func, *args, **kwargs)

File "/Users/eric/repos/extra/bento-tensorflow-poc/venv/lib/python3.8/site-packages/starlette/concurrency.py", line 41, in run_in_threadpool

return await anyio.to_thread.run_sync(func, *args)

File "/Users/eric/repos/extra/bento-tensorflow-poc/venv/lib/python3.8/site-packages/anyio/to_thread.py", line 31, in run_sync

return await get_asynclib().run_sync_in_worker_thread(

File "/Users/eric/repos/extra/bento-tensorflow-poc/venv/lib/python3.8/site-packages/anyio/_backends/_asyncio.py", line 937, in run_sync_in_worker_thread

return await future

File "/Users/eric/repos/extra/bento-tensorflow-poc/venv/lib/python3.8/site-packages/anyio/_backends/_asyncio.py", line 867, in run

result = context.run(func, *args)

File "/Users/eric/repos/extra/bento-tensorflow-poc/venv/lib/python3.8/site-packages/newrelic/core/context.py", line 85, in _context_wrapper

return wrapped(*args, **kwargs)

File "/Users/eric/repos/extra/bento-tensorflow-poc/api/src/poc_api/service.py", line 77, in error

raise exc

bentoml.exceptions.InternalServerError: Fail!

INFO: 127.0.0.1:64048 - "POST /newrelic-error HTTP/1.1" 500 Internal Server Error

2023-01-31 00:50:06.017 | INFO | poc_api.service:health:69 - This is an INFO log. None NR-LINKING|MzM1NjA0OHxBUE18QVBQTElDQVRJT058MTIwODc1MDAzOQ|PRVLOERIDDOCH|0aa3fc64ef1857294ba0c6cf8283ab43|61c25b67f8b9959e|bento-tensorflow-poc|

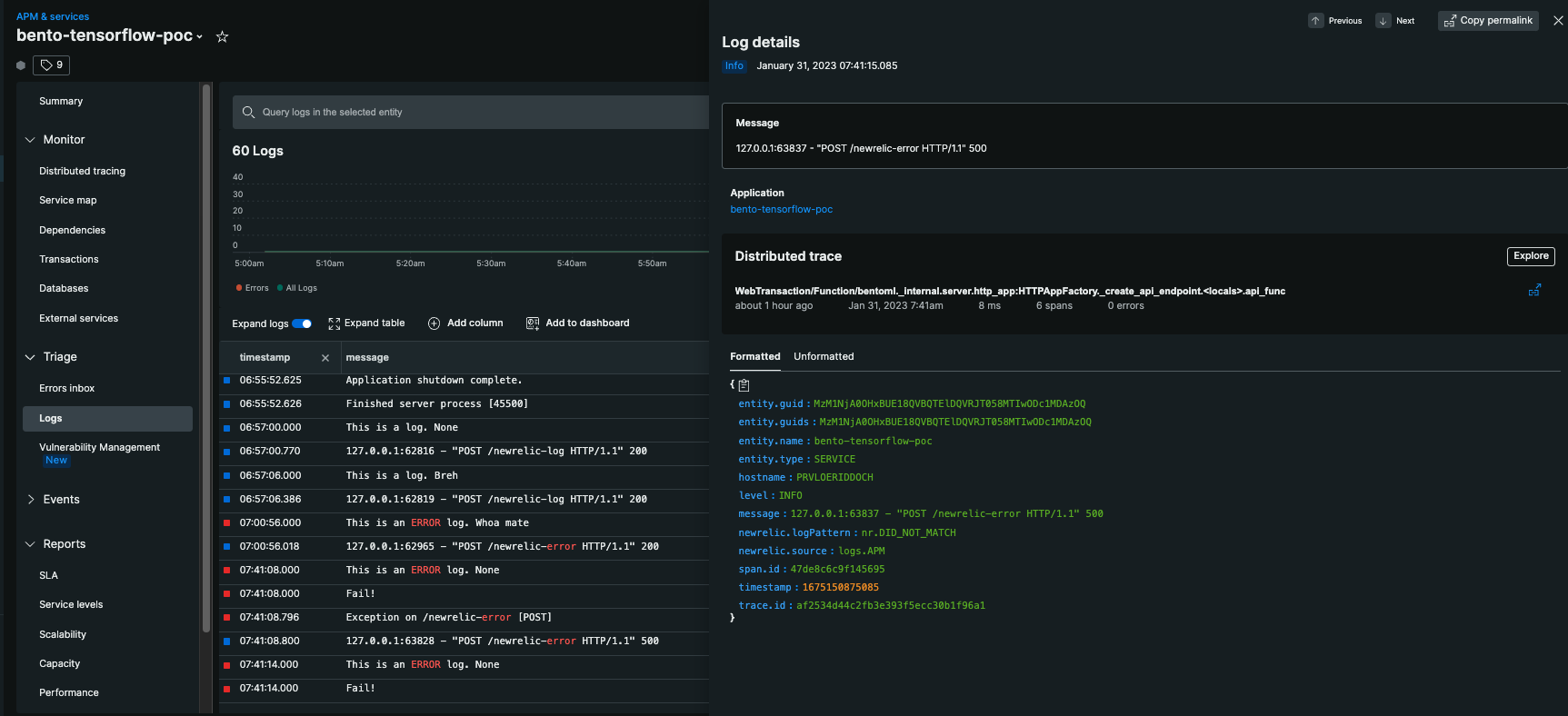

Here's the logs page in NewRelic

Here's the tracing page:

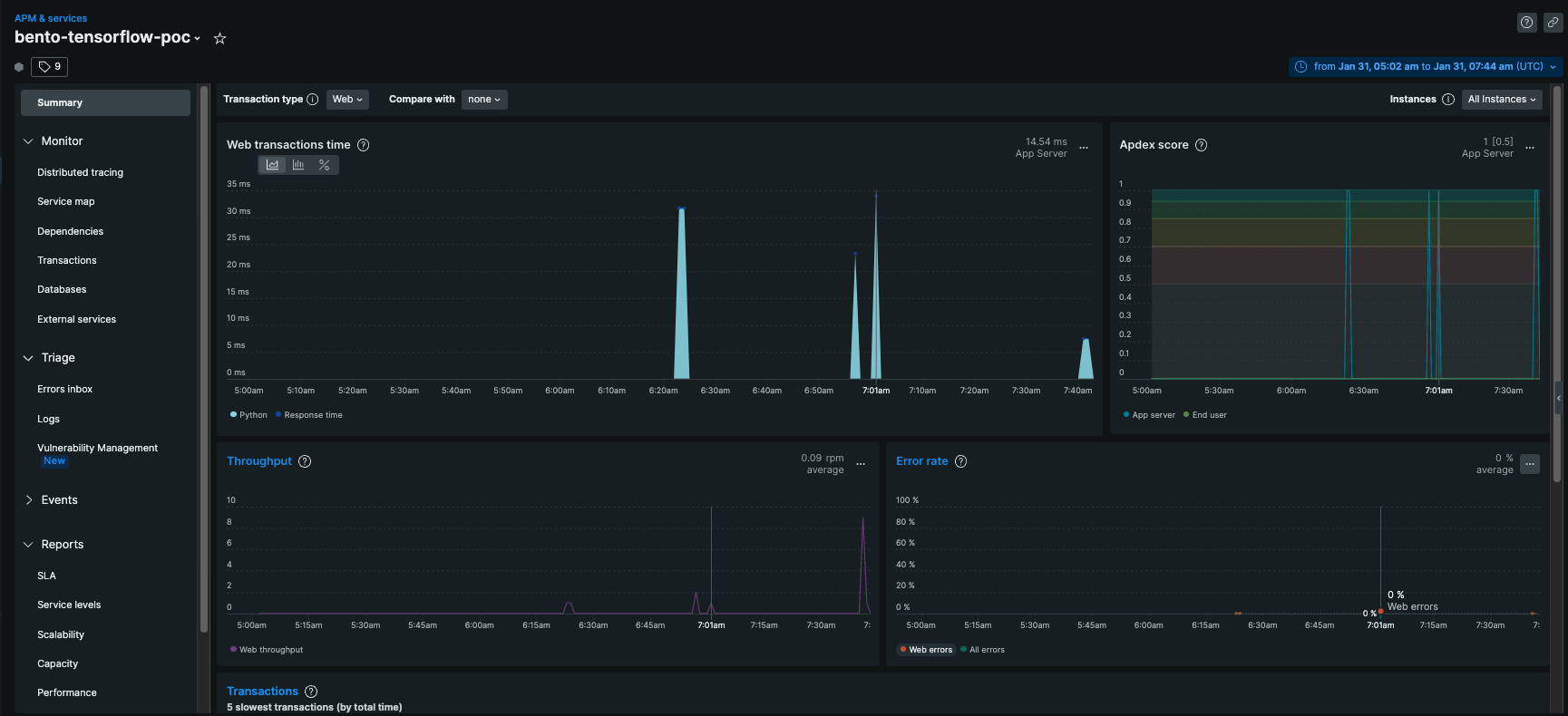

Summary page

I've done this same setup with FastAPI before. Unlike FastAPI, with Bento I'm not seeing

-

Any evidence of errors, even though most of my requests were to the

/newrelic-errorendpoint -

No metrics regarding which path I hit

/newrelic-errorshows up in some of the logs, but FastAPI's NewRelic instrumentation will give me a full "Access Logs report" AKA which paths returned which status codes and with what ratio - No trace ID in the response headers; with FastAPI the response headers include the trace ID. FastAPI also knows how to handle when the trace ID is present in the request headers

Can you think of a way I could properly configure NewRelic to have these features? These may be deal breakers for us. At the end of the day, we need to be able to:

- know which endpoints are getting errors

- be able to look up errors using a trace ID

- be able to correlate logs from up- and downstream services using the trace ID

And it's a major plus if:

- we can get a good traceback when we correlate the logs to the error trace ID

There's an ongoing NewRelic support thread here, though as of right now, there isn't much there.

Moving some of the conversation we had with @phitoduck here. Hopefully they can be helpful to @kkkarthik6.

Thanks for the insightful post, @phitoduck. It seems like you are integrating with newrelic through the Python Agent. Haven’t read a lot into the implementation, it seems like the instrumentation is automatically injected into the ASGI application if the application was started with newrelic-admin run-program uvicorn.

Treating BentoML as a simple ASGI application will not work out-of-the-box. BentoML’s runner architecture spawns multiple processes to optimize system resource utilization. Instead of a single uvicorn process, BentoML consists of multiple ASGI processes.

I will need to look into New Relic further to investigate a better integration. Since BentoML is fully compliant to OpenTelemetry and Prometheus standards, I’m hopeful that New Relic has proper supports for those standards.

New Relic supports OpenTelemetry Protocol for exporting trace data. In BentoML, you can customize the OTLP exporter in the Tracing configuration with New Relic’s endpoint.

New Relic supports exporting Prometheus metrics through their Prometheus OpenMetrics integration for Docker.

I opened a ticket with the NewRelic support team. Here's an email I just got from them:

Hi,

It looks like that our devs have analyzed the issue and as per their recommendation, we don't support the way BentoML internally creates multiple asgi apps and suggest to use OTEL or prometheus open metrics to report trace data.

However, I can definitely see the advantage of supporting BentoML. I'll be submitting a feature request on your behalf for this capability. At the moment, I don't have a timeline for this feature, but submitting this request will certainly help with its prioritization. We take requests like this very seriously and use them to prioritize what we implement next. Since we track feature requests internally, I'll mark this ticket to be closed.

We appreciate you taking the time to bring this to our attention, and hope that you will continue to do so in the future. If you have any further questions or comments about this, please let us know by replying to this ticket and we'll be happy to continue the conversation.

Best, Raji Durai Technical Support Engineer New Relic, Inc | Portland

Hi @phitoduck, I see you got a response from NewRelic and they suggested using OpenTelemetry to report metrics and you created an example in this repo https://github.com/phitoduck/bentoml-opentelemetry-newrelic. Does that work for you, or was it an experiment? I definitely need to monitor our BentoML services with NewRelic! Thank you for investigating this.