Startup overhead

Hello,

thank you for this tool, it's being great to use to look into profiling C extensions!

I am running the tool to compare psycopg2 and psycopg3 behaviours (back on a task I was doing a few months ago by running py-spy on pgbench runs. I have noticed a massive overhead at import time. Using this program (here are the requirements):

#!/usr/bin/env python3

import argparse

import asyncio

from concurrent import futures

import csv

import io

import itertools

import json

import re

import sys

import time

import numpy as np

import uvloop

import aiopg

import asyncpg

import postgresql

import psycopg2

import psycopg2.extras

if __name__ == '__main__':

print("hello")

sys.exit(1)

a py-spy run takes more than 5 seconds:

(venv) piro@baloo:~/dev/pgbench/_python$ time py-spy record -n -r 1000 -f flamegraph -o test-flame.svg -- python ./test.py

py-spy> Sampling process 1000 times a second. Press Control-C to exit.

hello

py-spy> Stopped sampling because process exitted

py-spy> Wrote flamegraph data to 'test-flame.svg'. Samples: 7157 Errors: 1

Error: No child process (os error 10)

real 0m7.118s

user 0m4.040s

sys 0m3.902s

A simple run is immediate of course.

(venv) piro@baloo:~/dev/pgbench/_python$ time python ./test.py

hello

real 0m0.253s

user 0m0.337s

sys 0m0.186s

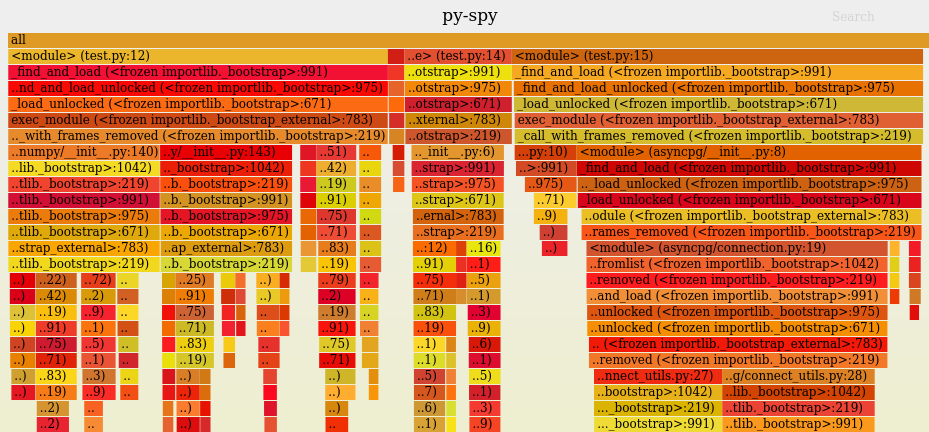

The flame graph looks like the following

Is this expected? Is there a way to reduce this overhead, or to avoid to sample it, or to display these samples?

Tests are on Python 3.8 on ubuntu 20.04 in a vitrualenv, py-spy 0.3.3

Thank you very much!

The option to sample native threads can be quite a bit more intensive than without - the easiest thing for you to do is either remove the '-n' flag to not profile native extensions or lower your sampling rate.

Did you get warnings running this? It should have showed a message telling you to reduce your sampling rate here.

the easiest thing for you to do is either remove the '-n' flag

Well, the entire reason to use py-spy is to profile C extensions :)

Did you get warnings running this? It should have showed a message telling you to reduce your sampling rate here.

No, not for these runs. Increasing further the sampling lead to that warning, and I have noticed that downsampling reduces the overhead.