Performance issue with BCFips security provider

Hi team,

My company is planning to use bouncy castle to enforce fips compliance for encryption. I've used a simple jmh benchmark that is running in a loop with a lot of encryptions/ decryptions using java cipher for AES.

I ran the attached benchmark on my machine(mac) and on our local bamboo server(linux) and got the following results

mvn clean -B verify --file pom.xml exec:java

Benchmark Mode Cnt Score Error Units

EncryptionBenchmark.fipsBcProviderEncryption thrpt 25 32008.062 ± 3524.149 ops/s

EncryptionBenchmark.nonfipsEncryption thrpt 25 3235114.417 ± 136671.753 ops/s

where nonfipsEncryption is using SunJCE version 16 security provider and fipsBcProviderEncryption is using BCFIPS version 1.000203

So it looks like BCFIPS is about 100x slower(i. terms of ops/sec) than the standard SunJCE.

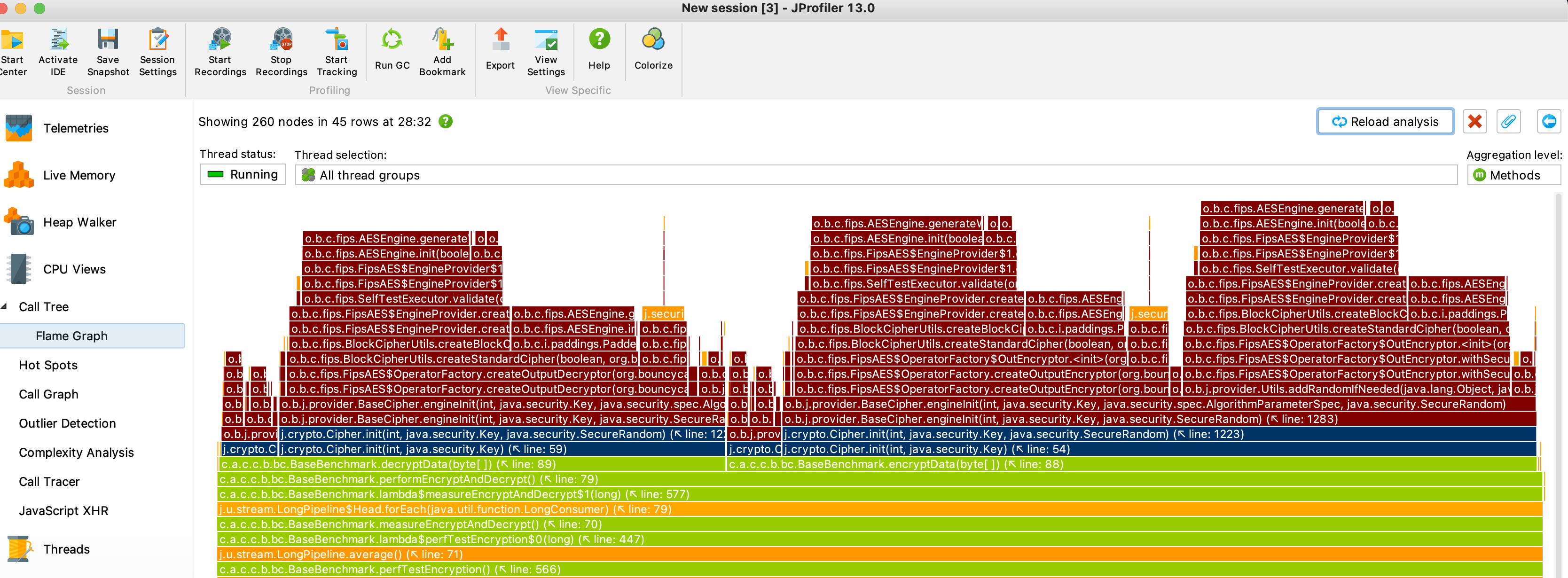

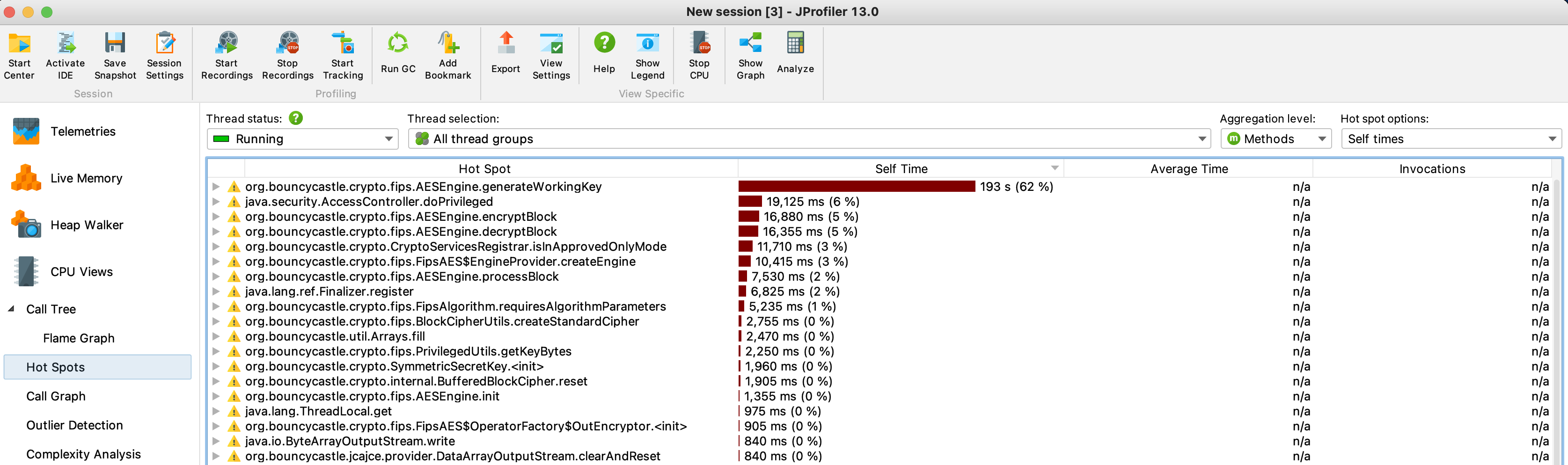

I also did some profiling on the same test and it looks like the hotspot is in org.bouncycastle.cryptor.fips.AESEngine.generateWorkingKey where 62 % of the cpu time is spent.

Do you have any suggestions/recommendations on how to improve the performance of the BCFips?

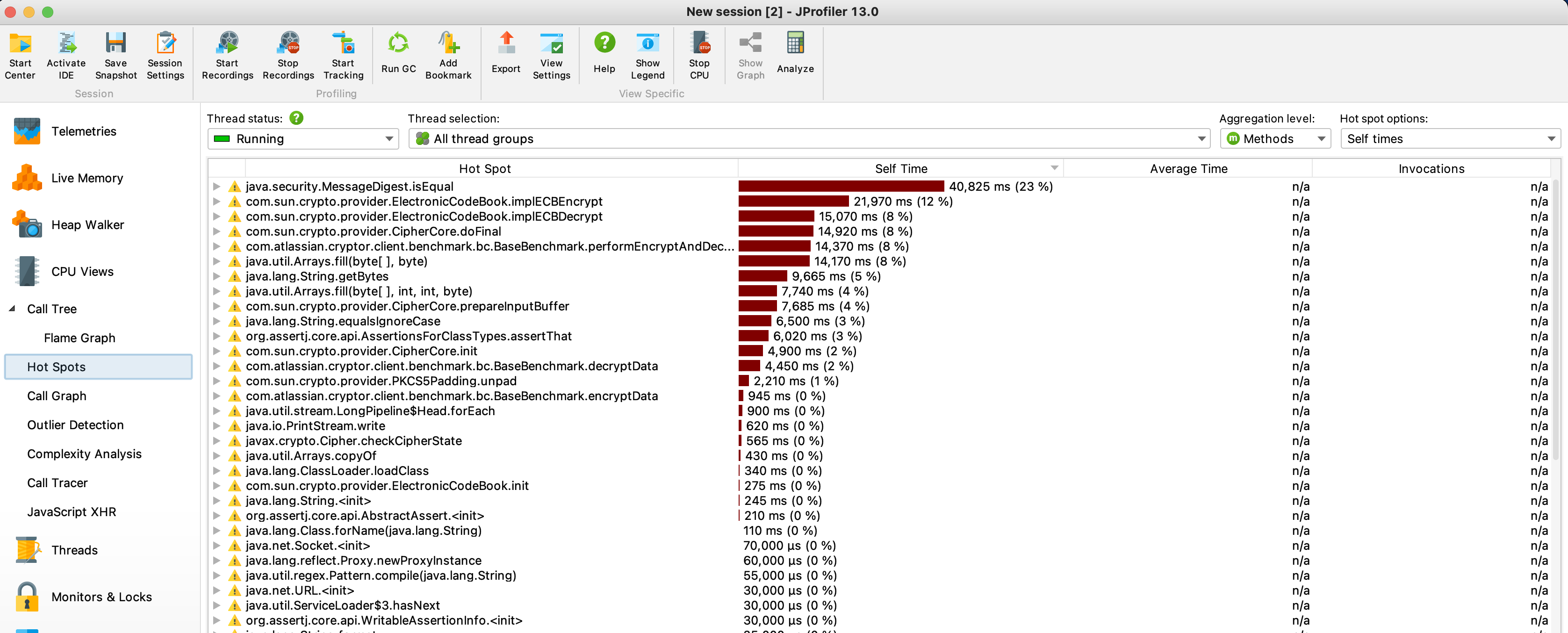

In comparison this is how the same test looks like with the Sun Jce

The results differ depending on the OS/JVM/hardware, do you have any recommendation for the hardware?

The first thing I noted about the benchmark is that the cipher is initialized for every encryption/decryption (which are very small texts) with the same key. It's unnecessary to re-initialize unless changing the key (or when the cipher is being switch from encryption to/from decryption).

We don't have an optimization to avoid the expensive work of generating the working key if the key hasn't actually changed since the last call. It's possible the SunJCE one does have this.

Could you please modify your benchmark (or add a second one) that avoids these repeated calls to Cipher.init, and report the results? The difference will still be significant since SunJCE is probably forwarding to a native implementation (if not AES-NI hardware implementation).

Thanks a lot for your suggestion. I have added additional test, that have separate ciphers for encryption and decryption, that are initialised once at setup

https://gist.github.com/chavdarch/85ca0851e4f5b157972ee918e0d2e761

and yes, that improved significantly the throughput.

Benchmark Mode Cnt Score Error Units

EncryptionBenchmark.fipsBcProviderEncryption thrpt 25 32765.990 ± 3777.521 ops/s

EncryptionBenchmark.fipsBcProviderEncryptionLessCipherInit thrpt 25 1163069.649 ± 18342.893 ops/s

EncryptionBenchmark.nonfipsEncryption thrpt 25 3030303.132 ± 141339.365 ops/s

fipsBcProviderEncryptionLessCipherInit is now just ~3x slower than nonfipsEncryption.

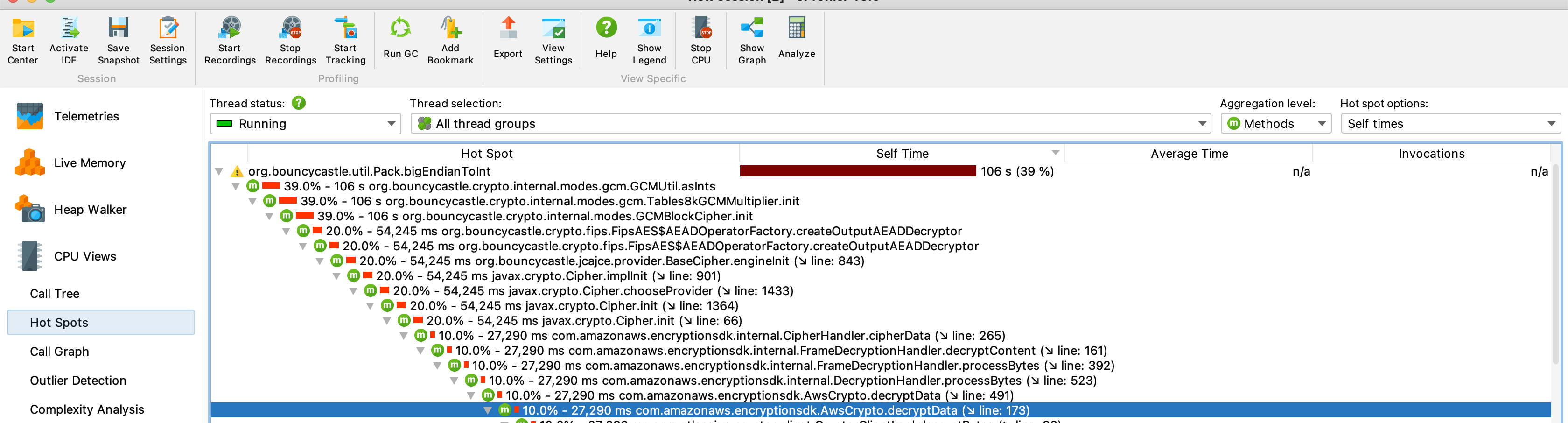

This is great, unfortunately we are using aws encryption sdk and in the call chain I can see a call https://github.com/aws/aws-encryption-sdk-java/blob/master/src/main/java/com/amazonaws/encryptionsdk/internal/CipherHandler.java#L57

which is calling init and then doFinal in the same way as the fipsBcProviderEncryption so i guess that needs to be patched, unless bc has a way to optimise the Cipher.init.

We could look at improving checks for same-key initialization (for AES/GCM I think this is something of a known issue already), but you likely couldn't expect a new version of the FIPS provider with such a feature before next year.

Unfortunately the CipherHandler case cannot really be "fixed" because at least the nonce will need to be changed for each operation, so the init calls cannot be avoided. You could check whether CipherHandler is actually re-used though, maybe there's a different key for each operation anyway (I don't know the context).

thank you for your answers! We will wait for the new version when it comes and in the meantime we will see what can we do with the encryptions sdk

Appears resolved.