fio


Noticeably lower random read IOPS than diskspd on AMD platform in Windows

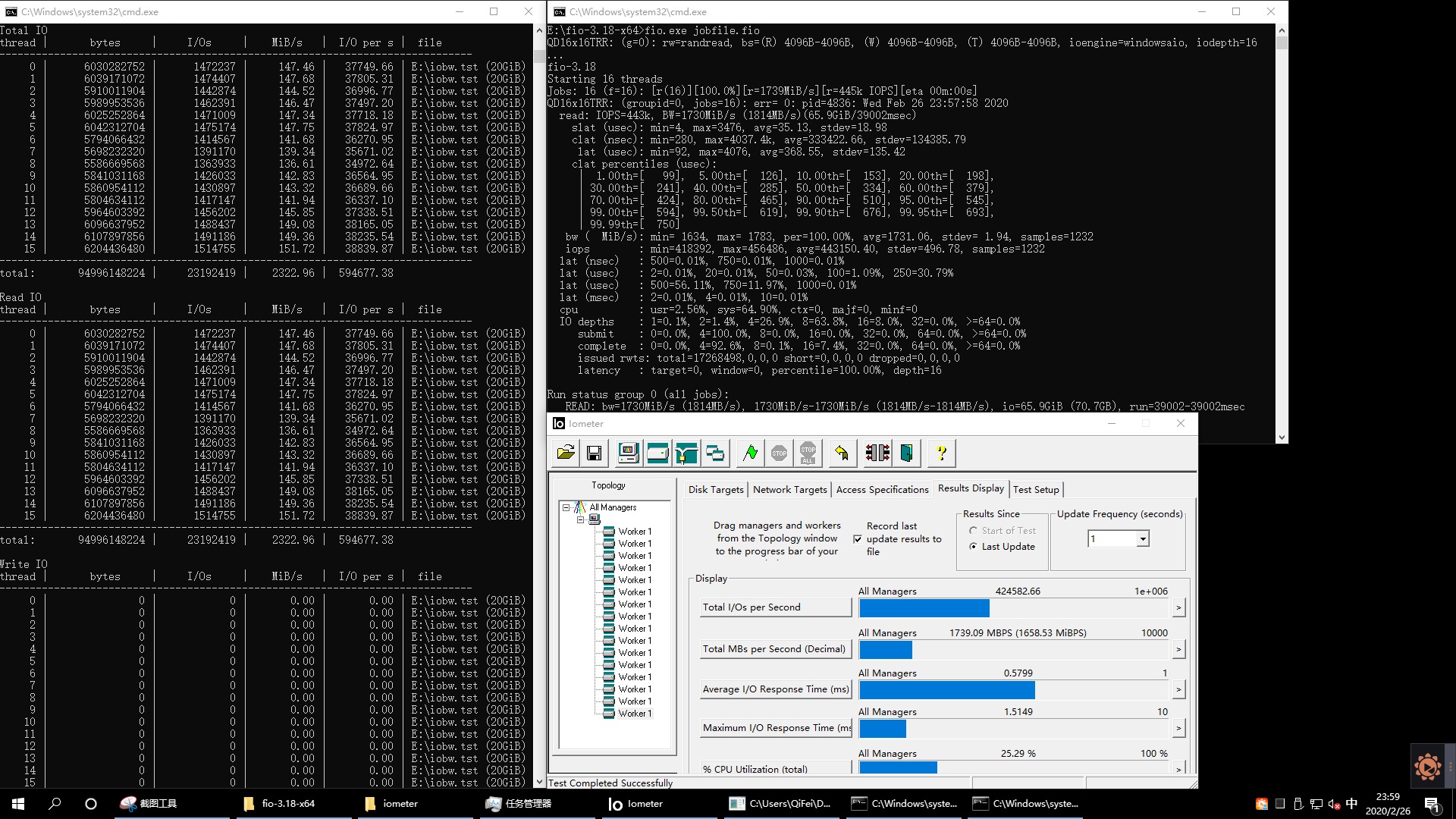

I'm doing a cross-platform comparison to measure the disk performance difference between CPU platforms. The test is a direct-I/O 4KB random read at QD16 x 16 threads against a single 20GB file. The FIO jobfile is:

    [QD16x16TRR]
    filename=iobw.tst
    ioengine=windowsaio
    direct=1
    thread=1
    iodepth=16
    rw=randread
    bs=4k
    size=20g
    numjobs=16
    runtime=39
    time_based
    group_reporting

The command for diskspd is here:

diskspd.exe -b4K -t16 -r -o16 -d39 -Sh E:\iobw.tst

I also added IOmeter results for comparison. All tests were run against the same file.

On the Intel Xeon Platinum platform all the results are close, but on the AMD EPYC2 platform FIO's result is much lower than diskspd's (420-440K IOPS vs. nearly 600K), though closer to IOmeter's. The SSD under test is a PM1725a 3.2TB U.2.
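To put the gap in perspective, here is a small Python sketch that converts the IOPS figures above into bandwidth and a percentage shortfall. It assumes 430K as the midpoint of fio's 420-440K range and takes 600K for diskspd's "nearly 600K", so the result is approximate. It also confirms that both configurations target the same 256 outstanding I/Os, since fio's `iodepth` is per job and diskspd's `-o` is per thread (per target).

```python
# Sanity-check the figures quoted above. The IOPS values are approximations
# taken from this report, not new measurements.

BLOCK_SIZE = 4 * 1024   # bs=4k in fio, -b4K in diskspd
JOBS, DEPTH = 16, 16    # numjobs=16 / -t16 and iodepth=16 / -o16


def bandwidth_mib_s(iops: float, block_size: int = BLOCK_SIZE) -> float:
    """Throughput in MiB/s implied by an IOPS figure at a fixed block size."""
    return iops * block_size / (1024 * 1024)


def shortfall_pct(slower: float, faster: float) -> float:
    """How far `slower` falls below `faster`, as a percentage of `faster`."""
    return (faster - slower) / faster * 100


total_outstanding = JOBS * DEPTH            # I/Os both tools try to keep in flight
fio_iops, diskspd_iops = 430_000, 600_000   # assumed midpoint vs. "nearly 600K"

print(f"outstanding I/Os: {total_outstanding}")
print(f"fio:     {bandwidth_mib_s(fio_iops):7.1f} MiB/s")
print(f"diskspd: {bandwidth_mib_s(diskspd_iops):7.1f} MiB/s")
print(f"fio shortfall: {shortfall_pct(fio_iops, diskspd_iops):.1f}%")
```

With these inputs fio lands roughly 28% below diskspd, about 1680 MiB/s versus about 2344 MiB/s.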

I tried creating the test file with different tools, disabling SMT/HT, and setting thread affinity to CPUs 0-31; the results stay roughly the same in every case. What could cause FIO to report significantly lower results than diskspd?

Thank you.

I wonder if it's the clock source - does it change anything if you add gtod_reduce=1 to the options?

@111alan This sounds like #875 ... Do things get better if you fully write to your files first and then do I/O to them? If so, the issue is that, unlike the other tools, fio doesn't currently have the logic on Windows to try a privileged call (which itself can create a security risk) with a fallback to manually writing the whole file to force fully backed allocation...
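The manual fallback described above, writing every byte of the file so it is fully backed by written data, can be sketched in Python as follows. This is an illustration under stated assumptions: `fill_file` and the 1 MiB chunk size are hypothetical choices, the writes are ordinary buffered writes, and it does not attempt the privileged shortcut (on Windows presumably `SetFileValidData`, which requires the SE_MANAGE_VOLUME_NAME privilege).

```python
import os

CHUNK = 1024 * 1024  # hypothetical 1 MiB write size, mirroring --bs=1M


def fill_file(path: str, size: int, chunk: int = CHUNK) -> None:
    """Write `size` bytes of pseudo-random data to `path`, front to back.

    Every byte is written explicitly, so the whole file holds real written
    data rather than regions the filesystem has never written (which it may
    be able to serve without touching the device).
    """
    with open(path, "wb") as f:
        remaining = size
        while remaining > 0:
            n = min(chunk, remaining)
            f.write(os.urandom(n))  # pseudo-random contents
            remaining -= n
        f.flush()
        os.fsync(f.fileno())  # push the data to the device, like fio's end_fsync=1
```

For example, `fill_file(r"E:\iobw.tst", 20 * 1024**3)` would precondition a 20 GiB file like the one in the original job; fio then has to reuse that file rather than delete and recreate it.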

@sitsofe Thanks for the reply. My first test file was written with pseudo-random contents (confirmed by checking the file's hex values). There is no difference in test results between this file and the test file created by FIO.

> I wonder if it's the clock source - does it change anything if you add gtod_reduce=1 to the options?

OK, I'll test this tomorrow. Thank you.

@111alan I mean:

- Create the file and write to it entirely once:

fio --thread --filename=testfile --rw=write --size=10G --name=writeonce --bs=1M --end_fsync=1

- Make sure fio reuses the fully written file (i.e. it does not delete and recreate it), then check how fio performs doing I/O to it:

fio --thread --filename=testfile --overwrite=1 --rw=randwrite --name=speedtest --bs=4k --iodepth=32 --end_fsync=1

- What's the speed at step 2?

- What is the fio output like?

- What does Performance Monitor say about the amount of queued I/O at any given instant while the second job is running?

@sitsofe After preconditioning, FIO's read IOPS and write performance dropped a bit, but both the non-preconditioned and preconditioned tests are still nowhere near diskspd's results.

The first row is the file created directly by FIO without preconditioning; the second row is preconditioned using your script. I only changed the file name and size, which should be irrelevant. Files were recreated after each pair of tests.

By the way, the reported queue depth differs from what Windows Performance Recorder shows (about 32 on average).

> I wonder if it's the clock source - does it change anything if you add gtod_reduce=1 to the options?

Tried it, but no difference at all. Perfmon shows that both programs report the correct IOPS, though.

I'm having a similar issue, where diskspd, on the same machine and against the same target file, produces considerably higher performance than fio. Looking at XPERF traces and dumps, fio (latest build, 3.22) with windowsaio seems to be issuing synchronous I/O, which lowers performance. Diskspd uses IOCP (I/O Completion Ports); fio is supposed to use completion ports on Windows too, but it does not appear to be using them.

FIO READ FILE THREAD

    00 00000000506ffb88 00007ffe41ffbbe8 ntdll!NtReadFile+0x14
    01 00000000506ffb90 0000000000440e61 KERNELBASE!ReadFile+0x108 --> Reading the same file
    02 00000000506ffc00 0000000000476c4b fio+0x40e61
    03 00000000506ffc50 00000000004796ac fio+0x76c4b
    04 00000000506ffcd0 000000000040d8d4 fio+0x796ac
    05 00000000506ffe80 00007ffe4374de44 fio+0xd8d4
    06 00000000506ffed0 00007ffe4374df1c msvcrt!_callthreadstartex+0x28
    07 00000000506fff00 00007ffe430330f4 msvcrt!_threadstartex+0x7c
    08 00000000506fff30 00007ffe4441448b kernel32!BaseThreadInitThunk+0x14
    09 00000000506fff60 0000000000000000 ntdll!RtlUserThreadStart+0x2b

DISKSPD READ FILE THREAD

    00 00000021db37f488 00007ffe41ffbbe8 ntdll!NtReadFile+0x14
    01 00000021db37f490 00007ff7e8cbd1d4 KERNELBASE!ReadFile+0x108 --> Reading the same file
    02 00000021db37f500 00007ff7e8cbd83c diskspd!issueNextIO+0x480
    03 00000021db37f630 00007ff7e8cbf4e1 diskspd!doWorkUsingIOCompletionPorts+0x18c
    04 00000021db37f6c0 00007ffe430330f4 diskspd!threadFunc+0x18b1
    05 00000021db37fb90 00007ffe4441448b kernel32!BaseThreadInitThunk+0x14

WORKER THREADS on both

    00 00000021dafbf858 00007ffe4439a64f ntdll!NtWaitForWorkViaWorkerFactory+0x14
    01 00000021dafbf860 00007ffe430330f4 ntdll!TppWorkerThread+0x2df
    02 00000021dafbfb50 00007ffe4441448b kernel32!BaseThreadInitThunk+0x14
    03 00000021dafbfb80 0000000000000000 ntdll!RtlUserThreadStart+0x2b

But on fio, threads are waiting for an I/O completion before signalling another I/O:

    00 00000000502ff648 00007ffe4098644e ntdll!NtWaitForSingleObject+0x14
    01 00000000502ff650 00007ffe4098e9dc mswsock!SockWaitForSingleObject+0x11e
    02 00000000502ff6f0 00007ffe44071c11 mswsock!WSPSelect+0x86c
    03 00000000502ff8a0 000000000044a03a ws2_32!select+0x141
    04 00000000502ff980 000000000040d8d4 fio+0x4a03a
    05 00000000502ffe80 00007ffe4374de44 fio+0xd8d4
    06 00000000502ffed0 00007ffe4374df1c msvcrt!_callthreadstartex+0x28
    07 00000000502fff00 00007ffe430330f4 msvcrt!_threadstartex+0x7c
    08 00000000502fff30 00007ffe4441448b kernel32!BaseThreadInitThunk+0x14
    09 00000000502fff60 0000000000000000 ntdll!RtlUserThreadStart+0x2b

DISKSPD

    00 00000021db37f488 00007ffe41ffbbe8 ntdll!NtReadFile+0x14
    01 00000021db37f490 00007ff7e8cbd1d4 KERNELBASE!ReadFile+0x108
    02 00000021db37f500 00007ff7e8cbd83c diskspd!issueNextIO+0x480
    03 00000021db37f630 00007ff7e8cbf4e1 diskspd!doWorkUsingIOCompletionPorts+0x18c
    04 00000021db37f6c0 00007ffe430330f4 diskspd!threadFunc+0x18b1
    05 00000021db37fb90 00007ffe4441448b kernel32!BaseThreadInitThunk+0x14
    06 00000021db37fbc0 0000000000000000 ntdll!RtlUserThreadStart+0x2b
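The diskspd stacks above show its worker issuing each new read from inside its completion-port loop (`doWorkUsingIOCompletionPorts` → `issueNextIO`). The sketch below illustrates that "keep N outstanding, issue the next one as each completes" pattern in portable Python. It is an analogy only: it uses a thread pool and `concurrent.futures.wait` rather than the Win32 IOCP API, `fake_read` and `run` are hypothetical names, and the 1 ms sleep is an assumed stand-in for device latency. Running it with `depth=1` approximates a thread that waits for each I/O before issuing the next.

```python
import threading
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def fake_read(offset: int, stats: dict, lock: threading.Lock) -> int:
    """Hypothetical stand-in for one 4 KiB read; records how many are in flight."""
    with lock:
        stats["inflight"] += 1
        stats["max_inflight"] = max(stats["max_inflight"], stats["inflight"])
    time.sleep(0.001)  # assumed device latency, not a measurement
    with lock:
        stats["inflight"] -= 1
    return offset


def run(total_ios: int, depth: int) -> dict:
    """Keep up to `depth` reads outstanding and issue a new one as each completes."""
    stats = {"inflight": 0, "max_inflight": 0, "completed": 0}
    lock = threading.Lock()
    issued = 0
    pending = set()
    with ThreadPoolExecutor(max_workers=depth) as pool:
        # Prime the queue up to the target depth.
        while issued < min(depth, total_ios):
            pending.add(pool.submit(fake_read, issued * 4096, stats, lock))
            issued += 1
        # Completion loop: reap finished reads, then top the queue back up.
        while pending:
            done, pending = wait(pending, return_when=FIRST_COMPLETED)
            for fut in done:
                fut.result()  # re-raise any error from the worker
                stats["completed"] += 1
                if issued < total_ios:
                    pending.add(pool.submit(fake_read, issued * 4096, stats, lock))
                    issued += 1
    return stats


if __name__ == "__main__":
    for depth in (1, 4):
        start = time.perf_counter()
        s = run(200, depth)
        print(f"depth={depth}: {s['completed']} reads, "
              f"max in flight {s['max_inflight']}, "
              f"{time.perf_counter() - start:.2f}s")
```

Comparing the elapsed times for depth 1 and depth 4 shows that, for a fixed per-I/O latency, the number of I/Os actually kept in flight is what limits throughput.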

Just 100% random read with two threads, 4K block size, QD 4:

    C:\Program Files\fio>fio.exe fio_rand_Read.fio.txt
    file1: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=windowsaio, iodepth=4
    ...
    fio-3.22
    Starting 2 threads
    Jobs: 2 (f=0): [f(2)][100.0%][r=77.6MiB/s][r=19.9k IOPS][eta 00m:00s]
    file1: (groupid=0, jobs=1): err= 0: pid=7012: Wed Aug 19 19:32:38 2020
      read: IOPS=10.4k, BW=40.6MiB/s (42.6MB/s)(4061MiB/100001msec)
        slat (usec): min=14, max=1309.2k, avg=37.27, stdev=1284.19
        clat (nsec): min=672, max=1524.0M, avg=326889.92, stdev=4522814.32
         lat (usec): min=124, max=1524.0k, avg=364.16, stdev=4702.74

Diskspd:

    diskspd.exe -b4k -t2 -o4 -d60 -Suw -L -r -w0 c:\tools\FIO\fio-rand-RW

| thread | bytes | I/Os | MiB/s | I/O per s | AvgLat (ms) | LatStdDev (ms) | file |
|---|---|---|---|---|---|---|---|
| 0 | 3331268608 | 813298 | 52.95 | 13554.43 | 0.294 | 0.294 | c:\tools\FIO\fio-rand-RW (10240MiB) |
| 1 | 3665743872 | 894957 | 58.26 | 14915.36 | 0.267 | 0.273 | c:\tools\FIO\fio-rand-RW (10240MiB) |
| total | 6997012480 | 1708255 | 111.21 | 28469.79 | 0.280 | 0.284 | |
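Little's law (average I/Os in flight = IOPS x mean latency) gives a quick way to check how many I/Os each tool actually kept outstanding in this QD4 test. The sketch below applies it to the per-thread diskspd rows and to the single fio job shown in the output. It assumes diskspd's AvgLat is in milliseconds and uses fio's `lat` average (in microseconds), which is `slat` plus `clat`.

```python
def outstanding(iops: float, mean_latency_s: float) -> float:
    """Little's law: average number of I/Os in flight = throughput x mean latency."""
    return iops * mean_latency_s


# (IOPS, mean latency in seconds) taken from the outputs above.
diskspd = {"thread 0": (13554.43, 0.294e-3), "thread 1": (14915.36, 0.267e-3)}
fio_job = (10.4e3, 364.16e-6)  # IOPS=10.4k (rounded), lat avg=364.16 usec

for name, (iops, lat) in diskspd.items():
    print(f"diskspd {name}: {outstanding(iops, lat):.2f} I/Os in flight (target 4)")
print(f"fio job:          {outstanding(*fio_job):.2f} I/Os in flight (target 4)")
```

On these numbers each diskspd thread comes out at about 3.98 I/Os in flight and the fio job at about 3.79. Treat the fio value as approximate, since its IOPS is printed rounded to 10.4k.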

A quick note on this one: I recently added an option to create PDB information (when a clang build is done). As I'm not a Windows user I don't know if it works, but can someone try a clang AppVeyor build (e.g. https://ci.appveyor.com/project/axboe/fio/builds/35205447/job/trjap5x1dicn3023/artifacts ) and see if the stack looks different?