containers-roadmap

[EKS] [request]: Allow Adding Additional SAN Entries

Tell us about your request: A feature to add supplementary Subject Alternative Name (SAN) entries to the EKS API server certificate.

Which service(s) is this request for? EKS

Tell us about the problem you're trying to solve. What are you trying to do, and why is it hard?

If we try to access the EKS API server through a CNAME alias of the private EKS endpoint domain name, TLS verification fails, because the API server certificate does not include our custom CNAME among its SAN entries.

The CNAME is resolvable through the Route 53 Resolver for its VPC. Because the name follows a naming convention, queries from our datacenter can easily be routed to the appropriate VPC for resolution.

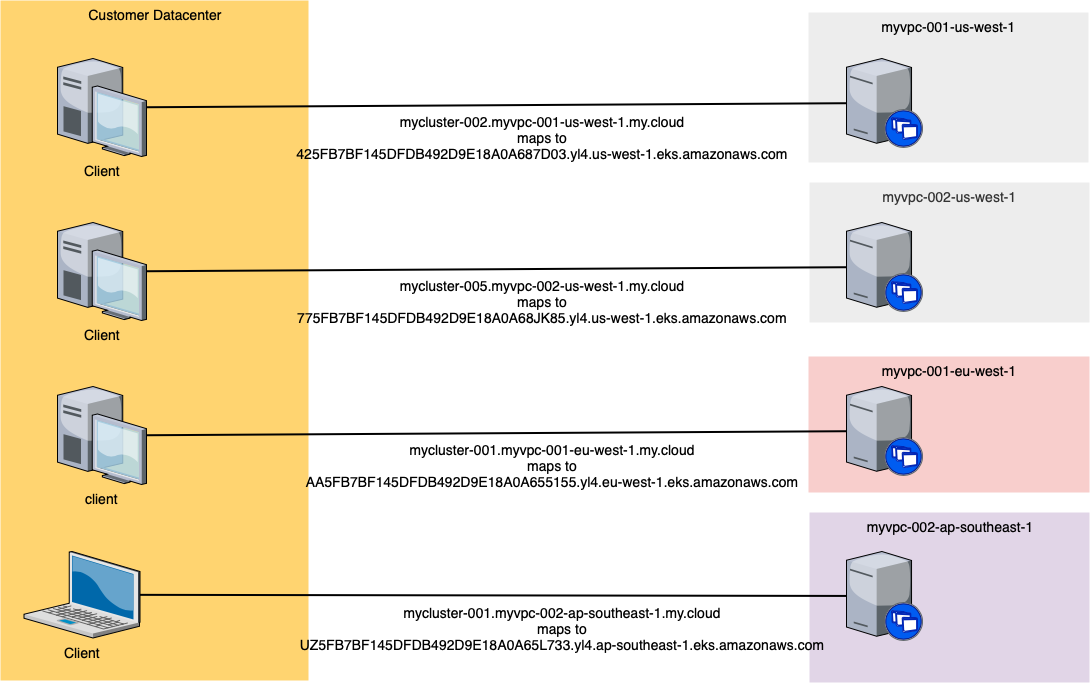

An example CNAME is `mycluster-001.myvpc-001.us-west-1.my.cloud`. It contains the subdomain `myvpc-001.us-west-1.my.cloud`, which allows our datacenter DNS infrastructure to route queries for that subdomain to the Route 53 Resolver. The name also contains the cluster identifier, so it resolves to that cluster's private endpoint name and then to its private IPs.
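
The convention can be expressed directly: the first label identifies the cluster, and the remaining labels form the per-VPC zone that datacenter DNS forwards on. A minimal sketch, assuming every cluster CNAME has exactly the shape `<cluster>.<vpc>.<region>.<suffix...>`; the `ClusterName` type and `parse_cluster_cname` function are hypothetical.

```python
from typing import NamedTuple


class ClusterName(NamedTuple):
    cluster: str   # e.g. "mycluster-001"
    vpc_zone: str  # e.g. "myvpc-001.us-west-1.my.cloud" (the forwarded subdomain)
    vpc: str       # e.g. "myvpc-001"
    region: str    # e.g. "us-west-1"


def parse_cluster_cname(fqdn: str) -> ClusterName:
    """Split a conventional cluster CNAME into its parts.

    Assumes the shape <cluster>.<vpc>.<region>.<suffix...>.
    """
    labels = fqdn.lower().rstrip(".").split(".")
    if len(labels) < 4:
        raise ValueError(f"not a conventional cluster name: {fqdn!r}")
    cluster, vpc, region = labels[0], labels[1], labels[2]
    return ClusterName(cluster, ".".join(labels[1:]), vpc, region)


print(parse_cluster_cname("mycluster-001.myvpc-001.us-west-1.my.cloud"))
```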

To map these CNAMEs to the appropriate VPC and cluster from our datacenter, we use one forwarding zone per VPC. This is a simple, elegant solution: queries are resolved at the VPC level, which decentralizes DNS management. This helps us scale and lets separate parties self-manage their VPCs' DNS w/o having to

It is worth noting that the stock AWS domain names all share the same subdomain, which makes it impossible to tell one VPC from another. To solve this, we create our own DNS records in a VPC private hosted zone. We use the Route 53 Resolver so we can query these records from our datacenter, through forwarding zones that map each VPC-specific zone to the resolver for that VPC. This way we can have multiple VPCs, even within one region, and each query is forwarded to and resolved by the correct Route 53 Resolver.
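
On the datacenter side, choosing a forwarding zone amounts to a longest-suffix match of the query name against the configured zones, compared label by label. A minimal sketch of that selection step: the zone names follow the example above, while the resolver IPs (standing in for each VPC's Route 53 Resolver inbound endpoint) are hypothetical, as is `select_forwarders`.

```python
from typing import Optional

# Hypothetical forward zones: one per VPC, each pointing at that VPC's
# Route 53 Resolver inbound endpoint IPs (addresses are made up).
FORWARD_ZONES = {
    "myvpc-001.us-west-1.my.cloud": ["10.1.0.10", "10.1.1.10"],
    "myvpc-002.us-west-1.my.cloud": ["10.2.0.10", "10.2.1.10"],
}


def _labels(name: str) -> list[str]:
    return name.lower().rstrip(".").split(".")


def select_forwarders(qname: str, zones: dict[str, list[str]]) -> Optional[list[str]]:
    """Return the forwarders of the most specific zone containing qname.

    Matching is label-wise, so "x.myvpc-001.example" is not inside a zone
    named "vpc-001.example". None means no forward zone applies.
    """
    q = _labels(qname)
    best_zone, best_len = None, -1
    for zone in zones:
        z = _labels(zone)
        if len(z) <= len(q) and q[-len(z):] == z and len(z) > best_len:
            best_zone, best_len = zone, len(z)
    return zones[best_zone] if best_zone is not None else None


print(select_forwarders("mycluster-001.myvpc-001.us-west-1.my.cloud", FORWARD_ZONES))
# ['10.1.0.10', '10.1.1.10']
```

A stock endpoint name such as `<id>.gr7.us-west-1.eks.amazonaws.com` matches none of these zones, and any single zone for it would cover every VPC at once, which is why the per-VPC CNAMEs are needed.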

Being able to add SAN entries would let tooling in our datacenters use a CNAME to communicate with the EKS clusters in our many VPCs privately and securely, without brittle manual or poorly automated workarounds.

Overall architecture looks like:

- 1 forward zone in our network for each VPC
- 1 Route 53 Resolver in each VPC
- 1 private hosted zone in each VPC
- 1-N EKS clusters per VPC
- 1 CNAME in the private hosted zone per EKS cluster
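
As one concrete illustration of the "1 forward zone for each VPC" piece, the sketch below renders those zones as BIND `named.conf` forward-zone stanzas. BIND is only an assumption about the datacenter DNS software (the request does not say what is used), and the zones and resolver IPs are hypothetical.

```python
def bind_forward_zone(zone: str, forwarders: list[str]) -> str:
    """Render one BIND zone that forwards all queries for `zone`.

    "forward only" tells BIND not to fall back to its own recursion
    if the forwarders do not answer.
    """
    targets = " ".join(f"{ip};" for ip in forwarders)
    return (
        f'zone "{zone}" {{\n'
        "    type forward;\n"
        "    forward only;\n"
        f"    forwarders {{ {targets} }};\n"
        "};\n"
    )


# Hypothetical VPCs: VPC zone -> Route 53 Resolver inbound endpoint IPs.
vpcs = {
    "myvpc-001.us-west-1.my.cloud": ["10.1.0.10", "10.1.1.10"],
    "myvpc-002.us-west-1.my.cloud": ["10.2.0.10", "10.2.1.10"],
}

print("\n".join(bind_forward_zone(z, ips) for z, ips in vpcs.items()))
```

Adding a VPC then means adding one stanza; per-cluster CNAMEs live entirely in that VPC's private hosted zone.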

The order of operations looks like:

- Software in our datacenter attempts to connect to the EKS cluster's API server using the CNAME and sends a query to the datacenter DNS infrastructure

- Based on the subdomain, the query is forwarded to the Route 53 Resolver of the appropriate VPC

- The Route 53 Resolver resolves the CNAME to the EKS cluster's endpoint domain name and returns the private IP needed to reach it

- The software connects to the private IP, but it addresses the server by the CNAME, which causes the secure (TLS) connection to fail!
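
The last two steps can be sketched as a CNAME chase followed by a hostname check. The key point is that the client verifies the certificate against the name it was asked to connect to (the CNAME), not against the name the CNAME points to. The `RECORDS` data is a made-up stand-in for the private hosted zone and the EKS endpoint's private DNS, and `resolve` is a hypothetical helper; names and IPs are placeholders.

```python
# Hypothetical records visible to one VPC's Route 53 Resolver.
RECORDS = {
    "mycluster-001.myvpc-001.us-west-1.my.cloud": (
        "CNAME", "0123456789abcdef.gr7.us-west-1.eks.amazonaws.com"),
    "0123456789abcdef.gr7.us-west-1.eks.amazonaws.com": (
        "A", ["10.1.2.3", "10.1.3.4"]),
}


def resolve(name: str, records: dict, max_hops: int = 8) -> tuple[list[str], list[str]]:
    """Follow CNAMEs until an A record is found; return (chain, ips).

    Raises KeyError for an unknown name and RuntimeError for an
    overly long (or looping) CNAME chain.
    """
    chain = [name]
    for _ in range(max_hops):
        rtype, value = records[chain[-1]]
        if rtype == "A":
            return chain, value
        chain.append(value)  # CNAME: continue with its target
    raise RuntimeError(f"CNAME chain too long from {name!r}")


dialed = "mycluster-001.myvpc-001.us-west-1.my.cloud"
chain, ips = resolve(dialed, RECORDS)
cert_sans = [chain[-1]]  # placeholder: the cert names only the EKS endpoint
# The TCP connection goes to ips[0], but hostname verification uses `dialed`.
print(ips[0], dialed in cert_sans)  # 10.1.2.3 False
```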

Are you currently working around this issue?

We have tried 2-3 different strategies that require manually updating records/entries. These are brittle and break often. We cannot use public records, and from our network we cannot forward queries based on stock domain names because we have more than one VPC.

Some references: http://social.dnsmadeeasy.com/blog/understanding-dns-forwarding/

Is there any progress?

This would also allow using a bastion host to reach an EKS cluster that is only accessible from within the VPC: https://blog.scottlowe.org/2020/06/16/using-kubectl-via-an-ssh-tunnel/

This would help us out too. Getting a new Kube API URL whenever a cluster is rebuilt is problematic. We also use internal CNAMEs pointing to Route 53 entries for our internal services, so being able to add our CNAME as a SAN on the certificate would be very helpful.