containers-roadmap

[Rebalancing] Smarter allocation of ECS resources

@euank

Doesn't seem like ECS rebalances tasks to allocate resources more effectively. For example, if I have task A and task B running on different cluster hosts, and try to deploy task C, I'll get a resource error, and task C will fail to deploy. However, if ECS rebalanced tasks A and B to run on the same box, there would be enough resources to deploy task C.
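To make the scenario above concrete, here is a minimal Python sketch. The host and task sizes are made-up numbers, and `can_place` and `free_capacity` are hypothetical helpers for illustration, not part of any ECS API.

```python
# Hypothetical sizes: two hosts with 4 units of memory each.
capacity = {"host-1": 4, "host-2": 4}
task_sizes = {"A": 2, "B": 2, "C": 3}


def free_capacity(placement):
    """Return the free capacity per host for a {task: host} placement."""
    free = dict(capacity)
    for task, host in placement.items():
        free[host] -= task_sizes[task]
    return free


def can_place(free_by_host, size):
    """A task fits only if a single host has enough free capacity for it."""
    return any(free >= size for free in free_by_host.values())


spread_out = {"A": "host-1", "B": "host-2"}    # current placement
consolidated = {"A": "host-1", "B": "host-1"}  # after a rebalance

print(can_place(free_capacity(spread_out), task_sizes["C"]))    # False
print(can_place(free_capacity(consolidated), task_sizes["C"]))  # True
```

The cluster has the same total free capacity in both cases; only the consolidated layout leaves it in one piece large enough for task C.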

This comes up pretty often for us: with the current placement of tasks, we run out of resources and cannot deploy or reach 100% utilization of our cluster hosts, because tasks are balanced inefficiently. At most, we're probably getting 75% utilization, and 25% is going to waste.

Thanks for the report, Abby. Yes, this is correct: our scheduler does not currently rebalance tasks across an existing cluster. This is something that is on our roadmap.

+1

This is a great example of the issue at its worst:

Due to extremely poor balancing of the tasks by the scheduler I'm blocked from deploying a new revision of the hyperspace task. The only instance that has spare capacity for a new hyperspace task is already running a hyperspace task so the ECS agent just gives up.

The ideal thing to do would be for the ECS agent to automatically rebalance tasks from the first instance onto the second instance which has tons of extra resources, so that a new hyperspace could be placed onto the first instance.

Right now the only way to get ECS to operate smoothly is to allocate tons of extra server capacity so that there is always a huge margin of extra capacity for deploying new task definitions. I'd expect to need maybe say 10%-15% extra spare capacity, but it seems that things only work properly if there is nearly 50% spare capacity available.

During a restart of services too, the same occurs.

This forces me to run a spare instance just for proper rescheduling during restarts.

Bigger problem: this issue also occurs at a larger scale, which is very problematic. If I have 10 tasks with 1 vCPU, 1 task with 2 vCPU, and 10 t2.mediums (2 vCPU each), the 10 smaller tasks each get launched on a separate instance and the 2 vCPU task has no resources left. It's a rare occurrence, but I'm hitting it 2 to 3 times a month.
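The 10-instance case can be reproduced with a small simulation. This is only a sketch: `place` is a hypothetical function, and its "spread" branch (pick the host with the most free vCPU) and "binpack" branch (pick the fullest host that still fits) are simplified approximations, not the actual ECS scheduler logic.

```python
def place(tasks, host_count, vcpu_per_host, strategy):
    """Place tasks one by one; return (free vCPU per host, unplaced task sizes)."""
    free = [vcpu_per_host] * host_count
    unplaced = []
    for size in tasks:
        candidates = [i for i, f in enumerate(free) if f >= size]
        if not candidates:
            unplaced.append(size)
            continue
        if strategy == "spread":
            # Approximation: prefer the emptiest host.
            chosen = max(candidates, key=lambda i: free[i])
        else:
            # Approximation of binpack: prefer the fullest host that still fits.
            chosen = min(candidates, key=lambda i: free[i])
        free[chosen] -= size
    return free, unplaced


tasks = [1] * 10 + [2]  # ten 1 vCPU tasks, then one 2 vCPU task

spread_free, spread_unplaced = place(tasks, 10, 2, "spread")
binpack_free, binpack_unplaced = place(tasks, 10, 2, "binpack")

print(spread_unplaced)   # [2]
print(binpack_unplaced)  # []
```

With the spread approximation every host ends up with 1 free vCPU, so 10 vCPU are free in total but no host can take the 2 vCPU task.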

Please prioritize this issue. Thanks.

#338 is requesting the same feature

Edit: Not the same feature, misunderstood sorry!

@skarungan @djenriquez ECS has added a new feature that kind of fixed this for us. You can specify a "minimum percentage" that will allow the agent to shut down old task definitions before starting new task definitions. So let's say you are running 6 of the old task definition and you want to deploy 6 of the new task definition, but there aren't enough resources to add even 1 more copy of the new task definition. If you have min percentage at 50% then ECS agent will kill 3 of the old tasks and start 3 of the new tasks, then once those 3 new tasks are started it kills off the remaining 3 old tasks and starts 3 more new tasks.
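The batching described above can be sketched as a small simulation. `rolling_deploy` is a hypothetical function, and it assumes the cluster has no spare capacity (new tasks can only start in slots freed by stopped old tasks) and that the minimum is rounded up to a whole task.

```python
import math


def rolling_deploy(desired, min_healthy_percent):
    """Return the (action, count) steps of a deployment with no spare capacity."""
    # Assumption: the minimum number of running tasks is rounded up.
    min_running = math.ceil(desired * min_healthy_percent / 100)
    old, new = desired, 0
    steps = []
    while old > 0:
        # Stop as many old tasks as allowed without dropping below the minimum.
        batch = min(old, (old + new) - min_running)
        if batch <= 0:
            raise ValueError("no task can be stopped without spare capacity")
        old -= batch
        steps.append(("stop old", batch))
        new += batch
        steps.append(("start new", batch))
    return steps


print(rolling_deploy(6, 50))
# [('stop old', 3), ('start new', 3), ('stop old', 3), ('start new', 3)]
```

With the minimum at 100% and no spare capacity, the sketch raises an error, which mirrors the blocked deployments reported earlier in this thread.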

Ah @nathanpeck, I completely misunderstood the feature request here. Though what you describe is very helpful, I was actually looking for a "rebalance" feature, where as new instances come up in the cluster, ECS will reevaluate its distribution of tasks to run tasks on them.

@simplycloud where are we at with this FR? ta.

+1 for something smarter from ECS on this.

We're currently using Convox to manage our ECS clusters, and our racks are currently running on t2 instances - we've noticed that tasks seem to cluster on a couple of instances, while others are pretty much empty. With the t2's in particular, this means that some instances are chewing through their CPU credits while others are gaining.

Smarter balancing of tasks would help make this more feasible until we actually outgrow the t2 class.

@simplycloud can you please respond? This is biting people (myself included). It's been over a year since you mentioned this was on the road map. Where was it on the 15 Oct 2015 and where is it now?

@mwarkentin I would not use convox with any of the t2 classes, there is a build agent that builds the images that will eat all your CPU credits on deployments.

@mwarkentin ignore me I thought I was commenting on a convox issue.

Hi all, just hoping for an update on the rebalance feature.

Ideally, this feature should handle something similar to Nomad's System jobs, where it is just an event that ECS is listening to and can perform actions against.

In this specific case, the action it would perform would be a redeployment of the task definition, so that the proper task placement strategy is in effect.

+1

+1. Rebalancing that takes the credit balance into account would be good. I would already be happy if an EC2 container instance were drained when it has a low credit balance.

Even a weight feature in the elastic-application-load-balancer that includes the credit balance of the target would help a lot.

I can't find any easy way to reduce the load on a throttled EC2 instance that hosts a normal web app task (WordPress).

@samuelkarp @aaithal or others: Any plans to add this feature or something similar?

With autoscaling, I have the same problem...

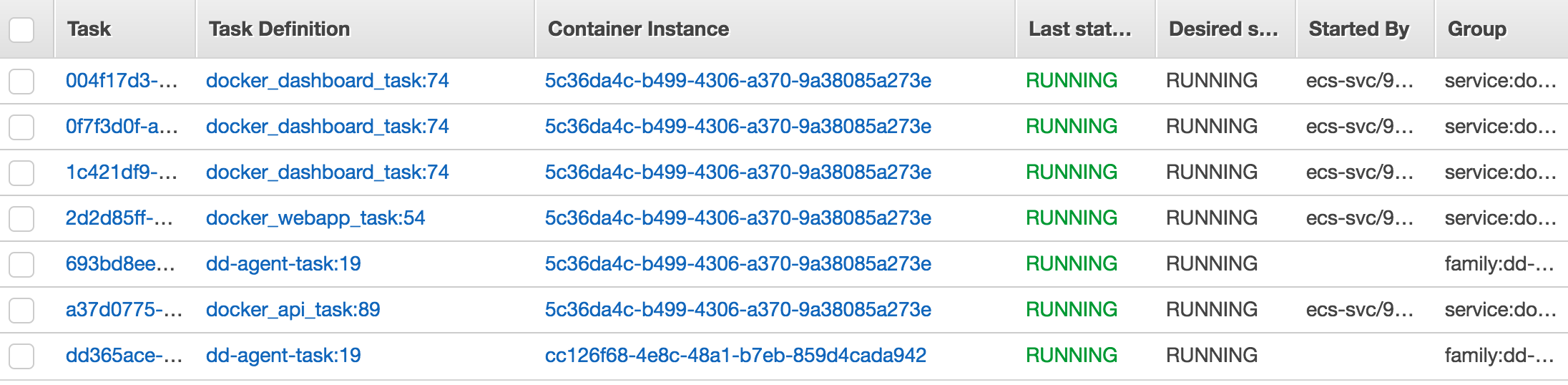

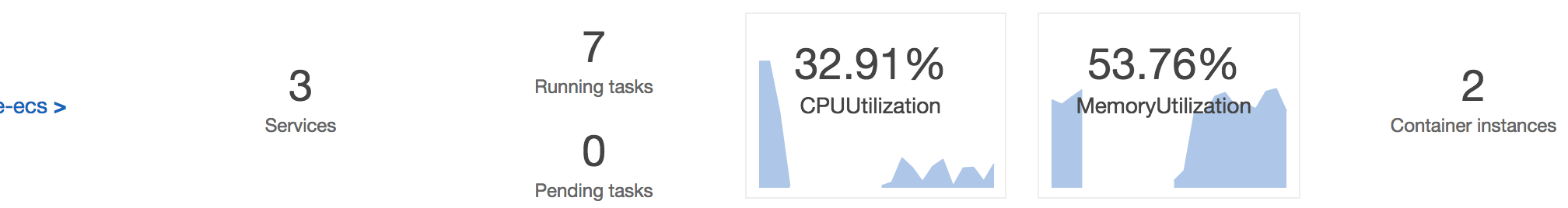

@debu99 Sorry, it's not clear to me: what is the issue in your screenshots?

@nathanpeck I think that 6/7 tasks are running on the same instance (and the one that's not looks like a daemon that runs on all instances).

+1 on rebalancing when the cluster grows

My two cents...

I'd imagine that it'd be unwanted behavior to include by default, so it should be something that the user should opt-in to on a per-service basis -- if you're running a web app behind a load balancer or anything ephemeral, it's relatively safe to "move" these.

(By "move", I mean start a new instance on the new container instance, without the count of tasks in the RUNNING/PENDING state exceeding the maximum percent; and once it's running, kill the old task)
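As a rough sketch of that "move" rule: `can_start_replacement` is a hypothetical helper, and it assumes the maximum percent is applied to the desired count and rounded down to a whole task.

```python
import math


def can_start_replacement(desired, running, pending, maximum_percent):
    """True if one more task can start without exceeding the maximum percent."""
    # Assumption: the upper limit is rounded down to a whole number of tasks.
    limit = math.floor(desired * maximum_percent / 100)
    return running + pending + 1 <= limit


# A service with 4 desired tasks, all running, nothing pending.
print(can_start_replacement(4, 4, 0, 200))  # True
print(can_start_replacement(4, 4, 0, 100))  # False
```

Only when the check passes would the replacement be started on the new container instance, with the old task stopped once the replacement is running.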

However, the rebalancing should not take the limited CPU credit of throttled instance types (tX) into account. To effectively do that, you'd need to generate a large volume of CloudWatch statistics gathering requests and/or estimate the rate of depletion which will push up your overall bill. By how much? I'm not sure, but it will start to chip away at the cost advantage you're intended to have by using the tX instance types.

You're better off using non-throttled instances for ECS clusters, or you'll need to roll your own logic/framework for managing CPU credit balances. (One can argue this is the standard "buy-versus-build" argument)

As far as I'm aware, throttled instance types are meant for burstable workloads that can be terminated and restarted without incurring pain or major service degradation. Your containers may be considered a burstable workload, but the ECS agent and Docker daemon are not -- if you starve these two of CPU, your entire ECS instance may become unusable/invisible to the ECS scheduler.

Lastly, this would not necessarily be something that lives within the ECS agent; it would instead need to be a centrally coordinated service within the larger EC2 Container Service.

Otherwise, the sole complexity cost of having ECS agents be aware of other ECS agents (agent-to-agent discovery, heartbeats to make sure the other ECS agent hasn't died, communication and coordination patterns to request-accept-send tasks, limiting broadcast traffic to not propagate beyond a single cluster, and many other distributed computing concerns) would make this feature DOA before you even get to solving the problem of how to properly rebalance.

I would already be happy if an EC2 container instance would be drained when it has a low credit balance and activated on a specific higher limit.

The autoscale feature could manage the rest to add more EC2 instances.

The main problem is that when a tX instance is out of credits, ECS still shows it as an online, healthy node, but in reality it is an "unhealthy" node that can't serve all its services.

+1 for rebalancing

Wouldn't using the 'binpack' task placement strategy help with rebalancing in the scenarios described above? It's described in more detail here: http://docs.aws.amazon.com/AmazonECS/latest/developerguide/task-placement-strategies.html

binpack makes sure that the instance is utilized to the maximum before placing the task on a fresh node. I understand that there will be edge cases where task placement will still not be as desired, but I think this will solve the majority of problems for you.

@amitsaxena I'm not sure that solves the problem at all. Binpack isn't HA since it will try and pack as many items on the box as possible.

From the docs:

This minimizes the number of instances in use

The issue in this ticket is that you want to have an even spread. So as you add more nodes to the cluster, you want to maximize HA by moving tasks off of nodes that are more heavily loaded onto newer nodes that are less heavily loaded. This way each node is utilized, vs having some nodes that are running at near peak and other nodes that may have zero or very few services. This is the situation we run into now: we have nodes that are running at around 75% capacity and we'd like to make sure they have breathing room for bursts. If we add a new node to the cluster, we now have a node that is doing nothing, vs having the nodes that are at our peak run less hot and have more headroom.

Anyone working on this? It's currently the second highest rated FR. I'm hesitant to even use ECS due to this.

I wonder if the new EKS service makes this less of an issue, or at least provides an option (or explanation) for the lack of progress on this issue? Some guidance from AWS would be great! @simplycloud @samuelkarp @aaithal @anyone with some information! Please respond ( - friendly, not demanding).

Watching the reinvent keynote and @abby-fuller just came on.. then opened this issue because of a notification.. hah!

+1 for rebalancing

@devshorts I sort of get your point about having an even spread, but having binpack placement strategy along with Multi AZ is possible as well. Something similar to:

    "placementStrategy": [
        {
            "field": "attribute:ecs.availability-zone",
            "type": "spread"
        },
        {
            "field": "memory",
            "type": "binpack"
        }
    ]

So I don't think HA is a problem with binpack strategy, and it can be effectively used to have maximum utilization of instances before spawning new ones. We use it effectively in production!

PS: Sorry for the late reply. I somehow missed your original comment :)

+1 on rebalancing when the cluster grows.