increasing recv-Q on libtorrent port

Please provide the following information

libtorrent version (or branch): 1.1.14

platform/architecture: centos7/x86_64

compiler and compiler version: gcc-4.8.2

Please describe what symptom you see, what you would expect to see instead, and how to reproduce it.

I built a client with libtorrent and added many torrents to download. I monitor the process with `ss -lnts`.

Then I get:

```
State      Recv-Q Send-Q Local Address:Port   Peer Address:Port
LISTEN     3001   3000   *:10001              *:*
```

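I set `listen_queue_size` to 3000, and the queue is full. For a listening socket, `ss` reports the number of connections waiting to be accepted as Recv-Q and the backlog limit as Send-Q, so Recv-Q has reached the limit.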
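To watch the accept queue from inside a program rather than with `ss`, a listening socket can be queried with `getsockopt(TCP_INFO)`. The sketch below relies on Linux-specific behavior: for a socket in the LISTEN state, the kernel reports the current accept-queue length in `tcpi_unacked` and the backlog limit in `tcpi_sacked`, the same values `ss` prints as Recv-Q and Send-Q. `AcceptQueueStats`, `accept_queue_stats` and `make_listener` are hypothetical helpers, not libtorrent APIs.

```cpp
#include <cassert>
#include <cstdint>
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/socket.h>
#include <unistd.h>

// Accept-queue occupancy of a listening TCP socket (Linux only).
struct AcceptQueueStats {
    std::uint32_t queued;  // established but not yet accept()ed (ss: Recv-Q)
    std::uint32_t limit;   // backlog from listen(), capped by net.core.somaxconn (ss: Send-Q)
};

// Reads the accept-queue numbers via TCP_INFO. For a socket in the LISTEN
// state, Linux reports the queue length in tcpi_unacked and the backlog
// limit in tcpi_sacked. Returns false if getsockopt() fails.
bool accept_queue_stats(int listen_fd, AcceptQueueStats& out) {
    assert(listen_fd >= 0);
    tcp_info info{};
    socklen_t len = sizeof(info);
    if (getsockopt(listen_fd, IPPROTO_TCP, TCP_INFO, &info, &len) != 0) {
        return false;
    }
    out.queued = info.tcpi_unacked;
    out.limit = info.tcpi_sacked;
    return true;
}

// Hypothetical helper: a TCP listener on 127.0.0.1 with a kernel-chosen port.
// Returns the socket, or -1 on failure.
int make_listener(int backlog) {
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) return -1;
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = 0;  // 0 = let the kernel pick a free port
    if (bind(fd, reinterpret_cast<sockaddr*>(&addr), sizeof(addr)) != 0 ||
        listen(fd, backlog) != 0) {
        close(fd);
        return -1;
    }
    return fd;
}
```

Linux treats the accept queue as full only once it holds more entries than the limit, which is consistent with the Recv-Q of 3001 against a Send-Q of 3000 shown above.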

I used pstack to see what the threads are doing and got:

```
#0 0x00007fd6c6187d4d in sendmsg () from /lib64/libpthread.so.0
#1 0x0000000000c664c4 in boost::asio::detail::socket_ops::sync_sendto(int, unsigned char, iovec const*, unsigned long, int, sockaddr const*, unsigned long, boost::system::error_code&) ()
#2 0x0000000000c61020 in libtorrent::udp_socket::send(boost::asio::ip::basic_endpoint<boost::asio::ip::udp> const&, char const*, int, boost::system::error_code&, int) ()
#3 0x0000000000c77383 in libtorrent::utp_socket_manager::send_packet(boost::asio::ip::basic_endpoint<boost::asio::ip::udp> const&, char const*, int, boost::system::error_code&, int) ()
#4 0x0000000000c7c814 in libtorrent::utp_socket_impl::send_pkt(int) ()
#5 0x0000000000c7cd7b in libtorrent::utp_stream::issue_write() ()
#6 0x0000000000bc078c in void libtorrent::utp_stream::async_write_some<std::vector<boost::asio::const_buffer, std::allocator<boost::asio::const_buffer> >, libtorrent::aux::allocating_handler<boost::_bi::bind_t<void, boost::_mfi::mf2<void, libtorrent::peer_connection, boost::system::error_code const&, unsigned long>, boost::_bi::list3<boost::_bi::value<boost::shared_ptr<libtorrent::peer_connection> >, boost::arg<1>, boost::arg<2> > >, 336ul> >(std::vector<boost::asio::const_buffer, std::allocator<boost::asio::const_buffer> > const&, libtorrent::aux::allocating_handler<boost::_bi::bind_t<void, boost::_mfi::mf2<void, libtorrent::peer_connection, boost::system::error_code const&, unsigned long>, boost::_bi::list3<boost::_bi::value<boost::shared_ptr<libtorrent::peer_connection> >, boost::arg<1>, boost::arg<2> > >, 336ul> const&) ()
#7 0x0000000000d233cf in libtorrent::peer_connection::setup_send() ()
#8 0x0000000000d236c0 in libtorrent::peer_connection::send_buffer(char const*, int, int) ()
#9 0x0000000000d368d4 in libtorrent::bt_peer_connection::write_piece(libtorrent::peer_request const&, libtorrent::disk_buffer_holder&) ()
#10 0x0000000000d208be in libtorrent::peer_connection::on_disk_read_complete(libtorrent::disk_io_job const*, libtorrent::peer_request, boost::chrono::time_point<boost::chrono::steady_clock, boost::chrono::duration<long, boost::ratio<1l, 1000000000l> > >) ()
#11 0x0000000000d255ef in boost::detail::function::void_function_obj_invoker1<boost::_bi::bind_t<void, boost::_mfi::mf3<void, libtorrent::peer_connection, libtorrent::disk_io_job const*, libtorrent::peer_request, boost::chrono::time_point<boost::chrono::steady_clock, boost::chrono::duration<long, boost::ratio<1l, 1000000000l> > > >, boost::_bi::list4<boost::_bi::value<boost::shared_ptr<libtorrent::peer_connection> >, boost::arg<1>, boost::_bi::value<libtorrent::peer_request>, boost::_bi::value<boost::chrono::time_point<boost::chrono::steady_clock, boost::chrono::duration<long, boost::ratio<1l, 1000000000l> > > > > >, void, libtorrent::disk_io_job const*>::invoke(boost::detail::function::function_buffer&, libtorrent::disk_io_job const*) ()
#12 0x0000000000c8b8b0 in libtorrent::disk_io_thread::async_read(libtorrent::piece_manager*, libtorrent::peer_request const&, boost::function<void ()(libtorrent::disk_io_job const*)> const&, void*, int) ()
#13 0x0000000000d17a76 in libtorrent::peer_connection::fill_send_buffer() ()
#14 0x0000000000d23f91 in libtorrent::peer_connection::on_send_data(boost::system::error_code const&, unsigned long) ()
#15 0x0000000000c7f155 in boost::asio::detail::completion_handler<boost::_bi::bind_t<void, boost::function2<void, boost::system::error_code const&, unsigned long>, boost::_bi::list2<boost::_bi::value<boost::system::error_code>, boost::_bi::value<unsigned long> > > >::do_complete(boost::asio::detail::task_io_service*, boost::asio::detail::task_io_service_operation*, boost::system::error_code const&, unsigned long) ()
#16 0x0000000000b70e9c in boost::asio::detail::task_io_service::run(boost::system::error_code&) ()
#17 0x0000000000b710a5 in boost::asio::io_service::run() ()
#18 0x0000000000b6fbef in boost_asio_detail_posix_thread_function ()
#19 0x00007fd6c61809d1 in start_thread () from /lib64/libpthread.so.0
#20 0x00007fd6c55378ed in clone () from /lib64/libc.so.6
```

libtorrent seems to use a single thread for all network I/O (both sending and receiving). That thread is working fine; it is not stuck.

What is the main factor limiting how fast the network thread receives packets? Can I use multiple threads or some other settings to speed it up?

I believe the main bottleneck in receiving UDP packets (which I assume you're talking about) is that libtorrent currently makes one system call per received packet. That is, it doesn't use `recvmmsg()` or `sendmmsg()` (which are Linux extensions).
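As a sketch of the batching mentioned above, the C++ function below drains a UDP socket with one `recvmmsg()` call per batch instead of one `recvfrom()` per datagram. It is Linux-only and illustrative, not how libtorrent 1.1 receives packets; `recv_batch`, `bind_udp_loopback`, the batch size of 32 and the 1500-byte buffer per datagram are all assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>
#include <netinet/in.h>
#include <sys/socket.h>
#include <sys/uio.h>
#include <unistd.h>

// Hypothetical helper: a UDP socket bound to 127.0.0.1 on a kernel-chosen
// port. `bound` receives the actual address. Returns -1 on failure.
int bind_udp_loopback(sockaddr_in& bound) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) return -1;
    bound = sockaddr_in{};
    bound.sin_family = AF_INET;
    bound.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    bound.sin_port = 0;
    socklen_t len = sizeof(bound);
    if (bind(fd, reinterpret_cast<sockaddr*>(&bound), sizeof(bound)) != 0 ||
        getsockname(fd, reinterpret_cast<sockaddr*>(&bound), &len) != 0) {
        close(fd);
        return -1;
    }
    return fd;
}

// Receives up to kBatch datagrams with a single recvmmsg() system call and
// appends their payloads to `out`. MSG_WAITFORONE blocks until at least one
// datagram is available, then returns whatever else is already queued
// without blocking again. Sender addresses are not collected (msg_name is
// null), and datagrams longer than kMaxDatagram are truncated.
// Returns the number of datagrams received, or -1 on error.
int recv_batch(int fd, std::vector<std::string>& out) {
    assert(fd >= 0);
    constexpr unsigned int kBatch = 32;
    constexpr std::size_t kMaxDatagram = 1500;  // assumed upper bound per datagram
    std::vector<char> storage(kBatch * kMaxDatagram);
    iovec iov[kBatch];
    mmsghdr msgs[kBatch] = {};
    for (unsigned int i = 0; i < kBatch; ++i) {
        iov[i].iov_base = storage.data() + i * kMaxDatagram;
        iov[i].iov_len = kMaxDatagram;
        msgs[i].msg_hdr.msg_iov = &iov[i];
        msgs[i].msg_hdr.msg_iovlen = 1;
    }
    int n = recvmmsg(fd, msgs, kBatch, MSG_WAITFORONE, nullptr);
    if (n < 0) return -1;
    for (int i = 0; i < n; ++i) {
        // msg_len is the number of bytes the kernel wrote for message i.
        out.emplace_back(static_cast<const char*>(iov[i].iov_base), msgs[i].msg_len);
    }
    return n;
}
```

Under load, a single call can return many packets, which amortizes the per-syscall cost. `sendmmsg()` is the corresponding batching call on the send path seen in the stack trace.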

The TCP Recv-Q is high, so my guess is that the growing incoming and outgoing UDP traffic is hurting TCP receive performance (here, accepting new connections).

Maybe I could reduce uploading by lowering the number of unchoke slots, or by limiting the number of connections from the same peer?

I think limiting the connections from the same peer may help. But I can only find `connections_limit`, which controls the global number of connections. Am I missing an option like `connections_limit_per_peer`? (I enable `allow_multiple_connections_per_ip` by default.)
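The knobs discussed here correspond to libtorrent `settings_pack` entries. The fragment below is a hedged sketch assuming the 1.1 API (`settings_pack::set_int`/`set_bool` and `session::apply_settings`); the helper name `limit_upload_pressure` is hypothetical and the values are illustrative, not recommendations. Setting `allow_multiple_connections_per_ip` to false restricts each IP to a single connection, which is coarser than a per-peer limit.

```cpp
#include <libtorrent/session.hpp>
#include <libtorrent/settings_pack.hpp>

namespace lt = libtorrent;

// Hypothetical helper: reduce upload fan-out on an existing session.
// The numbers are illustrative only.
void limit_upload_pressure(lt::session& ses)
{
    lt::settings_pack pack;
    // Fewer unchoked peers means fewer peers being uploaded to at once.
    pack.set_int(lt::settings_pack::unchoke_slots_limit, 4);
    // Global cap on the number of peer connections.
    pack.set_int(lt::settings_pack::connections_limit, 500);
    // false = at most one connection per IP address.
    pack.set_bool(lt::settings_pack::allow_multiple_connections_per_ip, false);
    ses.apply_settings(pack);
}
```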

Finally, I found the option `network_threads`, which increases the number of network threads from 1 to N. That solved my problem.
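A minimal fragment for the option named above, assuming the libtorrent 1.1 `settings_pack` API; the helper name `use_network_threads` is hypothetical and the thread count is left to the caller. The 1.1 settings reference describes `network_threads` in terms of issuing socket sends (`async_write_some`) on peer connections, so receiving may still run on a single thread, which would fit the follow-up that the problem was not fully solved.

```cpp
#include <libtorrent/session.hpp>
#include <libtorrent/settings_pack.hpp>

namespace lt = libtorrent;

// Hypothetical helper: set the number of network threads (libtorrent 1.1).
void use_network_threads(lt::session& ses, int n)
{
    lt::settings_pack pack;
    pack.set_int(lt::settings_pack::network_threads, n);
    ses.apply_settings(pack);
}
```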

Oh, not yet.

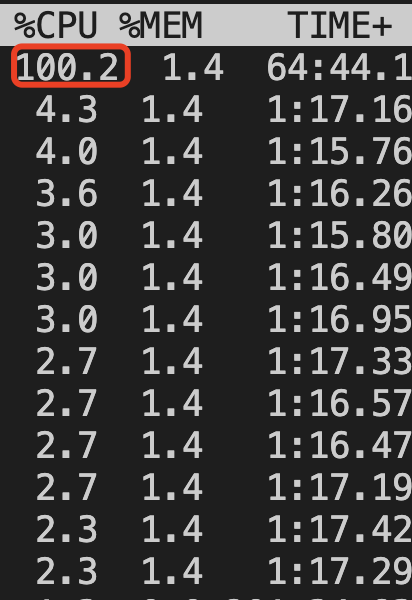

The bottleneck is definitely in receiving and sending: only one thread runs all of that work.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.
