Libtorrent 2.x memory-mapped files and RAM usage

libtorrent version (or branch): 2.0.5 (from Arch Linux official repo)

platform/architecture: Arch Linux x86-64, kernel ver 5.16

compiler and compiler version: gcc 11.1.0

Since qBittorrent started actively migrating to libtorrent 2.x, there have been a lot of concerns from users about excessive RAM usage.

I have not tested libtorrent with other frontends, but I think the result will be similar to qBt.

The thing is, libtorrent 2.x's memory-mapped files model may be a great improvement for I/O performance, but users are confused about RAM usage. It also does not seem to be tied to a particular platform: both Windows and Linux users are affected.

libtorrent 2.x causes strange memory monitoring behavior. For me, the process's RAM usage is reported as very high, but the memory is not actually consumed and the overall system RAM usage reported is low.

It's not critical in my case, but it is kind of confusing. Just in case: this counter does not include the OS filesystem cache. It is also not a write sync/flush issue, because it is also present when only seeding.

I'm not an expert in this topic, but maybe there are some mmap flags that can be tweaked to avoid this?

It seems that for Windows users it can also cause crashes: https://github.com/qbittorrent/qBittorrent/issues/16048. This is because on Windows a process can't allocate more than the virtual memory available (physical RAM + max pagefile size). It can also cause lags, because if the pagefile is dynamic, the system will expand it with empty space. Windows doesn't have an overcommit feature, so it must ensure that allocated virtual memory actually exists somewhere.

There's no way to avoid mmap allocating virtual address space. However, the relevant metric is resident memory, which is the actual amount of physical RAM used by a process. In htop these metrics are reported as VIRT and RES respectively. I don't know what PSS is, do you? It sounds like it may measure something similar to virtual address space.
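To make the VIRT/RES distinction concrete, here is a minimal Python sketch (not libtorrent code). It assumes a Linux system where /proc/self/status exposes VmSize (what htop shows as VIRT) and VmRSS (RES). The helper names and the 16 MiB size are made up for illustration. It maps a sparse file and takes a snapshot before mapping, after mapping, and after touching every page.

```python
import mmap
import os
import tempfile


def read_vm_kib(status_text):
    """Parse VmSize and VmRSS (in KiB) out of /proc/<pid>/status text."""
    out = {}
    for line in status_text.splitlines():
        key, _, rest = line.partition(":")
        if key in ("VmSize", "VmRSS"):
            out[key] = int(rest.split()[0])
    return out


def demo(size=16 * 1024 * 1024):
    """Map a sparse file, then read one byte per page; return 3 snapshots."""
    def snap():
        with open("/proc/self/status") as f:
            return read_vm_kib(f.read())

    with tempfile.TemporaryFile() as f:
        f.truncate(size)                      # sparse file, no data written
        before = snap()
        with mmap.mmap(f.fileno(), size, access=mmap.ACCESS_READ) as m:
            mapped = snap()                   # address space reserved here
            for off in range(0, size, mmap.PAGESIZE):
                m[off]                        # fault each page in
            touched = snap()                  # pages are now resident
    return before, mapped, touched


if __name__ == "__main__" and os.path.exists("/proc/self/status"):
    for label, values in zip(("before", "mapped", "touched"), demo()):
        print(label, values)
```

On a typical Linux box one would expect VmSize to jump as soon as the file is mapped, and VmRSS to grow only once the pages are actually read, but the exact numbers depend on the kernel and the filesystem.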

Is the confusion among users similar to this?

It can also cause lags, because if the pagefile is dynamic, the system will expand it with empty space.

Pages backed by memory-mapped files are not also backed by the pagefile. The pagefile backs anonymous memory (i.e. normally allocated memory). It is a known issue that Windows gives pages backed by memory-mapped files very high priority and fails to flush them when it's running low on memory, and there are some workarounds for that, such as periodically flushing views of files and closing files (forcing a flush to disk).

Windows doesn't have an overcommit feature, so it must ensure that allocated virtual memory actually exists somewhere.

I don't believe that's true. Do you have a citation for that?

I don't know what PSS is, do you? It sounds like it may measure something similar to virtual address space.

Yes. It's like RES but more precise. It doesn't matter anyway; the RES values are effectively identical in this case. I can take a screenshot with all possible values enabled, if you don't believe me.

Is the confusion among users similar to this?

No. I mentioned that it's not cache.

I don't believe that's true. Do you have a citation for that?

Well, just search for it. https://superuser.com/questions/1194263/will-microsoft-windows-10-overcommit-memory https://www.reddit.com/r/learnpython/comments/fqzb4h/how_to_allow_overcommit_on_windows_10/

Simply put, Windows never had an overcommit feature. And I personally, as a programmer, have run into this fact.

sorry, I accidentally clicked "edit" instead of "quote reply". And now I'm having a hard time finding the undo button.

More columns. VIRT is way larger.

I don't know what PSS is, do you? It sounds like it may measure something similar to virtual address space.

Yes. It's like RES but more precise. It doesn't matter anyway; the RES values are effectively identical in this case. I can take a screenshot with all possible values enabled, if you don't believe me.

The output from:

pmap -x <pid>

would be more helpful.

I don't believe that's true. Do you have a citation for that?

Well, just search for it. https://superuser.com/questions/1194263/will-microsoft-windows-10-overcommit-memory https://www.reddit.com/r/learnpython/comments/fqzb4h/how_to_allow_overcommit_on_windows_10/

None of these are Microsoft sources, just random people on the internet making claims. One of those claims is a program that (supposedly) demonstrates over-committing on Windows.

Simply Windows never had overcommit feature. And I personally as programmer faced this fact.

I think this may be drifting away a bit from your point. Let me ask you this. On a computer that has 16 GB of physical RAM, would you expect it to be possible to memory map a file that's 32 GB?

According to one answer on the stack overflow link, it wouldn't be considered over-committing as long as there is enough space in the page file (and presumably in any other file backing the pages, for non anonymous ones). So, mapping a file on disk (according to that definition) wouldn't be over-committing. With that definition of over-committing, whether windows does it or not isn't relevant, so long as it allows more virtual address space than there is physical memory.

The output from pmap -x <pid> would be more helpful.

Of course. (File name is redacted.)

10597:   /usr/bin/qbittorrent
Address           Kbytes     RSS   Dirty Mode  Mapping
000055763ecbd000     512       0       0 r---- qbittorrent
000055763ed3d000    3508    2316       0 r-x-- qbittorrent
000055763f0aa000    5984     368       0 r---- qbittorrent
000055763f682000     108     108     108 r---- qbittorrent
000055763f69d000      28      28      28 rw--- qbittorrent
000055763f6a4000       8       8       8 rw--- [ anon ]
000055764131e000   54236   54048   54048 rw--- [ anon ]
00007ef4cc899000 9402888 8062068   56592 rw-s- file-name-here
...

None of these are Microsoft sources, just random people on the internet making claims. One of those claims is a program that (supposedly) demonstrates over-committing on Windows. I think this may be drifting away a bit from your point. Let me ask you this. On a computer that has 16 GB of physical RAM, would you expect it to be possible to memory map a file that's 32 GB?

According to one answer on the stack overflow link, it wouldn't be considered over-committing as long as there is enough space in the page file (and presumably in any other file backing the pages, for non anonymous ones). So, mapping a file on disk (according to that definition) wouldn't be over-committing. With that definition of over-committing, whether windows does it or not isn't relevant, so long as it allows more virtual address space than there is physical memory.

Sorry. By overcommit I mean exceeding the total amount of virtual memory, which, as I said earlier, is physical RAM + pagefile. Windows doesn't allow that. If this happens, Windows will expand the pagefile with empty space to ensure full commit capacity, and will raise an out-of-memory error if the max pagefile size is exceeded. So if you have e.g. 16G of RAM and a 16G max pagefile size, the maximum possible amount of virtual memory for the whole system will be 32G. This can be easily tested.

Linux allows overcommit, by which I mean allocating more VIRT than the RAM + swap size. By the way, in the screenshot I have 16G of RAM and no swap at all. A VIRT size of 41G would not be possible on Windows in such a case.

Ok. When memory mapping a file, that file itself is backing the pages, so (presumably) they won't affect the pagefile, or be relevant for purposes of extending the pagefile.

That pmap output seems reasonable to me, and what I would expect. Except if you're seeding, then I would expect the "file-name-here" region to be mapped read-only.

I would also expect those pages to be some of the first to be evicted (especially the 99.3% of resident pages that are not dirty, which are cheap to evict). Do you experience this not happening? Does it slow down the system as a whole?
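For reference, the 99.3% figure can be reproduced from the mapped-file row of the pmap output above. This is only a small Python sketch of the arithmetic; parse_pmap_row is a hypothetical helper, not part of any tool.

```python
def parse_pmap_row(row):
    """Split one `pmap -x` data row into its columns (sizes are in KiB)."""
    address, kbytes, rss, dirty, mode, *mapping = row.split()
    return {
        "address": address,
        "kbytes": int(kbytes),
        "rss": int(rss),
        "dirty": int(dirty),
        "mode": mode,
        "mapping": " ".join(mapping),
    }


row = parse_pmap_row(
    "00007ef4cc899000 9402888 8062068 56592 rw-s- file-name-here"
)
dirty_pct = 100 * row["dirty"] / row["rss"]   # share of resident pages that are dirty
clean_pct = 100 - dirty_pct                   # share that can be dropped without I/O
print(f"dirty: {dirty_pct:.1f}%  clean: {clean_pct:.1f}%")
# prints "dirty: 0.7%  clean: 99.3%"
```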

I found a clearer analogy. Windows always behaves like Linux with the kernel option vm.overcommit_memory = 2. That's it.

Sorry again for previous word spam.
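A rough sketch of what the vm.overcommit_memory = 2 analogy implies numerically. In that mode Linux caps committed address space at swap plus a configurable percentage of RAM (vm.overcommit_ratio, 50 by default). The "RAM + pagefile" limit described above for Windows would correspond to a ratio of 100, which is an assumption of this sketch rather than documented Windows behaviour. The function name is made up.

```python
def commit_limit_gib(ram_gib, swap_gib, overcommit_ratio=100):
    """Strict-accounting commit limit: swap + RAM * ratio / 100 (in GiB)."""
    return swap_gib + ram_gib * overcommit_ratio / 100


# 16G RAM + 16G pagefile, as in the example above
print(commit_limit_gib(16, 16))       # 32.0
# 16G RAM and no swap: a 41G commitment would not fit
print(commit_limit_gib(16, 0) >= 41)  # False
# Linux default ratio of 50 with the same hardware
print(commit_limit_gib(16, 0, overcommit_ratio=50))  # 8.0
```

Note that, as far as the Linux overcommit-accounting documentation describes it, shared or read-only file-backed mappings are not charged against this limit at all, since the file itself backs the pages. That matches the point made earlier that a mapped file does not need pagefile space.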

That pmap output seems reasonable to me, and what I would expect. Except if you're seeding, then I would expect the "file-name-here" region to be mapped read-only.

I would also expect those pages to be some of the first to be evicted (especially the 99.3% of resident pages that are not dirty, which are cheap to evict). Do you experience this not happening? Does it slow down the system as a whole?

This was a download. It was just easier to make a quick showcase at such a large memory scale, because when downloading the memory consumption seems to grow indefinitely.

When seeding, memory consumption depends on peer activity. Only certain parts of files are loaded, as shown in this output (file names omitted):

Address           Kbytes     RSS Dirty Mode  Mapping
...
00007f0f3ca19000  949204   96008     0 r--s-
00007f0f7690e000  220484    5440     0 r--s-
00007f0f8405f000 1046808   15980     0 r--s-
00007f1003853000 1048576    1472     0 r--s-
00007f1043ea5000  995120   67236     0 r--s-
00007f1080a71000 1048576    2604     0 r--s-
00007f10c0a71000 1048576   53036     0 r--s-
00007f1100a71000 1048576   27404     0 r--s-
00007f1140a71000  888884   53708     0 r--s-
00007f1176e7e000 1048576   59892     0 r--s-
00007f11b6e7e000 1048576   41908     0 r--s-
00007f11f6e7e000 1178632  153136     0 r--s-
00007f123ed80000  797004    4668     0 r--s-
00007f126f7d3000 1173584  142572     0 r--s-
00007f12f6b50000    6748    4800     0 r--s-
00007f12f71e7000 1045132  101892     0 r--s-
00007f1336e8a000 1176632  140892     0 r--s-
00007f137eb98000 1048576   55532     0 r--s-
00007f13beb98000 1146904    5504     0 r--s-
00007f1404b9e000 1178168  191456     0 r--s-
00007f144ca2c000  886596   47408     0 r--s-
00007f1482bfd000  836900   34880     0 r--s-
00007f14b5d46000 1163336  149824     0 r--s-
00007f14fcd58000 1165984  224952     0 r--s-
...

When seeding, RSS is freed when files are not in use. But qBt memory consumption is still very large. With intensive seeding it easily grows to gigabytes.

With libtorrent 1.x qBt consumes about 100M overall (with the in-app disk cache disabled), regardless of anything.

Do you experience this not happening? Does it slow down the system as a whole?

I performed a better test, downloading a very large file that is larger than my RAM.

free output:

        total   used   free  shared  buffers  cache  available
Mem:     15Gi  1.7Gi  183Mi   104Mi    0.0Ki   13Gi       13Gi
Swap:      0B     0B     0B

pmap output:

Address            Kbytes      RSS Dirty Mode  Mapping
00007f085b17e000 34933680 13305724 37664 rw-s- filename

RSS caps at the amount of RAM available. No system slowdown, OOM events or the like. I don't have swap, though.

So, good news: it works just like the regular disk cache (it is counted in the cache column of the free output), at least on Linux. The dirty amount is small, as expected. The only scary thing is that it shows up as resident memory in per-process stats.

My impression is that Linux is one of the most sophisticated kernels when it comes to managing the page cache. Windows is having more problems where the system won't flush dirty pages early enough, requiring libtorrent to force flushing. But that leads to waves of disk thread lock-ups while flushing. There's some work to do on windows still, to get this to work well (unless persistent memory and the unification of RAM and SSD happens before then :) )

Yeah. Linux at least can be tweaked in all aspects and debugged.

I did some more research into per-process stats.

RssAnon: 123820 kB

RssFile: 5087496 kB

RssShmem: 9276 kB

Mapped files are evidently counted as RssFile, and monitoring tools like htop seem to simply sum up all three values.

I can't say whether this is just a monitoring software issue (should RssFile even be included?) or whether the situation is more complicated.
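For anyone who wants to separate the three components themselves, here is a small Python sketch that parses these fields from /proc/<pid>/status text. According to the proc(5) man page, VmRSS is the sum of RssAnon, RssFile and RssShmem, which would explain the single RES figure. The function name is made up, and the sample values are the ones quoted above.

```python
def rss_breakdown(status_text):
    """Return the RssAnon/RssFile/RssShmem fields (KiB) from status text."""
    fields = {}
    for line in status_text.splitlines():
        key, _, rest = line.partition(":")
        if key in ("RssAnon", "RssFile", "RssShmem"):
            fields[key] = int(rest.split()[0])
    return fields


sample = """RssAnon:\t  123820 kB
RssFile:\t 5087496 kB
RssShmem:\t    9276 kB"""

parts = rss_breakdown(sample)
total = sum(parts.values())           # what a single "RES" column would show
print(parts)
print("total kB:", total)             # total kB: 5220592
print("anonymous only kB:", parts["RssAnon"])
```

To inspect a live process, the same function can be fed the contents of /proc/<pid>/status. RssAnon is probably the closest to what users think of as "memory the application itself uses".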

This is an issue on Linux, as libtorrent's memory-mapped files implementation affects file eviction (swapping out) functionality.

Please watch the video where rechecking the torrent in qBittorrent forces the kernel to swap out 3 GB of RAM.

https://user-images.githubusercontent.com/3054729/149670686-f2b33d7a-f08c-4426-adc7-c06a56d18d49.mp4

Regular mmap()'ed files never trigger this behavior: they are never counted as RSS and do not force the memory of other processes to be swapped out.

Relevant issue in qBittorrent repo: https://github.com/qbittorrent/qBittorrent/issues/16146

I also found this behavior strange. I don't think it should work this way.

But diving into the source, I haven't found anything suspicious. mmap seems to be used in the usual way:

https://github.com/arvidn/libtorrent/blob/55111d2c06d991639d9103b6c7b5fc2ba4a2b82a/src/mmap.cpp#L580

And the flags are normal:

https://github.com/arvidn/libtorrent/blob/55111d2c06d991639d9103b6c7b5fc2ba4a2b82a/src/mmap.cpp#L326

I also tried playing around with the flags, but nothing changed.

But I'm not an expert in C++ and Linux programming, so I could definitely be missing something.

Ugh.

- mmap()'ed files are indeed shown in RSS on Linux as you read them.
- When physical memory becomes low, the kernel will unmap sections of the file from physical memory based on its LRU (least recently used) algorithm. But the LRU is also global. The LRU may also force other processes to swap pages to disk, and reduce the disk cache. This can have a severely negative affect on the performance on other processes and the system as a whole.[1] This is what I see on my system with vm.swappiness = 80.
- It is possible to hint the kernel that you don't need some parts of the memory mapping with madvise MADV_DONTNEED without unmapping the file, but you'll need to implement your own LRU algorithm for this to be efficient in libtorrent's case. You can't 'set' the mapped memory to reclaim itself more automatically; you'll need to manually call madvise on selected regions you use less than others.
- Mmaped files make memory consumption monitoring problematic, at least on Linux, which was also noticed by the golang developers and by the developers of the jemalloc library used in Firefox: "I looked at the task manager and Firefox sux!"
- Unless torrenting is the machine's highest-priority task, using mmap (at least on Linux) for torrent data only decreases overall performance, as it affects other workloads by swapping more than it could/should and "spoiling" the LRU with lower-priority data.

MADV_DONTNEED will destroy the contents of dirty pages, so I don't think that's an option, but there's MADV_COLD in newer versions of linux.
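As an illustration of what such a hint could look like, here is a hedged Python sketch rather than libtorrent's code. It assumes Linux, where MADV_COLD has the numeric value 20 and needs kernel 5.4 or newer. Python's mmap module may not export the constant, hence the fallback. hint_cold is a made-up helper name.

```python
import mmap
import sys
import tempfile

# Linux's numeric value for MADV_COLD; only used if the module lacks the name.
MADV_COLD = getattr(mmap, "MADV_COLD", 20)


def hint_cold(mapping, offset, length):
    """Ask the kernel to deprioritise a page-aligned range.

    Returns True if the hint was accepted, False if it is unsupported.
    """
    if not sys.platform.startswith("linux") or not hasattr(mapping, "madvise"):
        return False
    try:
        mapping.madvise(MADV_COLD, offset, length)
        return True
    except OSError:
        return False  # e.g. a kernel that does not know MADV_COLD


size = 4 * mmap.PAGESIZE
with tempfile.TemporaryFile() as f:
    f.truncate(size)
    with mmap.mmap(f.fileno(), size, access=mmap.ACCESS_WRITE) as m:
        m[:5] = b"piece"                 # dirty the first page
        accepted = hint_cold(m, 0, size)
        still_there = m[:5]              # the written data must survive the hint
print(accepted, still_there)
```

Whether the hint actually changes eviction order in a way that helps would still have to be measured. The sketch only shows that the call is non-destructive for written data.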

It seems I was not entirely correct in my previous comment. For some reason, my Linux system also swaps out anonymous memory when reading huge files by regular means (read/fread), and decreasing vm.swappiness does not help much. This might be a recent change, as I don't remember such behavior in the past. So take my comment with a grain of salt: everything might work fine, and I need to do more tests.

@ValdikSS, try to adjust vm.vfs_cache_pressure.

I am very confused about what htop reports as memory usage for qbittorrent with libtorrent 2.x.

Can someone explain these values?

Can someone explain these values?

Well, this is how the OS treats memory-mapped files. This question should be addressed to Linux kernel devs, I suppose.

For the mmap issue, how about creating many smaller maps instead of one large map of the entire file, and making the size of the pool of mapped chunks configurable, or defaulting it to a reasonable value? It's similar to the original cache_size, but it would reduce confusion for most users, who rely on tools that mix up mmap-ed pages and actual memory usage.

For the mmap issue, how about creating many smaller maps instead of the large map of the entire file

I don't think it's obvious that it would make a difference. It would make it support 32 bit systems for sure, since you could stay within the 3 GB virtual address space. But whether unmapping a range of a file causes those pages to be forcefully flushed or evicted, would have to be demonstrated first.

If that would work, simply unmapping and remapping the file regularly might help, just like the periodic file close functionality.
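To show what "many smaller maps" could mean mechanically, here is a minimal Python sketch that reads a byte range by mapping one aligned window at a time instead of the whole file. The window size and the function name are arbitrary choices for illustration. It does not answer the open question of whether unmapping actually gets the pages evicted.

```python
import mmap
import os
import tempfile

# Hypothetical window size; mmap offsets must be multiples of the granularity.
WINDOW = 16 * mmap.ALLOCATIONGRANULARITY


def read_windowed(fd, offset, length, window=WINDOW):
    """Read [offset, offset + length) by mapping one aligned window at a time."""
    size = os.fstat(fd).st_size
    end = offset + length
    if offset < 0 or length < 0 or end > size:
        raise ValueError("range outside of file")
    out = bytearray()
    while offset < end:
        base = offset - offset % window       # window-aligned start
        span = min(window, size - base)       # last window may be short
        with mmap.mmap(fd, span, access=mmap.ACCESS_READ, offset=base) as m:
            lo = offset - base
            hi = min(span, end - base)
            out += m[lo:hi]
        offset = base + hi                    # window is unmapped at this point
    return bytes(out)


with tempfile.TemporaryFile() as f:
    data = bytes(i % 251 for i in range(2 * WINDOW + 100))
    f.write(data)
    f.flush()
    # A read that starts in the first window and ends in the third.
    got = read_windowed(f.fileno(), WINDOW - 10, WINDOW + 50)
print(got == data[WINDOW - 10:2 * WINDOW + 40])
```

With this layout only one window of address space is mapped at any time, which is what would keep a 32-bit process within its limits.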

But whether unmapping a range of a file causes those pages to be forcefully flushed or evicted, would have to be demonstrated first.

I believe the default behavior is to flush pages, or to delay the flush until sync, unless special flags such as MADV_DONTNEED were used.

But you can always use msync(2) to force the flush.
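A small Python sketch of forcing a flush on a mapped range. On Unix, Python's mmap.flush() is implemented with msync(2), so it stands in for the C call here. The file layout is invented for the example.

```python
import mmap
import os
import tempfile

size = 2 * mmap.PAGESIZE
fd, path = tempfile.mkstemp()
try:
    os.ftruncate(fd, size)
    with mmap.mmap(fd, size, access=mmap.ACCESS_WRITE) as m:
        m[0:5] = b"block"            # the first page is now dirty
        m.flush(0, mmap.PAGESIZE)    # msync() the first page only
    # Reading back goes through the page cache, so this confirms the data
    # is visible through the file, not that it has reached the disk.
    with open(path, "rb") as check:
        read_back = check.read(5)
finally:
    os.close(fd)
    os.unlink(path)
print(read_back)
```

Flushing page-aligned sub-ranges like this is what a periodic "flush what is dirty" strategy would build on. How often to do it without stalling the disk thread is a separate tuning question.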

Just my 2 cents: I'm using qBittorrent, which uses libtorrent, on Windows. In earlier versions of qBittorrent I set the cache size to 0 (disabled) and turned the OS cache on. This worked perfectly: disk usage was the lowest it could ever be (5-20%) with very little memory usage (160-200 MiB) for the actual qBittorrent process. Modified cache stayed relatively low as well.

qBittorrent 4.4.0 started using libtorrent 2.0, and with it the cache size setting disappeared. When I added a couple of torrents, disk usage was around 40-50% and memory shot up to 12-14 GiB for the qBittorrent process, for torrents similar to those described above.

Windows 10 x64, hardware RAID 5 using 6 HDDs, 32 GiB of RAM

Based on this https://libtorrent.org/upgrade_to_2.0-ref.html#cache-size I feel something is not quite right, since it says that libtorrent 2.0 should exclusively use the OS cache. If I understand correctly, libtorrent 2.0 wanted to make my previous setup standard and unchangeable. But it does not behave the same. Or am I misunderstanding something?

@SL-Gundam

libtorrent 2 features memory-mapped files. It asks the OS to map the file into virtual memory and lets the OS decide when to do the actual reads, writes and releases through the CPU cache - physical memory - disk stack.

The OS will report high memory usage, but most of this usage should actually just be cached file data that doesn't need to be freed at the moment. (Unless, in some scenarios, Windows doesn't flush its cache early enough, which is what https://github.com/arvidn/libtorrent/issues/6522 and this issue are talking about.)

But I do think I observed disk usage and IO getting higher than before when I first used a libtorrent 2 build. I'm not sure if that's still the case on Windows now, as I've migrated to Linux.

@escape0707 So it does not use the Windows standby file cache but rather a Windows-managed in-process cache? I guess I'll be staying with qBittorrent 4.3.9 (libtorrent 1.x).

libtorrent 1 with both the application cache and the OS cache enabled showed similar disk IO activity to libtorrent 2, according to Windows Task Manager. Within 10% of each other at the very least.

A quick summary is that libtorrent 2.0 uses memory-mapped files. All modern operating systems other than Windows use a unified page cache at the block device level, where anonymous memory (backed by the swapfile) and memory-mapped files (including shared libraries and running executables) are all part of the same cache. Linux may be the most sophisticated at deciding how to balance these pages in physical RAM.

The benefits of using memory mapped files are primarily:

- the kernel (which knows how much physical RAM the machine has available) has the best knowledge of when and in which order to flush the cache and, perhaps more importantly, of how much read cache to keep and for how long.

- some kinds of storage can be directly addressed by the CPU, as if it was RAM, circumventing a lot of kernel infrastructure and providing a very high performance. (linux calls this DAX)

Windows has a slightly more old-school approach, where memory-mapped files sit on top of the filesystem, which in turn has a disk cache (at the file level, rather than the block device level). When writing to a memory-mapped file on Windows, the page is first placed in the memory-mapped buffer, and then (at some point) is written to the file, but possibly put in the disk cache.

There's a patch to force flush the memory mapped buffer to the disk here. There may still be issues with windows not flushing the disk buffer very often or early. This is a problem in libtorrent 1.2 as well, and (as far as I understand) was solved by periodically closing files. This is a feature that has been re-enabled in libtorrent 2.0 recently.

Another issue is; if the file being downloaded to isn't sparse (or is a network share, that possibly doesn't preserve sparseness across the network) every page that's written may also need to be read just to populate the memory mapped buffer. When downloading to a network drive, using a memory mapped file may not be that great. I'm working on a simple overlapped-io disk backend. That would clearly tell a network share (for instance) that a buffer is to be written, no matter what's on disk already, so it could be submitted.

also, when reporting problems in libtorrent (specifically the mmap-disk backend), just pointing to vmstats numbers suggesting the kernel decided to use a lot of physical memory for disk cache is not sufficient. That's a feature of the memory mapped disk backend.

However, if the kernel is having a hard time making good decisions about which pages to keep in RAM, where it negatively impacts the system, I would like to know about it. There are hints to help the kernel make better decisions.

Users will still be frightened by memory utilization, especially on Windows, where it is counted as actually used. Significant pushback from the userbase can already be seen for qBt. I mean, users will continue to blindly come up with theories about memory leaks and malicious activity, just because this is a complicated topic and only a small number of technical people can understand what is going on. I think "the best is the enemy of the good" applies here.

This reminds me of https://www.linuxatemyram.com/ .

Maybe an FAQ and links to some technical references are needed on libtorrent.org, but at the end of the day, we needed to progress to a better way of solving this cache problem.

This situation is worse. The FS cache does not appear as part of a working set, but memory-mapped files do, and there is no easy way to distinguish them from actual memory consumption. Also, yes, mmap-ed files just seem to be handled worse than the regular FS cache, regardless of OS.

Windows standby cache and modified cache work perfectly in my opinion. Memory-mapped files in the application process... not a fan. It's a problem when applications feel they need to cache stuff for themselves or decide how things should be cached, which p2p apps are notorious for. Just do normal reads and writes and let the OS do its thing.

My problem with the memory mapped files is not the amount of memory used. It's that the cache is directly connected to the application and not a shared cache. Windows standby and modified cache are shared and all processes can profit from it.

You should really do some tests on Windows where you download 10 5GB+ torrents at the same time and compare libtorrent 1.x to 2.x where 1.x uses the following settings.

- cache size to 0 (disabled)

- OS cache on

- using normal HDDs

- Make sure the torrents and internet connection are able to reach 30-50 MB/s download.

- I use full-allocation non-sparse files, so I don't know how that would impact the situation

Yeah, I also have no issues with the qBt in-app cache disabled, with a 1 Gbps link and a number of torrents downloading/seeding simultaneously. The OS handles the cache well. For me, I haven't noticed any performance degradation with mmap-ed files, but no improvement either. But some people report degradation with a very large number of torrents in the client, e.g. https://github.com/qbittorrent/qBittorrent/issues/16456#issuecomment-1042586505

this is a non-issue. I have 512Gb of ram and 219 files with zero issues. qbittorrent-nox libtorrent is using 46GB of ram. If you have any issues with ram usage, please try to equip your computer with a reasonable amount of memory.

Hello,

I started having a similar issue (high memory consumption) when downloading 20GB files.

I'm currently on Windows 10 x64, qBittorrent 4.4.1:

A working fix is underway people! (currently for windows only) https://github.com/qbittorrent/qBittorrent/pull/16485

This is an issue for me on Ubuntu Server 20.04 (i5, 8gb RAM). Using qBittorrent 4.4.1, when downloading 3+ torrents simultaneously (100mbps+ connection, spinning hard drive), the CPU and RAM usage will spike to 60-80%, resulting in input lag on remote SSH connection and other services being unavailable until the downloads are complete.

I understand that unused RAM is wasted RAM, but this is to the point where other programs requiring it are apparently not getting it. I initially blamed it on qBittorrent, but reverting to 4.3.9 with libtorrent 1.2 resolves all this, so I would not say it's a Windows-only problem.

I do not think Ubuntu 20.04 supports qb 4.4.1? According to its website:

Also Ubuntu 20.04 is dropped from the PPAs because it doesn't have the minimum required Qt5 version (5.15.2). The AppImage should cover any users left on that version.

What's your qbittorrent build?

@star-39 I used the linuxserver Docker image but I think that's irrelevant given that 4.3.9 does not present those issues. I'm using it headless so only accessing the web UI, hence not affected by Qt versions.

This is an issue for me as well, but on headless Debian. I run qbittorrent-nox on a VM using NFS to access storage, and ever since 4.4.x any download basically makes the drive act like it's mounted with sync. IO jumps to around 90-100% with very few writes, unlike 4.3.9, where it downloads at near-native speeds. On 4.3.9 I could download at around 80MB/s, whereas on 4.4.x the best I got is around 20MB/s with the drive's IO at 100%.

Another thing with 4.4.x: when idling and just seeding, this is the network utilization while uploading at only around 4MB/s.

ens19 is the interface I only use for NFS, with MTU 9000. As you can see, it's receiving give or take 70MB/s of something. The NFS server shows this as well, and it's driving up CPU usage and raising the drive's IO, reading 70MB/s to upload around 4MB/s. ens18 is the normal LAN.

Next is an image of 4.4.0 (idling, just seeding) compiled with the old libtorrent, where I have no problems.

So something is happening here where libtorrent 2.x mmap is not playing nicely with NFS.

@arvidn How does this behave on a filesystem like ZFS, where file operations are cached in memory natively via the ZFS ARC? Unfortunately I couldn't find much other than the following (which is relatively old): https://stackoverflow.com/questions/34668603/memory-usage-of-zfs-for-mapped-files

There is one peculiar workload that does lead ZFS to consume more memory: writing (using syscalls) to pages that are also mmaped. ZFS does not use the regular paging system to manage data that passes through reads and writes syscalls. However mmaped I/O which is closely tied to the Virtual Memory subsystem still goes through the regular paging code . So syscall writting to mmaped pages, means we will keep 2 copies of the associated data at least until we manage to get the data to disk. We don't expect that type of load to commonly use large amount of ram

@draetheus I think I can answer this as I have this setup. I have 512 GB of RAM and 8x18 TB hard drives. A very modest middle of the road PC.

When I had a btrfs array, which didn't have an in-memory cache, qbittorrent would use 67GB of memory for minimum feature level.

When I had a zfs array, the ARC would be shrunk by 67GB to make room for qbittorrent. I tried making a dataset with only 102 GB of files that were in the client. The ARC (eventually) ballooned to 102GB and stopped, with qbittorrent using 67GB on top, so this means that some files are double-paged into memory.

I believe that's operating as designed, you really want your torrent client being the most memory hungry application on your PC. Your torrent client will determine what needs to go in memory, not your file system layer. Otherwise it wouldn't do this, obviously.

If this is a problem, I suggest using an older version of libtorrent that doesn't force-pin your files to memory. The community who has sub-512GB could start using that version and backport feature updates, and the community who is interested in a pure libtorrent configuration could continue on main. I for one know that the devs know what they are doing, and I'm not going to question the fact that my client is using the equivalent RAM of eight virtual machines. This is not a bug, it's operating as designed.

to be clear, it's the kernel that decides what the physical memory is best used for. Do you have a reason to believe the kernel page cache performed worse than the zfs ARC in your case?

when I had a zfs array the arc would be shrunk by 67GB to make room for qbittorrent. I tried making a data set with only 102 GB of files that were in the client. Arc (eventually) ballooned to 102GB and stopped + qbittorrent using 67GB so this means that some files are double paged to memory.

The docs for libtorrent's cache_size say the cache is shared between socket buffers and file cache, so just because it's full doesn't mean it's full of file data (@arvidn correct me if I'm wrong).

The ARC always can fill up. It's a cache; it's supposed to do that. It may be that mmap fills the ARC more aggressively than other access patterns (looks like there were issues with mmap and ZFS a few years back), but that shouldn't affect how you set your zfs_arc_max.

The docs for libtorrent's cache_size say the cache is shared between socket buffers and file cache, so just because it's full doesn't mean it's full of file data (@arvidn correct me if I'm wrong).

in libtorrent 2.0 there is no cache_size. The disk cache is handled by the kernel.

Just FYI, qbittorrent 4.4.2 with libtorrent 2.0.5 allocates 2 TB (2005 GB) of virtual memory on my x86_64 system.

As far as I know, AArch64 with 3-level translation and without Large Virtual Address support operates only in up to 512 GB virtual memory space [source 1: Linux kernel + source 2: The definitive guide to make software fail on ARM64].

on arm64 you get only 39-bit addresses if you take Linux defaults: only 512GB addressable space
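The 39-bit / 512 GB figure follows from the page-table geometry. A short Python check, assuming 4 KiB pages and 8-byte table entries, so that each translation level resolves 9 bits (va_bits is a made-up helper):

```python
def va_bits(levels, page_shift=12):
    """Virtual address width for a page table with `levels` levels.

    Each level indexes a table of 2**(page_shift - 3) eight-byte entries.
    """
    return page_shift + levels * (page_shift - 3)


bits = va_bits(3)
print(bits, 2**bits // 2**30)        # 39 512  (bits, GiB)
print(va_bits(4))                    # 48
print(2005 * 2**30 > 2**bits)        # True: 2005 GB does not fit in 39 bits
```

So a process that wants roughly 2 TB of virtual address space for its mappings could not get it under that configuration, while a 4-level (48-bit) layout has ample room.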

Has anyone (@arvidn) tested libtorrent with mmaped files in such a pretty realistic setup? How does it behave on 32-bit systems?

Just FYI, qbittorrent 4.4.2 with libtorrent 2.0.5 allocates 2 TB (2005 GB) of virtual memory on my x86_64 system.

On Windows an x64 process often allocates that much virtual memory by default, so it's pretty normal IMO. Though I'm unsure whether the definitions of virtual memory on Windows and Linux are comparable. https://superuser.com/questions/1503829/process-explorer-reports-processes-with-2tb-virtual-size-in-windows-10

I still feel that libtorrent should focus more on standby memory caching, not on the mmap file cache (active in-process file cache)

Though unsure whether the definitions in Windows and Linux for virtual memory are comparable.

Not really. In Windows Virtual size doesn't mean anything most of the time.

For actual memory allocated by a process you can look at Commit size. But it is still not always directly comparable.

@arvidn I have a question for developers. How hard would it be to bring back the old cache mechanism in parallel to the existing one and give users the option to choose between them? I got an impression, based on numerous comments here and on qBittorrent, that there are many people who would prefer to have a choice to benchmark both systems themselves and pick the one that suits them better. What are the negatives of that in the long run (apart from somewhat increased maintenance cost)?

I think the root cause is that mmap can pollute the system cache, evicting pages of interactive applications such as ssh and anything else that's still useful to the user. I still suggest the application should limit its memory usage with cgroups, or an equivalent on non-Linux systems.

The old disk I/O subsystem was quite complex so bringing it back would be a considerable maintenance burden.

Since the main problem appears to be with poor handling of mmaped writes, a possible solution would be to use regular syscalls for writes while retaining mmaped I/O for reads. IIRC some other apps which use mmapped I/O use this approach.
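A minimal sketch of that split, assuming a Unix-like system: reads go through a read-only mapping, writes go through `pwrite()`. The `HybridFile` class is hypothetical and only illustrates the idea; it is not how libtorrent is implemented.

```python
import mmap
import os


class HybridFile:
    """Reads through a read-only mapping, writes through regular pwrite() calls."""

    def __init__(self, path, size):
        self._fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
        os.ftruncate(self._fd, size)
        # ACCESS_READ gives a shared, read-only mapping: it can never dirty pages.
        self._map = mmap.mmap(self._fd, size, access=mmap.ACCESS_READ)

    def write(self, offset, data):
        """Write with a regular syscall, looping in case of short writes."""
        view = memoryview(data)
        while view:
            written = os.pwrite(self._fd, view, offset)
            view = view[written:]
            offset += written

    def read(self, offset, length):
        """Copy bytes out of the mapping."""
        return self._map[offset:offset + length]

    def close(self):
        self._map.close()
        os.close(self._fd)
```

Because the mapping is shared and backed by the same page cache, data written with `pwrite()` is visible through the mapping on Linux without remapping.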

The old disk I/O subsystem was quite complex so bringing it back would be a considerable maintenance burden.

I doubt that a general-purpose kernel subsystem could be as efficient as a well-tuned application-specific one.

How long will it take to adapt the library behavior to many variants of the different kernels? What if the kernels change their algorithms? It can turn out to be a significant maintenance burden and complexity by itself but without clarity and control of the DIY system. Essentially, the kernel cache implementation will always be a black box, even worse, multiple different constantly changing black boxes.

On the contrary, the self-made system reduces the number of variables and guarantees that the performance problems can be much easier reproduced and as a result solved despite the tradeoffs the kernel developers make in certain specific situations.

The old disk I/O subsystem was quite complex so bringing it back would be a considerable maintenance burden.

Since the main problem appears to be with poor handling of mmaped writes, a possible solution would be to use regular syscalls for writes while retaining mmaped I/O for reads. IIRC some other apps which use mmapped I/O use this approach.

Afaik, the old I/O storage engine was quite heavily optimized and coupled with the memory cache management. Since lt still supports custom storage engines, how about having an alternative storage engine?

to be clear, it's the kernel that decides what the physical memory is best used for. Do you have a reason to believe the kernel page cache performed worse than the zfs ARC in your case?

A counterargument could be that the kernel is dumb on telling which page more important when memory pressure comes. For example, the sshd pages could usually be more important than pages of a Linux ISO file that has few leechers. However, the mmap approach doesn't really give the kernel any hint, causing performance degradation for any other processes sharing the same page cache pool.

Ideally we need the Linux kernel to limit the maximum number of cached pages per application; however, it seems that doesn't exist.

A counterargument could be that the kernel is dumb on telling which page more important when memory pressure comes

I wasn't making an argument, I was asking a question. Are you saying the kernel is dumb, or are you speculating that it might be?

However the mmap approach doesn't really give kernel any hint

I don't know what you base that claim on (I'm curious). The kernel has quite a lot of information, more than any single process one might argue. It has:

- cache hit rate and ratio

- madvise() hints

- the kind of storage backing the page (and I would expect it also has an estimate of latency and throughput of accessing the storage)

A counterargument could be that the kernel is dumb on telling which page more important when memory pressure comes

I wasn't making an argument, I was asking a question. Are you saying the kernel is dumb, or are you speculating that it might be?

However the mmap approach doesn't really give kernel any hint

I don't know what you base that claim on (I'm curious). The kernel has quite a lot of information, more than any single process one might argue. It has:

- cache hit rate and ratio

- madvise() hints

- the kind of storage backing the page (and I would expect it also has an estimate of latency and throughput of accessing the storage)

Afaik, the default Linux page cache (unlike more advanced caches such as the ZFS ARC) doesn't track per-page hit/miss ratio or whether a page has been accessed recently, and most consumer-level applications don't use madvise. It does have the info on the backing storage; however, I don't think the kernel will sort pages by that when trying to evict pages, since those mmap-ed page caches usually share the same backing device.

I believe that's the reason most high-performance commercial applications, such as databases, use explicit I/O hints such as direct I/O and madvise to instruct the kernel to unload useless pages.

Afaik, the default Linux page cache (unlike more advanced caches such as the ZFS ARC) doesn't track per-page hit/miss ratio or whether a page has been accessed recently,

You could try this tool: http://manpages.ubuntu.com/manpages/bionic/man8/cachestat-perf.8.html

and most consumer-level applications don't use madvise,

But this thread is about libtorrent, which does use madvise()
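For readers unfamiliar with `madvise()`, here is a small sketch of what such hints look like on a file mapping. It uses Python's `mmap.madvise()` (Python 3.8+, Unix). Which advice values libtorrent actually passes is not stated in this thread; `MADV_WILLNEED` and `MADV_DONTNEED` are chosen here purely for illustration, and the function names are hypothetical.

```python
import mmap
import os


def _advise(mapping, advice_name, start, length):
    """Apply a madvise() hint if this platform and Python version support it."""
    advice = getattr(mmap, advice_name, None)
    if advice is not None and hasattr(mapping, "madvise"):
        mapping.madvise(advice, start, length)


def read_with_hints(path, offset, length):
    """Read a byte range from a non-empty file through a mapping, with hints."""
    with open(path, "rb") as f:
        size = os.fstat(f.fileno()).st_size
        with mmap.mmap(f.fileno(), size, access=mmap.ACCESS_READ) as mapping:
            # madvise() needs a page-aligned start address.
            start = (offset // mmap.PAGESIZE) * mmap.PAGESIZE
            span = min(size, offset + length) - start
            _advise(mapping, "MADV_WILLNEED", start, span)  # ask for read-ahead
            data = mapping[offset:offset + length]          # copy the bytes out
            _advise(mapping, "MADV_DONTNEED", start, span)  # pages no longer needed here
            return data
```

The hints are advisory: the kernel is free to ignore them, which is part of what is being debated above.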

It does have the info on the backing storage; however, I don't think the kernel will sort pages by that when trying to evict pages, since those mmap-ed page caches usually share the same backing device.

I'm not sure exactly what scenario you're imagining. But I don't think it's uncommon for the swap file (backing anonymous memory) to be on a different device than the bittorrent download directory, or executable files and shared libraries.

Are you saying the kernel is dumb, or are you speculating that it might be?

@arvidn Please, let me jump in here and add my 2 cents. I would argue that the kernel might be extremely smart but at the same time somewhat blind about the actual tradeoffs the user is willing to take. The kernel has the ability to optimize the throughput of the whole system probably to a greater extent. However, it has much fewer opportunities to optimize for specific latency expectations the actual user has in mind for different applications. What I mean is that the desktop user would trade off the overall throughput of the system for the responsiveness of the individual app. At the same time, the server user will probably do the opposite. That is the conflict the kernel has little power to know about.

For the libtorrent, I would assume that an average user expectation is "please work as fast as possible unless it makes an impact on my browser or other apps. Even if I stepped away for a minute from my PC." The problem is that the new version of libtorrent behaves differently. The kernel doesn't know that the idle memory of the browser (which stayed 5 minutes minimized) is much more important for the user and shouldn't be paged off even if it will speed up the throughput of the libtorrent and the machine overall.

Moving forward I came across interesting reasoning SQLite provides about Memory-Mapped I/O. Here is the summary:

- mmap() is disabled by default.

- It can be enabled only up to a specific size

- This specific size is capped by a hardcoded constant anyway

- The main advantage of the mmap() they claim is avoidance of the memory copy between the kernel and the user space

- They don't mention any advantages mmap() gives for the cache implementation

- They use mmap() for read-only

Coming back to the original quote, I would personally argue that, although the kernel is definitely not dumb, it doesn't have much of an advantage over a user-space implementation of the cache if the data is used by the app exclusively. I mean the cache only; zero-copy is still a big benefit when needed. The app can monitor the total available memory and grow the cache automatically, it can use fadvise() instead of madvise() to control prefetch, etc. At the same time, the user-space cache implementation could be much better tuned for specific app requirements.
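As a rough illustration of that idea, the sketch below keeps a small LRU cache of pieces in user space and uses `posix_fadvise()` to tell the kernel it may drop its own copy. The `PieceCache` class and its parameters are hypothetical, and `POSIX_FADV_DONTNEED` is only advisory, so this is a sketch of the approach rather than a tuned implementation.

```python
import os
from collections import OrderedDict


class PieceCache:
    """A tiny user-space LRU cache of fixed-size pieces read with pread()."""

    def __init__(self, fd, piece_size, max_pieces):
        self._fd = fd
        self._piece_size = piece_size
        self._max_pieces = max_pieces
        self._pieces = OrderedDict()  # piece index -> bytes, oldest first

    def get(self, index):
        if index in self._pieces:
            self._pieces.move_to_end(index)  # mark as most recently used
            return self._pieces[index]
        offset = index * self._piece_size
        data = os.pread(self._fd, self._piece_size, offset)
        if hasattr(os, "posix_fadvise"):
            # We now hold our own copy; hint that the kernel may drop its copy.
            os.posix_fadvise(self._fd, offset, self._piece_size,
                             os.POSIX_FADV_DONTNEED)
        self._pieces[index] = data
        if len(self._pieces) > self._max_pieces:
            self._pieces.popitem(last=False)  # evict the least recently used
        return data

    def cached_indices(self):
        return list(self._pieces)
```

The cache size is fully under the application's control here, which is the property being argued for; the cost is an extra copy of every piece that is read.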

So, if I had a chance, I would vote for at least a choice between two storage engines.

Agreed, the user space application is usually smarter at managing its cache than the kernel, and zero-copy mostly benefits high-performance I/O. There are also bt specific features such as "piece suggest", which is easier to implement with user space caching, and it's tricky to implement with mmap. So there is a PR to allow users to switch to the basic posix disk I/O engine, which is slower but also more conservative on memory/cache usage.

Are you saying the kernel is dumb, or are you speculating that it might be?

@arvidn Please, let me jump in here and add my 2 cents. I would argue that the kernel might be extremely smart but at the same time somewhat blind about the actual tradeoffs the user is willing to take.

It was a genuine question. Knowing how the kernel behaves is a step towards solving this problem. We can all speculate, but best is evidence, measurements or someone who actually knows what the kernel logic is.

At the same time, the server user will probably do the opposite. That is the conflict the kernel has little power to know about.

I'm not sure I agree with that conclusion. If you can't ssh into your server, because it's not responsive, it doesn't really matter what throughput it has.

The kernel doesn't know that the idle memory of the browser (which stayed 5 minutes minimized) is much more important for the user and shouldn't be paged off even if it will speed up the throughput of the libtorrent and the machine overall.

If the browser memory is anonymous (i.e. backed by the swap file) it could know. I would kind of be surprised if any kernel prioritized regular file I/O over swapping anonymous memory to disk. But it seems nobody here knows what or why the kernel behaves the way it does.

Moving forward I came across interesting reasoning SQLite provides about Memory-Mapped I/O. Here is the summary:

Yes, @ssiloti mentioned that kernels seem to have an especially hard time with flushing dirty pages, and using regular file I/O for that might make sense.

Coming back to the original quote, I would personally argue that, although the kernel is definitely not dumb, it doesn't have much of an advantage over a user-space implementation of the cache if the data is used by the app exclusively.

The question was whether @myali was speculating or whether they know that this is for sure the behavior of the kernel. I think you misunderstood the term "dumb" to generally discredit the linux kernel. It specifically meant whether the kernel is sophisticated "on telling which page more important when memory pressure comes".

It seems like you also speculate that it is dumb/not sophisticated when it comes to determining the importance of pages in the page cache.

At the same time, the user-space cache implementation could be much better tuned for specific app requirements.

You end up with the same data in two places, once in the page cache (because you just read it from disk or you just wrote it to disk) plus your user-level cache. There's no practical way to tell the operating system that your user-level page cache can be shrunk/reclaimed when there's memory pressure. I experimented with the macOS purgeable memory feature. It was impractical and specific to macOS. If another process reads the same file, the data will be duplicated in RAM as well, since the page cache will be populated with data you may already have in the user-level cache.

So, if I had a chance, I would vote for at least a choice between two storage engines.

Yes, I'm in favor of that too. I have a half-finished win32 overlapped I/O back-end I'm working on. It would seem reasonable to have a multi-threaded version of the posix-io backend too. Patches are welcome!

There are also bt specific features such as "piece suggest", which is easier to implement with user space caching, and it's tricky to implement with mmap.

You mean it's easier because with a user level cache you know exactly which pieces to suggest because you know exactly what's in the cache?

I wouldn't say it's so clear cut. Even with a user level cache, I don't think you want to pin pieces in cache just because you've sent a suggest message. The chances are that whatever you suggested has been evicted by the time the peer gets around to requesting it.

It is a bit more complicated to ask the kernel (and you need to make a system call), but you can, man page. However, the libtorrent implementation of sending suggest_piece messages doesn't care about the cache. It assumes that a piece we just downloaded will be available in the cache, and it will be reasonably rare and a good suggestion for other peers.

I could also use some help in identifying this problem through some metrics. I started measuring some memory counters in linux and Windows here. But I don't really have a way to reproduce or measure this problem.

I personally still stick with 1.x because the in-app cache can be disabled there completely. I use an SSD, and even a fully loaded 1 Gbps connection is an easy walk for it. I keep the OS cache option enabled though, but my kernel is tuned to restrict the amount of dirty pages to a very low value (128M).

It's been a month. Is there any progress on this issue? What needs to be done if not?

@Sin2x yes, have you tried libtorrent-2.0.6?

@arvidn Not yet, I've been checking this Github issue from time to time and remained on 1.2 meanwhile. I'll try the latest qBittorrent to see how it behaves. I'm on Windows, though, and they have implemented a 512MB usage limit there, so this probably is not a clean test. Looking through their issues list, it seems that on Linux the problem is unchanged: https://github.com/qbittorrent/qBittorrent/issues/16775

@arvidn I'm on 2.0.6 and this bug is still present. In the span of less than 3 hours, qBittorrent 4.4.2 using libtorrent 2.0.6 will consume all available system memory. Setting any of the limits in advanced settings doesn't seem to have any effect. When the container first starts, it jumps to about 1 gig of memory usage right away, and in less than 3 hours it will be consuming (on my system) about 80 gigs of RAM. This is on a TrueNAS server.

Seems to occur as soon as any file is being downloaded or seeded, and the RAM usage doesn't reduce once a file finishes being downloaded, even if removed.

Right now the only solution seems to be to reboot the system which flushes the ram and the process starts over.

@asdpyro I have 512GB of ram and do not have this issue. Have you tried upgrading the ram? I believe the minimum is 256gb if the machine is headless and 512gb if you need a GUI.

@Motophan

I've never seen any requirements for ANY service that would require 256GB of RAM, certainly not 512GB. The number of systems out there that can even support that on a non-commercial level are few and far between. The max my system can support is 128GB. Are you sure you're not thinking MB?

arvi knows what he is doing.

just because a file is pinned to memory in my ZFS ram cache doesn't mean anything. we need to make a one-to-one copy of that file again for this library. this is operating as designed. soon we will have the ability to have every single file you are seeding / downloading triplicated in ram. people are upset because it's bloat, but who's to say my download client should not take four virtual machines worth of memory? if the developer designed the library to be more resource intensive than multiple dedicated kernels and subsystems, then so be it, why question it?

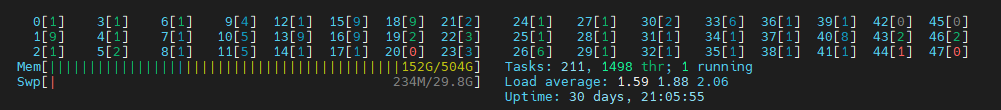

Here is my EXTREMELY modest system (only 24 cores and 512gb ram) and the load is completely manageable. The only thing running is nginx and qbittorrent.

Here is my EXTREMELY modest system (only 24 cores and 512gb ram) and the load is completely manageable. The only thing running is nginx and qbittorrent.

@Motophan I think your sarcasm might be lost on non-native English speakers, or people that assume good faith and pragmatic contributions to solve issues in this forum. Please help out rather than heckling from the sidelines.

@asdpyro

In the span of less than 3 hours, qBittorrent 4.4.2 using libtorrent 2.0.6 will consume all available system memory.

Right now the only solution seems to be to reboot the system which flushes the ram and the process starts over.

I assume it's technically the kernel using the memory, and I would expect:

echo 1 | sudo tee /proc/sys/vm/drop_caches

Would work to free up the RAM, instead of rebooting. You're not even supposed to have to restart the client.

I assume you also meant to say that your system performance was negatively affected by all your RAM being used as disk cache. Is that right? Without actually saying that, "linux ate my ram" is still a legitimate response.

Do you know if your page cache is mostly dirty pages or clean pages?

I would expect clean pages to have very little impact on the system, since they are cheap to drop.

The most recent attempt at keeping dirty pages in check was the updated semantics to the setting disk_io_write_mode when set to write_through (or disable_os_cache). With that setting, the kernel is asked to flush pieces to disk when they complete.

Could you check what settings you're using? And, if not, try disk_io_write_mode = write_through
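To make the "flush pieces when they complete" idea concrete, here is a small sketch of writing a piece into a shared mapping and synchronously flushing just that range. It uses Python's `mmap.flush()`, which on Unix is implemented with `msync()`. The function name is hypothetical, and this illustrates the concept only, not libtorrent's C++ implementation.

```python
import mmap


def write_piece_through(mapping, offset, data):
    """Copy a completed piece into the mapping, then flush only its pages."""
    mapping[offset:offset + len(data)] = data
    # flush() requires an offset aligned to the allocation granularity.
    granularity = mmap.ALLOCATIONGRANULARITY
    start = (offset // granularity) * granularity
    mapping.flush(start, offset + len(data) - start)
```

After the flush returns, the pages covering the piece should no longer be dirty, which is the effect described for write_through above.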

@arvidn @Motophan

The sarcasm was lost on me with the first post but the second was pretty obvious, you never know though, sometimes people are serious even when they make unbelievable claims.

I'll gladly do some digging into my system/setup. later today.

Could you provide me with the cmd for checking dirty/clean pages?
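On Linux, one way to get a rough answer is to read the `Cached`, `Dirty` and `Writeback` fields from `/proc/meminfo`. The sketch below parses them; the function names are made up for this example, and treating `Cached - Dirty - Writeback` as "clean" is an approximation, not an exact accounting.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of field name -> integer value."""
    fields = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            fields[key.strip()] = int(parts[0])  # values are in kB where a unit is given
    return fields


def page_cache_summary(fields):
    """Summarise cached, dirty and (approximately) clean page cache in kB."""
    cached = fields.get("Cached", 0)
    dirty = fields.get("Dirty", 0) + fields.get("Writeback", 0)
    return {"cached_kb": cached, "dirty_kb": dirty,
            "clean_kb": max(cached - dirty, 0)}


if __name__ == "__main__":
    with open("/proc/meminfo") as meminfo:
        print(page_cache_summary(parse_meminfo(meminfo.read())))
```

A large `dirty_kb` that keeps growing while downloading would point at the write-back problem discussed above; a large `clean_kb` is ordinary disk cache that the kernel can drop cheaply.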

Last night after my post I did some more experimenting and was able to get the usage to balloon 'slower' by reducing the number of allowed connections (though I'm not certain that's actually what did it, as I tweaked many settings). Now qBittorrent seems to only consume about 2 gigs per hour, but it still continues until the whole system's memory is consumed. Again, stopping/removing a torrent doesn't remedy it.

I'll try your drop caches suggestion tonight.

Now qBittorrent seems to only consume about 2 gigs per hour, but it still continues until the whole system's memory is consumed.

This is intended behavior, if you still don't get it. Memory-mapped files will use as much RAM as possible. But such memory is not really "used". It will be reclaimed for other processes when needed. At least it should, this behavior is controlled by OS kernel, not the program itself.

The problem also still exists on FreeBSD with libtorrent-rasterbar-2.0.6.27,1

It was recently upgraded again to 2.x and the memory problem came back again with this.

@yurivict when you say "came back", do you mean there was a regression in the libtorrent 2.0.x versions?

If so, do you know approximately which versions regressed?

And "the problem" refers to a lot of memory pages being used as disk cache, right? Do you know if those pages are dirty or not?

Do you set disk_io_write_mode to write_through? With that set, libtorrent will msync() pieces as they are downloaded, and the FreeBSD man page seems pretty clear about actually flushing pages to disk. So I would expect the pages not to be dirty.

when you say "came back", do you mean there was a regression in the libtorrent 2.0.x versions?

Yes.

If so, do you know approximately which versions regressed?

I don't know the exact version. Every time I tried to update the port to 2.x people complained. I also experienced problems personally.

And "the problem" refers to a lot of memory pages being used as disk cache, right?

I think so.

Do you know if those pages are dirty or not?

Not sure. What I just saw is qbittorrent being 1TB in size.

Do you set disk_io_write_mode to write_through? With that set, libtorrent will msync() pieces as they are downloaded, and the FreeBSD man page seems pretty clear about actually flushing pages to disk. So I would expect the pages not to be dirty.

I didn't try this.

@Sin2x yes, have you tried libtorrent-2.0.6?

So, for the past month of use on Windows I haven't seen any memory issues with qBittorrent 4.4.3.1+libtorrent 2.0.6 on my setup that were apparent before. So far so good.

@Sin2x yes, have you tried libtorrent-2.0.6?

So, for the past month of use on Windows I haven't seen any memory issues with qBittorrent 4.4.3.1+libtorrent 2.0.6 on my setup that were apparent before. So far so good.

Because Windows users have a "hack" that limits memory usage, unlike Unix users.

Do you set disk_io_write_mode to write_through? With that set, libtorrent will msync() pieces as they are downloaded, and the FreeBSD man page seems pretty clear about actually flushing pages to disk. So I would expect the pages not to be dirty.

I couldn't find how to change this config value in qBittorrent. And now I don't have 2.x handy any more.

Do you set disk_io_write_mode to write_through? With that set, libtorrent will msync() pieces as they are downloaded, and the FreeBSD man page seems pretty clear about actually flushing pages to disk. So I would expect the pages not to be dirty.

@arvidn Is this the correct setting? https://github.com/qbittorrent/qBittorrent/blob/master/src/gui/advancedsettings.cpp#L511

it looks like that gets translated into a libtorrent setting here: https://github.com/qbittorrent/qBittorrent/blob/master/src/base/bittorrent/session.cpp#L1615

and that it only supports disable_os_cache and enable_os_cache. It also sets the read mode and write mode to the same setting. the "write-through" option is a subset of disabling the cache entirely, so if your issue is that you have too many dirty pages, disabling the cache might help still.