OpenShift resources not owned by an Argo CD app become OutOfSync (requires pruning) in the Argo CD app

We are running Argo CD 1.7.8 on OpenShift, and Argo CD reports resources that it does not own as out of sync and marked for pruning.

Steps to reproduce

- Deploy a user-ca-bundle ConfigMap to the openshift-config namespace from Argo CD:

kind: ConfigMap
apiVersion: v1
metadata:
  name: user-ca-bundle
  namespace: openshift-config
data:

- OpenShift now creates another object based on this user-ca-bundle, and Argo CD marks that object for pruning. Under the hood, OpenShift appears to clone the user-ca-bundle object together with the Argo CD app label, so the clone becomes an (unwanted) part of the Argo CD app:

| KIND | NAME | NAMESPACE | CREATED_AT | STATUS |
|---|---|---|---|---|
| ConfigMap | openshift-user-ca | openshift-controller-manager | 03/16/2021 21:34:23 | OutOfSync (requires pruning) |

In my opinion, Argo CD should automatically ignore resources that don't come from its own application.

OpenShift has similar magic elsewhere, so I predict this will happen with other resources as well.

Hi @peterbosalliandercom, this is actually the intended behavior of Argo CD. When it reconciles resources into the cluster, it sets the app.kubernetes.io/instance label to the name of the Application that the resource belongs to. This label is how Argo CD knows that a resource belongs to a given application. If a resource whose label matches the application name is found on the cluster but not in the manifests, it is considered obsolete and marked for pruning.

The problem here is that a controller creates another resource and copies this label onto it, even though the label shouldn't be there.
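The label-based tracking rule described above can be sketched in Python. This is a simplified model, not Argo CD's Go implementation: resources are identified only by kind, namespace and name, and `my-app` is a hypothetical Application name.

```python
from dataclasses import dataclass, field

# Label that Argo CD sets to record which Application a resource belongs to.
INSTANCE_LABEL = "app.kubernetes.io/instance"


@dataclass
class Resource:
    kind: str
    namespace: str
    name: str
    labels: dict = field(default_factory=dict)

    def key(self):
        # Simplified identity; real objects are also keyed by API group.
        return (self.kind, self.namespace, self.name)


def prune_candidates(app_name, desired, live):
    """Live resources labeled for app_name that are absent from the manifests."""
    desired_keys = {r.key() for r in desired}
    return [
        r
        for r in live
        if r.labels.get(INSTANCE_LABEL) == app_name and r.key() not in desired_keys
    ]


# Hypothetical app "my-app": only user-ca-bundle is in the manifests.
desired = [Resource("ConfigMap", "openshift-config", "user-ca-bundle")]
live = [
    Resource("ConfigMap", "openshift-config", "user-ca-bundle",
             {INSTANCE_LABEL: "my-app"}),
    # Copy created by OpenShift, which also carries the app label.
    Resource("ConfigMap", "openshift-controller-manager", "openshift-user-ca",
             {INSTANCE_LABEL: "my-app"}),
]

print([r.name for r in prune_candidates("my-app", desired, live)])
# ['openshift-user-ca']
```

Because the copy carries the label but has no counterpart in the manifests, it is exactly the object the rule flags.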

You could try setting the IgnoreExtraneous compare option annotation on the user-ca-bundle ConfigMap, along with the Prune=false sync option annotation, as described in the Argo CD documentation on compare options and sync options. Prune=false alone might already be sufficient.

Since these annotations would probably be copied over to the openshift-user-ca ConfigMap, Argo CD would ignore that ConfigMap for the OutOfSync and pruning-required conditions. However, the annotations also affect the ConfigMap you created as part of the app, so it would not be pruned from the cluster if you deleted it from the manifests either.
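The effect of the two annotations on an extraneous resource can be sketched as follows. The annotation keys `argocd.argoproj.io/compare-options` and `argocd.argoproj.io/sync-options` are the ones Argo CD reads; treating their values as a comma-separated list, and the assumption that OpenShift copies them onto openshift-user-ca, are simplifications for illustration.

```python
# Annotation keys Argo CD reads for compare and sync options.
COMPARE_OPTIONS = "argocd.argoproj.io/compare-options"
SYNC_OPTIONS = "argocd.argoproj.io/sync-options"


def has_option(annotations, key, option):
    """True if `option` appears in the comma-separated value of `key`."""
    values = annotations.get(key, "")
    return option in [v.strip() for v in values.split(",")]


def extraneous_status(annotations):
    """For a resource that is live but absent from the manifests, return
    (makes_app_out_of_sync, will_be_pruned) under the assumed semantics."""
    ignore_extraneous = has_option(annotations, COMPARE_OPTIONS, "IgnoreExtraneous")
    no_prune = has_option(annotations, SYNC_OPTIONS, "Prune=false")
    return (not ignore_extraneous, not no_prune)


# Annotations set on user-ca-bundle in Git.
user_ca_bundle = {
    COMPARE_OPTIONS: "IgnoreExtraneous",
    SYNC_OPTIONS: "Prune=false",
}
# Assumption: OpenShift copies them onto openshift-user-ca along with the label.
openshift_user_ca = dict(user_ca_bundle)

print(extraneous_status({}))                 # (True, True)
print(extraneous_status(openshift_user_ca))  # (False, False)
# Caveat: user-ca-bundle carries the same annotations, so if it is later
# deleted from Git it would also be left on the cluster.
print(extraneous_status(user_ca_bundle))     # (False, False)
```

The last line is the trade-off mentioned above: the annotations cannot distinguish the original from its copy.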

Yes, we solved it by adding the resource to the Helm chart and ignoring its data like this:

ignoreDifferences:
- kind: ConfigMap
  name: openshift-user-ca
  namespace: openshift-controller-manager
  jsonPointers:
  - /data

Hopefully this helps other teams. We don't consider this a solution, just a workaround.
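The jsonPointers entries tell Argo CD which fields to disregard when comparing the desired and live objects. A minimal Python sketch of that idea, assuming RFC 6901 pointer syntax and plain equality after removal (this is not Argo CD's diff engine); the `ca-bundle.crt` key and its value are placeholders:

```python
import copy


def _unescape(token):
    # RFC 6901: decode "~1" to "/" first, then "~0" to "~".
    return token.replace("~1", "/").replace("~0", "~")


def remove_pointer(doc, pointer):
    """Delete the value at a JSON pointer such as "/data", if it exists."""
    if not pointer.startswith("/"):
        raise ValueError(f"not a JSON pointer: {pointer!r}")
    tokens = [_unescape(t) for t in pointer[1:].split("/")]
    node = doc
    for token in tokens[:-1]:
        if isinstance(node, dict) and token in node:
            node = node[token]
        elif isinstance(node, list) and token.isdigit() and int(token) < len(node):
            node = node[int(token)]
        else:
            return  # path not present: nothing to ignore
    last = tokens[-1]
    if isinstance(node, dict):
        node.pop(last, None)
    elif isinstance(node, list) and last.isdigit() and int(last) < len(node):
        del node[int(last)]


def differs(desired, live, json_pointers):
    """Compare two objects after dropping the ignored paths from copies of both."""
    a, b = copy.deepcopy(desired), copy.deepcopy(live)
    for pointer in json_pointers:
        remove_pointer(a, pointer)
        remove_pointer(b, pointer)
    return a != b


# The ConfigMap as declared in the chart (no data) vs. as filled in by OpenShift.
desired = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "openshift-user-ca",
                 "namespace": "openshift-controller-manager"},
}
live = copy.deepcopy(desired)
live["data"] = {"ca-bundle.crt": "<PEM bundle>"}

print(differs(desired, live, []))         # True
print(differs(desired, live, ["/data"]))  # False
```

Declaring the resource is what stops the pruning; ignoring /data only keeps the declared (empty) version from being reported as different from the live one.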

I reopened this issue because we have another case in OpenShift. We deploy a Pipeline object, and all PipelineRun objects that stem from it also get the label and thereby become prune candidates. I believe the label alone is not enough to identify an object as part of the Application. How do we cope with this situation?

Does the controller that creates those extraneous resources set ownerReferences on them?

I've used resource exclusions to prevent my TaskRuns/PipelineRuns from being pruned.

For example, in the argocd-cm ConfigMap:

data:
  resource.exclusions: |
    - apiGroups:
      - tekton.dev
      clusters:
      - '*'
      kinds:
      - TaskRun
      - PipelineRun

This will exclude all PipelineRuns and TaskRuns from Argo CD discovery.
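How an exclusion rule matches a resource can be sketched like this. It assumes glob-style patterns (approximated here with Python's `fnmatch`, which may differ from Argo CD's own glob matcher in edge cases) and assumes that an omitted list matches everything; the in-cluster URL is just an example value.

```python
from fnmatch import fnmatchcase


def _matches(patterns, value):
    # Assumption: a missing or empty list matches every value.
    return not patterns or any(fnmatchcase(value, p) for p in patterns)


def is_excluded(exclusions, api_group, kind, cluster_url):
    """True if any exclusion rule matches the group, kind and cluster."""
    return any(
        _matches(rule.get("apiGroups", []), api_group)
        and _matches(rule.get("kinds", []), kind)
        and _matches(rule.get("clusters", []), cluster_url)
        for rule in exclusions
    )


# The resource.exclusions entry above, as parsed YAML.
exclusions = [{
    "apiGroups": ["tekton.dev"],
    "clusters": ["*"],
    "kinds": ["TaskRun", "PipelineRun"],
}]
in_cluster = "https://kubernetes.default.svc"

print(is_excluded(exclusions, "tekton.dev", "PipelineRun", in_cluster))  # True
print(is_excluded(exclusions, "tekton.dev", "Pipeline", in_cluster))     # False
```

The Pipeline itself stays tracked; only the generated runs drop out of discovery, which also means Argo CD no longer shows them at all.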

Hi @jannfis, late answer, but anyway: there are no ownerReferences on the openshift-user-ca ConfigMap.

Thank you @peterbosalliandercom for your workaround.

I have the same problem. The workaround from @peterbosalliandercom does not work for me. I am using Argo CD 2.1 and have added the two annotations that @jannfis mentioned.

I have a similar issue with this object:

apiVersion: v1
data:
  bindPassword: XXXXXXXXXXXXXXXX
kind: Secret
metadata:
  annotations:
  labels:
    app.kubernetes.io/instance: XXXXXX
  name: v4-0-config-user-idp-0-bind-password
  namespace: openshift-authentication

I tried this:

ignoreDifferences:
- kind: Secret
  name: v4-0-config-user-idp-0-bind-password
  namespace: openshift-authentication
  jsonPointers:
  - /data
  - /metadata
  - /kind
  - /type
  - /apiVersion

This still leaves the resource OutOfSync, with a diff of just:

{}

It might have been a (not very good) workaround, but it does not work.

Regarding the Secrets and ConfigMaps in openshift-authentication that are generated automatically, adding the annotation "argocd.argoproj.io/compare-options: IgnoreExtraneous" only puts the Application in Healthy; the objects themselves remain OutOfSync (requires pruning).

A workaround that has worked for us is to declare the Secrets and ConfigMaps in the repo with only their name and namespace:

apiVersion: v1

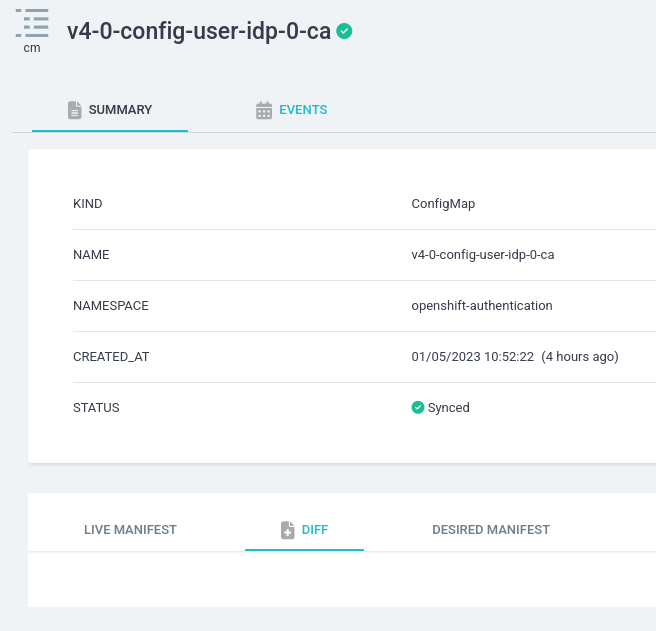

kind: ConfigMap

metadata:

name: v4-0-config-user-idp-0-ca

namespace: openshift-authentication

---

apiVersion: v1

kind: Secret

metadata:

name: v4-0-config-user-idp-0-bind-password

namespace: openshift-authentication

---

apiVersion: v1

kind: Secret

metadata:

name: v4-0-config-user-idp-1-file-data

namespace: openshift-authentication

Then configure the following at the Argo CD instance level:

resourceIgnoreDifferences:
  all:
    jsonPointers:
    - /data
    - /metadata
    managedFieldsManagers:
    - authentication-operator

With this, the Application and the objects are already green, but ideally the Argo CD instance would offer a parameter to ignore child objects that are not declared in the source, such as:

ignorePruneObjects:
  all:
    managedFieldManagers:
    - authentication-operator
    - cluster-openshift-controller-manager-operator
    - [...]
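Both the existing `managedFieldsManagers` setting and the proposed `ignorePruneObjects` rely on Kubernetes field ownership: each entry in `metadata.managedFields` names a manager and lists the fields it set, in `fieldsV1` format. The sketch below is an approximation, not Argo CD code: `strip_manager_fields` drops fields owned by the listed managers before diffing, and `skip_managed_prune_candidates` models the hypothetical `ignorePruneObjects` proposal. Only `f:` (map field) entries are decoded; list entries (`k:`, `v:`, `i:`) are not, so a field without nested `f:` entries is treated as owned as a whole.

```python
import copy


def owned_paths(fields_v1, prefix=()):
    """Yield field paths (tuples of keys) recorded under "f:" entries."""
    for key, sub in fields_v1.items():
        if not key.startswith("f:"):
            continue
        path = prefix + (key[2:],)
        if any(k.startswith("f:") for k in sub):
            yield from owned_paths(sub, path)
        else:
            # No nested "f:" entries: treat the whole field as owned.
            yield path


def _drop(obj, path):
    node = obj
    for key in path[:-1]:
        if not isinstance(node, dict) or key not in node:
            return
        node = node[key]
    if isinstance(node, dict):
        node.pop(path[-1], None)


def managed_fields(obj):
    return obj.get("metadata", {}).get("managedFields", [])


def strip_manager_fields(obj, managers):
    """Copy of obj without the fields owned by any of `managers`."""
    result = copy.deepcopy(obj)
    for entry in managed_fields(obj):
        if entry.get("manager") in managers:
            for path in owned_paths(entry.get("fieldsV1", {})):
                _drop(result, path)
    return result


def skip_managed_prune_candidates(candidates, managers):
    """Hypothetical ignorePruneObjects: keep only candidates that none of
    `managers` has written to."""
    return [
        c for c in candidates
        if not any(e.get("manager") in managers for e in managed_fields(c))
    ]


secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {
        "name": "v4-0-config-user-idp-0-bind-password",
        "namespace": "openshift-authentication",
        "managedFields": [{
            "manager": "authentication-operator",
            "operation": "Update",
            "fieldsV1": {"f:data": {".": {}, "f:bindPassword": {}}},
        }],
    },
    "data": {"bindPassword": "XXXXXXXXXXXXXXXX"},
}
operators = {"authentication-operator",
             "cluster-openshift-controller-manager-operator"}

print(strip_manager_fields(secret, operators)["data"])          # {}
print(len(skip_managed_prune_candidates([secret], operators)))  # 0
```

One design risk of the proposal: a manager-based filter would also protect any object that one of those operators has ever written to, including one that Argo CD should legitimately prune.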

The main reason Argo CD takes the v4-0-config-user-idp-0-ca ConfigMap into account is that the authentication operator creates this ConfigMap with the same metadata as the parent ConfigMap. The operator doesn't set ownerReferences on the resulting (v4-0-config-user-idp-0-ca) ConfigMap, so Argo CD considers it a standalone resource and tracks it as such.
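The role of ownerReferences described above can be sketched as follows, assuming (as @jannfis's question earlier in the thread implies) that Argo CD treats an object with ownerReferences as a child of its owner rather than as a standalone tracked resource. The app name `my-app`, the object `copy-with-owner`, and its owner `parent` are hypothetical, and real ownerReferences also carry `apiVersion` and `uid`.

```python
INSTANCE_LABEL = "app.kubernetes.io/instance"


def is_top_level(obj):
    # Assumption: objects with ownerReferences are shown as children of
    # their owner rather than tracked on their own.
    return not obj.get("metadata", {}).get("ownerReferences")


def standalone_tracked(app_name, live_objects):
    """Names of labeled objects that would be tracked as standalone resources."""
    return [
        o["metadata"]["name"]
        for o in live_objects
        if o["metadata"].get("labels", {}).get(INSTANCE_LABEL) == app_name
        and is_top_level(o)
    ]


labels = {INSTANCE_LABEL: "my-app"}  # "my-app" is a hypothetical app name
live = [
    # What the authentication operator creates today: label copied, no owner.
    {"metadata": {"name": "v4-0-config-user-idp-0-ca", "labels": labels}},
    # Hypothetical copy that does point back at an owner.
    {"metadata": {"name": "copy-with-owner", "labels": labels,
                  "ownerReferences": [{"kind": "ConfigMap", "name": "parent"}]}},
]

print(standalone_tracked("my-app", live))  # ['v4-0-config-user-idp-0-ca']
```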

@mparram We also created blank ConfigMaps and set ignoreDifferences for v4-0-config-user-idp-0-ca, and everything was fine until yesterday, when we found that the operator had restarted the oauth pods in the openshift-authentication namespace. After investigating, we found that if someone, or Argo CD itself, performs a full application sync (all resources), the resourceVersion of the v4-0-config-user-idp-0-ca ConfigMap changes. The authentication operator treats this as a change to the whole manifest and restarts the oauth pods. So be careful with this workaround.