archinstall

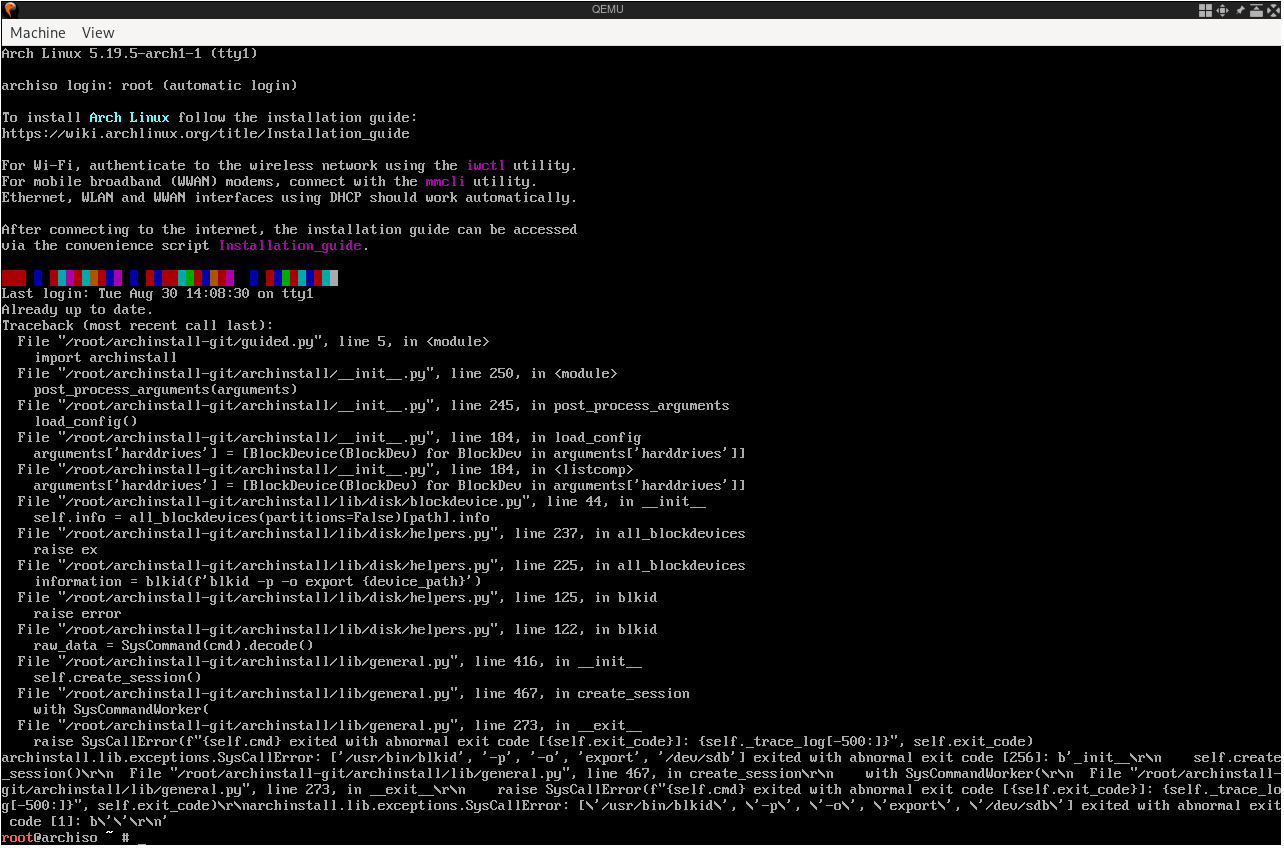

When booting from `Ventoy`, the first run of `archinstall --config` fails with `archinstall.lib.exceptions.SysCallError`.

archinstall version: 2.4.2

Ventoy version: 1.0.74

Command:

`archinstall --config user_configuration.json --creds user_credentials.json --disk_layouts user_disk_layout.json`

Log:

```
Traceback (most recent call last):
  File "/usr/bin/archinstall", line 5, in <module>
    from archinstall import run_as_a_module
  File "/usr/lib/python3.10/site-packages/archinstall/__init__.py", line 248, in <module>
    post_process_arguments(arguments)
  File "/usr/lib/python3.10/site-packages/archinstall/__init__.py", line 243, in post_process_arguments
    load_config()
  File "/usr/lib/python3.10/site-packages/archinstall/__init__.py", line 187, in load_config
    arguments['harddrives'] = [BlockDevice(BlockDev) for BlockDev in arguments['harddrives']]
  File "/usr/lib/python3.10/site-packages/archinstall/__init__.py", line 187, in <listcomp>
    arguments['harddrives'] = [BlockDevice(BlockDev) for BlockDev in arguments['harddrives']]
  File "/usr/lib/python3.10/site-packages/archinstall/lib/disk/blockdevice.py", line 23, in __init__
    info = all_blockdevices(partitions=False)[path].info
  File "/usr/lib/python3.10/site-packages/archinstall/lib/disk/helpers.py", line 235, in all_blockdevices
    raise ex
  File "/usr/lib/python3.10/site-packages/archinstall/lib/disk/helpers.py", line 223, in all_blockdevices
    information = blkid(f'blkid -p -o export {device_path}')
  File "/usr/lib/python3.10/site-packages/archinstall/lib/disk/helpers.py", line 123, in blkid
    raise error
  File "/usr/lib/python3.10/site-packages/archinstall/lib/disk/helpers.py", line 120, in blkid
    raw_data = SysCommand(cmd).decode()
  File "/usr/lib/python3.10/site-packages/archinstall/lib/general.py", line 409, in __init__
    self.create_session()
  File "/usr/lib/python3.10/site-packages/archinstall/lib/general.py", line 460, in create_session
    with SysCommandWorker(self.cmd, callbacks=self._callbacks, peak_output=self.peak_output, environment_vars=self.environment_vars, remove_vt100_escape_codes_from_lines=self.remove_vt100_escape_codes_from_lines) as session:
  File "/usr/lib/python3.10/site-packages/archinstall/lib/general.py", line 268, in __exit__
    raise SysCallError(f"{self.cmd} exited with abnormal exit code [{self.exit_code}]: {self._trace_log[-500:]}", self.exit_code)
archinstall.lib.exceptions.SysCallError: ['/usr/bin/blkid', '-p', '-o', 'export', '/dev/sdd2'] exited with abnormal exit code [256]: b'tput, environment_vars=self.environment_vars, remove_vt100_escape_codes_from_lines=self.remove_vt100_escape_codes_from_lines) as session:\r\n File "/usr/lib/python3.10/site-packages/archinstall/lib/general.py", line 268, in __exit__\r\n raise SysCallError(f"{self.cmd} exited with abnormal exit code [{self.exit_code}]: {self._trace_log[-500:]}", self.exit_code)\r\narchinstall.lib.exceptions.SysCallError: [\'/usr/bin/blkid\', \'-p\', \'-o\', \'export\', \'/dev/sdd2\'] exited with abnormal exit code [1]: b\'\'\r\n'
```

`/dev/sdd2` is Ventoy's EFI partition.

The error occurs only the first time the command above is run.

After further testing, I found that both `--config` and `--disk_layouts` trigger this problem when a valid configuration file is passed. After one successful run without a config file, passing these two parameters again no longer causes the problem.

Heh, that's a funny error. I don't know how two stack traces ended up inside each other, but somehow they did. The underlying error is:

`['/usr/bin/blkid', '-p', '-o', 'export', '/dev/sdd2'] exited with abnormal exit code [1]: b''`

Raised inside `/usr/lib/python3.10/site-packages/archinstall/lib/disk/helpers.py`, line 223, in `all_blockdevices`.

I'll look into it.
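The call that fails in the traceback is `blkid -p -o export <device>`, which prints one `KEY=VALUE` pair per line. A minimal sketch of running it with `subprocess` and parsing that output, assuming the caller would rather get "no information" than an exception when `blkid` exits non-zero. The helper names are hypothetical; this is not archinstall's own `blkid()` helper.

```python
import subprocess
from typing import Dict, Optional


def parse_blkid_export(text: str) -> Dict[str, str]:
    """Parse `blkid -o export` output (one KEY=VALUE pair per line) into a dict."""
    info: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or "=" not in line:
            continue  # skip blank lines (device separators) and malformed lines
        key, _, value = line.partition("=")
        info[key] = value  # values are kept exactly as blkid printed them
    return info


def probe_device(device: str) -> Optional[Dict[str, str]]:
    """Low-level probe of one device; returns None instead of raising on failure.

    Hypothetical helper: it only illustrates the command seen in the traceback.
    """
    result = subprocess.run(
        ["blkid", "-p", "-o", "export", device],
        capture_output=True,
        text=True,
        check=False,  # inspect returncode ourselves instead of raising
    )
    if result.returncode != 0:
        return None
    return parse_blkid_export(result.stdout)
```

With this shape, `probe_device("/dev/sdd2")` would return `None` rather than raising if `blkid` exits non-zero, as it did in the log. Whether silently skipping a device is acceptable is a design decision for the caller.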

I'm really curious whether this same error occurs without Ventoy. I'm not sure how Ventoy works, but I imagine it mounts the install media as some sort of virtual device and boots from it. Perhaps operations on this media don't behave as expected. I don't want to say we don't support Ventoy, but we might have to figure out how it works and whether there is a specific reason this boot method fails.

At this point, all I can say for sure is that the error must be related to Ventoy's EFI partition (I've tested this more than five times and got the same result each time). I don't know of any other software similar to Ventoy, so I can't test further.

I'm able to reproduce this as well. I believe it's related to how Ventoy mounts ISOs. Ideally, the ISO should load a special initramfs that does some dmsetup magic to make the ISO behave properly. Arch doesn't support this currently, and there's an open issue about it: https://gitlab.archlinux.org/mkinitcpio/mkinitcpio-archiso/-/issues/7. If someone is willing to play with `/dev/mem` or EFI vars, it should be possible to detect that we are running on a Ventoy-booted system and work around the issue.

For more information on detecting whether we are running on a Ventoy-booted system: https://www.ventoy.net/en/doc_compatible_format.html

https://www.ventoy.net/en/doc_compatible_pass.html
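The EFI-vars route mentioned above could start from the standard efivarfs directory, where each UEFI variable is a file named `<VariableName>-<VendorGUID>`. A minimal sketch, assuming a UEFI boot: it only lists variables by name prefix. Which variable (if any) Ventoy actually sets is deliberately not hard-coded, since that must come from the Ventoy documentation; on a legacy BIOS boot the directory does not exist and the `/dev/mem` route would be needed instead. Function names are hypothetical.

```python
from pathlib import Path
from typing import List

# Standard efivarfs mount point on Linux; files are named "<Name>-<VendorGUID>".
EFIVARS_DIR = Path("/sys/firmware/efi/efivars")


def find_efi_variables(prefix: str, efivars_dir: Path = EFIVARS_DIR) -> List[str]:
    """Return the sorted names of EFI variables whose name starts with `prefix`.

    Returns an empty list when the directory is missing (e.g. a BIOS boot).
    """
    if not efivars_dir.is_dir():
        return []
    return sorted(p.name for p in efivars_dir.iterdir() if p.name.startswith(prefix))


def booted_with_marker(marker_prefix: str, efivars_dir: Path = EFIVARS_DIR) -> bool:
    """True if any EFI variable starting with `marker_prefix` exists.

    ASSUMPTION: the marker name to look for must be taken from the Ventoy docs;
    this helper does not know which variable Ventoy sets.
    """
    return bool(find_efi_variables(marker_prefix, efivars_dir))
```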

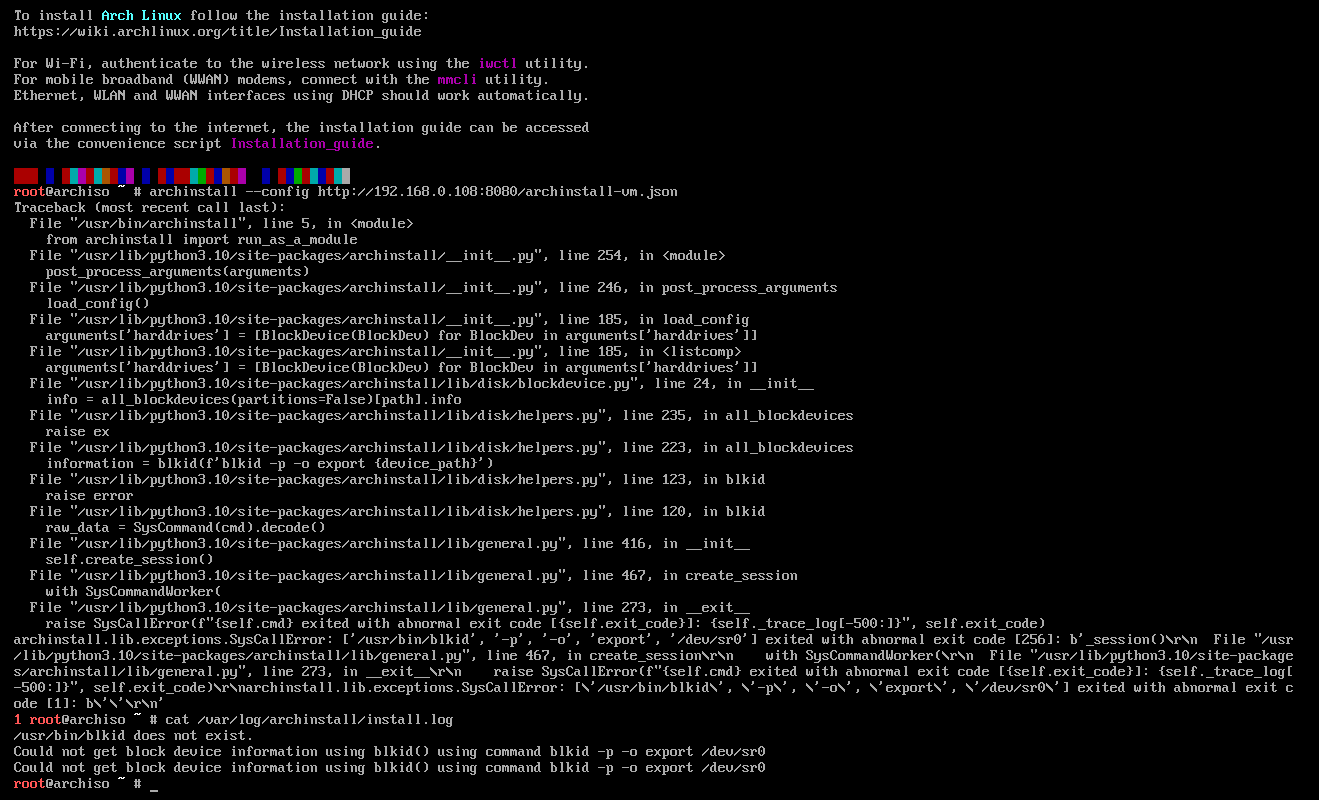

I also got this error, only on the first run of the command, but I'm not using Ventoy: I'm running the Arch Linux live ISO in VirtualBox.

Command: `archinstall --config user_configuration.json --creds user_credentials.json --disk_layouts user_disk_layout.json [--silent]`

Sorry for the late answer, but if you still have it, could you post your `/var/log/archinstall/install.log`?

I've noticed a similar issue, though not a `SysCallError`: without `--silent`, it doesn't load the disks properly.

So this happened on a freshly built ISO (not Ventoy):

I'm investigating. It feels like a weird one hehe.

It's really hard to reproduce, because it happens on far from every try. I'll keep rebooting the ISO with new blank disks a few more times. I've added some debug information, and the issue is most likely related to this block: https://github.com/archlinux/archinstall/blob/ee64276f0e3651367de51e1423f3767dde1be525/archinstall/lib/disk/helpers.py#L224-L237

That block nests exceptions and handles them poorly while trying alternative information-gathering methods, in an attempt to collect as much disk information as possible rather than none.
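One way to try several information-gathering methods without nesting exceptions is to record each failure as a flat message and move on, only giving up after every method has failed. A minimal sketch of that pattern; it is not the code in the linked block, and the probe names and callables are hypothetical stand-ins (for example a `blkid -p` call and an `lsblk` call).

```python
from typing import Callable, Dict, List, Optional, Tuple

# A probe takes a device path and returns a dict of properties, or raises.
Probe = Callable[[str], Dict[str, str]]


def gather_device_info(
    device: str, probes: List[Tuple[str, Probe]]
) -> Tuple[Optional[Dict[str, str]], List[str]]:
    """Try each probe in order; return the first success plus a log of failures.

    Failures are stored as plain messages instead of being re-raised from inside
    an `except` block, so a failing probe cannot produce chained tracebacks.
    """
    errors: List[str] = []
    for name, probe in probes:
        try:
            return probe(device), errors
        except Exception as exc:  # deliberately broad: any failure means "try the next method"
            errors.append(f"{name} failed for {device}: {exc}")
    return None, errors
```

The caller then decides what an empty result means (skip the device, warn, or abort) and has the full list of attempted methods for the log.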

I haven't been able to trigger this in the last 50 reinstalls, using the same `--conf` https://hvornum.se/laptop_conf.json and `--disk-layout` https://hvornum.se/disk_layout.json to reinstall automatically.

I'll keep this issue open, but don't expect it to be solved quickly. Since there's no way to force-trigger this issue in a controlled environment, it's really hard to pinpoint. But the bug information is here now, so we'll have to hope that someone (or we) hits it again and gathers more info :)
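Because the reports say only the first run after boot fails, a reproduction attempt should keep exit codes in order rather than just counting failures. A minimal sketch, assuming it is run right after booting the ISO, before archinstall itself; the helper name is hypothetical. Note that archinstall runs `blkid` through its own `SysCommand` wrapper, so calling `blkid` directly like this may not reproduce the exact conditions.

```python
import subprocess
from typing import List, Sequence


def collect_exit_codes(cmd: Sequence[str], runs: int) -> List[int]:
    """Run `cmd` `runs` times in a row and return each exit code, in order.

    Order matters here: index 0 is the "first run" the reports point at.
    """
    codes: List[int] = []
    for _ in range(runs):
        # Output is captured and discarded; only the exit status is recorded.
        result = subprocess.run(list(cmd), capture_output=True, check=False)
        codes.append(result.returncode)
    return codes
```

For example, `collect_exit_codes(["blkid", "-p", "-o", "export", "/dev/sdd2"], 5)`: a non-zero first entry followed by zeros would match the reported first-run-only pattern.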

While debugging, I happened upon this:

For me, this error always happens (on the first run) when I'm using a QEMU VM:

My profile file:

```json
{
    "bootloader": "systemd-bootctl",
    "hostname": "vmanderson",
    "kernels": [
        "linux"
    ],
    "keyboard-layout": "br-abnt",
    "mirror-region": {
        "Brazil": {
            "https://archlinux-br.com.br/archlinux/$repo/os/$arch": true
        }
    },
    "nic": {
        "type": "nm"
    },
    "ntp": true,
    "audio": "pulseaudio",
    "profile": null,
    "script": "guided",
    "swap": true,
    "sys-encoding": "utf-8",
    "sys-language": "en_US",
    "timezone": "America/Sao_Paulo",
    "!users": [
        {
            "username": "anderson",
            "!password": "changeit",
            "sudo": true
        }
    ],
    "packages": [
        "awesome",
        "alacritty",
        "xorg",
        "xorg-server",
        "xorg-server-xephyr",
        "xorg-xclipboard",
        "xorg-xev",
        "xorg-xset",
        "xprintidle",
        "xscreensaver",
        "xsel",
        "xss-lock",
        "zsh",
        "lightdm"
    ],
    "harddrives": [
        "/dev/sda"
    ]
}
```

It started happening after I defined the `harddrives` property.
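The traceback hints at why `harddrives` matters: in `load_config()`, each entry is turned into a `BlockDevice`, whose constructor calls `all_blockdevices(partitions=False)`, which appears to run `blkid` on every device it finds (`/dev/sdd2` in the Ventoy case). A pre-flight check like this sketch (a hypothetical helper, not part of archinstall) confirms that the listed paths are real block devices. It does not avoid the probing failure itself, since that happens on devices that are not listed.

```python
import json
import os
import stat
from typing import List


def invalid_harddrives(config_path: str) -> List[str]:
    """Return the entries of the config's "harddrives" list that are not block devices."""
    with open(config_path, encoding="utf-8") as fh:
        config = json.load(fh)
    invalid: List[str] = []
    for path in config.get("harddrives", []):
        try:
            mode = os.stat(path).st_mode
        except OSError:
            invalid.append(path)  # missing, or cannot be stat'ed
            continue
        if not stat.S_ISBLK(mode):
            invalid.append(path)  # exists, but is not a block device (e.g. a regular file)
    return invalid
```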

Maybe it's related to my VM config, I'm not sure. Here it is:

```shell
qemu-img create -f qcow2 vm/storage.qcow2 4G

qemu-system-x86_64 \
    -cdrom /home/anderson/Downloads/archlinux-2022.07.01-x86_64.iso \
    -cpu qemu64-v1 \
    -enable-kvm \
    -m 2048 \
    -smp 2 \
    -drive file=vm/storage.qcow2,format=qcow2 \
    -qmp tcp:127.0.0.1:4055,server,wait=off
```

Thanks, great info! I'll have to organize and see what we can do here and now :)

There have been a lot of updates since, and this may be resolved by now.