ml-stable-diffusion

ml-stable-diffusion copied to clipboard

ml-stable-diffusion copied to clipboard

Poor Image Generation

When I run this, none of my test prompts come out looking anything at all like the demo images, they look terrible. Any ideas what might be going wrong? I may just have the wrong expectation.

I'm running Stable Diffusion 2 Base on a MacMini M1 16GB. Python 3.10.0

Compiled:

python -m python_coreml_stable_diffusion.torch2coreml --convert-unet --convert-text-encoder --convert-vae-decoder --convert-safety-checker -o models --model-version 'stabilityai/stable-diffusion-2-base'

python command:

python -m python_coreml_stable_diffusion.pipeline --prompt "a photo of an astronaut riding a horse on mars" --compute-unit ALL -o output -i models --model-version 'stabilityai/stable-diffusion-2-base'

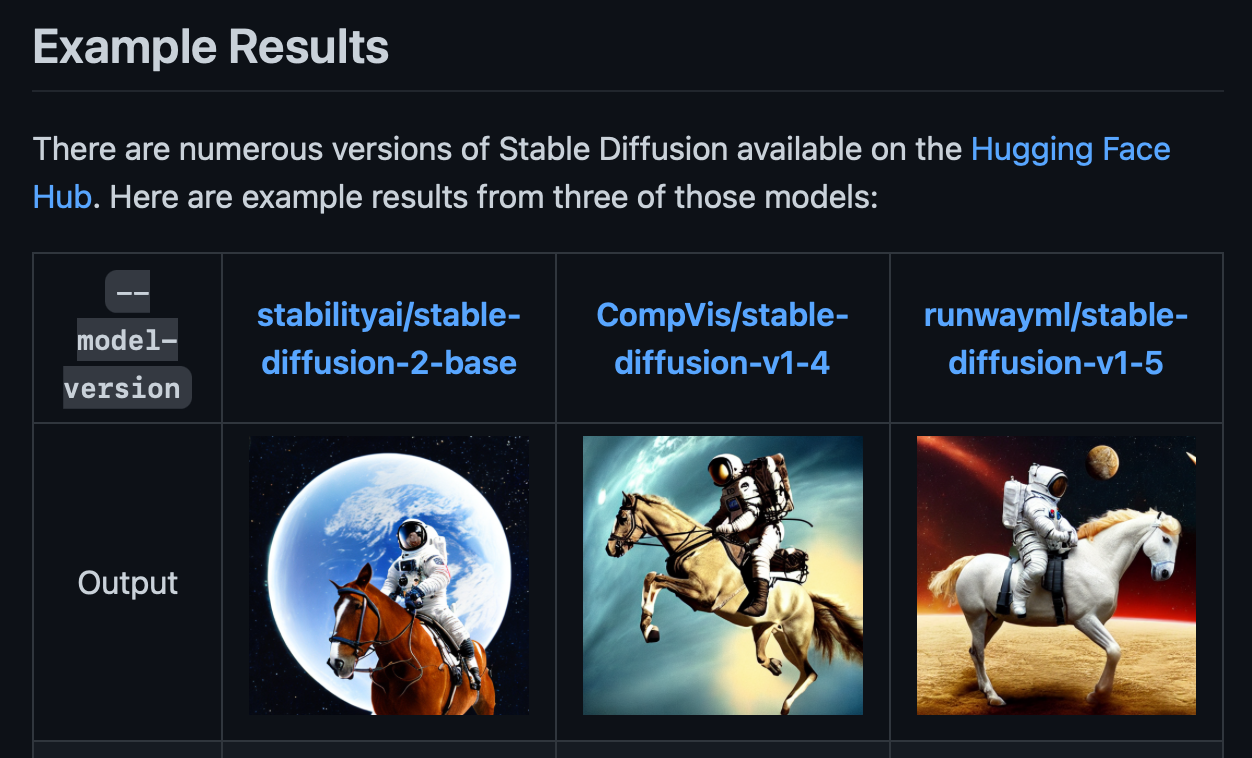

I realize now that I had been completely off with my expectations based on the install readme. I thought the demo prompt was meant to produce an image similar to that of the Example Results section. It seems those images are created with more sophisticated prompting and assumed that others will just know that. I was using those Example Results as a gauge to to know if I got my local setup correctly.

It might be helpful to others working through the initial setup, to clarify that those are not the initial results you will get with a simple prompt of:

"a photo of an astronaut riding a horse on mars" and a default seed 93.

I guess the issue here is the absence of a way to append negative prompts to the input prompt. The v2 model in particular is known to be almost garbage without negative prompts. It seems there are some related PRs though. With v1-5 you should get nicer outputs for the time being.

Well it definitely isn't "bad" now that I learned to change my expectation when using the prompt "a photo of an astronaut riding a horse on mars".

I generated these images using a longer description, and they show promise. I think the issue now might be that it still outputs to 512x512. I could have this totally wrong, but I think I read that SD 2 outputs to 768x768?

I could have this totally wrong, but I think I read that SD 2 outputs to 768x768?

As noted elsewhere https://github.com/apple/ml-stable-diffusion/issues/81#issuecomment-1364509035, the base model generates 512x512 images, whereas the "full" model generates 768x768. The current conversion code seems to be a bit limited and only supports a subset of what the original diffusers library can do. Another limitation is input shapes / image dimensions, as noted in https://github.com/apple/ml-stable-diffusion/issues/64.

Lower res images could be upscaled, e.g. with https://huggingface.co/stabilityai/stable-diffusion-x4-upscaler, however that particular model cannot be converted right now since the converter code is incomplete (https://github.com/apple/ml-stable-diffusion/blob/main/python_coreml_stable_diffusion/unet.py#L822-L829).