Updatable PyTorch model

Hello, I am trying to convert an off-the-shelf PyTorch model to be updatable. The model I am using with CoreML doesn't have a softmax layer at the end because that's handled by the loss function. However, setting the cross-entropy loss with the coremltools builder seems to require a softmax output. I tried adding one like this:

    import torch
    import torchvision

    # Load a pre-trained version of the MobileNetV2 model.
    torch_model = torchvision.models.mobilenet_v2(weights=torchvision.models.MobileNet_V2_Weights.DEFAULT)

    # Append a softmax over the class dimension so the model outputs probabilities.
    torch_model = torch.nn.Sequential(
        torch_model,
        torch.nn.Softmax(dim=1)
    )
    torch_model.eval()

but it gets converted as a softmax_nd instead of a normal softmax, which the updatable script doesn't seem to like. I'm not sure how to make the PyTorch layer convert to a normal softmax.

What would be the appropriate way to set this model to be updatable?

Thank you.

Can you give us more details about what you are trying to do here? What script are you referring to?

Hey, sorry, the script I was referring to is your Neural Network updatable example.

https://coremltools.readme.io/docs/updatable-neural-network-classifier-on-mnist-dataset

Basically, I want to have a MobileNet-based PyTorch model converted to CoreML where the last layer is updatable. Since the documentation shows a TensorFlow example, I wasn't able to do it for my model, for the reason described in the original post. Does that make sense? Let me know if I can provide further clarification.

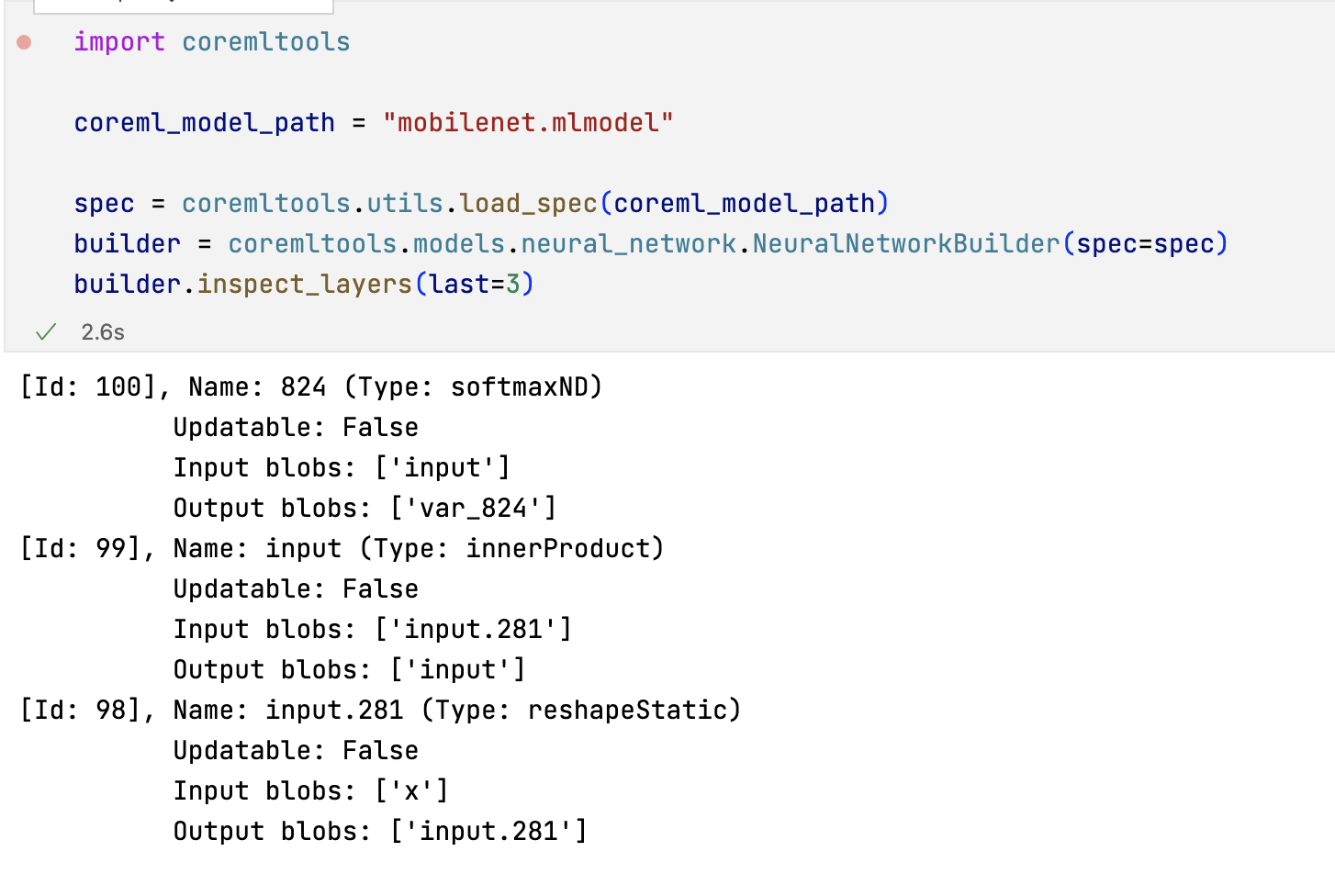

These are the last three layers of my model. As you can see, adding the softmax results in a layer of type softmax-nd.

Then, when calling builder.set_categorical_cross_entropy_loss, I get the following error:

ValueError: Categorical Cross Entropy loss layer input (var_824) must be a softmax layer output.
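
For context, the update-configuration step in the linked MNIST example boils down to the call sequence below. This is a minimal sketch, not the exact script: the helper name configure_update and its parameters are hypothetical, and the layer and blob names must match your own model. The builder methods it calls (make_updatable, set_categorical_cross_entropy_loss, set_sgd_optimizer, set_epochs) are the ones that example uses.

```python
def configure_update(builder, updatable_layers, softmax_output, sgd_params, epochs=10):
    """Mark layers as updatable and attach a loss, an optimizer and an epoch count.

    `builder` is expected to be a coremltools NeuralNetworkBuilder created from
    an existing spec. The helper name and signature are hypothetical.
    """
    # Only the layers listed here will be retrained on device.
    builder.make_updatable(updatable_layers)
    # This is the call that raises the ValueError quoted above when
    # `softmax_output` is not produced by a classic softmax layer.
    builder.set_categorical_cross_entropy_loss(name="lossLayer", input=softmax_output)
    builder.set_sgd_optimizer(sgd_params)
    builder.set_epochs(epochs)
    return builder


# Assumed usage (the layer name "fc" is a placeholder, not taken from this model):
#   import coremltools as ct
#   from coremltools.models.neural_network import SgdParams
#   builder = ct.models.neural_network.NeuralNetworkBuilder(spec=model.get_spec())
#   configure_update(builder, ["fc"], "var_824", SgdParams(lr=0.01, batch=32))
```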

It seems like it should be possible to do this with a softmax-nd layer instead of a softmax layer. You could try just removing the code that raises that exception and see if it works or if you get a new error.

Another option is to try modifying the proto of your model. See if your SoftmaxNDLayerParams could be converted to a SoftmaxLayerParams. You could get the axis from the SoftmaxNDLayerParams, then slice just that axis and feed that to a softmax.

Thanks for following up!

Turning off the exceptions works but then I get this warning:

python3.10/site-packages/coremltools/models/model.py:145: RuntimeWarning: You will not be able to run predict() on this Core ML model. Underlying exception message was: Error compiling model: "Error reading protobuf spec. validator error: For the categorical cross entropy loss layer named 'lossLayer', input is not generated from a softmax output.".

I will try what you suggested next, although I'm not sure I understand what you mean. If possible, could you provide some sample code for converting a softmaxND into a softmax? I'm also confused about why the normal softmax's dimension is hardcoded to -3.

Hey @TobyRoseman, sorry to bother you again. Would you mind giving some more guidance? Maybe I'm overlooking some part of the docs, but I'm not clear on how the SoftmaxND -> Softmax conversion needs to happen. Thanks a bunch in advance.

You will need to manually edit the spec (i.e. the protobuf) for your model. Here is what I would try.

First, get the axis value for your SoftmaxND layer. Then change that SoftmaxND layer to just a Softmax. Then add a TransposeLayerParams on either side of that layer. The first transpose layer would change it so axis is now in the -3 position. The second transpose layer would change the axis ordering back.
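
The first two steps might look roughly like the sketch below. It only reads the axis and switches the layer's type in the protobuf; it does not add the transpose layers, and whether the result passes Core ML validation is not verified here. The function names and file paths are hypothetical. The sketch assumes the layer type lives in a protobuf oneof named layer with members softmaxND and softmax, and it selects the empty softmax message with MergeFromString(b""), which is an assumption about how an empty oneof member can be set.

```python
def swap_softmax_nd_for_softmax(layer):
    """Turn a SoftmaxND layer message into a classic Softmax layer, in place.

    Returns the axis the SoftmaxND layer was normalizing over, so the caller
    can work out which transposes are still needed around the new layer.
    """
    if layer.WhichOneof("layer") != "softmaxND":
        raise ValueError(f"layer {layer.name!r} is not a softmaxND layer")
    axis = layer.softmaxND.axis
    # Selecting another member of the `layer` oneof clears softmaxND.
    # The classic softmax parameters carry no fields, so an empty merge suffices.
    layer.softmax.MergeFromString(b"")
    return axis


def patch_model(mlmodel_path, patched_path):
    """Hypothetical driver: load a spec, patch its last layer, save the result."""
    import coremltools as ct  # imported lazily; only this driver needs it

    spec = ct.utils.load_spec(mlmodel_path)
    last_layer = spec.neuralNetworkClassifier.layers[-1]
    axis = swap_softmax_nd_for_softmax(last_layer)
    ct.utils.save_spec(spec, patched_path)
    return axis
```

The returned axis is what determines the permutation for the two transpose layers described above.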

Thanks for bearing with me here. For more context, when converting I'm printing the softmax op and the rank of its input:

op: %var_824: (1, 1000, fp32)(Tensor) = softmax(x=%input, axis=1, name="824")

rank: 2

(This is basically the logits of the 1000 classes of mobilenet)

Later I can do this to get the axis as you're saying:

    spec = model.get_spec()
    spec.neuralNetworkClassifier.layers[-1]

which yields

    name: "824"
    input: "input"
    output: "var_824"
    softmaxND {
      axis: 1
    }

Is this what you meant? Given my input tensor has two dimensions (and the softmax operates on the second one), do I need to transpose by adding an additional two dimensions so that -3 would equal 1?
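
To make the axis arithmetic in this question concrete: a negative axis counts from the end, so -3 only exists for tensors of rank 3 or higher. The sketch below is plain Python with hypothetical helper names. It shows that after appending two trailing singleton dimensions to the (1, 1000) logits, axis -3 and axis 1 refer to the same dimension. It says nothing about which Core ML layers would be needed to perform that reshape.

```python
def normalize_axis(axis, rank):
    """Convert a possibly negative axis into its non-negative equivalent."""
    if not -rank <= axis < rank:
        raise ValueError(f"axis {axis} is out of range for rank {rank}")
    return axis % rank


def shape_for_channel_softmax(shape, axis):
    """Append two trailing singleton dims so that `axis` ends up at position -3.

    Only covers a softmax over the last axis of the input, as with the
    (1, 1000) logits discussed here.
    """
    axis = normalize_axis(axis, len(shape))
    if axis != len(shape) - 1:
        raise ValueError("this sketch only covers a softmax over the last axis")
    return tuple(shape) + (1, 1)


new_shape = shape_for_channel_softmax((1, 1000), axis=1)
print(new_shape)                           # (1, 1000, 1, 1)
print(normalize_axis(-3, len(new_shape)))  # 1
```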

As for changing the layer type and adding the additional layers, what is the programmatic way to do that? Do I need to use the NeuralNetworkBuilder or something like that? Would appreciate any pointers or pseudocode here!

PS: seems like my issue is the same as this one https://github.com/apple/coremltools/issues/1417

You could potentially use the NeuralNetworkBuilder. I would probably just edit the protobuf directly.

I wasn't sure how to edit the protobuf from the CoreML side so I decided to try and tweak the PyTorch model. I ended up doing this:

    import torch
    import torchvision
    from einops.layers.torch import Rearrange

    torch_model = torchvision.models.mobilenet_v2()
    print(torch_model.classifier[1].weight.shape)

    torch_model = torch.nn.Sequential(
        torch_model,
        # Add two trailing singleton dims so the class axis sits at position -3.
        Rearrange('c f -> c f () ()'),
        torch.nn.Softmax(dim=-3),
        # Drop the singleton dims again to restore the original rank-2 output.
        Rearrange('c f () () -> c f')
    )

Now when I convert, I get a softmax layer instead of softmaxND, so I thought I had finally cracked it. However, the conversion ended up returning this warning message:

RuntimeWarning: You will not be able to run predict() on this Core ML model. Underlying exception message was: Error compiling model: "Error reading protobuf spec. validator error: There is a layer (input), which does not support backpropagation, between an updatable marked layer and the loss function.".

_warnings.warn(

I suppose this is because I have this layer between the innerProduct and the softmax. Do you think there's a workaround for this, or is my solution with PyTorch no good?

    [Id: 100], Name: input (Type: reshapeStatic)
        Updatable: False
        Input blobs: ['x.3']
        Output blobs: ['input']

Hey @TobyRoseman. I've decided to try out the TensorFlow example from the documentation (https://coremltools.readme.io/docs/updatable-neural-network-classifier-on-mnist-dataset), and it turns out the exact same problem occurs in the given example. The softmax has an input tensor of rank 2 and it converts to softmaxND. You should be able to reproduce this by just running the code as is.

Was this perhaps a recent change in coremltools? Wondering if I'm missing something here.

Any updates on this?

Regarding the documentation on https://coremltools.readme.io/docs/updatable-neural-network-classifier-on-mnist-dataset

Using from coremltools.converters import keras as keras_converter produces ImportError: cannot import name 'keras' from 'coremltools.converters'. Is this example out of date?

Trying to fix the example from the documentation unfortunately produces an ML model that is invalid and can't be trained due to: There is a layer (transpose_5), which does not support backpropagation, between an updatable marked layer and the loss function.

Is there a known mitigation or workaround for this? I tried to convert multiple CNNs from Keras and all were plagued by this issue. I'm attaching a Python script to reproduce the problem.

make_mnist_model.py.zip