Cannot pass the tile operation

❓Question

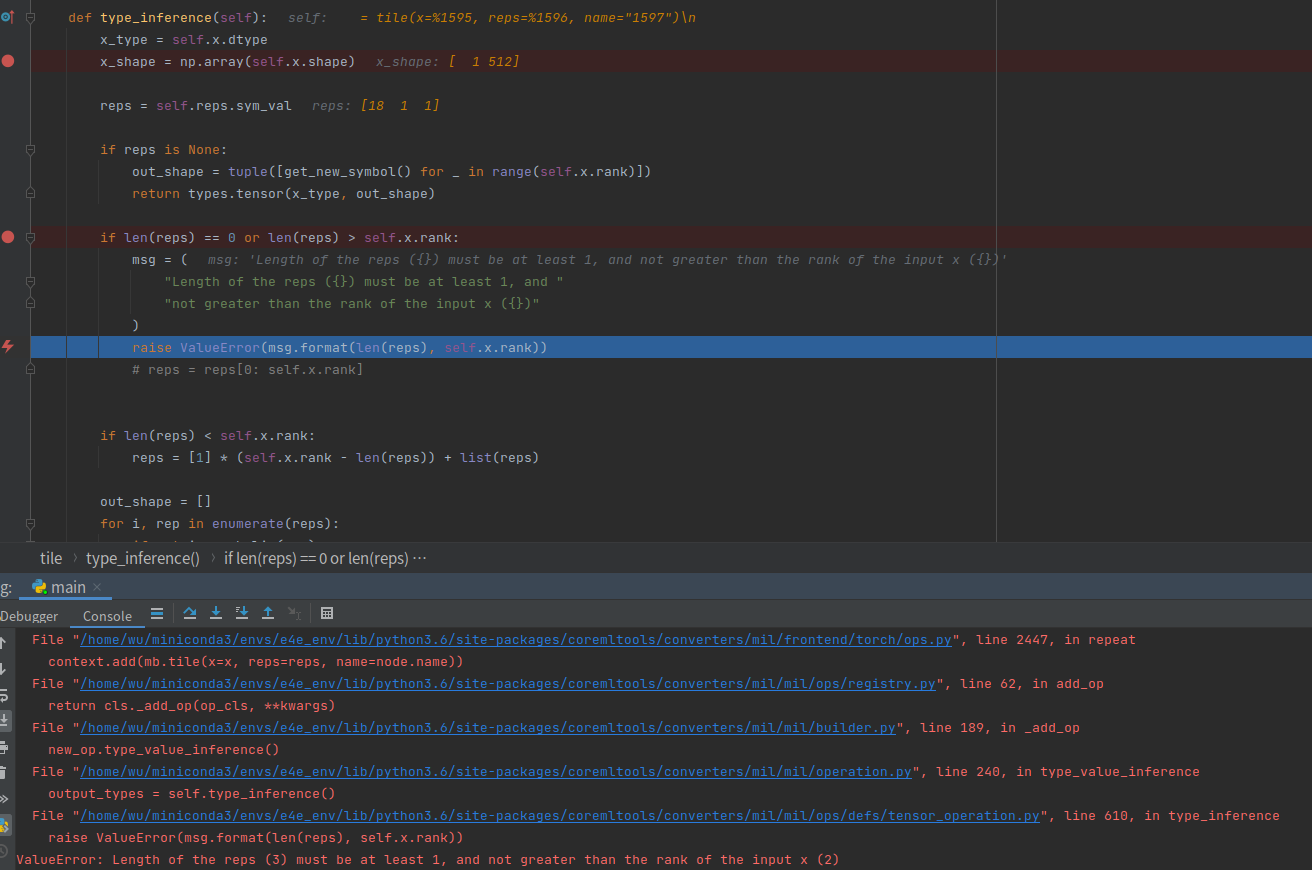

In the type_inference of the tile operation, it always throws the error "Length of the reps ({}) must be at least 1, and not greater than the rank of the input x ({})", because the length of reps is 3 while the rank of x is 2. I cannot figure out what causes this.

Thank you!

Can you give us an example to reproduce this error?


It looks like this.

Hi @VincentWu1997 - this is not an example to reproduce the error. This is a (partial) stack trace. In order to help you, I need to be able to reproduce the problem. Can you give me self contained code to reproduce this issue? Ideally, the amount should be minimal.

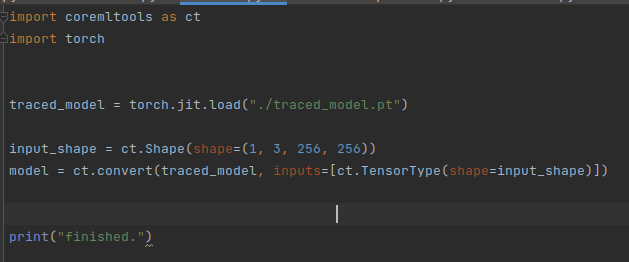

If you download this traced_model file and convert it to Core ML format as shown in code.png, you can reproduce the error.

The environment used to load the traced_model is torch==1.10.0 with CUDA and cuDNN support.

Thanks a lot for helping!

------------------ Original message ------------------ From: "apple/coremltools" @.>; Sent: Thursday, April 28, 2022, 12:42 AM @.>; @.@.>; Subject: Re: [apple/coremltools] Cannot pass the tile operation (Issue #1475)



Very large attachment sent from QQ Mail

traced_model.pt (1.12 GB, expires May 28, 2022, 15:56). Go to the download page: http://mail.qq.com/cgi-bin/ftnExs_download?k=20326632a23b2b896a4a1a0a4339571e51005507010f5309495754560714510250074b53005a5c1c070351035c0c555702035f5365366545165305570166085e00570a1c154d650c&t=exs_ftn_download&code=d2f2e9e1

This is the convert process.


I'm not going to load an arbitrary model. That's a security risk. Can you define a model in code (ideally it would be only a couple of layers) that reproduces the problem?

Minimal example to reproduce the error:

```python
import torch
import torch.nn as nn
import coremltools as ct


class Encode(nn.Module):
    def __init__(self, d_model=512):
        super(Encode, self).__init__()
        self.d_model = d_model

    def forward(self, length):
        a = torch.zeros(length[0], int(self.d_model / 2))
        b = torch.ones(length[0], int(self.d_model / 2))
        # signal has shape (length, d_model), i.e. rank 2
        signal = torch.stack((a, b), dim=2).view(length[0], self.d_model)
        # three reps on a rank-2 tensor: PyTorch prepends a dimension,
        # but the converted tile op rejects len(reps) > rank
        signal = signal.repeat(32, 1, 1)
        return signal


x = Encode(d_model=192)
print(x(torch.tensor([478]).long()).shape)
x.eval()

t = torch.jit.trace(x, [torch.tensor([478]).long()])
coreml_inputs = [ct.TensorType(shape=(1,))]
mlmodel = ct.convert(model=t, inputs=coreml_inputs)
```
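The error comes from a semantic mismatch. PyTorch's `Tensor.repeat` accepts more reps than the tensor has dimensions and implicitly prepends size-1 dimensions, while the `tile` op in the traceback requires that the length of reps not exceed the rank of x. In the repro, `signal` has shape (478, 192) (rank 2), but `signal.repeat(32, 1, 1)` passes three reps. The sketch below uses a hypothetical pure-Python helper, `repeat_to_tile`, which is not part of torch or coremltools, to compute the padded input shape a converter would need before tiling. On coremltools versions before 6.0, a possible (untested) workaround along the same lines is to write `signal.unsqueeze(0).repeat(32, 1, 1)` so the number of reps matches the rank.

```python
def repeat_to_tile(shape, reps):
    """Hypothetical helper illustrating torch.Tensor.repeat semantics.

    When len(reps) exceeds the rank of the input, the shape is padded with
    leading 1s. Returns the padded input shape (what the tensor would have to
    be reshaped to before a rank-preserving tile) and the output shape.
    """
    shape, reps = tuple(shape), tuple(reps)
    if len(reps) < len(shape):
        # torch.Tensor.repeat rejects this case as well
        raise ValueError("len(reps) must be >= the rank of the input")
    padded = (1,) * (len(reps) - len(shape)) + shape
    out = tuple(dim * rep for dim, rep in zip(padded, reps))
    return padded, out


# Shapes from the repro: signal is (478, 192), repeated with reps (32, 1, 1).
padded, out = repeat_to_tile((478, 192), (32, 1, 1))
print(padded)  # (1, 478, 192)
print(out)     # (32, 478, 192)
```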

@TobyRoseman Any update on this bug? Thanks!

With the release of coremltools 6.0 this has been fixed.