trafficcontrol

Add server indicator if a server is a cache

This Improvement request (usability, performance, tech debt, etc.) affects these Traffic Control components:

- Traffic Ops

- Traffic Portal

Current behavior:

All servers, both caches and non-caches, are stored in the servers table and fetched via GET /api/servers, and nothing in the response distinguishes caches from non-caches. You can use GET /api/servers?type=EDGE or GET /api/servers?type=MID, but types can be added (and have been in our environment) to satisfy different use cases, so you could have an EDGE_FOO type that a type=EDGE query would miss.

New behavior:

Add an indicator to the server object that marks whether it is a cache, so that you can request something like GET /api/servers?cache=true, and allow that value to be changed via both the API and the UI.
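A minimal Go sketch of how such a filter could behave. It assumes a hypothetical, trimmed-down Server struct with a boolean Cache field and a hypothetical filterByCache helper; neither is part of the current Traffic Ops code, and the sample hostnames and the MONITOR type are made up. The point is that a client asks for caches by flag rather than by enumerating every EDGE/MID-style type name, so a custom type like EDGE_FOO is returned as long as its flag is set.

```go
package main

import (
	"fmt"
	"strconv"
)

// Server is a hypothetical, trimmed-down server record; the real
// Traffic Ops server object has many more fields.
type Server struct {
	HostName string
	Type     string
	Cache    bool // hypothetical indicator proposed in this issue
}

// filterByCache applies a hypothetical ?cache= query parameter.
// An empty value means "no filter"; any other value must parse as a bool.
func filterByCache(servers []Server, cacheParam string) ([]Server, error) {
	if cacheParam == "" {
		return servers, nil
	}
	want, err := strconv.ParseBool(cacheParam)
	if err != nil {
		return nil, fmt.Errorf("invalid value for cache parameter: %q", cacheParam)
	}
	var out []Server
	for _, s := range servers {
		if s.Cache == want {
			out = append(out, s)
		}
	}
	return out, nil
}

func main() {
	// Illustrative data: EDGE_FOO is a custom type that a type=EDGE query would miss.
	servers := []Server{
		{HostName: "edge-01", Type: "EDGE", Cache: true},
		{HostName: "edge-foo-01", Type: "EDGE_FOO", Cache: true},
		{HostName: "mid-01", Type: "MID", Cache: true},
		{HostName: "monitor-01", Type: "MONITOR", Cache: false},
	}
	caches, err := filterByCache(servers, "true")
	if err != nil {
		panic(err)
	}
	for _, s := range caches {
		fmt.Println(s.HostName, s.Type)
	}
}
```

Rejecting unparseable values instead of silently ignoring them keeps a typo like cache=ture from returning every server.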

Adding this indicator would also make it easy to fix #4137.

FYI: this problem is a result of overloading the servers table, and this proposed solution is a bit of a band-aid. The real solution (IMO) would be to split the servers table into two tables, such as servers and caches, but that is a much larger effort (from a DB, API and UI perspective), and this band-aid could serve as a shorter-term solution that would facilitate the real one.

The original plan with TPv2 was to artificially separate server types into cache and non-cache, but that turned out to be difficult and expensive, because what counts as a "cache server" varies by context, and almost none of those criteria can be filtered on directly using the query string parameters available on the /servers endpoint. Essentially, I'd have to add a caveat like "this is the cache servers table, except/plus some servers that meet criteria x", and even then I'd have to do the filtering on every digest cycle over potentially tens of thousands of table rows.

tl;dr: this overloading issue is straight-up getting in the way of UI improvements; it's not just an annoyance to developers.

Well, that all sounds great/hard, but don't you think a simple flag on a server (cache=true/false), which could be set to true during migration if type=EDGE*/MID* and which users could set for the rest, would go a long way (in the short term) toward letting users define what is/isn't a cache?

...but I guess that would mean that, after the migration, caches would have to be determined by cache=true/false, ignoring the server types (EDGE*/MID*, for example) altogether.
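A sketch of the migration default described above, assuming a hypothetical defaultCacheFlag helper that marks any type whose name starts with EDGE or MID as a cache and leaves everything else false for operators to set by hand. Type names in the example other than EDGE, MID and EDGE_FOO are illustrative.

```go
package main

import (
	"fmt"
	"strings"
)

// defaultCacheFlag is a hypothetical migration heuristic: a server whose
// type name starts with EDGE or MID starts out flagged as a cache; every
// other type starts out as false and is left for operators to set by hand.
func defaultCacheFlag(typeName string) bool {
	return strings.HasPrefix(typeName, "EDGE") || strings.HasPrefix(typeName, "MID")
}

func main() {
	// Illustrative type names only.
	for _, t := range []string{"EDGE", "EDGE_FOO", "MID", "MID_FOO", "ORG"} {
		fmt.Printf("%-8s cache=%t\n", t, defaultCacheFlag(t))
	}
}
```

Because it is a plain prefix match, any unrelated type that happens to start with those letters would also be flagged, which is one reason the result is only a starting value: after the migration, the stored flag, not the type name, would decide whether a server is a cache.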

Server types should be eliminated entirely, IMO. Or at least cache servers should not have mutable, user-creatable types. You can fairly easily place an indicator on a server that shows it's a cache server with like 80% accuracy (wild guess). Being absolutely sure is probably a waste of time, because most of the remaining edge cases represent non-functioning configurations (but then why are they possible??), and historically our plan for dealing with such things has been to just assume nobody makes those particular mistakes.

A flag is possible, yes, and probably quite useful, but I'm saying that we cannot really do any better than that because of limitations of the data model (or lack thereof). And that's frustrating.

yep, understood and completely agree.

Hey @mitchell852, I'm interested in contributing to this issue and I'd like to learn more about it. I got here from the Good First Issue you mentioned. I am new to open source, but I have understood about 60-70% of what was discussed in this issue. It seems to be a major issue to me, and I wonder whether I have relevant experience to contribute to it. I appreciate any information you can provide and look forward to contributing to the project. Thank you, Utkarsh Chourasia

@JammUtkarsh - I doubt this is a "good first issue". It touches a lot of things (database, API, UI), but you are more than welcome to take it on if you choose. Here are the other GFIs: https://github.com/apache/trafficcontrol/labels/good%20first%20issue

Hi @mitchell852, I am interested in contributing to this issue and several other issues relating to Traffic Ops and Traffic Portal.

I have been trying to read the documentation and get started with the project by setting up the backend (i.e., Traffic Ops) and frontend (i.e., Traffic Portal) but I have been struggling for a week and have not been able to make much progress. Despite trying several ways to start the application, I have not been successful so far.

These are the ways I have tried:

- After following the instructions at https://traffic-control-cdn.readthedocs.io/en/latest/development/index.html# and cloning the repository, I executed `source dev/atc.dev.sh` and ran `atc build` and `atc start` with no errors on my Mac, where Docker is installed. However, upon running `atc ready`, nothing is printed on the console, and commands like `atc ready --wait trafficops` or `atc ready --wait trafficportal` seem to wait indefinitely. Additionally, attempts to access the Traffic Portal interface via https://localhost:60443/ or https://localhost:8080/ have not been successful. Despite multiple attempts at restarting the process, the issue persists.

- I attempted to build Traffic Control using the `pkg` command, following the instructions at https://traffic-control-cdn.readthedocs.io/en/latest/development/building.html#build-using-pkg. To ensure I was in the correct directory, I cloned the repo and ran `pkg -a` in `$GOPATH/src/github.com/apache/trafficcontrol`. However, the only output I saw was "Building weasel." and it appeared to be stuck indefinitely. This was attempted on my Mac, and I have tried restarting the process multiple times, but the issue persists.

- After following the instructions at https://traffic-control-cdn.readthedocs.io/en/latest/development/building.html#build-using-docker-compose, I ran `docker-compose build` with no errors. However, I am unable to access the Traffic Portal interface at https://localhost:60443/ or https://localhost:8080/. Despite restarting the process multiple times, the issue persists.

- After referring to https://traffic-control-cdn.readthedocs.io/en/latest/development/building.html#building-individual-components, I attempted to execute `build/clean_build.sh`. However, I encountered the errors shown below.

```
==================================================
WORKSPACE: /private/tmp/go/src/github.com/apache/trafficcontrol
BUILD_NUMBER: 12425.72b2332e
RHEL_VERSION: el7
TC_VERSION: 7.1.0
--------------------------------------------------
-rw-r--r-- 1 zhuzikun staff 50950600 Apr 3 22:37 dist/apache-trafficcontrol-7.1.0.tar.gz
+ for project in '"$@"'
+ [[ 0 -eq 1 ]]
+ ./build/build.sh traffic_monitor
+ tee dist/build-traffic_monitor.log
/opt/homebrew/bin/realpath
----- Building traffic_monitor ...
/opt/homebrew/bin/realpath
git is /opt/homebrew/bin/git
go is /opt/homebrew/bin/go
/private/tmp/go/src/github.com/apache/trafficcontrol/build/functions.sh: line 173: type: rpmbuild: not found
rpmbuild not found in PATH
Error on line 97 of traffic_monitor/build/build_rpm.sh
traffic_monitor failed: traffic_monitor/build/build_rpm.sh
The following subdirectories had errors:
traffic_monitor
Error on line 110 of ./build/build.sh
+ exit_code=1
+ '[' 1 -ne 0 ']'
+ echo 'Error on line 77 of build/clean_build.sh'
Error on line 77 of build/clean_build.sh
+ cleanup
++ id -u
+ '[' 501 -eq 0 ']'
+ exit 1
```

- After installing CentOS Stream 9 on my Mac machine using Parallels, I followed the instructions in https://traffic-control-cdn.readthedocs.io/en/latest/development/traffic_portal.html#installing-the-traffic-portal-developer-environment, except for step 4.2 where I didn't modify

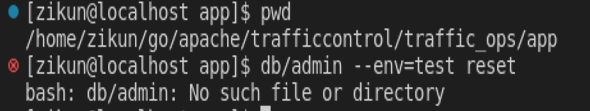

api.base_urlto point to my Traffic Ops API endpoint. After this, I was able to access https://localhost:60443/. I then attempted to follow the instructions in https://traffic-control-cdn.readthedocs.io/en/latest/development/traffic_ops.html#installing-the-developer-environment, but encountered an error while attempting step 4, which involved using the reset and upgrade commands of admin (see app/db/admin for usage) to set up the traffic_ops databases. The error message is shown below.

I am new to open source, so I would greatly appreciate any advice on how to get started. I have been thinking about how to contribute to this issue and have a few ideas. Perhaps the initial task is to extend the server object in the Server ORM with a new field named `isCached` (or `cache` for brevity). Next, the servers table in the database has to be updated to include this new field, which requires migration logic, since existing Traffic Ops users (i.e., administrators) will be affected by this change. Finally, both the GET and POST APIs will need to be modified to include the new field in the server object.

Thank you for your time reading this and have a nice day!