does version 5.1.1 support "count" and "group by"?

In the first case, I configure create_time as sharding key, and use "count" function. Print logs like this:

[http-nio-8080-exec-4] INFO ShardingSphere-SQL : Logic SQL: select count(accesslog0_.id) as col_0_0_ from ACCESS_LOG accesslog0_ where accesslog0_.create_time>=? and accesslog0_.create_time<? [http-nio-8080-exec-4] INFO ShardingSphere-SQL : SQLStatement: SQL92SelectStatement(limit=Optional.empty) [http-nio-8080-exec-4] INFO ShardingSphere-SQL : Actual SQL: main ::: select count(accesslog0_.id) as col_0_0_ from ACCESS_LOG_W202223 accesslog0_ where accesslog0_.create_time>=? and accesslog0_.create_time<? UNION ALL select count(accesslog0_.id) as col_0_0_ from ACCESS_LOG_W202224 accesslog0_ where accesslog0_.create_time>=? and accesslog0_.create_time<? UNION ALL select count(accesslog0_.id) as col_0_0_ from ACCESS_LOG_W202225 accesslog0_ where accesslog0_.create_time>=? and accesslog0_.create_time<? ::: [2022-05-01 00:00:00.0, 2022-07-01 00:00:00.0, 2022-05-01 00:00:00.0, 2022-07-01 00:00:00.0, 2022-05-01 00:00:00.0, 2022-07-01 00:00:00.0]

shardingsphere generated "UNION ALL" rather then add up the results, this leaded to an error.

In the second case, I configure startTime as sharding key, and use "group by" funtion. Print logs like this:

[http-nio-8080-exec-10] INFO ShardingSphere-SQL : Logic SQL: select infolog0_.method as col_0_0_, count(distinct infolog0_.actorId) as col_1_0_, count(infolog0_.id) as col_2_0_ from set_info_logs infolog0_ where infolog0_.startTime>=? and infolog0_.startTime<=? group by infolog0_.method order by count(infolog0_.id) DESC limit ? [http-nio-8080-exec-10] INFO ShardingSphere-SQL : SQLStatement: MySQLSelectStatement(table=Optional.empty, limit=Optional[org.apache.shardingsphere.sql.parser.sql.common.segment.dml.pagination.limit.LimitSegment@770083b1], lock=Optional.empty, window=Optional.empty) [http-nio-8080-exec-10] INFO ShardingSphere-SQL : Actual SQL: main ::: select DISTINCT infolog0_.method as col_0_0_, infolog0_.actorId as col_1_0_, count(infolog0_.id) as col_2_0_ from set_info_logs_202206 infolog0_ where infolog0_.startTime>=? and infolog0_.startTime<=? order by count(infolog0_.id) DESC limit ? ::: [2022-06-01 00:00:00.0, 2022-07-07 00:00:00.0, 2147483647]

shardingsphere generated sql without "goup by", which is unexpected.

I wonder how to use "count" and "grouo by" in version 5.1.1?

Hi @phdbutbachelor, can you provide your sharding configuration? I will try to reproduce this bug.

I will do this.

formatted sql

count

Logic SQL:

select

count(accesslog0_.id) as col_0_0_

from

ACCESS_LOG accesslog0_

where

accesslog0_.create_time >= ?

and accesslog0_.create_time < ?

Actual SQL:

select

count(accesslog0_.id) as col_0_0_

from

ACCESS_LOG_W202223 accesslog0_

where

accesslog0_.create_time >= ?

and accesslog0_.create_time < ?

UNION

ALL

select

count(accesslog0_.id) as col_0_0_

from

ACCESS_LOG_W202224 accesslog0_

where

accesslog0_.create_time >= ?

and accesslog0_.create_time < ?

UNION

ALL

select

count(accesslog0_.id) as col_0_0_

from

ACCESS_LOG_W202225 accesslog0_

where

accesslog0_.create_time >= ?

and accesslog0_.create_time < ?

[2022-05-01 00:00:00.0, 2022-07-01 00:00:00.0, 2022-05-01 00:00:00.0, 2022-07-01 00:00:00.0, 2022-05-01 00:00:00.0, 2022-07-01 00:00:00.0]

shardingsphere generated "UNION ALL" rather then add up the results

group by

Logic SQL:

select

infolog0_.method as col_0_0_,

count(distinct infolog0_.actorId) as col_1_0_,

count(infolog0_.id) as col_2_0_

from

set_info_logs infolog0_

where

infolog0_.startTime >= ?

and infolog0_.startTime <= ?

group by

infolog0_.method

order by

count(infolog0_.id) DESC

limit

?

Actual SQL:

select

DISTINCT infolog0_.method as col_0_0_,

infolog0_.actorId as col_1_0_,

count(infolog0_.id) as col_2_0_

from

set_info_logs_202206 infolog0_

where

infolog0_.startTime >= ?

and infolog0_.startTime <= ?

order by

count(infolog0_.id) DESC

limit

?

[2022-06-01 00:00:00.0, 2022-07-07 00:00:00.0, 2147483647]

shardingsphere generated sql without "goup by".

I can't reproduce the two errors, the two sql can be executed correctly in my test case.

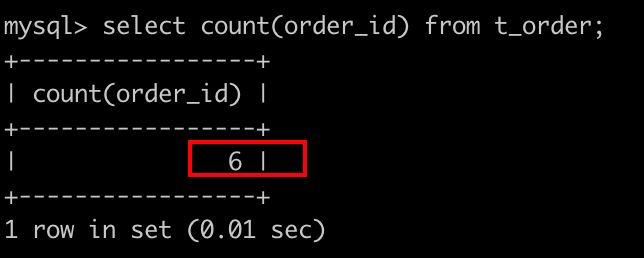

count

[INFO ] 2022-07-01 13:40:54.879 [Connection-1-ThreadExecutor] ShardingSphere-SQL - Logic SQL: select count(order_id) from t_order [INFO ] 2022-07-01 13:40:54.879 [Connection-1-ThreadExecutor] ShardingSphere-SQL - SQLStatement: MySQLSelectStatement(table=Optional.empty, limit=Optional.empty, lock=Optional.empty, window=Optional.empty) [INFO ] 2022-07-01 13:40:54.879 [Connection-1-ThreadExecutor] ShardingSphere-SQL - Actual SQL: ds_0 ::: select count(order_id) from t_order_0 UNION ALL select count(order_id) from t_order_1 [INFO ] 2022-07-01 13:40:54.879 [Connection-1-ThreadExecutor] ShardingSphere-SQL - Actual SQL: ds_1 ::: select count(order_id) from t_order_0 UNION ALL select count(order_id) from t_order_1

group by and order by

[INFO ] 2022-07-01 14:38:54.684 [Connection-1-ThreadExecutor] ShardingSphere-SQL - Logic SQL: select * from t_order group by t_order.order_id order by t_order.status [INFO ] 2022-07-01 14:38:54.684 [Connection-1-ThreadExecutor] ShardingSphere-SQL - SQLStatement: MySQLSelectStatement(table=Optional.empty, limit=Optional.empty, lock=Optional.empty, window=Optional.empty) [INFO ] 2022-07-01 14:38:54.684 [Connection-1-ThreadExecutor] ShardingSphere-SQL - Actual SQL: ds_0 ::: select * from t_order_0 group by t_order_0.order_id order by t_order_0.status [INFO ] 2022-07-01 14:38:54.684 [Connection-1-ThreadExecutor] ShardingSphere-SQL - Actual SQL: ds_0 ::: select * from t_order_1 group by t_order_1.order_id order by t_order_1.status [INFO ] 2022-07-01 14:38:54.684 [Connection-1-ThreadExecutor] ShardingSphere-SQL - Actual SQL: ds_1 ::: select * from t_order_0 group by t_order_0.order_id order by t_order_0.status [INFO ] 2022-07-01 14:38:54.684 [Connection-1-ThreadExecutor] ShardingSphere-SQL - Actual SQL: ds_1 ::: select * from t_order_1 group by t_order_1.order_id order by t_order_1.status

mysql> select * from t_order group by t_order.order_id order by t_order.status;

+----------+---------+--------+

| order_id | user_id | status |

+----------+---------+--------+

| 1 | 1 | 1 |

| 11 | 11 | 11 |

| 12 | 12 | 12 |

| 13 | 13 | 13 |

| 14 | 14 | 14 |

| 15 | 15 | 15 |

| 16 | 16 | 16 |

| 2 | 2 | 2 |

| 3 | 3 | 3 |

| 4 | 4 | 4 |

| 5 | 5 | 5 |

| 6 | 6 | 6 |

+----------+---------+--------+

12 rows in set (0.02 sec)

@strongduanmu the configuration is:

schemaName: eeb

dataSources: main: dataSourceClassName: com.zaxxer.hikari.HikariDataSource driverClassName: com.mysql.cj.jdbc.Driver jdbcUrl: jdbc:mysql://x.x.x.x/eeb?useUnicode=TRUE&characterEncoding=UTF-8&nullCatalogMeansCurrent=true&serverTimezone=PRC username: x password: x

rules:

- !SHARDING tables: set_info_logs: actualDataNodes: main.set_info_logs tableStrategy: standard: shardingColumn: startTime shardingAlgorithmName: shardingLogsByMonths set_error_logs: actualDataNodes: main.set_error_logs tableStrategy: standard: shardingColumn: startTime shardingAlgorithmName: shardingLogsByMonths shardingAlgorithms: shardingLogsByMonths: type: SHARDING_LOGS_BY_MONTHS

props: sql-show: true check-table-metadata-enabled: true

Can you give me a simple runnable demo to investigate this issue,thank you.

Can you give me a simple runnable demo to investigate this issue,thank you.

when the 'distinct' and 'group by' are used in one sql , the 'group by' will be replace by 'order by ' after creating ShardingSphere-SQL

CASE:

Logic SQL:

SELECT COUNT(DISTINCT DATE_FORMAT(LEFT(b.create_date,13),'%Y-%m-%d %H')) hourNum, date FROM table_name b where (b.p_id,b.d_id) IN ( (?, ?) ) and b.create_date >= ? AND b.create_date < ? GROUP BY date,d_id

Actual SQL:

SELECT COUNT(DISTINCT DATE_FORMAT(LEFT(b.create_date,13),'%Y-%m-%d %H')) hourNum, date FROM table_name_1 b where (b.p_id,b.d_id) IN ( (?, ?) ) and b.create_date >= ? AND b.create_date < ? ORDER BY DATE ASC,deviceId ASC

version: 5.1

Hello , this issue has not received a reply for several days. This issue is supposed to be closed.

@zhao-en Please don't use COUNT(DISTINCT DATE_FORMAT(LEFT(b.create_date,13),'%Y-%m-%d %H')) nested aggregation function, since ShardingSphere doesn't support it now.

您好,您的邮件我已经收到,我会尽快处理!

Since this issue has long time no reply, I will close it.