couchdb


CouchDB compression error

Recently we have been frequently seeing a CouchDB error in one of our databases on a Red Hat Linux server.

We are using CouchDB 3.1.1, installed in a Docker container.

The issue happens only in the sensor_event database, which holds about 280 GB of data.

CouchDB is running as a single node.

Currently we roll back the entire VM to the last day CouchDB was working, which loses data for our study. Is there a solution to this issue? Thanks!

Here are our settings:

| Section | Option | Value |
|---|---|---|
| attachments | compressible_types | text/, application/javascript, application/json, application/xml |
| | compression_level | 8 |
| chttpd | backlog | 512 |
| | bind_address | 0.0.0.0 |
| | max_db_number_for_dbs_info_req | 100 |
| | port | 5984 |
| | prefer_minimal | Cache-Control, Content-Length, Content-Range, Content-Type, ETag, Server, Transfer-Encoding, Vary |
| | require_valid_user | false |
| | server_options | [{recbuf, undefined}] |
| | socket_options | [{sndbuf, 262144}, {nodelay, true}] |
| cluster | n | 3 |
| | q | 2 |
| compactions | _default | [{db_fragmentation, "70%"}, {view_fragmentation, "60%"}, {from, "02:00"}, {to, "05:00"}] |
| cors | credentials | false |
| couch_httpd_auth | allow_persistent_cookies | true |
| | auth_cache_size | 50 |
| | authentication_db | _users |
| | authentication_redirect | /_utils/session.html |
| | iterations | 10 |
| | require_valid_user | false |
| | secret | fc7857a6df7358f9ccefc842ecb9e0e2 |
| | timeout | 600 |
| couch_peruser | database_prefix | userdb- |
| | delete_dbs | false |
| | enable | false |
| couchdb | attachment_stream_buffer_size | 4096 |
| | changes_doc_ids_optimization_threshold | 100 |
| | database_dir | ./data |
| | default_engine | couch |
| | default_security | admin_only |
| | file_compression | none |
| | max_dbs_open | 500 |
| | max_document_size | 8000000 |
| | os_process_timeout | 5000 |
| | users_db_security_editable | false |
| | uuid | 4e5744d50ad4d7d3d3a189b474f850c7 |
| | view_index_dir | ./data |
| couchdb_engines | couch | couch_bt_engine |
| csp | enable | true |
| feature_flags | partitioned\|\| | true |
| httpd | allow_jsonp | false |
| | authentication_handlers | {couch_httpd_auth, cookie_authentication_handler}, {couch_httpd_auth, default_authentication_handler} |
| | bind_address | 127.0.0.1 |
| | enable_cors | false |
| | enable_xframe_options | false |
| | max_http_request_size | 4294967296 |
| | port | 5986 |
| | secure_rewrites | true |
| | socket_options | [{sndbuf, 262144}] |
| indexers | couch_mrview | true |
| ioq | concurrency | 10 |
| | ratio | 0.01 |
| ioq.bypass | compaction | false |
| | os_process | true |
| | read | true |
| | shard_sync | false |
| | view_update | true |
| | write | true |
| log | level | info |
| | writer | stderr |
| query_server_config | os_process_limit | 100 |
| | reduce_limit | true |
| replicator | connection_timeout | 30000 |
| | http_connections | 20 |
| | interval | 60000 |
| | max_churn | 20 |
| | max_jobs | 500 |
| | retries_per_request | 5 |
| | socket_options | [{keepalive, true}, {nodelay, false}] |
| | ssl_certificate_max_depth | 3 |
| | startup_jitter | 5000 |
| | verify_ssl_certificates | false |
| | worker_batch_size | 500 |
| | worker_processes | 4 |
| ssl | port | 6984 |
| uuids | algorithm | sequential |
| | max_count | 1000 |
| vendor | name | The Apache Software Foundation |

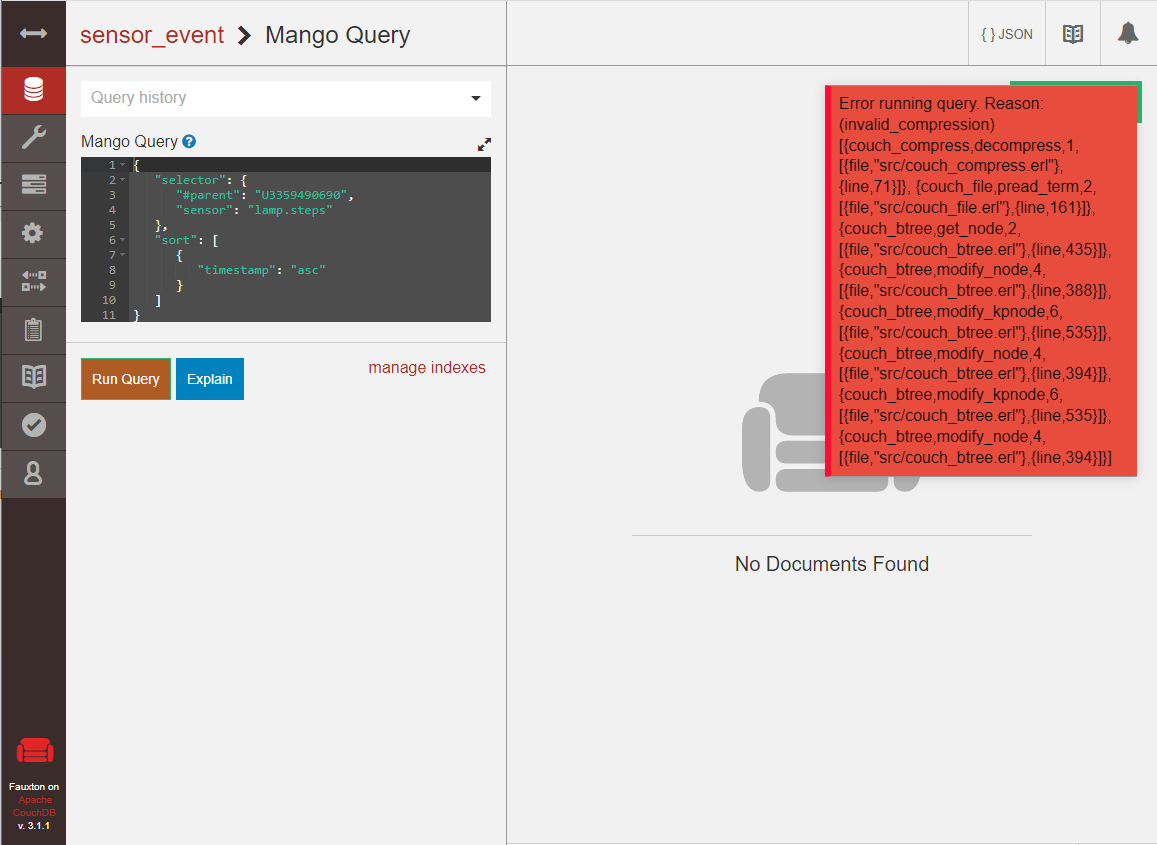

Here is the error:

Error running query. Reason: (invalid_compression) [{couch_compress,decompress,1,[{file,"src/couch_compress.erl"},{line,71}]}, {couch_file,pread_term,2,[{file,"src/couch_file.erl"},{line,161}]}, {couch_btree,get_node,2,[{file,"src/couch_btree.erl"},{line,435}]}, {couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,388}]}, {couch_btree,modify_kpnode,6,[{file,"src/couch_btree.erl"},{line,535}]}, {couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,394}]}, {couch_btree,modify_kpnode,6,[{file,"src/couch_btree.erl"},{line,535}]}, {couch_btree,modify_node,4,[{file,"src/couch_btree.erl

[error] 2022-05-24T15:19:54.944847Z nonode@nohost emulator -------- Error in process <0.12552.27> with exit value:

{invalid_compression,[{couch_compress,decompress,1,[{file,"src/couch_compress.erl"},{line,71}]},{couch_file,pread_term,2,[{file,"src/couch_file.erl"},{line,161}]},{couch_btree,get_node,2,[{file,"src/couch_btree.erl"},{line,435}]},{couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,388}]},{couch_btree,modify_kpnode,6,[{file,"src/couch_btree.erl"},{line,535}]},{couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,394}]},{couch_btree,modify_kpnode,6,[{file,"src/couch_btree.erl"},{line,535}]},{couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,394}]}]}

[error] 2022-05-24T15:19:54.945009Z nonode@nohost <0.12581.27> 34d336cfa8 rexi_server: from: nonode@nohost(<0.11656.27>) mfa: fabric_rpc:map_view/5 throw:{invalid_compression,[{couch_compress,decompress,1,[{file,"src/couch_compress.erl"},{line,71}]},{couch_file,pread_term,2,[{file,"src/couch_file.erl"},{line,161}]},{couch_btree,get_node,2,[{file,"src/couch_btree.erl"},{line,435}]},{couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,388}]},{couch_btree,modify_kpnode,6,[{file,"src/couch_btree.erl"},{line,535}]},{couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,394}]},{couch_btree,modify_kpnode,6,[{file,"src/couch_btree.erl"},{line,535}]},{couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,394}]}]} [{couch_mrview_util,get_view_index_state,5,[{file,"src/couch_mrview_util.erl"},{line,138}]},{couch_mrview_util,get_view,4,[{file,"src/couch_mrview_util.erl"},{line,82}]},{couch_mrview,query_view,6,[{file,"src/couch_mrview.erl"},{line,262}]},{rexi_server,init_p,3,[{file,"src/rexi_server.erl"},{line,138}]}]

[error] 2022-05-24T15:19:54.945332Z nonode@nohost <0.11656.27> 34d336cfa8 req_err(3722911048) invalid_compression : [{couch_compress,decompress,1,[{file,"src/couch_compress.erl"},{line,71}]},

It looks like there is a view term that could not be read and decompressed. I noticed you use file_compression = none and wonder if that has anything to do with it.

Is there a chance the files were restored from a backup or otherwise altered outside of CouchDB's control?

Is there a way to reproduce the issue?

We previously had file_compression = snappy in default.ini. We thought snappy compression was causing the error, so we changed it to file_compression = none.

Today we noticed one more thing: if we run the query with a sort, we get the invalid_compression error:

{
  "selector": {
    "#parent": "U3359490690",
    "sensor": "lamp.steps"
  },
  "sort": [
    { "timestamp": "asc" }
  ]
}

But if we remove the sort option, we get the results. Is there any reason why sorting throws a compression error?

{
  "selector": {
    "#parent": "U3359490690",
    "sensor": "lamp.steps"
  }
}
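The server log shows the failure inside a view read (fabric_rpc:map_view), so one hedged way to see why only the sorted query fails is to send both queries to Mango's _explain endpoint, which reports the index the planner picked without running the query. Below is a minimal Python sketch; the host, credentials and database name are placeholders (assumptions). If the sorted query reports a JSON index while the unsorted one reports a different index (or _all_docs), that would be consistent with the corrupt view being the one behind the sort.

```python
import base64
import json
import urllib.request

# Placeholder connection details (assumptions): use your own host and admin credentials.
COUCH_URL = "http://localhost:5984"
DB = "sensor_event"
USER, PASSWORD = "admin", "password"

# The two Mango queries from this thread.
WITH_SORT = {
    "selector": {"#parent": "U3359490690", "sensor": "lamp.steps"},
    "sort": [{"timestamp": "asc"}],
}
WITHOUT_SORT = {"selector": {"#parent": "U3359490690", "sensor": "lamp.steps"}}


def explain_request(base_url, db, query, user, password):
    """Build a POST /{db}/_explain request; _explain plans the query without running it."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{base_url}/{db}/_explain",
        data=json.dumps(query).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


def chosen_index(explain_response):
    """Return (ddoc, name, type) of the index the query planner selected."""
    index = explain_response["index"]
    return index.get("ddoc"), index.get("name"), index.get("type")


if __name__ == "__main__":
    for label, query in (("with sort", WITH_SORT), ("without sort", WITHOUT_SORT)):
        with urllib.request.urlopen(explain_request(COUCH_URL, DB, query, USER, PASSWORD)) as resp:
            print(label, chosen_index(json.load(resp)))
```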

Only our application inserts and reads data; nothing else has access to modify the CouchDB database.

The error happens only in the sensor_event database, which holds millions of documents.

@jeydude Makes sense.

When you switched from snappy to none, was that on the same database? In other words, was snappy in use while the database was filled with data, and then compression was switched to none? Or did you create a new database after switching compression to none and then fill it with all the data? The issue could still be related to snappy compression, since switching the compression setting doesn't re-compress data already written to disk.
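The point that switching the setting leaves existing data as it was can be illustrated with a simplified model of the dispatch in couch_compress.erl, the module at the top of the stack trace. As we read the 3.x source, decompress/1 chooses a decoder from the first byte of each stored term rather than from the current file_compression setting. The prefix values below (1 for snappy, 131 for Erlang's external term format, with 80 as the second byte when the term was deflate-compressed) are our assumptions to verify against the 3.1.1 source, and this Python model only classifies bytes; it decodes nothing.

```python
# Simplified model (an assumption, not CouchDB code) of how couch_compress:decompress/1
# picks a decoder: only the leading byte(s) of the stored term matter.
SNAPPY_PREFIX = 1   # assumed marker for snappy-compressed terms
TERM_PREFIX = 131   # Erlang external term format version byte
ZLIB_TAG = 80       # second byte of term_to_binary(..., [compressed]) output


class InvalidCompression(Exception):
    """Stands in for the invalid_compression error seen in the logs."""


def classify(stored: bytes) -> str:
    """Return which decoder the model would use for a stored term."""
    if stored[:1] == bytes([SNAPPY_PREFIX]):
        return "snappy"
    if stored[:1] == bytes([TERM_PREFIX]):
        # Deflate output is still a 131-prefixed term, tagged with 80 as the next byte.
        return "deflate" if stored[1:2] == bytes([ZLIB_TAG]) else "none"
    # Neither marker: the bytes at this offset are not a term this model recognises.
    raise InvalidCompression(stored[:1].hex())
```

Under this model, a file that mixes snappy and uncompressed terms stays readable, and invalid_compression means the bytes read at a given offset carry none of the recognised markers.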

Perhaps your view files somehow got corrupted. If you can, try resetting the view and letting it rebuild. Also try using deflate_6 compression (that's what we use by default at Cloudant).
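Besides editing the ini files, the file_compression setting can be changed at runtime through the node configuration API, PUT /_node/_local/_config/{section}/{key}, where the body is the new value encoded as a JSON string and the reply is the previous value. Below is a minimal Python sketch; the host and admin credentials are placeholders (assumptions).

```python
import base64
import json
import urllib.request

# Placeholder connection details (assumptions); the _config API requires admin credentials.
COUCH_URL = "http://localhost:5984"
USER, PASSWORD = "admin", "password"


def config_put_request(base_url, section, key, value, user, password, node="_local"):
    """Build PUT /_node/{node}/_config/{section}/{key}; the body is a JSON string."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{base_url}/_node/{node}/_config/{section}/{key}",
        data=json.dumps(value).encode(),  # "deflate_6" is sent as b'"deflate_6"'
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="PUT",
    )


if __name__ == "__main__":
    req = config_put_request(COUCH_URL, "couchdb", "file_compression", "deflate_6", USER, PASSWORD)
    with urllib.request.urlopen(req) as resp:
        # CouchDB replies with the value that was in effect before the change.
        print("previous value:", json.load(resp))
```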

A more drastic fix would be to switch to deflate_6, re-create the database with a larger sharding factor (say, q=16 for a database that large), and replicate your data into it. That way your view files would be smaller and could build faster.

Good to know. Thanks @nickva for sharing the information. We did not create a new database after switching to none.

We applied the changes below in default.ini:

[cluster]

q=16

n=1

and in local.ini:

[compactions]

_default =

The q=16 change applies only to new databases, not to the existing database.
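Because the [cluster] q setting only affects databases created afterwards, it can help to confirm what the existing sensor_event database actually uses. In CouchDB 3.x, GET /{db} returns a "cluster" object with the database's q and n. Below is a minimal Python sketch; the host and credentials are placeholders (assumptions).

```python
import base64
import json
import urllib.request

# Placeholder connection details (assumptions); replace with your own.
COUCH_URL = "http://localhost:5984"
DB = "sensor_event"
USER, PASSWORD = "admin", "password"


def shard_settings(db_info):
    """Return (q, n) from the "cluster" object of a GET /{db} response."""
    cluster = db_info.get("cluster", {})
    return cluster.get("q"), cluster.get("n")


def fetch_db_info(base_url, db, user, password):
    """GET /{db} with basic auth and decode the JSON reply."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(f"{base_url}/{db}", headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    q, n = shard_settings(fetch_db_info(COUCH_URL, DB, USER, PASSWORD))
    # An existing database keeps the q it was created with, whatever [cluster] q says now.
    print(f"{DB}: q={q}, n={n}")
```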

How can I rebuild the view for the sensor_event database? Is there a command I can run?

I will try applying deflate_6 compression. Once I set deflate_6, is there anything else I need to do to apply this compression to the sensor_event database? Thanks!

http://hostname/sensor_event/_design/parent-sensor-timestamp-index/_view/parent-sensor-timestamp

gave me { "error": "invalid_compression", "reason": "[{couch_compress,decompress,1,[{file,"src/couch_compress.erl"},{line,71}]},\n {couch_file,pread_term,2,[{file,"src/couch_file.erl"},{line,161}]},\n {couch_btree,get_node,2,[{file,"src/couch_btree.erl"},{line,435}]},\n {couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,388}]},\n {couch_btree,modify_kpnode,6,[{file,"src/couch_btree.erl"},{line,535}]},\n {couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,394}]},\n {couch_btree,modify_kpnode,6,[{file,"src/couch_btree.erl"},{line,535}]},\n {couch_btree,modify_node,4,[{file,"src/couch_btree.erl"},{line,394}]}]", "ref": 3722911048 }

http://hostname/sensor_event/_design/parent-timestamp-index/_view/parent-timestamp gave me { "rows": [ { "key": null, "value": 775776868 } ] }

Is there a way to fix the corrupted view, or to rebuild it?

While the view is rebuilding, it won't be able to return results, so make sure to account for that. At 280 GB, the rebuild could take quite a while. First, apply deflate_6 compression so the rebuilt view uses it. When you're ready, modify the corrupt view's _design document slightly (for example, add a comment or a semicolon to its map function); that changes the view signature and starts rebuilding the view. Make sure to account for the extra disk space and CPU this needs as well.
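The design-document tweak could be scripted roughly as follows: fetch the design document, insert a harmless block comment at the start of each JavaScript map function body, and PUT it back with its current _rev. Below is a minimal Python sketch; the host and credentials are placeholders, and it assumes classic function(doc) { ... } map functions (all assumptions). If parent-sensor-timestamp-index is a Mango ("language": "query") index instead, the function refuses to edit it.

```python
import base64
import json
import urllib.request

# Placeholder connection details (assumptions); DDOC is the design document behind the failing view.
COUCH_URL = "http://localhost:5984"
DB = "sensor_event"
DDOC = "_design/parent-sensor-timestamp-index"
USER, PASSWORD = "admin", "password"


def touch_map_functions(ddoc, marker="/* rebuild */"):
    """Return a copy of ddoc with a block comment added at the start of each map function body.

    Assumes classic `function(doc) { ... }` map functions, where the first "{" opens the body.
    """
    if ddoc.get("language", "javascript") != "javascript":
        raise ValueError("not a JavaScript design document (a Mango index?)")
    new = json.loads(json.dumps(ddoc))  # deep copy that keeps _id and _rev
    for view in new.get("views", {}).values():
        if not isinstance(view, dict) or not isinstance(view.get("map"), str):
            continue  # e.g. a CommonJS "lib" entry, which has no map function
        src = view["map"]
        body_start = src.index("{") + 1
        view["map"] = src[:body_start] + " " + marker + src[body_start:]
    return new


def _request(method, path, body=None):
    """Send an authenticated JSON request to CouchDB and decode the JSON reply."""
    token = base64.b64encode(f"{USER}:{PASSWORD}".encode()).decode()
    req = urllib.request.Request(
        COUCH_URL + path,
        data=None if body is None else json.dumps(body).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method=method,
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    path = f"/{DB}/{DDOC}"
    ddoc = _request("GET", path)
    # PUT the edited copy back; the changed definition gives the view a new signature.
    print(_request("PUT", path, touch_map_functions(ddoc)))
```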

To migrate the database to a higher q sharding factor: after you apply deflate_6, create a sensor_event_1 database and then replicate from sensor_event to sensor_event_1. At 280 GB that could take quite a while, possibly a few days, so make sure you have enough disk and CPU capacity. After that, query your views to make sure they have been built and return results. Then update your client code to read from sensor_event_1 instead of sensor_event.
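The migration steps above could look roughly like this over the HTTP API: create the target with the q and n query parameters of PUT /{db}, then add a document to the _replicator database. Below is a minimal Python sketch; the host, credentials, n=1 (matching the single-node setup described earlier) and the replication document _id are assumptions.

```python
import base64
import json
import urllib.request

# Placeholder connection details and names (assumptions); adjust before use.
COUCH_URL = "http://localhost:5984"
USER, PASSWORD = "admin", "password"
SOURCE, TARGET = "sensor_event", "sensor_event_1"


def replication_doc(base_url, source, target, user, password):
    """Build a one-shot _replicator document that copies source into target."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    auth = {"Authorization": f"Basic {token}"}
    return {
        "_id": f"migrate-{source}-to-{target}",  # hypothetical id, any unique string works
        "source": {"url": f"{base_url}/{source}", "headers": auth},
        "target": {"url": f"{base_url}/{target}", "headers": auth},
    }


def _call(method, path, body=None):
    """Send an authenticated JSON request to CouchDB and decode the JSON reply."""
    token = base64.b64encode(f"{USER}:{PASSWORD}".encode()).decode()
    req = urllib.request.Request(
        COUCH_URL + path,
        data=None if body is None else json.dumps(body).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method=method,
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Create the target with 16 shards and a single replica before replicating into it.
    print(_call("PUT", f"/{TARGET}?q=16&n=1"))
    print(_call("POST", "/_replicator", replication_doc(COUCH_URL, SOURCE, TARGET, USER, PASSWORD)))
```

This replication is one-shot. If the application keeps writing to sensor_event during the migration, adding "continuous": true to the document and deleting it after the client cutover may be the safer choice.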

Thanks! Will try it.