KVM Disk-only VM Snapshots

ISSUE TYPE

- Feature Idea

COMPONENT NAME

VM Snapshot

CLOUDSTACK VERSION

4.20/main

CONFIGURATION

OS / ENVIRONMENT

KVM, file storage (NFS, Shared mountpoint, local storage)

SUMMARY

This spec addresses an update to the disk-only VM snapshot feature on KVM.

1. Problem Description

Currently, with KVM as the hypervisor, CloudStack does not support disk-only snapshots of VMs with volumes on NFS or local storage. CloudStack also does not support VM snapshots for stopped VMs; this means that if users need some sort of snapshot of their volumes, they must use the volume snapshot/backup feature. Furthermore, the current implementation relies on the same workflows as volume snapshots/backups:

- The VM will be frozen (ignoring the quiesce parameter);

- Each volume will be processed individually using the volume snapshot workflow;

- Once all the snapshots are done, the VM will be resumed.

However, this approach is flawed: since we not only create the snapshots but also copy all of them to another directory, and the VM is frozen during this whole process, there will be a lot of downtime. This downtime might be extremely long if the volumes are large.

Moreover, since the snapshots are copied to another directory in the primary storage, reverting takes time, as the snapshot must be copied back.

1.1 Basic Definitions

Here are some basic definitions that will be used throughout this spec:

- Backing file: a read-only file that will be read when data is not found on the top file.

- Delta: a file that stores data that was changed in comparison to its backing file(s). When a snapshot is taken, the current delta/file will become the backing file of a new delta, thus preserving the data on the file being snapshotted.

- Backing chain: a chain of backing files.

- Current delta/snapshot: the current delta that is being written to. It may have a parent snapshot and siblings.

- Parent snapshot: the immediate predecessor of a given snapshot/delta in its backing chain. A snapshot/delta can only have one parent.

- Child snapshot: the immediate successor of a given snapshot in the backing chain. A snapshot might have multiple children. The current delta cannot have children.

- Sibling snapshot: a snapshot that shares its parent with a given snapshot/delta.
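The relationships defined above form a tree per volume. The sketch below is a hypothetical model for illustration only (the `Delta` class and its methods are not CloudStack code): each node knows its parent and children, siblings are derived from the parent, and the backing chain is the path from a node back to the root file.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # nodes compare by identity, not by field values
class Delta:
    """One file of a volume: a snapshot, or the current delta being written to."""
    name: str
    parent: Optional[Delta] = field(default=None, repr=False)
    children: List[Delta] = field(default_factory=list, repr=False)

    def new_child(self, name: str) -> Delta:
        """Create a delta whose backing file is this node."""
        child = Delta(name, parent=self)
        self.children.append(child)
        return child

    def siblings(self) -> List[Delta]:
        """Other children of this node's parent."""
        if self.parent is None:
            return []
        return [c for c in self.parent.children if c is not self]

    def backing_chain(self) -> List[str]:
        """Names from this node back to the root file; reads fall through in this order."""
        chain: List[str] = []
        node: Optional[Delta] = self
        while node is not None:
            chain.append(node.name)
            node = node.parent
        return chain


base = Delta("base")
snap1 = base.new_child("snap1")
snap2 = snap1.new_child("snap2")      # an older branch
current = snap1.new_child("current")  # the delta being written to
```

Here `current` has `snap1` as parent and `snap2` as its only sibling; since it is the delta being written to, it never gets children.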

2. Proposed Changes

To address the described problems, we propose to extend the VM snapshot feature on KVM to allow disk-only VM snapshots for NFS and local storage; other types of storage, such as shared mount point, already support disk-only VM snapshots. Furthermore, we intend to change the disk-only VM snapshot process for all file-based storage types (local, NFS and shared mount point):

- We will take all the snapshots at the same time, instead of one at a time.

- Unlike volume snapshots, the disk-only VM snapshots will not be copied to another directory; they will stay as they are after being taken and become part of the volumes' backing chains. This makes reverting a snapshot much faster, as we only have to change the paths that the VM's domain XML points to.

- The VM will only be frozen if the `quiesceVM` parameter is `true`.

2.0.2. Limitations

- This proposal will not change the process for non-file-based storage, such as LVM and RBD (Ceph).

- The snapshots will be external, that is, they will generate a new file when created, instead of internal, where the snapshots are stored inside the volume file itself. This is a limitation of QEMU: active QEMU domains require external disk snapshots when the VM state is not saved.

- As Libvirt does not support reverting external snapshots, the reversion will have to be done by ACS while the VM is stopped.

- To avoid adding complexity to volume migration, we will not allow migrating a volume while its VM has disk-only VM snapshots; this limitation is already present in the current implementation for VM snapshots with memory, although for different reasons.

- After taking a disk-only VM snapshot, attaching/detaching volumes will not be permitted. This limitation already exists for other VM snapshot types.

- This feature will be mutually exclusive with volume snapshots. Taking a volume snapshot of a volume attached to a VM with disk-only VM snapshots will fail, and the user will not be able to create snapshot policies for that volume either. Conversely, if any of the VM's volumes has a snapshot or snapshot policy, the user will not be able to create a disk-only VM snapshot. There are multiple reasons to limit the interaction between these features:

- When reverting volume snapshots, we have two choices: create a new tree for that volume with the reverted snapshot as its base, or invalidate the VM snapshots. The first option adds complexity to the implementation by adding many trees that have to be taken care of, while the second option will make the user lose data.

- The KVM incremental volume snapshot feature uses an API that is not compatible with `virDomainSnapshotCreateXML` (the API used for this feature). With this in mind, allowing volume and disk-only VM snapshots to coexist would create failure edge cases; for example, if the user has a snapshot policy and `kvm.incremental.snapshot` is changed to `true`, the volume snapshots will suddenly begin to fail.

- This feature will also be mutually exclusive with VM snapshots with memory. When reverting an external snapshot, all internal snapshots taken after the creation of the snapshot being reverted will be lost, causing data loss. As VM snapshots with memory use internal snapshots and this feature uses external snapshots, we will not allow both to coexist, to prevent data loss.
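The coexistence rules above reduce to a few checks. The sketch below is hypothetical: `Volume`, `Vm`, the check functions and the operation names are illustrative, not CloudStack classes or APIs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Volume:  # hypothetical view of a volume's snapshot state
    name: str
    has_volume_snapshots: bool = False
    has_snapshot_policy: bool = False


@dataclass
class Vm:  # hypothetical view of a VM's snapshot state
    volumes: List[Volume] = field(default_factory=list)
    has_memory_vm_snapshots: bool = False
    has_disk_only_vm_snapshots: bool = False


# Hypothetical operation names refused while the VM has disk-only VM snapshots.
BLOCKED_BY_DISK_ONLY = {
    "volume_snapshot", "snapshot_policy", "memory_vm_snapshot",
    "volume_migration", "attach_volume", "detach_volume",
}


def disk_only_snapshot_blockers(vm: Vm) -> List[str]:
    """Reasons to refuse a disk-only VM snapshot; an empty list means allowed."""
    reasons: List[str] = []
    for vol in vm.volumes:
        if vol.has_volume_snapshots:
            reasons.append(f"volume {vol.name} has volume snapshots")
        if vol.has_snapshot_policy:
            reasons.append(f"volume {vol.name} has a snapshot policy")
    if vm.has_memory_vm_snapshots:
        reasons.append("VM has snapshots with memory")
    return reasons


def operation_allowed(vm: Vm, operation: str) -> bool:
    """The reverse direction: operations blocked once disk-only snapshots exist."""
    return not (vm.has_disk_only_vm_snapshots and operation in BLOCKED_BY_DISK_ONLY)
```

Checking in both directions is what makes the features mutually exclusive: neither can be introduced while the other is present.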

2.1. Disk-only VM Snapshot Creation

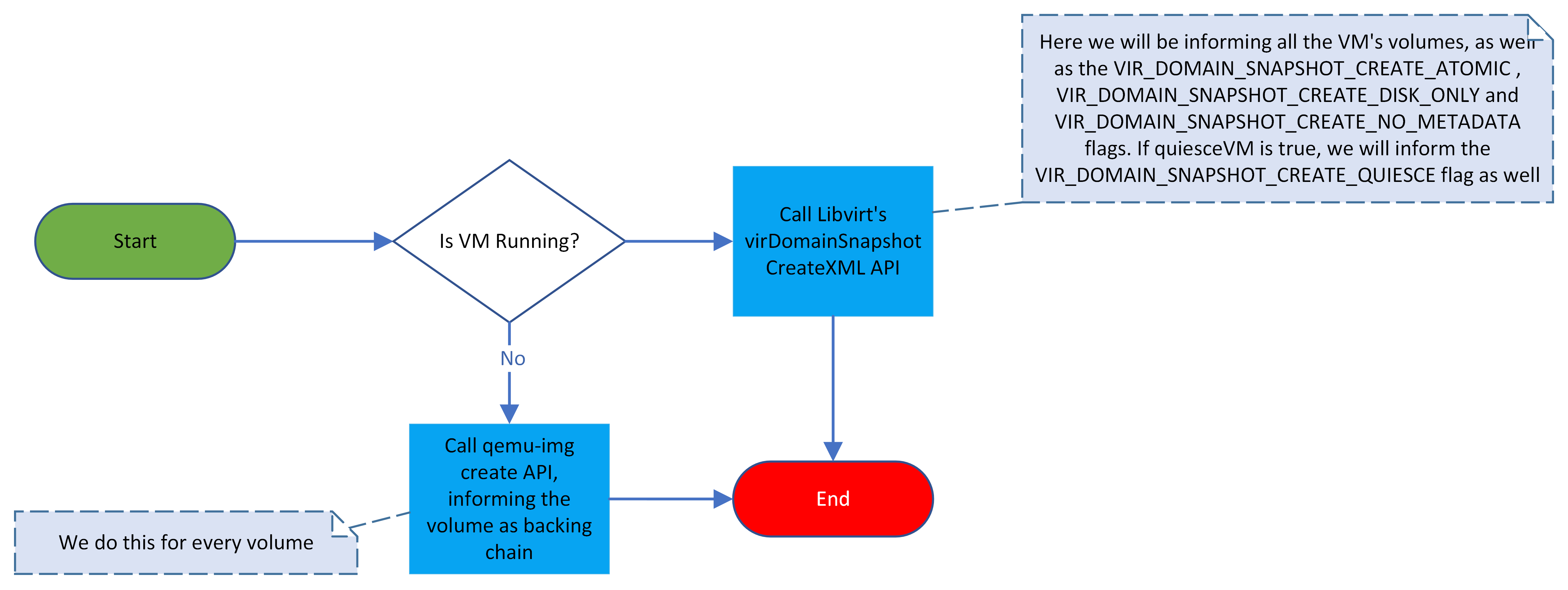

The proposed disk-only VM snapshot creation workflow is summarized in the following diagram.

- If the VM is running, we will call the `virDomainSnapshotCreateXML` API, listing all of the VM's volumes with the `snapshot` attribute set to `external`, and using the flags:
  1. `VIR_DOMAIN_SNAPSHOT_CREATE_ATOMIC`: to make the snapshot atomic across all the volumes;
  2. `VIR_DOMAIN_SNAPSHOT_CREATE_DISK_ONLY`: to make the snapshot disk-only;
  3. `VIR_DOMAIN_SNAPSHOT_CREATE_NO_METADATA`: to tell Libvirt not to save any metadata for the snapshot. We do not need Libvirt to keep any metadata, as all the other processes regarding the VM snapshots will be done manually using `qemu-img`;
  4. `VIR_DOMAIN_SNAPSHOT_CREATE_QUIESCE`: if `quiesceVM` is `true`, this flag will be passed as well to keep the VM frozen during the snapshot process; once the snapshot is done, the VM will already be thawed.
- Otherwise, we will call `qemu-img create` for every volume of the VM to create a delta on top of the current file, which will become our snapshot.
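Both branches can be sketched with the Python standard library. This is a hypothetical illustration, not CloudStack's implementation: `snapshot_request` and `offline_snapshot_commands` are made-up helper names, the new deltas are assumed to be qcow2, and the flag values are those of libvirt's `virDomainSnapshotCreateFlags` enum.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

# Values from libvirt's virDomainSnapshotCreateFlags enum.
VIR_DOMAIN_SNAPSHOT_CREATE_NO_METADATA = 1 << 2
VIR_DOMAIN_SNAPSHOT_CREATE_DISK_ONLY = 1 << 4
VIR_DOMAIN_SNAPSHOT_CREATE_QUIESCE = 1 << 6
VIR_DOMAIN_SNAPSHOT_CREATE_ATOMIC = 1 << 7


def snapshot_request(name: str, new_deltas: Dict[str, str], quiesce_vm: bool) -> Tuple[str, int]:
    """Running VM: XML and flags for one atomic, external, disk-only snapshot.

    new_deltas maps each disk target (e.g. "vda") to the path of the delta
    that libvirt should create on top of that disk's current file.
    """
    root = ET.Element("domainsnapshot")
    ET.SubElement(root, "name").text = name
    disks = ET.SubElement(root, "disks")
    for target, delta_path in new_deltas.items():
        disk = ET.SubElement(disks, "disk", name=target, snapshot="external")
        ET.SubElement(disk, "source", file=delta_path)
    flags = (VIR_DOMAIN_SNAPSHOT_CREATE_ATOMIC
             | VIR_DOMAIN_SNAPSHOT_CREATE_DISK_ONLY
             | VIR_DOMAIN_SNAPSHOT_CREATE_NO_METADATA)
    if quiesce_vm:  # only freeze the guest when the user asked for it
        flags |= VIR_DOMAIN_SNAPSHOT_CREATE_QUIESCE
    return ET.tostring(root, encoding="unicode"), flags


def offline_snapshot_commands(current_files: Dict[str, str],
                              new_deltas: Dict[str, str]) -> List[List[str]]:
    """Stopped VM: one `qemu-img create` per volume, backed by its current file."""
    return [
        ["qemu-img", "create", "-f", "qcow2",
         "-b", current_files[target], "-F", "qcow2", new_deltas[target]]
        for target in new_deltas
    ]
```

On a running VM, the returned pair would be handed to libvirt, for example through libvirt-python's `virDomain.snapshotCreateXML(xml, flags)`. Putting every volume in a single request with the atomic flag is what replaces the current one-volume-at-a-time loop.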

Unlike the volume snapshots, the disk-only VM snapshots are not designed to be backups; thus, we will not copy the disk-only VM snapshots to another directory or storage. We want the disk-only snapshots to be fast to revert whenever needed, and keeping them in the volumes' backing chains is the best way to achieve this.

Currently, the VM is always frozen and resumed during the snapshot process, regardless of the value of the `quiesceVM` parameter. This will change: the VM will only be frozen if `quiesceVM` is `true`. Furthermore, the downtime of the proposed process should be orders of magnitude smaller than that of the current implementation, as nothing will be copied while the VM is frozen.

During the VM snapshot process, the snapshot job is queued alongside the other VM jobs; therefore, we do not have to worry about the VM being stopped/started during the snapshot, as the jobs of a given VM are processed sequentially. Furthermore, after a VM snapshot is created, ACS already forbids detaching volumes from the VM, so we do not need to worry about this case either.

2.2. VM Snapshot Reversion

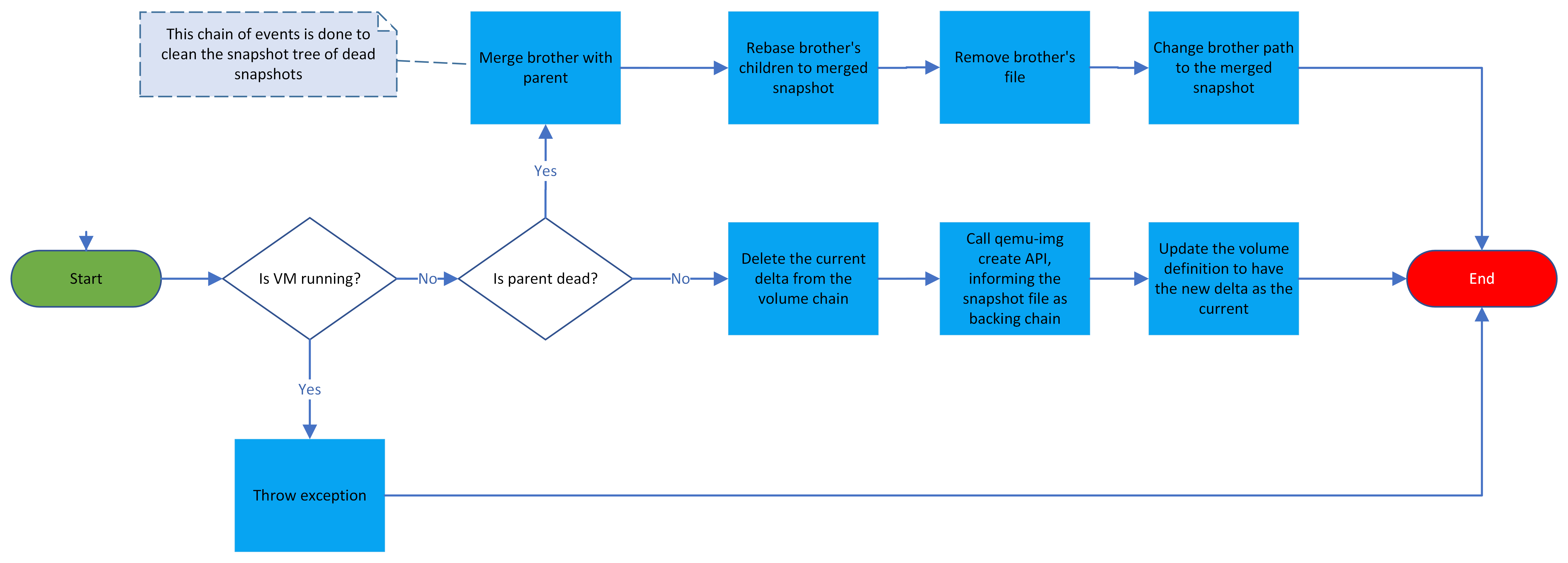

The proposed disk-only VM snapshot restore process is summarized in the diagram below. The process will be repeated for all the VM's volumes.

1. If the VM is running, we throw an exception; otherwise, we continue;

2. If the current delta's parent is dead, we:
   - merge our sibling and our parent;
   - rebase our sibling's children (if any) to point to the merged snapshot;
   - remove our sibling's old file, as it is now empty;
   - change our sibling's path in the DB so that it points to the merged file;

3. Delete the current delta that is being written to. It only contains changes on the disk that will be reverted as part of the process;

4. Create a new delta on top of the snapshot that is being reverted to, so that we do not write directly into it and are able to return to it again later;

5. Update the volume path to point to the newly created delta.
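The per-volume revert steps can be sketched over an in-memory tree. This is a hypothetical Python model, not CloudStack code: `Delta`, `revert_volume` and the operation strings are illustrative, qcow2 deltas are assumed, and step 2 is applied only when the dead parent has exactly one other child (an assumption based on the wording "our sibling"). The rebase uses `qemu-img rebase -u` because, after the commit, the parent file holds exactly the data the sibling used to expose, so only the backing-file pointer has to change.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(eq=False)  # nodes compare by identity
class Delta:
    name: str
    path: str
    parent: Optional[Delta] = field(default=None, repr=False)
    children: List[Delta] = field(default_factory=list, repr=False)
    dead: bool = False  # removed by the user but still on primary storage

    def new_child(self, name: str, path: str) -> Delta:
        child = Delta(name, path, parent=self)
        self.children.append(child)
        return child


def _replace_in_parent(old: Delta, new: Delta) -> None:
    """Put `new` where `old` was in old's parent (or make it a root)."""
    new.parent = old.parent
    if old.parent is not None:
        siblings = old.parent.children
        siblings[siblings.index(old)] = new


def revert_volume(current: Delta, target: Delta, new_path: str,
                  vm_running: bool) -> Tuple[Delta, List[str]]:
    """Revert one volume to `target`; returns the new current delta and the ordered operations."""
    if vm_running:  # step 1
        raise RuntimeError("stop the VM before reverting a disk-only VM snapshot")
    ops: List[str] = []
    parent = current.parent
    siblings = [c for c in parent.children if c is not current] if parent is not None else []
    # Step 2 (assumed to apply only with exactly one sibling).
    if parent is not None and parent.dead and len(siblings) == 1:
        sibling = siblings[0]
        ops.append(f"qemu-img commit {sibling.path}")  # sibling's data into parent's file
        for child in sibling.children:                  # repoint children at the merged file
            ops.append(f"qemu-img rebase -u -b {parent.path} -F qcow2 {child.path}")
        ops.append(f"rm {sibling.path}")
        sibling.path = parent.path                       # DB update
        _replace_in_parent(parent, sibling)              # the dead parent leaves the tree
    # Step 3: discard the current delta and the changes it holds.
    ops.append(f"rm {current.path}")
    if parent is not None:
        parent.children.remove(current)
    # Step 4: new delta on top of the target, so the target itself stays untouched.
    ops.append(f"qemu-img create -f qcow2 -b {target.path} -F qcow2 {new_path}")
    new_current = target.new_child("current", new_path)
    # Step 5: the volume now points at the new delta.
    ops.append(f"update volume path to {new_path}")
    return new_current, ops
```

Running it on the edge case described below (a dead `Snap 1` with children `Current` and `Snap 2`, reverting to `Snap 2`) yields a single `qemu-img commit` of `Snap 2` into `Snap 1`'s file, after which `Snap 2` owns that file and `Snap 1` is gone.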

The proposed process will allow us to go back and forth between snapshots if need be. Furthermore, this process will be much faster than reverting a volume snapshot, as the bottleneck here is deleting the top delta that will no longer be used, which should be much faster than copying a volume snapshot from another storage and replacing the old volume.

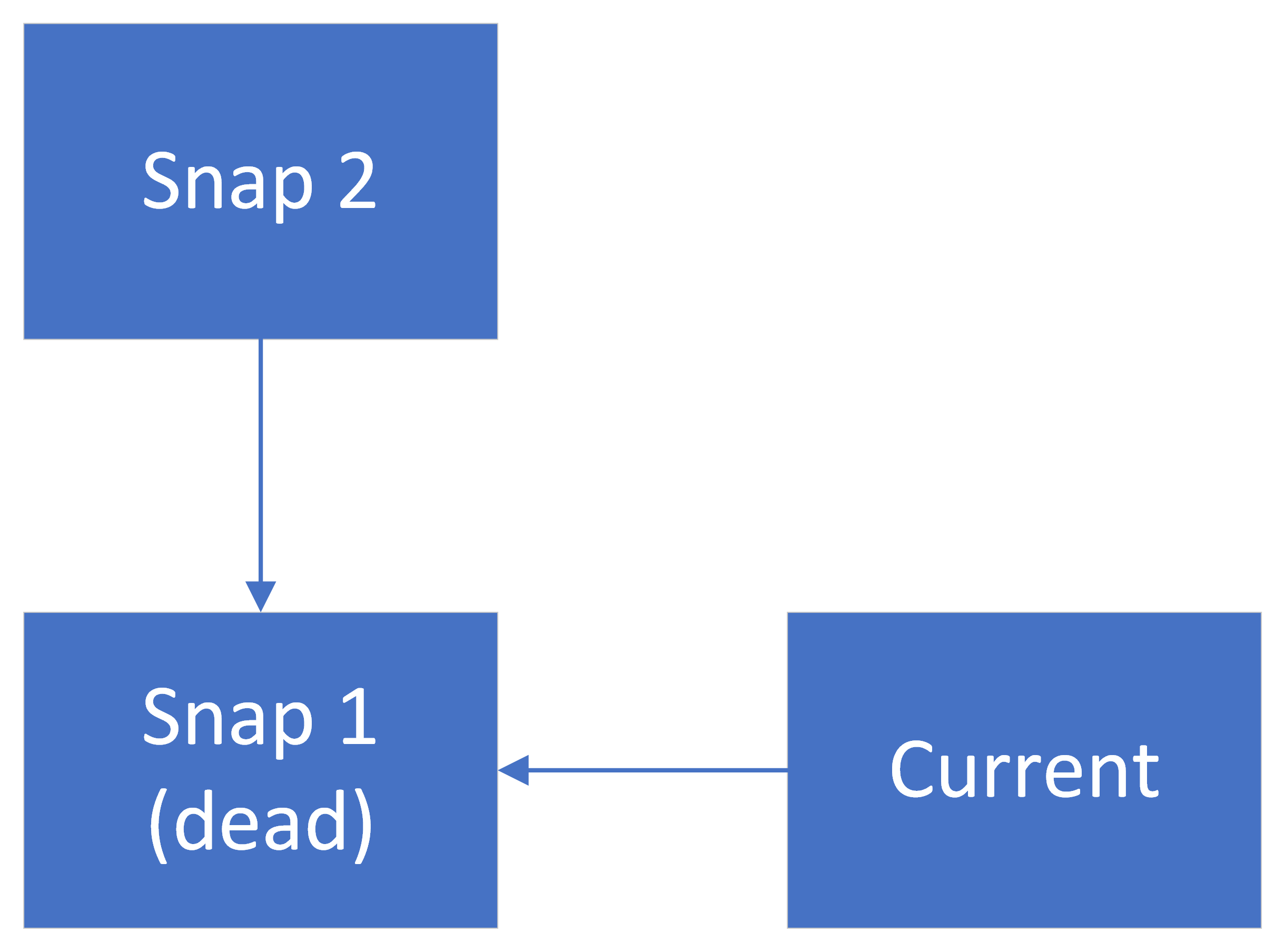

The process done in step 2 was added to cover an edge case where dead snapshots would be left in the storage until the VM was expunged. Here's a simple example of why it's needed:

- Let's imagine the image below represents the current state of the snapshot tree, with `Current` being the current delta that is being written to, and `Snap 1` the parent of `Current` and `Snap 2`. If we delete `Snap 1`, following the diagram in the snapshot deletion section, we can see that it will be marked as destroyed, but will be neither deleted nor merged, as none of these operations can be done in this situation.

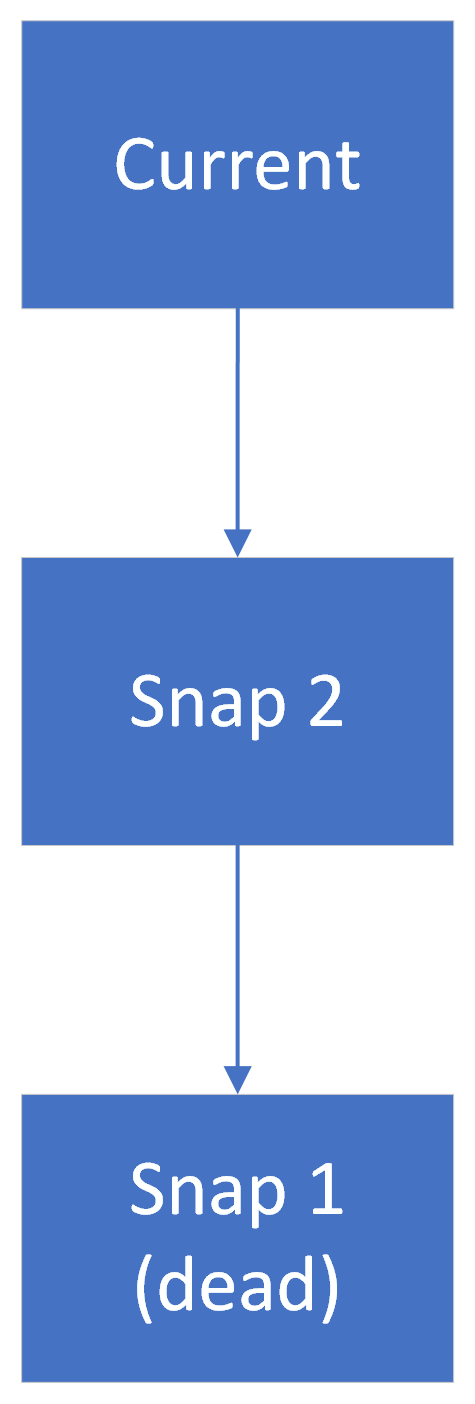

- Then, we decide to revert to `Snap 2`; thus, the old `Current` will be deleted and a new delta will be created on top of `Snap 2`. The problem is that now we have a dead snapshot that will not be removed by any other process, as the user will not see it, and none of the snapshot deletion processes will delete it:

- Now, adding step 2 of the proposed reversion workflow: during reversion, we will merge `Snap 1` and `Snap 2` (using `qemu-img commit`) and be left with only `Snap 2` and our current delta:

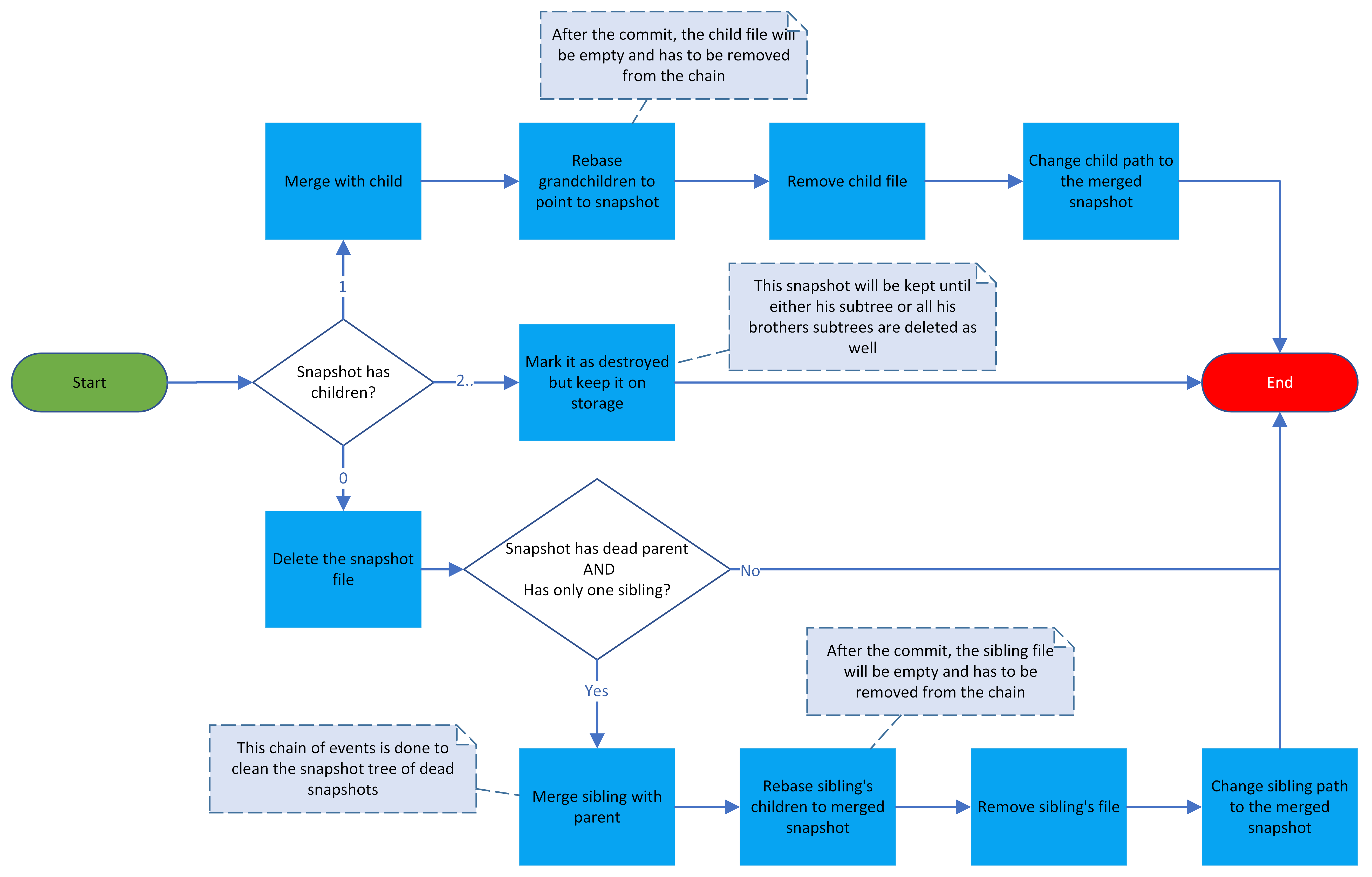

2.3. VM Snapshot Deletion

In order to keep the snapshot tree consistent and with the fewest dead nodes, the snapshot deletion process will always try to manipulate the snapshot tree to remove any unneeded nodes while keeping the ones that are still needed, even if they were removed by the user. In these cases, they will be marked as deleted in the DB, but will remain on the primary storage until they can be merged with another snapshot. The diagram below summarizes the snapshot deletion process; this process will be repeated for all of the VM's volumes:

As this diagram has several branches, each branch will be explained separately:

- If our snapshot has a single child:
  - we merge it with its child: if the VM is running, we use Libvirt's `virDomainBlockCommit`; otherwise, we use `qemu-img commit` to commit the child into it;
  - we rebase its grandchildren (if any) onto the merged file. If the VM is running, the `virDomainBlockCommit` API already does this for us;
  - we remove the child's old file;
  - we change the child's path in the DB to the merged file.

- If our snapshot has more than one child:
  - we mark it as removed in the DB, but keep it in storage until it can be deleted.

- If our snapshot has no children:
  - we delete the snapshot file;
  - if the snapshot has a dead parent and only one sibling:
    - we merge the sibling with its parent: if the VM is running, we use Libvirt's `virDomainBlockCommit`; otherwise, we use `qemu-img commit` to commit the sibling into the parent;
    - we rebase the sibling's children onto the merged file. If the VM is running, the `virDomainBlockCommit` API already does this for us;
    - we remove the sibling's old file;
    - we change the sibling's path in the DB to the merged file.
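The three deletion branches can be sketched in the same kind of hypothetical tree model (not CloudStack code). The operation strings only name the command or libvirt API the spec calls for; `top=`/`base=` is an illustrative label, not the real `virDomainBlockCommit` signature. The snapshot passed in is assumed never to be the current delta, and qcow2 backing files are assumed for the rebase.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # nodes compare by identity
class Delta:
    name: str
    path: str
    parent: Optional[Delta] = field(default=None, repr=False)
    children: List[Delta] = field(default_factory=list, repr=False)
    dead: bool = False  # removed by the user but still on primary storage

    def new_child(self, name: str, path: str) -> Delta:
        child = Delta(name, path, parent=self)
        self.children.append(child)
        return child


def _merge_into_parent(node: Delta, vm_running: bool, ops: List[str]) -> None:
    """Commit `node` into its parent's file; `node` then takes over that file
    and the parent disappears from the tree."""
    parent = node.parent
    if vm_running:
        # Per the spec, the block commit also repoints node's children.
        ops.append(f"virDomainBlockCommit top={node.path} base={parent.path}")
    else:
        ops.append(f"qemu-img commit {node.path}")
        for child in node.children:
            ops.append(f"qemu-img rebase -u -b {parent.path} -F qcow2 {child.path}")
    ops.append(f"rm {node.path}")
    node.path = parent.path      # DB update: node now points at the merged file
    node.parent = parent.parent  # the parent leaves the tree
    if parent.parent is not None:
        siblings = parent.parent.children
        siblings[siblings.index(parent)] = node


def delete_snapshot(snap: Delta, vm_running: bool) -> List[str]:
    """Delete one snapshot (never the current delta) from a volume's tree."""
    ops: List[str] = []
    if len(snap.children) == 1:        # merge with the only child
        _merge_into_parent(snap.children[0], vm_running, ops)
    elif len(snap.children) > 1:       # still needed by several branches
        snap.dead = True
        ops.append(f"mark {snap.name} as removed in DB")
    else:                              # leaf: drop the file
        ops.append(f"rm {snap.path}")
        parent = snap.parent
        if parent is not None:
            parent.children.remove(snap)
            # A dead parent left with a single child can now be merged away.
            if parent.dead and len(parent.children) == 1:
                _merge_into_parent(parent.children[0], vm_running, ops)
    return ops
```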

The proposed deletion process leaves room for one edge case, which can lead to a dead node that would only be removed when the volume is deleted: if we revert to a snapshot that has one other child and then delete it, the above algorithm will only mark the deleted snapshot as removed in the DB. If we then revert to another snapshot, a dead node (the previously deleted snapshot) will be left on the tree and would never be removed. To solve this edge case, when this specific situation happens, we will do as explained in the snapshot reversion section and merge the dead node with its child.

2.4. Template Creation from Volume

The current process of creating a template from a volume does not need to be changed: we already convert the volume when creating a template, so the volume's backing chain will be merged into the template.
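To illustrate why no change is needed, the sketch below models each file of the chain as a map from cluster index to data (a hypothetical simplification, not how qcow2 stores clusters). Reading the top delta falls through the chain, and converting it yields one image holding every visible cluster. `convert_command` assumes the conversion is a plain `qemu-img convert` to qcow2; without the `-B` option, `qemu-img convert` writes a standalone image rather than a new delta.

```python
from typing import Dict, List, Optional

Layer = Dict[int, bytes]  # cluster index -> data written in that file (simplified)


def read_cluster(chain: List[Layer], index: int) -> Optional[bytes]:
    """`chain` is ordered top delta first; the first file holding the cluster wins."""
    for layer in chain:
        if index in layer:
            return layer[index]
    return None  # never written: a real image would read zeroes here


def flatten(chain: List[Layer]) -> Layer:
    """Guest-visible content of the top delta as one standalone layer."""
    merged: Layer = {}
    for layer in reversed(chain):  # base first, so newer data overwrites older
        merged.update(layer)
    return merged


def convert_command(top_delta: str, template_path: str) -> List[str]:
    # No -B option: the output has no backing file, so the chain is flattened.
    return ["qemu-img", "convert", "-O", "qcow2", top_delta, template_path]


base = {0: b"boot", 1: b"old"}
snap = {1: b"new"}
current = {2: b"log"}
chain = [current, snap, base]
```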

Hi @JoaoJandre, there is similar functionality for VM snapshots without memory, introduced in this PR, and this PR allows it for NFS/Local storage. It doesn't support VM snapshots for stopped VMs, but I think that would be a small change.

What I got from libvirt docs and a few forums is that using the flag VIR_DOMAIN_SNAPSHOT_CREATE_QUIESCE is discouraged.

If flags includes VIR_DOMAIN_SNAPSHOT_CREATE_QUIESCE, then the libvirt will attempt to use guest agent to freeze and thaw all file systems in use within domain OS. However, if the guest agent is not present, an error is thrown. Moreover, this flag requires VIR_DOMAIN_SNAPSHOT_CREATE_DISK_ONLY to be passed as well. For better control and error recovery users should invoke virDomainFSFreeze manually before taking the snapshot and then virDomainFSThaw to restore the VM rather than using VIR_DOMAIN_SNAPSHOT_CREATE_QUIESCE.

You could probably make the use of virDomainFSFreeze/virDomainFSThaw depend on the value of the quiesceVm parameter and on the state of the VM (running/stopped).
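The suggestion above could look roughly like the following sketch. It assumes `dom` is a libvirt-python `virDomain`, whose `fsFreeze`, `fsThaw` and `snapshotCreateXML` methods exist; the function name is hypothetical and the flag value is taken from libvirt's `virDomainSnapshotCreateFlags` enum. The `try`/`finally` is the only error handling it adds: the guest is always thawed, even if the snapshot fails.

```python
VIR_DOMAIN_SNAPSHOT_CREATE_QUIESCE = 1 << 6  # value from libvirt's virDomainSnapshotCreateFlags


def create_snapshot_with_manual_freeze(dom, xml: str, flags: int, quiesce_vm: bool) -> None:
    """Disk-only snapshot of a *running* domain, freezing guest filesystems
    through the guest agent explicitly instead of passing the QUIESCE flag."""
    flags &= ~VIR_DOMAIN_SNAPSHOT_CREATE_QUIESCE  # freezing is handled below instead
    if not quiesce_vm:
        dom.snapshotCreateXML(xml, flags)
        return
    dom.fsFreeze()  # libvirt-python raises libvirtError if the guest agent is unavailable
    try:
        dom.snapshotCreateXML(xml, flags)
    finally:
        dom.fsThaw()  # always thaw, even if the snapshot failed
```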

Hello, @slavkap

I'm aware of the current functionality, but I was not aware that it was made to support NFS/Local storage. Regardless, I have listed (in the spec) a few other issues with it:

- It does not support VM snapshots for stopped VMs;

- The process is based on the volume snapshot, which is much slower as we take one snapshot at a time, instead of using one command for all the volumes;

- The VM is frozen (regardless of the user's orders) during the whole snapshot process, including the copy of the snapshots, which is a huge waste of time for the VM (this is made worse by the point above);

- The proposed implementation will not copy the snapshots, making the snapshot creation/reversion process much faster;

In any case, this feature will only be used for NFS/SMP/Local storage; for the other types of storage (such as RBD or iSCSI), the implementation introduced in #3724 will still be used.

Regarding the domain freeze/thaw, the quote you posted says "For better control and error recovery users should invoke virDomainFSFreeze manually before taking the snapshot and then virDomainFSThaw to restore the VM rather than using VIR_DOMAIN_SNAPSHOT_CREATE_QUIESCE." I looked at the freeze/thaw implementation, and there doesn't seem to be any error recovery attempt, so using it instead of the quiesce parameter does not seem any better. To be fair, I'm not sure what type of error recovery we could do; so, again, I don't see the point of using freeze/thaw.