apisix

apisix copied to clipboard

apisix copied to clipboard

bug: [file-logger] two log messages mix-output in one line(两条不同的日志混合在一行输出)

Current Behavior

I added file-logger plugins in global_rules, and I foud that two different log are mixed in one line. 我这里把file-logger插件添加到全局规则,我发现存在部分日志内容会把两个不同的日志消息混合在一行中输出。 I guess is it related to buffer size which is used to cache log message or something else ? 我猜测这个会不会用于缓存日志消息的buffer size或其他东西有关? My log message is more than 4K bytes for this case. 在这个bug中,我的日志信息都超过了4K字节大小。

Expected Behavior

I expected each log message should be output in sperated line. 我期望每条日志记录应该独立的输出到一行。

Error Logs

no error log, just the behavior. 没有错误日志, 只是看到了错误现象。

Steps to Reproduce

It happens accidently, and appears 1/1000 in my environment. 这是个偶发现象, 在我的环境中出现概率约为千分之一。

Environment

-

APISIX version 2.15.0: apisix is run in docker with image [apache/apisix:2.15.0-centos]

-

Operating system (run

uname -a): Linux gateway-web-2-15-0-86687b9f68-xzhdc 4.14.105-19-0024 #1 SMP Tue Jun 14 16:39:55 CST 2022 x86_64 x86_64 x86_64 GNU/Linux apisix is run in docker with image [apache/apisix:2.15.0-centos] -

OpenResty / Nginx version (run

openresty -Vornginx -V): nginx version: openresty/1.21.4.1 -

etcd version, if relevant (run

curl http://127.0.0.1:9090/v1/server_info): {"version":"2.15.0","etcd_version":"3.4.0","id":"2a887545-0b32-4060-bd5d-5db1057b9db2","boot_time":1661732855,"hostname":"gateway-web-2-15-0-86687b9f68-xzhdc"} -

APISIX Dashboard version, if relevant: v2.13.0

-

Plugin runner version, for issues related to plugin runners: matched to apisix v2.15.0

-

LuaRocks version, for installation issues (run

luarocks --version):

no error log, just the behavior.

We must at least know the logs, without which we cannot imagine what the errors are like.

您的邮件我已收到,我会尽快给您回复,谢谢!

no error log, just the behavior.

We must at least know the logs, without which we cannot imagine what the errors are like.

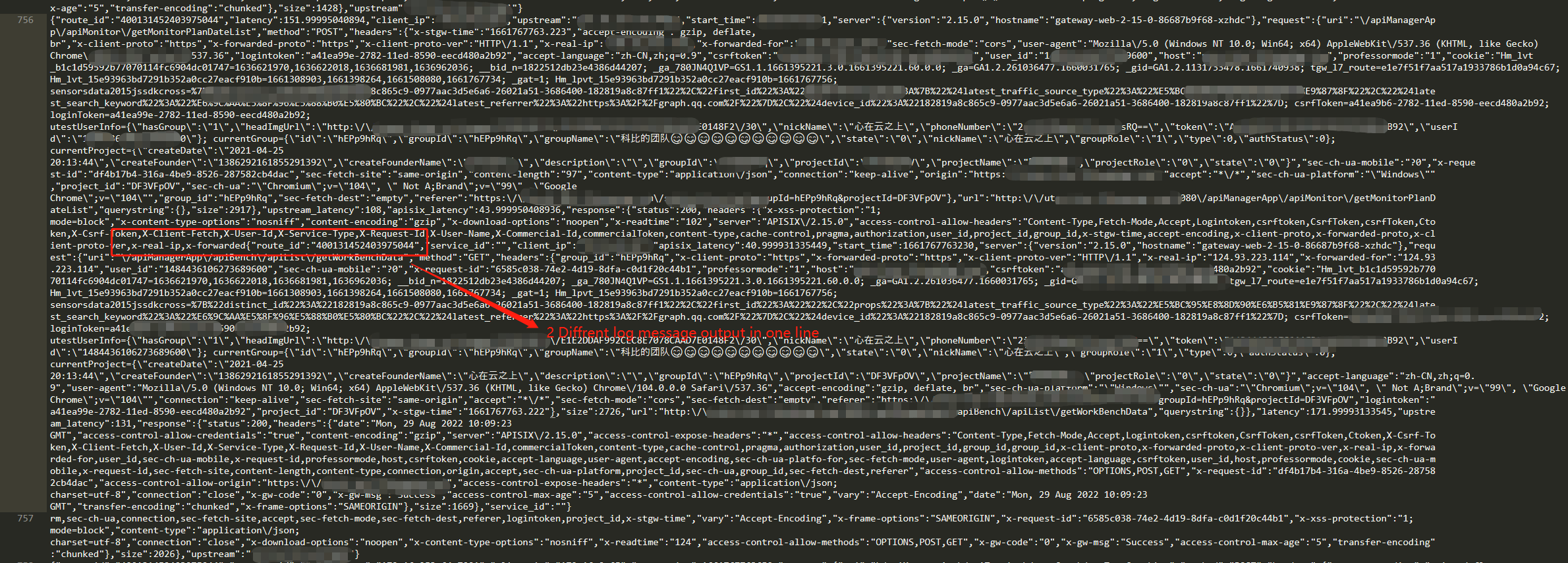

file-logger run with no error. Just output two diffrent log message in one line. Message has been output to file but in wrong format. Here is the log file I got (file-logger output file):

Maybe two diffrent worker flush each log message to file at the same time, and log message size is larger then log cache buffer. Perhaps it happens like this: message A's size is larger than the cache size, so part of message flush to file, then message B in another worker begin to flush. After that message A continue flush its remained message. So I think we need concurrency control when file-logger flush to file.

这里file-logger并没有执行报错, 只是将两个不通的日志混合在一行输出了,造成日志格式错误。相面是我得到的日志截图(对敏感数据进行了处理)。 我猜测发生过程是这样的: 一个工作进程将日志A的消息内容超过了用来缓存消息的buffer,当A开始向文件flush时,首先将前半部分内容flush到文件中(因为buffer size小于消息大小,所以只能flush部分内容)。此时另一个工作进程开始将日志B向文件flush。最后将日志A的剩余消息写入到文件中。 因此,我们认为在file-logger在向文件写数据的时候需要并发控制逻辑。

@guoqqqi PTAL

Hello, @kizy008 Can you provide a reproduction step? The scenario as I understand it is:

- Create a global plugin

file-logger - At least two routes exist

- Concurrent requests for routes

- Ensure that the

file-loggerwrites a message larger than the default buffer

Is there anything else you would like to add to the above?

The file:flush calls fflush() and it's thread-safe.

Hello, @kizy008 Can you provide a reproduction step? The scenario as I understand it is:

- Create a global plugin

file-logger- At least two routes exist

- Concurrent requests for routes

- Ensure that the

file-loggerwrites a message larger than the default bufferIs there anything else you would like to add to the above?

Yes, this is all I have, and it occurs with very low probability.

I can reproduce the problem now, the condition is that the message written to log larger than the default buffer.

@monkeyDluffy6017 What did you mean by saying the "default buffer"? file-logger doesn't like the Nginx error log printer which has the 4K hard limitation.

luajit's io interface io.write will use fwrite, for fwrite in glibc, it does have a buff, and we can set buff with setvbuf.

And for this problem, we plan to replace luajit's io.write with syscall write according to @spacewander, which does not have a buffer, or use no buffer model provided by glibc.

luajit's io interface

io.writewill usefwrite, forfwritein glibc, it does have a buff, and we can set buff withsetvbuf.

But what about the default buffer size?

/* Allocate a file buffer, or switch to unbuffered I/O. Streams for

TTY devices default to line buffered. */

int

_IO_file_doallocate (_IO_FILE *fp)

{

_IO_size_t size;

char *p;

struct stat64 st;

size = _IO_BUFSIZ;

if (fp->_fileno >= 0 && __builtin_expect (_IO_SYSSTAT (fp, &st), 0) >= 0)

{

if (S_ISCHR (st.st_mode))

{

/* Possibly a tty. */

if (

#ifdef DEV_TTY_P

DEV_TTY_P (&st) ||

#endif

local_isatty (fp->_fileno))

fp->_flags |= _IO_LINE_BUF;

}

#if _IO_HAVE_ST_BLKSIZE

if (st.st_blksize > 0 && st.st_blksize < _IO_BUFSIZ)

size = st.st_blksize;

#endif

}

p = malloc (size);

if (__glibc_unlikely (p == NULL))

return EOF;

_IO_setb (fp, p, p + size, 1);

return 1;

}

From the glibc code, we know that the buffer vary from os, it's default size depend on macro BUFSIZ, the BUFSIZ is different on different os, and if the file bulk size is smaller than the BUFSIZ, it will just allocate the file bulk size.

I think it happens by accident. Because the issuse (repeat history log in every log file) here #7899 , So I found the log message in every log file. It did not occur any more recently.

您的邮件我已收到,我会尽快给您回复,谢谢!

我这里补充一个讨论,[file-logger] duplicate log, new log file contains history log message #7902 https://github.com/apache/apisix/discussions/7902 我的场景中, 新生成的日志文件包含了历史的日志记录,原因是日志存储使用了腾讯云的COS,具体可参考上面的discussions; 如果你也遇到类似的问题可以参考一下。