add: container securityContext not available in podSecurityContext

This PR is a small change to the Airflow Helm chart.

What: Deployments can define security settings in their manifests at two levels: pod and container. However, some settings can only be configured at one of the two levels (https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.23/#securitycontext-v1-core). This PR sets a default container securityContext that disallows privilege escalation and drops all POSIX capabilities. These are, and should be, standard settings in Kubernetes. It also makes it possible to run Airflow in a Kubernetes environment without PSP (PodSecurityPolicy, to be removed in v1.25: https://kubernetes.io/docs/concepts/security/pod-security-policy/) but with OpenPolicyAgent (OPA; a, or possibly the, PSP substitute) enforcing the same constraints as a restricted PSP.

Why: This missing configuration prevents Airflow from being used with the plain upstream Helm chart without modifications or extra maintenance. This applies especially when the restricted policy is enforced through OPA. The settings in this PR (allowPrivilegeEscalation and capabilities) exist only at container level (securityContext), so they cannot be inherited from podSecurityContext (pod level).

Problem: There is already a securityContext in values.yaml; however, since it applies at pod level, it should really be called podSecurityContext, but it isn't. To avoid breaking Airflow's backwards compatibility, this PR hardcodes the respective capabilities at container level for statsd, scheduler and webserver.

The other option would be to introduce a containerSecurityContext in values.yaml, which is a made-up name, since at container level it is commonly called securityContext.

The benefit in either case would be a more secure deployment.
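As a rough illustration of the two container-level defaults described above, the sketch below writes the proposed container securityContext as a plain Python dict and checks a securityContext against only those two rules of a restricted-style policy. The names `DEFAULT_CONTAINER_SECURITY_CONTEXT` and `violations` are hypothetical; real OPA or Pod Security Standards "restricted" policies check more fields (for example runAsNonRoot and seccompProfile), which this sketch deliberately ignores.

```python
from typing import Any, Dict, List

# Hypothetical default mirroring the two container-level settings proposed in this PR.
DEFAULT_CONTAINER_SECURITY_CONTEXT: Dict[str, Any] = {
    "allowPrivilegeEscalation": False,
    "capabilities": {"drop": ["ALL"]},
}


def violations(container_sc: Dict[str, Any]) -> List[str]:
    """Return which of the two checked rules a container securityContext breaks."""
    problems = []
    # Restricted-style policies require an explicit false; an unset field does not pass.
    if container_sc.get("allowPrivilegeEscalation") is not False:
        problems.append("allowPrivilegeEscalation must be false")
    # Tolerate a missing or null capabilities/drop entry.
    dropped = (container_sc.get("capabilities") or {}).get("drop") or []
    if "ALL" not in dropped:
        problems.append("capabilities.drop must include ALL")
    return problems


print(violations(DEFAULT_CONTAINER_SECURITY_CONTEXT))  # []
print(violations({"runAsUser": 50000}))  # both rules fail
```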


I could not find any related issue at first sight.


Testing: a simple helm lint . at chart level, as well as a successful deployment.

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /Users/christophfraundorfer/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /Users/christophfraundorfer/.kube/config

==> Linting .

1 chart(s) linted, 0 chart(s) failed

Congratulations on your first Pull Request and welcome to the Apache Airflow community! If you have any issues or are unsure about anything, please check our Contribution Guide (https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst). Here are some useful points:

- Pay attention to the quality of your code (flake8, mypy and type annotations). Our pre-commits will help you with that.

- In case of a new feature, add useful documentation (in docstrings or in the docs/ directory). Adding a new operator? Check this short guide. Consider adding an example DAG that shows how users should use it.

- Consider using the Breeze environment for testing locally; it's a heavy Docker setup, but it ships with a working Airflow and a lot of integrations.

- Be patient and persistent. It might take some time to get a review or get the final approval from Committers.

- Please follow the ASF Code of Conduct for all communication, including (but not limited to) comments on Pull Requests, the mailing list and Slack.

- Be sure to read the Airflow Coding style.

Apache Airflow is a community-driven project and together we are making it better 🚀. In case of doubts, contact the developers via the mailing list or Slack: https://s.apache.org/airflow-slack

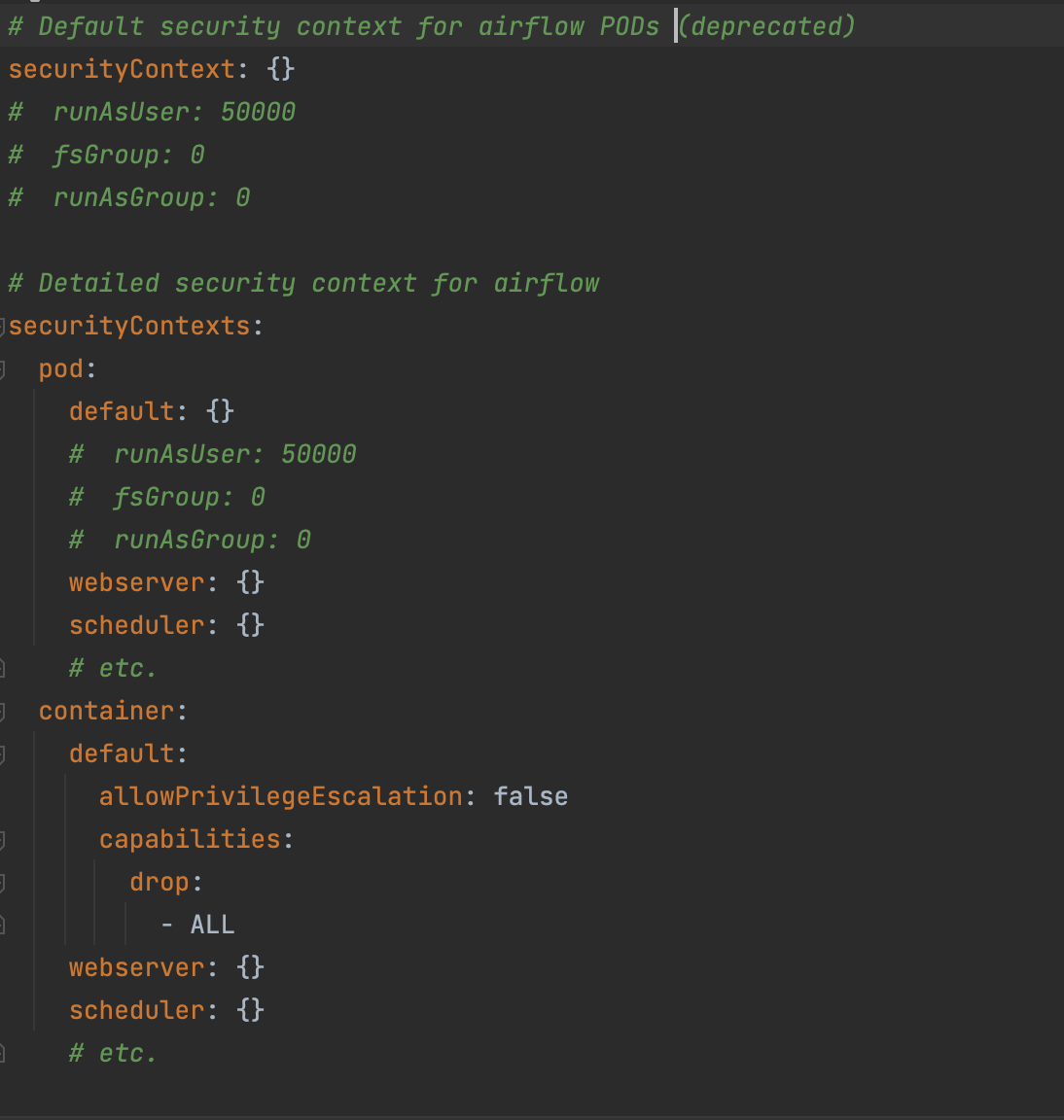

I'd say that a better approach would really be to have two separate contexts. Rather than adding "containerSecurityContext", maybe simply introduce values/securityContexts/pod and values/securityContexts/container as a slightly more detailed structure in values, allowing more fine-grained security context configuration (while keeping the deprecated default).

Something like:

I think that would be much more versatile and rather easy to configure (more detailed values could be merged into the defaults).
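To make "more detailed values could be merged into the defaults" concrete, here is a minimal Python sketch of a recursive merge in which user-supplied values override chart defaults key by key: nested dicts are merged, anything else is replaced. This only models the idea, roughly in the spirit of how Helm coalesces user values over a chart's values.yaml; the function name `deep_merge` is hypothetical, and the contents under securityContexts.pod and securityContexts.container are illustrative.

```python
import copy
from typing import Any, Dict


def deep_merge(defaults: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict: nested dicts merge recursively, other override values replace defaults."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Illustrative chart defaults following the proposed securityContexts layout.
chart_defaults = {
    "securityContexts": {
        "pod": {},
        "container": {
            "allowPrivilegeEscalation": False,
            "capabilities": {"drop": ["ALL"]},
        },
    }
}
# A user only overrides the pod level; the container defaults survive the merge.
user_values = {"securityContexts": {"pod": {"runAsUser": 50000, "fsGroup": 0}}}

print(deep_merge(chart_defaults, user_values))
```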

Updated the change, can you check it?

Is the change how you imagined it, @potiuk?

Yep it looks good :). I am not sure if there is an easy way to "warn" if you use the deprecated configuration (I think this is the only change that I would like to see here) - and of course some tests would be useful. @jedcunningham @dstandish - WDYT?

Deprecation warnings happen in NOTES, e.g: https://github.com/apache/airflow/blob/178af9d24772a8866ac55d25eeb48bed77337031/chart/templates/NOTES.txt#L160-L166

This should be expanded to cover all of the components as well.
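The check behind such a NOTES.txt warning can be sketched in Python: warn for the deprecated top-level securityContext and for each component that still sets the legacy securityContext key. The component list, the function name `deprecation_warnings` and the message wording are hypothetical, not the chart's actual NOTES.txt text.

```python
from typing import Any, Dict, Iterable, List

# Hypothetical component list; the review asks for this to cover all components.
COMPONENTS = ("scheduler", "webserver", "statsd")


def deprecation_warnings(values: Dict[str, Any], components: Iterable[str] = COMPONENTS) -> List[str]:
    """Collect one warning per place where the legacy securityContext key is still set."""
    warnings = []
    if values.get("securityContext"):
        warnings.append("`securityContext` is deprecated, use `securityContexts.pod` instead.")
    for name in components:
        component = values.get(name) or {}
        if component.get("securityContext"):
            warnings.append(
                f"`{name}.securityContext` is deprecated, use `{name}.securityContexts.pod` instead."
            )
    return warnings


print(deprecation_warnings({"scheduler": {"securityContext": {"runAsUser": 50000}}}))
```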

I'd also like to see the component specific override come from the components config section, e.g. scheduler.securityContexts.pod instead of securityContexts.pod.scheduler.
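One plausible resolution order for this component-level override, sketched in Python: the component's own securityContexts.pod wins, then the deprecated component securityContext, then the global securityContexts.pod, then the deprecated global securityContext. This ordering and the function name `resolve_pod_security_context` are assumptions for illustration; the chart's actual helper templates may differ.

```python
from typing import Any, Dict


def resolve_pod_security_context(values: Dict[str, Any], component: str) -> Dict[str, Any]:
    """Pick the first non-empty pod security context, most specific first (assumed order)."""
    comp = values.get(component) or {}
    candidates = [
        (comp.get("securityContexts") or {}).get("pod"),    # e.g. scheduler.securityContexts.pod
        comp.get("securityContext"),                        # deprecated per-component key
        (values.get("securityContexts") or {}).get("pod"),  # global securityContexts.pod
        values.get("securityContext"),                      # deprecated global key
    ]
    for candidate in candidates:
        # An empty dict counts as "not set", like an empty map in a Helm `if`.
        if candidate:
            return candidate
    return {}


values = {
    "securityContexts": {"pod": {"runAsUser": 1}},
    "scheduler": {"securityContexts": {"pod": {"runAsUser": 2}}},
}
print(resolve_pod_security_context(values, "scheduler"))  # {'runAsUser': 2}
print(resolve_pod_security_context(values, "webserver"))  # {'runAsUser': 1}
```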

Hi, sorry for the late reply! what was added:

- added deprecation notes

- Modified values.yaml (webserver, scheduler, statsd)

- Modified deployments (webserver, scheduler, statsd)

- Modified values.schema (webserver, scheduler, statsd)

Tested with helm lint . and helm template . --debug - both compiled.
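Beyond helm lint and helm template, a rendered Deployment can also be checked programmatically. The sketch below walks a parsed Deployment manifest (a dict, as you would get by loading helm template output with a YAML parser) and collects each container's securityContext. The function name and the sample manifest are hypothetical; Airflow's own chart tests do this kind of lookup with their render_chart helper and jmespath.

```python
from typing import Any, Dict


def container_security_contexts(deployment: Dict[str, Any]) -> Dict[str, Dict[str, Any]]:
    """Map container name -> securityContext for a Deployment manifest ({} when unset)."""
    pod_spec = ((deployment.get("spec") or {}).get("template") or {}).get("spec") or {}
    containers = (pod_spec.get("containers") or []) + (pod_spec.get("initContainers") or [])
    return {c["name"]: c.get("securityContext") or {} for c in containers}


# Hypothetical, hand-written manifest standing in for real helm template output.
sample = {
    "kind": "Deployment",
    "spec": {"template": {"spec": {
        "securityContext": {"runAsUser": 50000},  # pod level, not collected here
        "containers": [{"name": "scheduler", "securityContext": {"allowPrivilegeEscalation": False}}],
        "initContainers": [{"name": "init-example"}],
    }}},
}

print(container_security_contexts(sample))
```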

Is this the way you imagine it? @potiuk @jedcunningham

Some static checks are still failing.

Hi @potiuk, can you point me in a direction on why the checks are not passing? I don't see the problem so far.

Hi @jedcunningham thanks a lot for the input! I tried to stick to your comments, but feel free to add some more :)

Hi, I am still getting this error during the image build: ERROR: denied: permission_denied: write_package

It does not seem to me that this has something to do with my changes.

Any advice?


Rebase please. GitHub seemed to have a rough day.

Conflict to solve :(

Tried to add the changes requested in #25985

Thanks @ChrisFraun for reconciling our 2 PRs into one (https://github.com/apache/airflow/pull/25985 and this one). These changes look good to me 👍

Running the tests now (sorry @ChrisFraun for this all taking so long; I just returned from holidays and am trying to catch up. Let's see how the tests look for this one, and I will give it another pass).

Errors?

@ChrisFraun there seems to be an error running the Helm chart:

parse error at (airflow/templates/webserver/webserver-deployment.yaml:65):

undefined variable "$containerSecurityContext"

@malthe shouldn't it be there with https://github.com/apache/airflow/pull/24588/files#diff-dc12e5cc8016c85ad9e662af7c8b8cfb49d91965225e94aa12146dc1f371fc86R824?

@ChrisFraun that snippet you linked to seems to point to a template definition, not a variable.

Variables are like this:

{{- $securityContext := include "airflowSecurityContext" (list . .Values.cleanup) }}

then for the above mentioned error it should be here: https://github.com/apache/airflow/pull/24588/files#diff-42f2a460a872212b923b5d7591c552a22816d768478a70d6943103221e08f11fR27, no?

conflicts, I am afraid.

Now some checks need to be fixed.

@potiuk pretty hard to grok these test failures. I found this though:

stderr = b'W1206 16:45:48.861465 1052 loader.go:221] Config not found: /files/.kube/config\nW1206 16:45:48.879883 1052 lo..." at <.securityContexts.pod>: nil pointer evaluating interface {}.pod\n\nUse --debug flag to render out invalid YAML\n'

What is the best way to iterate on this? I think there shouldn't be too much work left here.
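For context on the error above: in Go templates, a chained lookup such as .securityContexts.pod fails with "nil pointer evaluating interface {}.pod" when the intermediate key (securityContexts) is missing from the values being accessed, for example for a component whose section in values.yaml has no securityContexts entry. Defining the key for every component, or using a nil-safe lookup such as Sprig's dig function (available in recent Helm 3 releases), avoids it. The Python sketch below only models the two behaviors; `strict_get` and `dig` here are hypothetical helpers, not Helm code.

```python
from typing import Any, Dict


def strict_get(values: Dict[str, Any], *keys: str) -> Any:
    """Mimic a chained template lookup: stepping into a missing (nil) level fails."""
    current: Any = values
    for key in keys:
        if current is None:
            raise LookupError(f"nil pointer evaluating interface {{}}.{key}")
        current = current.get(key)
    return current


def dig(values: Dict[str, Any], *keys: str, default: Any = None) -> Any:
    """Nil-safe lookup in the spirit of Sprig's dig: return default if any level is missing."""
    current: Any = values
    for key in keys:
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


workers = {}  # hypothetical component values without a securityContexts key
print(dig(workers, "securityContexts", "pod", default={}))  # {}
```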


Well, first of all there are conflicts, so it needs a rebase and fixing them. And if the tests are the Python Helm unit tests, then there is nothing unusual: they are just regular pytest tests that you can iterate on, and the only difference from the standard Airflow unit tests is the requirements needed (and Helm being installed). Nothing special there.

And as usual (as described for all other parts of our test suite), the Helm unit tests have a separate chapter in our TESTING.rst that describes how they work and how you should run them locally: https://github.com/apache/airflow/blob/main/TESTING.rst#helm-unit-tests


Also, I am not the author of those Helm unit tests, but if you have an idea how to improve the output of the tests, I think it would be great. As explained in the docs, those tests are just standard pytest tests that render the charts using the helm binary with the specified values and verify that the rendered chart contains the expected values. The output can possibly be improved if you find it difficult to parse; any improvements there are most welcome. The chapter in the docs also explains the general structure of those tests. Maybe the problem is also that the charts have a bug and Helm rendering fails there; maybe that is a good opportunity to handle such failures better and in a more readable way? Would love to review an improvement there :D
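One possible direction for more readable failures, as a hedged sketch: run the command with subprocess.run in text mode and, on a non-zero exit, raise an error whose message is the decoded stderr rather than the repr of a bytes object, with klog-style warning lines (those starting with W plus an MMDD date, such as the "Config not found" kubeconfig warnings in the output above) filtered out as a heuristic. `run_helm` and `HelmRenderError` are hypothetical names, not part of Airflow's helm_template_generator.py.

```python
import re
import subprocess
from typing import Sequence

# klog-style warning lines start with "W" plus an MMDD date, e.g. "W0417 15:54:23.300556 ...".
KLOG_WARNING = re.compile(r"^W\d{4} ")


class HelmRenderError(RuntimeError):
    """Hypothetical error whose message is the command's stderr as readable text."""


def run_helm(cmd: Sequence[str], cwd: str = ".") -> str:
    """Run cmd and return its stdout, or raise HelmRenderError with cleaned-up stderr."""
    proc = subprocess.run(list(cmd), cwd=cwd, capture_output=True, text=True)
    if proc.returncode != 0:
        # Drop klog warning noise, keep everything else (e.g. the actual template error).
        useful = [line for line in proc.stderr.splitlines() if not KLOG_WARNING.match(line)]
        command = " ".join(cmd[:2])
        raise HelmRenderError(
            f"{command} exited with status {proc.returncode}:\n" + "\n".join(useful).strip()
        )
    return proc.stdout


# Example call (assumes helm is on PATH and the current directory is the chart):
# print(run_helm(["helm", "template", "release-name", ".",
#                 "--show-only", "templates/webserver/webserver-deployment.yaml"]))
```

Raising a dedicated exception keeps the pytest failure summary short: the first line names the command and exit status, and the rest is the template error itself.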

Still errors. I strongly recommend installing pre-commit and using it. Then the number of iterations you would have to do would be much smaller.

Unfortunately, the checks are still failing and I have no means of testing them locally.


What do you mean by no means of testing locally?

All our tests are specifically designed to be possible (and generally easy) to run locally; see https://github.com/apache/airflow/blob/main/TESTING.rst, which describes how to run every type of test we have locally.

Do you see a problem with running some kind of tests? Happy to help if you do. I just need to know what problem you have when following those instructions.

@potiuk I've been trying to figure out some of the test failures, but I'm not certain how to debug the failing tests. I'm using Breeze because I could not get those tests to work in a local venv. Maybe I'm just not used to these kinds of tests and am not looking at the right error output. How would you approach debugging something like the following:

root@7fa702d1f4c4:/opt/airflow# pytest tests/charts/test_airflow_common.py -n auto

============================================================================ test session starts =============================================================================

platform linux -- Python 3.7.16, pytest-7.3.1, pluggy-1.0.0 -- /usr/local/bin/python

cachedir: .pytest_cache

rootdir: /opt/airflow

configfile: pyproject.toml

plugins: timeouts-1.2.1, asyncio-0.21.0, capture-warnings-0.0.4, httpx-0.21.3, time-machine-2.9.0, instafail-0.5.0, rerunfailures-11.1.2, xdist-3.2.1, requests-mock-1.10.0, anyio-3.6.2, cov-4.0.0

asyncio: mode=strict

setup timeout: 0.0s, execution timeout: 0.0s, teardown timeout: 0.0s

[gw0] linux Python 3.7.16 cwd: /opt/airflow

[gw1] linux Python 3.7.16 cwd: /opt/airflow

[gw2] linux Python 3.7.16 cwd: /opt/airflow

[gw3] linux Python 3.7.16 cwd: /opt/airflow

[gw4] linux Python 3.7.16 cwd: /opt/airflow

[gw5] linux Python 3.7.16 cwd: /opt/airflow

[gw6] linux Python 3.7.16 cwd: /opt/airflow

[gw7] linux Python 3.7.16 cwd: /opt/airflow

[gw0] Python 3.7.16 (default, Apr 12 2023, 07:12:00) -- [GCC 10.2.1 20210110]

[gw4] Python 3.7.16 (default, Apr 12 2023, 07:12:00) -- [GCC 10.2.1 20210110]

[gw1] Python 3.7.16 (default, Apr 12 2023, 07:12:00) -- [GCC 10.2.1 20210110]

[gw3] Python 3.7.16 (default, Apr 12 2023, 07:12:00) -- [GCC 10.2.1 20210110]

[gw6] Python 3.7.16 (default, Apr 12 2023, 07:12:00) -- [GCC 10.2.1 20210110]

[gw2] Python 3.7.16 (default, Apr 12 2023, 07:12:00) -- [GCC 10.2.1 20210110]

[gw5] Python 3.7.16 (default, Apr 12 2023, 07:12:00) -- [GCC 10.2.1 20210110]

[gw7] Python 3.7.16 (default, Apr 12 2023, 07:12:00) -- [GCC 10.2.1 20210110]

gw0 [18] / gw1 [18] / gw2 [18] / gw3 [18] / gw4 [18] / gw5 [18] / gw6 [18] / gw7 [18]

scheduling tests via LoadScheduling

tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_default_image[apache/airflow@user-digest-user-tag-user-digest]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_dags_mount[dag_values0-expected_mount0]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_webserver_config_configmap_name_volume_mounts

tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_default_image[apache/airflow:user-tag-user-tag-None]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_image[apache/airflow@user-digest-None-user-digest]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_dags_mount[dag_values2-expected_mount2]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_disable_some_variables

tests/charts/test_airflow_common.py::TestAirflowCommon::test_global_affinity_tolerations_topology_spread_constraints_and_node_selector

[gw0] [ 5%] FAILED tests/charts/test_airflow_common.py::TestAirflowCommon::test_dags_mount[dag_values0-expected_mount0]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_dags_mount[dag_values1-expected_mount1]

[gw4] [ 11%] FAILED tests/charts/test_airflow_common.py::TestAirflowCommon::test_dags_mount[dag_values2-expected_mount2]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_dags_mount[dag_values3-expected_mount3]

[gw1] [ 16%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_webserver_config_configmap_name_volume_mounts

tests/charts/test_airflow_common.py::TestAirflowCommon::test_annotations

[gw2] [ 22%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_default_image[apache/airflow:user-tag-user-tag-None]

[gw5] [ 27%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_default_image[apache/airflow@user-digest-user-tag-user-digest]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_default_image[apache/airflow@user-digest-None-user-digest]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_set_correct_helm_hooks_weight

[gw7] [ 33%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_disable_some_variables

[gw6] [ 38%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_image[apache/airflow@user-digest-None-user-digest]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_have_all_variables

tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_image[apache/airflow@user-digest-user-tag-user-digest]

[gw0] [ 44%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_dags_mount[dag_values1-expected_mount1]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_have_all_config_mounts_on_init_containers

[gw4] [ 50%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_dags_mount[dag_values3-expected_mount3]

tests/charts/test_airflow_common.py::TestAirflowCommon::test_priority_class_name

[gw3] [ 55%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_global_affinity_tolerations_topology_spread_constraints_and_node_selector

tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_image[apache/airflow:user-tag-user-tag-None]

[gw7] [ 61%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_have_all_variables

[gw6] [ 66%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_image[apache/airflow@user-digest-user-tag-user-digest]

[gw5] [ 72%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_set_correct_helm_hooks_weight

[gw2] [ 77%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_default_image[apache/airflow@user-digest-None-user-digest]

[gw0] [ 83%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_have_all_config_mounts_on_init_containers

[gw1] [ 88%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_annotations

[gw3] [ 94%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_should_use_correct_image[apache/airflow:user-tag-user-tag-None]

[gw4] [100%] PASSED tests/charts/test_airflow_common.py::TestAirflowCommon::test_priority_class_name

================================================================================== FAILURES ==================================================================================

_______________________________________________________ TestAirflowCommon.test_dags_mount[dag_values0-expected_mount0] _______________________________________________________

[gw0] linux -- Python 3.7.16 /usr/local/bin/python

self = <tests.charts.test_airflow_common.TestAirflowCommon object at 0x7ff0de8ec590>, dag_values = {'gitSync': {'enabled': True}}

expected_mount = {'mountPath': '/opt/airflow/dags', 'name': 'dags', 'readOnly': True}

    @pytest.mark.parametrize(
        "dag_values, expected_mount",
        [
            (
                {"gitSync": {"enabled": True}},
                {
                    "mountPath": "/opt/airflow/dags",
                    "name": "dags",
                    "readOnly": True,
                },
            ),
            (
                {"persistence": {"enabled": True}},
                {
                    "mountPath": "/opt/airflow/dags",
                    "name": "dags",
                    "readOnly": False,
                },
            ),
            (
                {
                    "gitSync": {"enabled": True},
                    "persistence": {"enabled": True},
                },
                {
                    "mountPath": "/opt/airflow/dags",
                    "name": "dags",
                    "readOnly": True,
                },
            ),
            (
                {"persistence": {"enabled": True, "subPath": "test/dags"}},
                {
                    "subPath": "test/dags",
                    "mountPath": "/opt/airflow/dags",
                    "name": "dags",
                    "readOnly": False,
                },
            ),
        ],
    )
    def test_dags_mount(self, dag_values, expected_mount):
        docs = render_chart(
            values={
                "dags": dag_values,
                "airflowVersion": "1.10.15",
            },  # airflowVersion is present so webserver gets the mount
            show_only=[
                "templates/scheduler/scheduler-deployment.yaml",
                "templates/workers/worker-deployment.yaml",
>               "templates/webserver/webserver-deployment.yaml",
            ],
        )

tests/charts/test_airflow_common.py:84:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

/home/mikaeld/code/mozilla/airflow/tests/charts/helm_template_generator.py:138: in render_chart

???

/usr/local/lib/python3.7/subprocess.py:411: in check_output

**kwargs).stdout

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

input = None, capture_output = False, timeout = None, check = True

popenargs = (['helm', 'template', 'release-name', '/opt/airflow/chart', '--values', '/tmp/tmpjppcs087', ...],)

kwargs = {'cwd': '/opt/airflow/chart', 'stderr': -1, 'stdout': -1}, process = <subprocess.Popen object at 0x7ff0dfc4ef90>, stdout = b''

stderr = b'W0417 15:54:23.300556 17785 loader.go:221] Config not found: /files/.kube/config\nW0417 15:54:23.310400 17785 lo..." at <.securityContexts.pod>: nil pointer evaluating interface {}.pod\n\nUse --debug flag to render out invalid YAML\n'

retcode = 1

def run(*popenargs,

input=None, capture_output=False, timeout=None, check=False, **kwargs):

"""Run command with arguments and return a CompletedProcess instance.

The returned instance will have attributes args, returncode, stdout and

stderr. By default, stdout and stderr are not captured, and those attributes

will be None. Pass stdout=PIPE and/or stderr=PIPE in order to capture them.

If check is True and the exit code was non-zero, it raises a

CalledProcessError. The CalledProcessError object will have the return code

in the returncode attribute, and output & stderr attributes if those streams

were captured.

If timeout is given, and the process takes too long, a TimeoutExpired

exception will be raised.

There is an optional argument "input", allowing you to

pass bytes or a string to the subprocess's stdin. If you use this argument

you may not also use the Popen constructor's "stdin" argument, as

it will be used internally.

By default, all communication is in bytes, and therefore any "input" should

be bytes, and the stdout and stderr will be bytes. If in text mode, any

"input" should be a string, and stdout and stderr will be strings decoded

according to locale encoding, or by "encoding" if set. Text mode is

triggered by setting any of text, encoding, errors or universal_newlines.

The other arguments are the same as for the Popen constructor.

"""

if input is not None:

if kwargs.get('stdin') is not None:

raise ValueError('stdin and input arguments may not both be used.')

kwargs['stdin'] = PIPE

if capture_output:

if kwargs.get('stdout') is not None or kwargs.get('stderr') is not None:

raise ValueError('stdout and stderr arguments may not be used '

'with capture_output.')

kwargs['stdout'] = PIPE

kwargs['stderr'] = PIPE

with Popen(*popenargs, **kwargs) as process:

try:

stdout, stderr = process.communicate(input, timeout=timeout)

except TimeoutExpired as exc:

process.kill()

if _mswindows:

# Windows accumulates the output in a single blocking

# read() call run on child threads, with the timeout

# being done in a join() on those threads. communicate()

# _after_ kill() is required to collect that and add it

# to the exception.

exc.stdout, exc.stderr = process.communicate()

else:

# POSIX _communicate already populated the output so

# far into the TimeoutExpired exception.

process.wait()

raise

except: # Including KeyboardInterrupt, communicate handled that.

process.kill()

# We don't call process.wait() as .__exit__ does that for us.

raise

retcode = process.poll()

if check and retcode:

raise CalledProcessError(retcode, process.args,

> output=stdout, stderr=stderr)

E subprocess.CalledProcessError: Command '['helm', 'template', 'release-name', '/opt/airflow/chart', '--values', '/tmp/tmpjppcs087', '--kube-version', '1.23.13', '--namespace', 'default', '--show-only', 'templates/scheduler/scheduler-deployment.yaml', '--show-only', 'templates/workers/worker-deployment.yaml', '--show-only', 'templates/webserver/webserver-deployment.yaml']' returned non-zero exit status 1.

/usr/local/lib/python3.7/subprocess.py:512: CalledProcessError

_______________________________________________________ TestAirflowCommon.test_dags_mount[dag_values2-expected_mount2] _______________________________________________________

[gw4] linux -- Python 3.7.16 /usr/local/bin/python

self = <tests.charts.test_airflow_common.TestAirflowCommon object at 0x7f8ec5f4ae90>, dag_values = {'gitSync': {'enabled': True}, 'persistence': {'enabled': True}}

expected_mount = {'mountPath': '/opt/airflow/dags', 'name': 'dags', 'readOnly': True}


tests/charts/test_airflow_common.py:84:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

/home/mikaeld/code/mozilla/airflow/tests/charts/helm_template_generator.py:138: in render_chart

???

/usr/local/lib/python3.7/subprocess.py:411: in check_output

**kwargs).stdout

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

input = None, capture_output = False, timeout = None, check = True

popenargs = (['helm', 'template', 'release-name', '/opt/airflow/chart', '--values', '/tmp/tmpfd7jte59', ...],)

kwargs = {'cwd': '/opt/airflow/chart', 'stderr': -1, 'stdout': -1}, process = <subprocess.Popen object at 0x7f8ec5be6910>, stdout = b''

stderr = b'W0417 15:54:23.307418 17790 loader.go:221] Config not found: /files/.kube/config\nW0417 15:54:23.316569 17790 lo..." at <.securityContexts.pod>: nil pointer evaluating interface {}.pod\n\nUse --debug flag to render out invalid YAML\n'

retcode = 1


E subprocess.CalledProcessError: Command '['helm', 'template', 'release-name', '/opt/airflow/chart', '--values', '/tmp/tmpfd7jte59', '--kube-version', '1.23.13', '--namespace', 'default', '--show-only', 'templates/scheduler/scheduler-deployment.yaml', '--show-only', 'templates/workers/worker-deployment.yaml', '--show-only', 'templates/webserver/webserver-deployment.yaml']' returned non-zero exit status 1.

/usr/local/lib/python3.7/subprocess.py:512: CalledProcessError

========================================================================== short test summary info ===========================================================================

FAILED tests/charts/test_airflow_common.py::TestAirflowCommon::test_dags_mount[dag_values0-expected_mount0] - subprocess.CalledProcessError: Command '['helm', 'template', 'release-name', '/opt/airflow/chart', '--values', '/tmp/tmpjppcs087', '--kube-version', '1.23.13', '--namespace', 'default', '--show-only', 'templates/scheduler/scheduler-deployment.yaml', '--show-only', 'templates/workers/worker-deployment.yaml', '--show-only', 'templates/webserver/webserver-deployment.yaml']' returned non-zero exit status 1.

FAILED tests/charts/test_airflow_common.py::TestAirflowCommon::test_dags_mount[dag_values2-expected_mount2] - subprocess.CalledProcessError: Command '['helm', 'template', 'release-name', '/opt/airflow/chart', '--values', '/tmp/tmpfd7jte59', '--kube-version', '1.23.13', '--namespace', 'default', '--show-only', 'templates/scheduler/scheduler-deployment.yaml', '--show-only', 'templates/workers/worker-deployment.yaml', '--show-only', 'templates/webserver/webserver-deployment.yaml']' returned non-zero exit status 1.

======================================================================= 2 failed, 16 passed in 30.49s ========================================================================

/usr/local/lib/python3.7/site-packages/flask_appbuilder/models/sqla/__init__.py:105 MovedIn20Warning: Deprecated API features detected! These feature(s) are not compatible with SQLAlchemy 2.0. To prevent incompatible upgrades prior to updating applications, ensure requirements files are pinned to "sqlalchemy<2.0". Set environment variable SQLALCHEMY_WARN_20=1 to show all deprecation warnings. Set environment variable SQLALCHEMY_SILENCE_UBER_WARNING=1 to silence this message. (Background on SQLAlchemy 2.0 at: https://sqlalche.me/e/b8d9)

root@7fa702d1f4c4:/opt/airflow#