Fix backfill queued task getting reset to scheduled state.

the backfill queued tasks were getting reset to scheduled state by kubernetes_executer.clear_not_launched_queued_tasks of scheduler instance.

this fixes the issue by making clear_not_launched_queued_tasks only handle the tasks which are created by its own scheduler/job.

closes: #23356 , closes: #23693 , closes: #23145

@ashb @ephraimbuddy @dstandish @jedcunningham -> not sure if that's the right fix, But I guess this addresses the issues for 2.3.1

Having the same issue in airflow==2.2.5. Applying a slightly adapted patch from this pull request fixes the problem with broken backfill.

Hi team! New to the scene here, but we are also facing this bug as well in our implementation (2.3.3, KubernetesExecutor). Wondering if this PR could get a second pair of eyes. Similar to what is mentioned in the discussion, we'll have backfills that run (some to completion) but then get stuck because they are later marked scheduled.

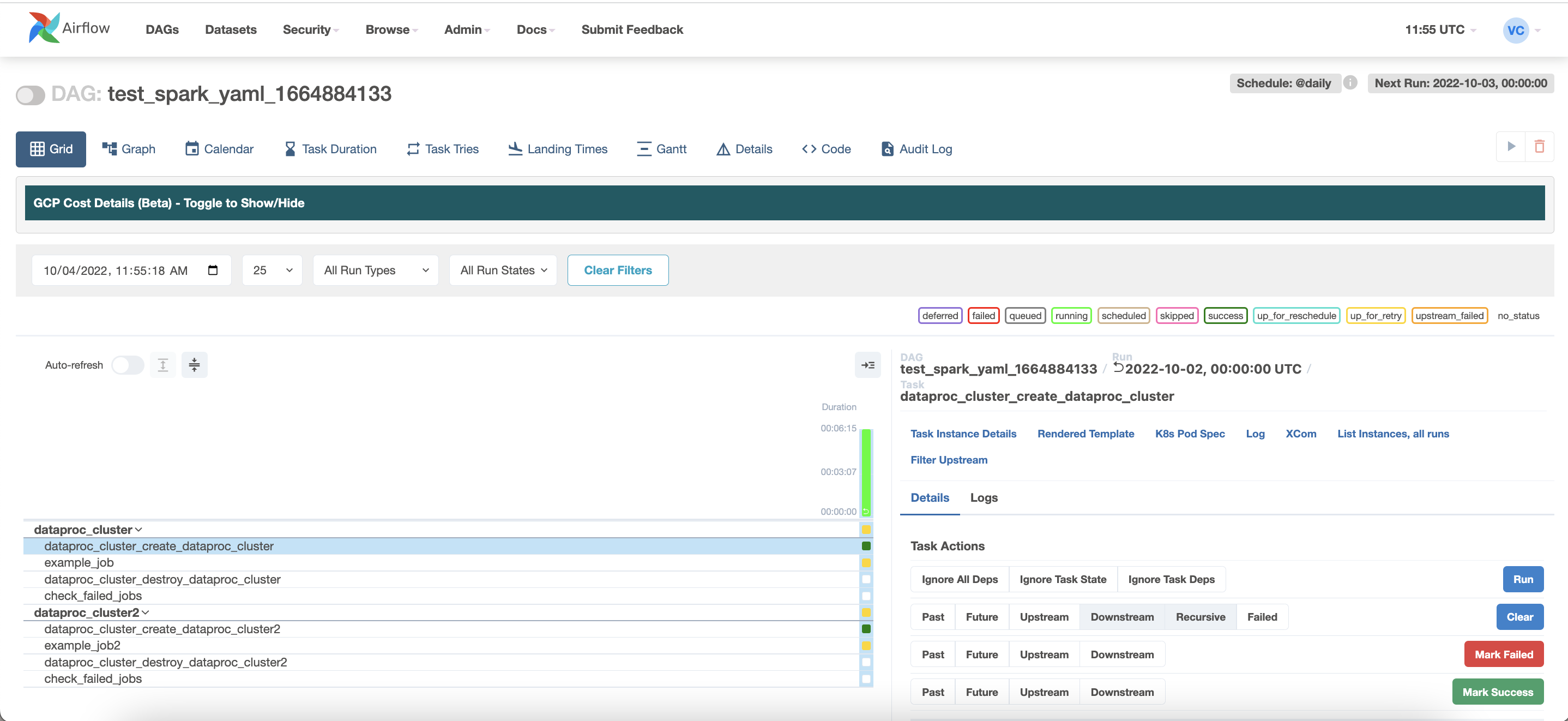

All tasks getting marked as scheduled after already completing their backfill:

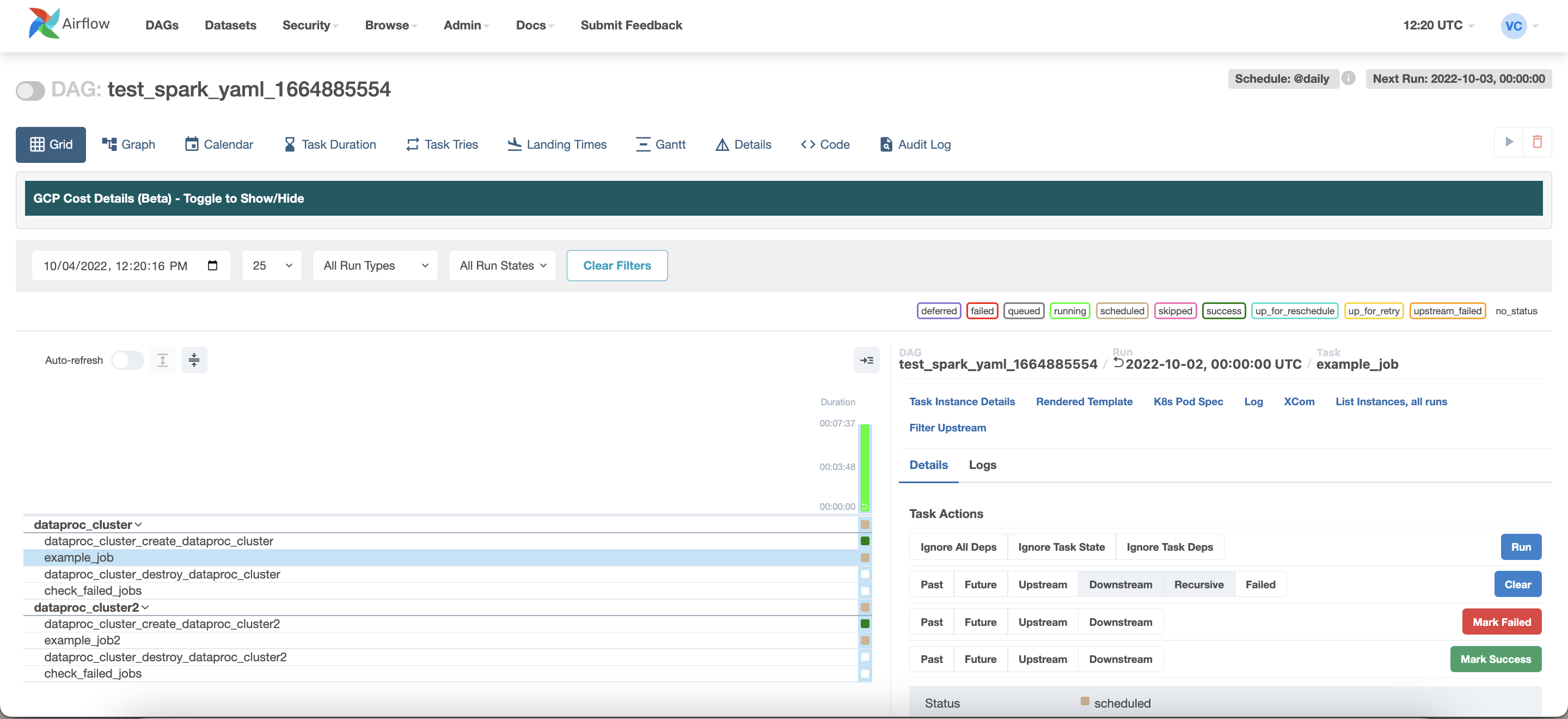

A dag run that completes one task but has its subsequent task marked as scheduled:

I don't think we should change how Kubernetes executor handles queued task instances that have overstayed in queued state. If a task stayed in queued state for a long time, then something is wrong and the task should be cleared since we have a configuration to detect and clear it.

While https://github.com/apache/airflow/pull/26205 can also solve this issue, I think it's not necessary to send a task that's not in scheduled state to the executor. This is missing right now in backfill because the possibility to have many tasks set to scheduled was solved in https://github.com/apache/airflow/pull/26161.

I don't think we should change how Kubernetes executor handles queued task instances that have overstayed in queued state. If a task stayed in queued state for a long time, then something is wrong and the task should be cleared since we have a configuration to detect and clear it. While #26205 can also solve this issue, I think it's not necessary to send a task that's not in

scheduledstate to the executor. This is missing right now in backfill because the possibility to have many tasks set to scheduled was solved in #26161.

After consultations, I'm withdrawing the above statement.

Can you add tests to ensure this is not altered in the future?

Hi all. I can confirm I still see this issue on 2.4.0 KubernetesExecutor. Would this be available in 2.4.2? I see the milestone has being pushed several times. We are extremely interested in this fix

Hi all. I can confirm I still see this issue on 2.4.0 KubernetesExecutor. Would this be available in 2.4.2? I see the milestone has being pushed several times. We are extremely interested in this fix

We need tests on this one

For what it's worth, we just updated to 2.4.1 yesterday (w/ KubernetesExecutor) and we are unable to repro the previous buggy behavior where the scheduler would change the status of backfill runs. @ephraimbuddy , I think your PR may have mitigated or resolved this issue -https://github.com/apache/airflow/pull/26342/files.

By fixed, I mean that we can now start a backfill with the airflow dags backfill command and have it run to completion. It no longer seems to be getting interrupted mid-way by a scheduler process that is resetting its state.

Argh, I may have spoken too soon. Another stuck one 😢

One more note - we see these log lines directly before the backfill stalls. Which I think is in line with what this PR will address since @snjypl is modifying the function clear_not_launched_queued_tasks.

[2022-10-04 18:37:06,838] {scheduler_job.py:1374} INFO - Resetting orphaned tasks for active dag runs

[2022-10-04 18:37:08,918] {kubernetes_executor.py:470} INFO - Found 2 queued task instances

[2022-10-04 18:37:08,930] {kubernetes_executor.py:511} INFO - TaskInstance: <TaskInstance: test_spark_yaml_1664908327.dataproc_cluster.check_failed_jobs backfill__2022-09-06T00:00:00+00:00 [queued]> found in queued state but was not launched, rescheduling

[2022-10-04 18:37:08,944] {kubernetes_executor.py:511} INFO - TaskInstance: <TaskInstance: test_spark_yaml_1664908327.dataproc_cluster2.check_failed_jobs backfill__2022-09-06T00:00:00+00:00 [queued]> found in queued state but was not launched, rescheduling

Can you add tests to ensure this is not altered in the future?

@ephraimbuddy , i have added some tests, can you please review it and let me know if i needto change/update anything. thank you for help !

Needs conflict resolving.

Some strange errors to fix.

Looks like the only meaningful error is:

Run black (python formatter) on other..................................................Failed

- hook id: black

- duration: 67.5s

- files were modified by this hook

reformatted tests/executors/test_kubernetes_executor.py

Yep. You need to fix it - I recommend installing pre-commit (as per our contribution docs). It will fix it for you.

Make sure to rebase BTW.

Yep. You need to fix it - I recommend installing pre-commit (as per our contribution docs). It will fix it for you.

@potiuk i have fixed the issue with black formatter by installing pre commit hook. there is still a failing task. Tests / Sqlite Py3.7: API Always CLI Core Integration Other Providers WWW

does it need to be addressed?

Is there anything I can do to help move this one along? We rely on the airflow backfill CLI and this bug really makes it difficult for our users to backfill anything.

Anyone have any workarounds that I can implement now without waiting for this to be merged in?

Is there anything I can do to help move this one along? We rely on the airflow backfill CLI and this bug really makes it difficult for our users to backfill anything.

You can patch your airflow with those changes and verify that they work in your case. That would be an absolutely awesome input and one that can speed up the merge process if we have some evidences that people are eager to get it, even to the extend that they decide to patch their airflow without waiting for the next release.

The change looks like easy to patch manually.

I went ahead and manually patched this proposed fix into our airflow environment.

We average around 30 backfills per day, and over the last 4 days since it was manually patched - I have not seen or heard of this issue popping up. So far it seems that the fix has resolved the issue.

If it pops up again before this is merged I will report back. Just wanted to update with some test results.

If it pops up again before this is merged I will report back. Just wanted to update with some test results.

Cool. Thanks for that !

Thanks @snjypl