Thanks for the repo! Segmentation is wrong.

Hi, when I ran our model on NYUv2, I noticed that the predicted segmentation map is always a completely black image, while the depth map is grayish.

Hi, thank you for your message. Could you provide me an example by sharing some screenshots?

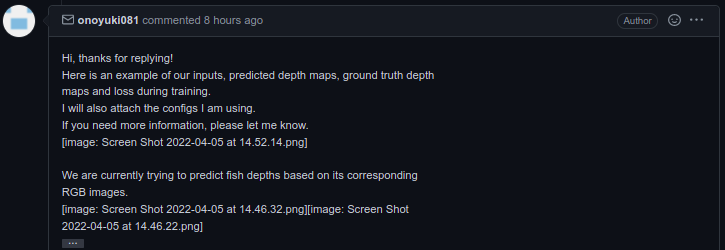

Hi, thanks for replying! Here is an example of our inputs, predicted depth maps, ground-truth depth maps, and the loss during training. I will also attach the configs I am using. If you need more information, please let me know.

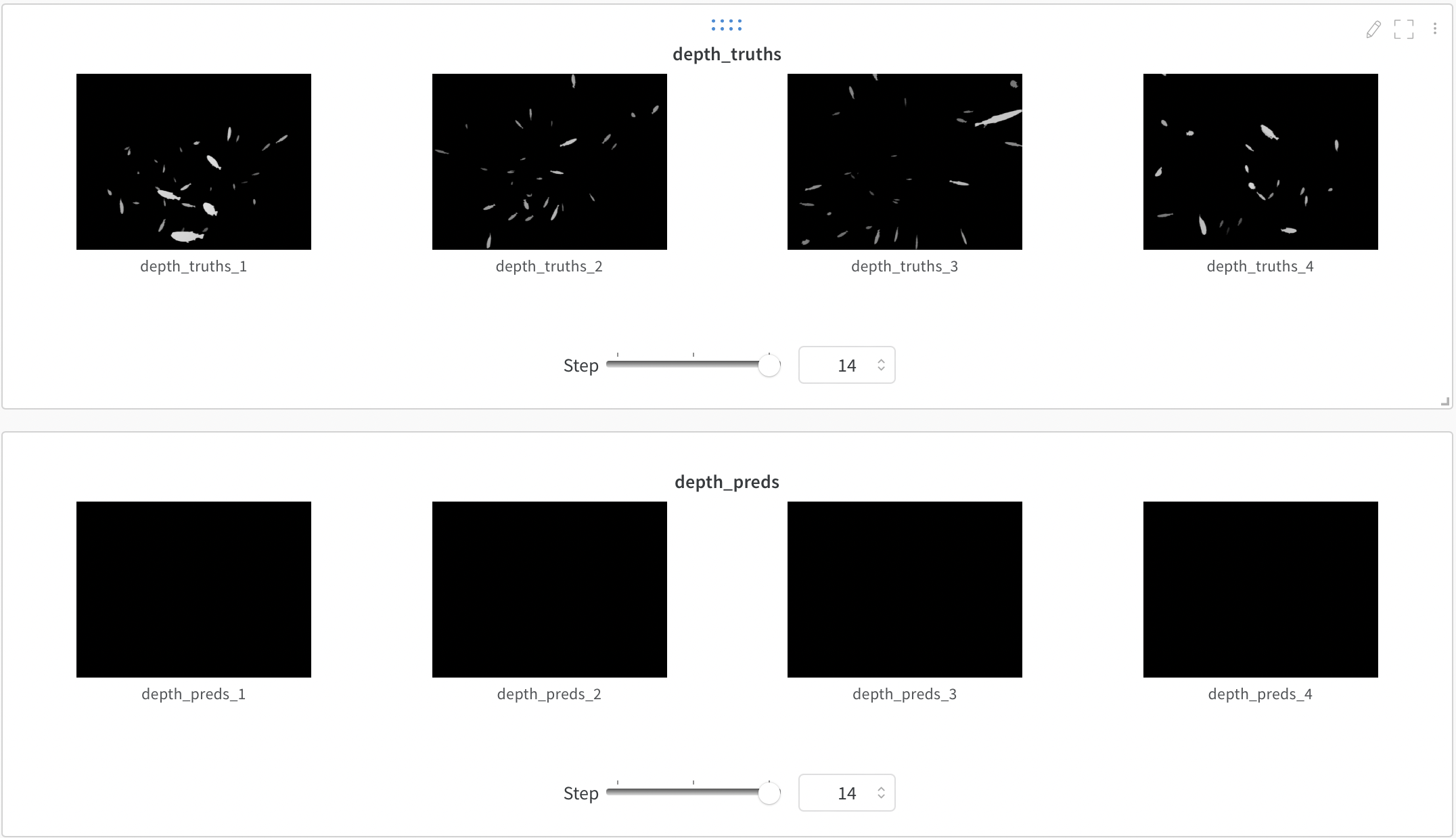

We are currently trying to predict fish depth from the corresponding RGB images.


Hi,

Thank you for your message. It seems that I can't see your images; could you please double-check that you attached them correctly?

Thanks!

Here are the ground-truth depth maps, the predicted depth maps, and the original RGB images we have.

Thank you!

I think I understand the problem now. If I am correct, you are facing this issue when training the model on your custom dataset?

In the images you provided, the depth_truth images seem to correspond to segmentation masks rather than depth maps. If this is the case, you have swapped the segmentation and depth estimation targets. Could you please try again with the correct inputs? I think this could solve the issue.

Thank you for the suggestion! Actually, the image I provided for depth_truth is a depth map, not a segmentation mask. The whiter a fish appears in the depth ground truth, the closer it is to the camera; the darker it appears, the farther it is from the camera.

OK, I see, thank you very much! I think this is because most of the image (the background, for instance) is labeled as 0 (black pixels). Since most of the labels consist of black pixels, the model collapses to predicting black everywhere.
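A quick way to see why an all-black output can be a cheap solution is to measure how much of a label is background and what an unmasked loss charges for predicting zeros everywhere. The sketch below uses a made-up 10x10 label and a plain MSE; the helper names are hypothetical, and the repository's real loss may differ.

```python
import numpy as np

def background_fraction(depth: np.ndarray) -> float:
    """Fraction of pixels whose label is exactly 0 (background)."""
    return float(np.mean(depth == 0))

def mse(pred: np.ndarray, target: np.ndarray) -> float:
    """Plain (unmasked) mean squared error over every pixel."""
    return float(np.mean((pred - target) ** 2))

# Toy 10x10 label: a 2x2 "fish" at depth value 200 on a black (0) background.
label = np.zeros((10, 10), dtype=np.float32)
label[4:6, 4:6] = 200.0

all_black = np.zeros_like(label)
print(background_fraction(label))   # 0.96
print(mse(all_black, label))        # 1600.0
```

Because 96% of the pixels are already "correct", the all-black prediction scores an MSE of 1600, whereas the same prediction evaluated only on the fish pixels would score 40000. Running `background_fraction` over a custom dataset is a cheap check for this kind of imbalance.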

I think that, by default, our loss function only backpropagates on non-negative pixels (>= 0), so you could try replacing the pixels equal to 0 with -1 (so that the model ignores those labels) and see what happens.
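The suggestion above can be sketched as a remapping step plus a loss that only averages over pixels with a non-negative target. This is a NumPy illustration under assumptions, not the repository's actual loss: `remap_background`, `masked_mse`, and the `-1` sentinel constant are hypothetical names, and the same boolean-mask pattern would apply to PyTorch tensors.

```python
import numpy as np

IGNORE_VALUE = -1.0  # sentinel from the suggestion above; any negative value works with the mask below

def remap_background(depth: np.ndarray, ignore_value: float = IGNORE_VALUE) -> np.ndarray:
    """Return a float copy of `depth` with every 0-valued pixel set to `ignore_value`."""
    out = depth.astype(np.float32)  # astype returns a copy by default
    out[out == 0] = ignore_value
    return out

def masked_mse(pred: np.ndarray, target: np.ndarray) -> float:
    """MSE over pixels whose target is >= 0; negative (ignored) targets contribute nothing."""
    mask = target >= 0
    if not mask.any():
        return 0.0  # nothing to supervise in this sample
    diff = pred[mask] - target[mask]
    return float(np.mean(diff ** 2))

target = np.array([[0.0, 0.0, 0.0, 200.0],
                   [0.0, 0.0, 150.0, 0.0]])
all_black = np.zeros_like(target)

print(masked_mse(all_black, target))                    # 7812.5  (zeros still supervised)
print(masked_mse(all_black, remap_background(target)))  # 31250.0 (only the fish pixels count)
```

Once the background is ignored, the all-black prediction is no longer rewarded for matching the background, so its loss reflects only the error on the fish pixels.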

Note also that the method is quite sensitive to hyperparameters, so searching for better hyperparameters may also help.

Could you please share how the loss behaves during training?

Hmm, I also found that fine-tuning the pretrained weights on a custom RGB-D dataset fails to converge, leading to all-black predictions for some reason. In my case, the model cannot even overfit a single RGB-D image during fine-tuning.