An Xarray accessor to make `xr.Dataset`s comply with the Python dataframe interchange protocol

I had a helpful discussion about this with @TomNicholas today in the Distributed Arrays Working Group meeting. Here are the high-level notes:

- What if, in the long term, we made `xr.DataArray` and `xr.Dataset` compliant with the Python Dataframe Protocol?
  - In this formulation, an `xr.Dataset` whose variables had consistent dimensions could be thought of as a table, where `xr.Variable`s are treated as chunked columns in the dataframe spec.
  - Xarray would be a sort of "holder" of array references that could be lazily evaluated by a dataframe library that adheres to the Data API, where the iterating and computing would be part of that dataframe library's implementation (and, likely, its corresponding logical plan for evaluating dataframe operations).


- Since this would be a big change to Xarray, and further, it's likely not to work, how could this library prototype this feature ahead of time? Ideally, it would be nice if upon `import xarray_sql`, all `xr.Dataset`s available to the user suddenly had the dunder methods that made them compliant to the dataframe data API.
  - Tom recommended playing around with custom accessors as an Xarray-native way to achieve this sort of functionality.

- If an `xr.Dataset` (with consistent dimensions) also implemented `__dataframe__`, then it would be really easy to integrate it into DataFusion/SQL.
  - PyArrow specifically provides a `__dataframe__` interchange function, `pyarrow.interchange.from_dataframe`.


- It is an open question whether this type of integration is better suited to live in Python on top of Xarray or in Rust on top of the raw data format. In both Tom's view and mine, it seems like we should do both and figure out a good integration point between them.

- Couldn't most of the value of Zarr → Rust → DataFusion be achieved with `xarray_sql` + Xarray backed by the `zarrs-python` IO layer? We'll have to evaluate this empirically.

- There will be a large swath of users who will need the performance gains provided by a direct integration of DataFusion with (virtual) Zarr in Rust with SQL/Python wrappers on top. At the same time, there will be a large swath of users who need the interoperability ("joinability") of melding any data loadable in Xarray via a SQL interface.

xref: https://github.com/pydata/xarray/issues/10135

Edit: TIL the Dataframe API and the Dataframe protocol are related but distinct things! That explains my confusion. I just updated the link with the right term + docs.

> all `xr.Dataset`s available to the user suddenly had the dunder methods that made them compliant to the dataframe data API.

That specific idea is a non-starter. A cursory look at the DataFrame API specification shows methods that couldn't be made compatible with xarray. To pick just one example, `DataFrame.max()` has signature

`max(*, skip_nulls: bool | Scalar = True) -> Self`

whereas xarray.Dataset.max() has signature

`Dataset.max(dim=None, *, skipna=None, keep_attrs=None, **kwargs)`

It makes sense for one to have `dim` and the other not to; it seems very difficult (and unlikely) for these to converge.
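A small illustration of why `dim` is natural for xarray but has no counterpart in a table: xarray can reduce along one named dimension and keep the others, whereas once the same data is flattened to rows (here via pandas, standing in for a generic dataframe), a column reduction can only collapse every row at once. The variable names are arbitrary.

```python
import numpy as np
import xarray as xr

ds = xr.Dataset({"t": (("time", "x"), np.array([[1.0, 5.0], [3.0, 2.0]]))})

# xarray: reduce along the named "x" dimension; "time" survives.
per_time = ds.max(dim="x")
print(per_time["t"].dims)  # ('time',)
print(per_time["t"].values.tolist())  # [5.0, 3.0]

# Table view: one row per (time, x) pair, so there is no dimension to name;
# the column reduction collapses all rows into a single value.
table_view = ds.to_dataframe()
print(table_view["t"].max())  # 5.0
```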

Instead, I was suggesting that you just make some lightweight method for converting, e.g. something like

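```python
import pyarrow.interchange
import xarray as xr

ds = xr.open_zarr(...)
# `xr_sql.to_arrow_dataframe()` is the proposed accessor method, not an existing API.
df = pyarrow.interchange.from_dataframe(ds.xr_sql.to_arrow_dataframe())
```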

You can achieve this method-like syntax (`ds.xr_sql.to_arrow_dataframe()`) using xarray's accessor registration mechanism.

Thanks, I like your alternative. You're right that what I want is a dataframe interchange, not a dataframe API surface for Datasets.

From the dataframe interchange protocol docs (see https://data-apis.org/dataframe-protocol/latest/design_requirements.html):

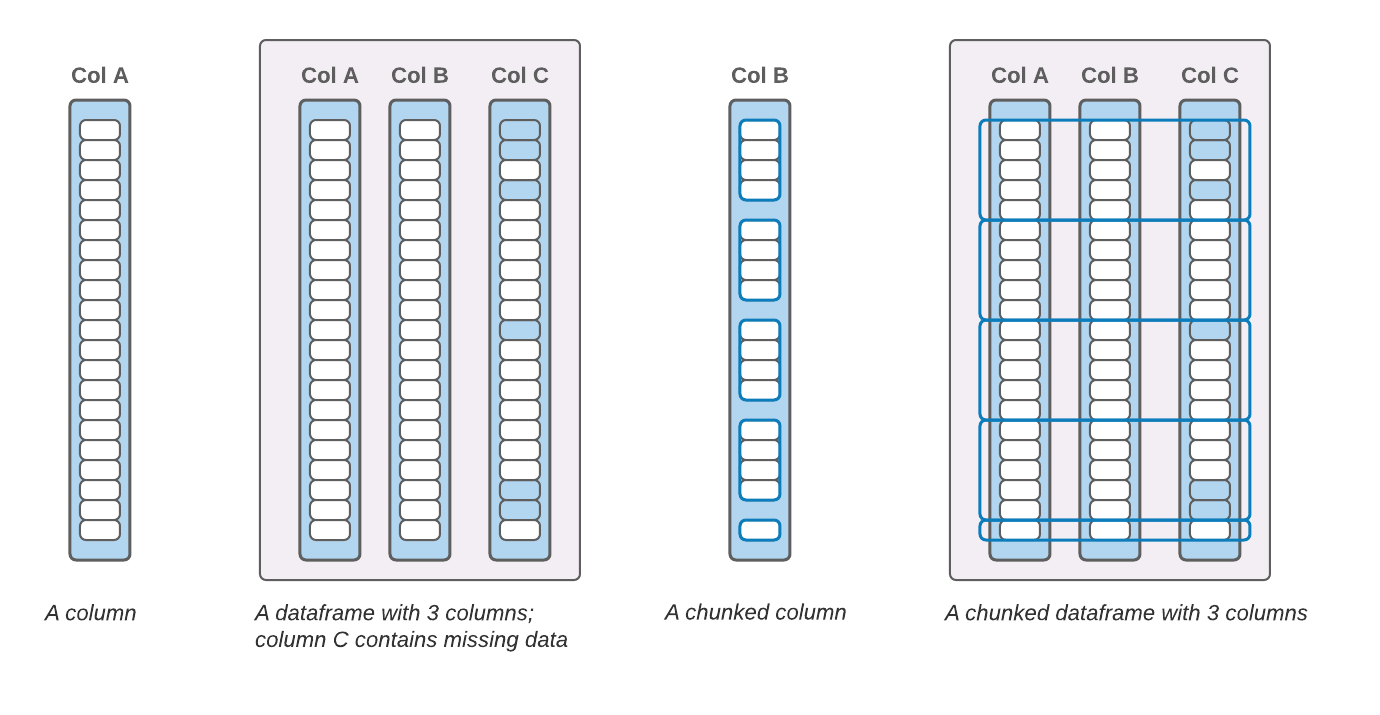

I think that the Xarray data model fits in fairly well with this interchange protocol: chunked variables are morally equivalent to this interchange protocol's "chunked column" spec.