graph-learn

An Industrial Graph Neural Network Framework

Are there any version requirements for the gRPC library that graph-learn depends on?

Exciting work! Did you run any experiments using different importance metrics for the cache, such as other centrality measures?

I have to fork the sample path at different levels to sample multiple paths in heterogeneous graphs. How can I do that with only one `each` step in a GSL query?

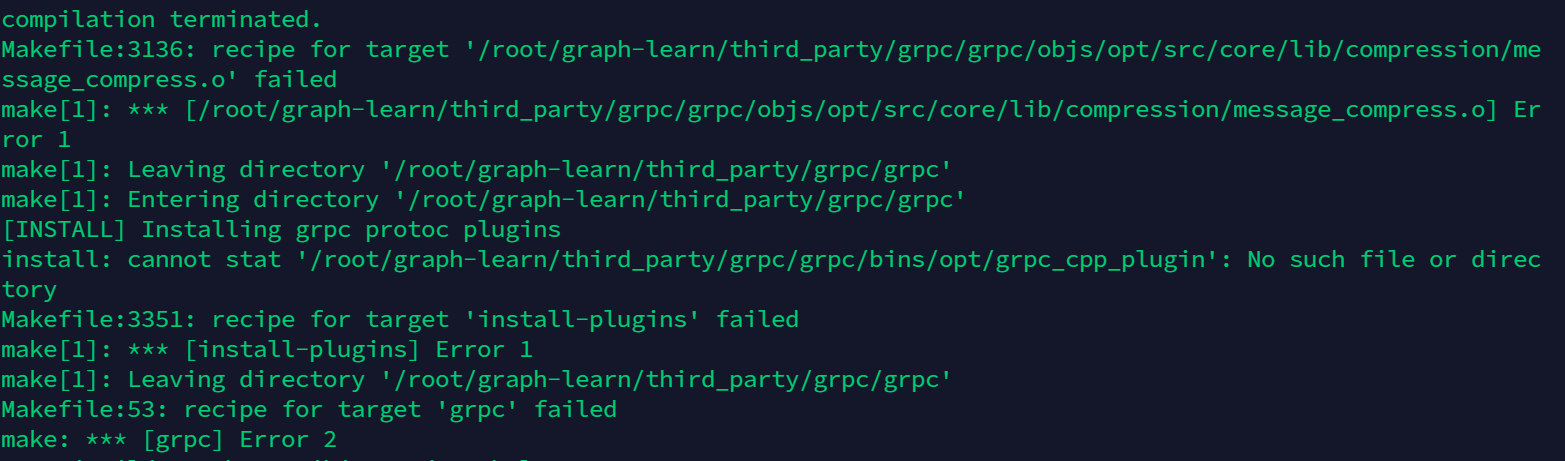

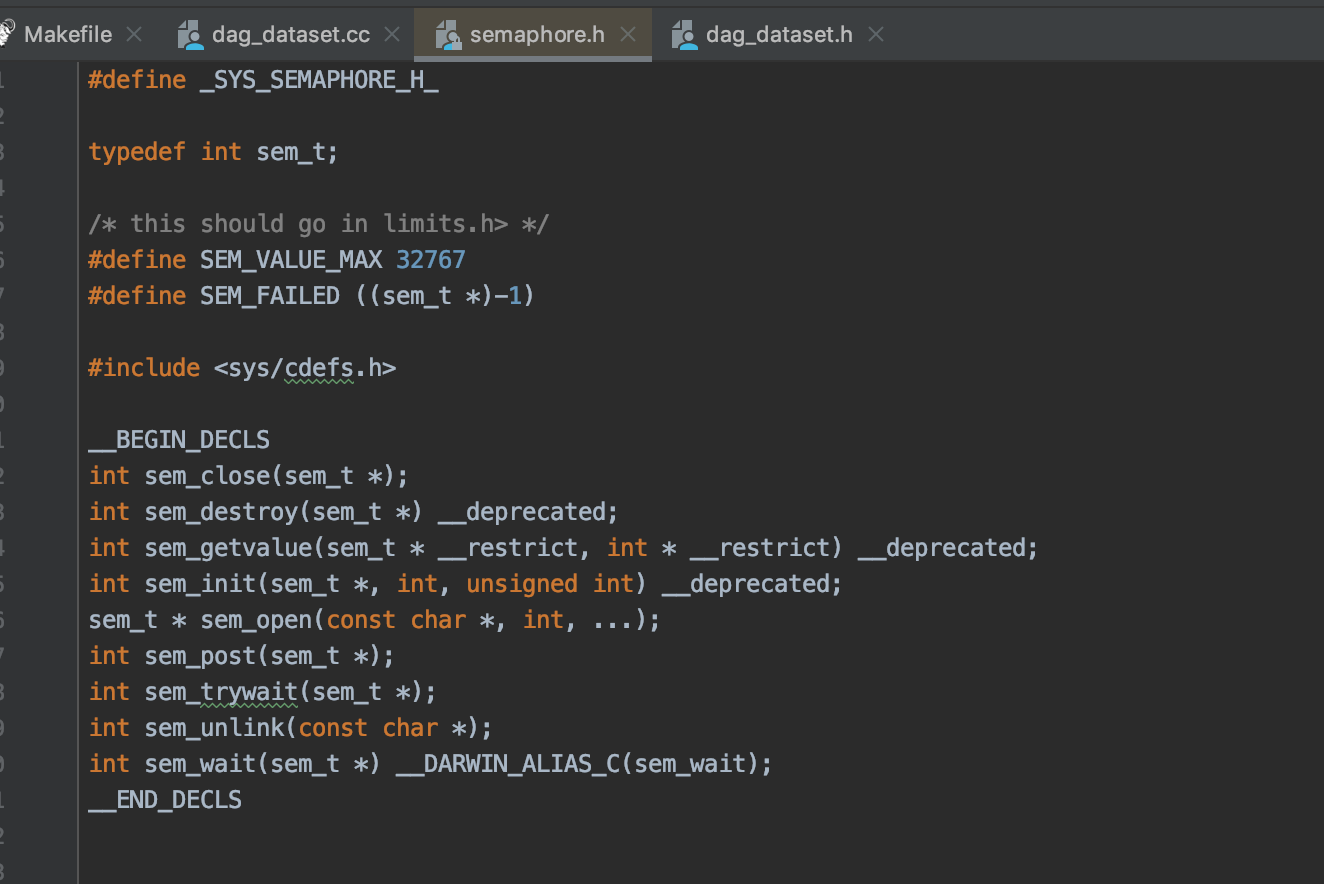

Installation fails on macOS because its C library does not support the `sem_timedwait` function: `/Users/root/graph-learn/graphlearn/core/dag/dag_dataset.cc:62:7: error: use of undeclared identifier 'sem_timedwait'` at `if (sem_timedwait(&(occupied_[cursor_]), &ts) == -1) {` (3 warnings and 1 error generated).
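For context on the `sem_timedwait` failure above: macOS does not provide that function, so one portable option is to build the timed wait from a mutex and a condition variable instead. The following is only a minimal sketch of that idea. `TimedSemaphore`, `Post`, and `TimedWait` are hypothetical names invented for illustration; they are not part of graph-learn and not necessarily how the project resolved the issue.

```cpp
#include <chrono>
#include <condition_variable>
#include <mutex>

// Hypothetical helper (not graph-learn code): a counting semaphore whose
// timed wait relies only on the C++11 standard library, so it does not
// need the POSIX sem_timedwait call that is missing on macOS.
class TimedSemaphore {
 public:
  explicit TimedSemaphore(unsigned int initial = 0) : count_(initial) {}

  // Increment the counter and wake one waiter, similar to sem_post.
  void Post() {
    {
      std::lock_guard<std::mutex> lock(mu_);
      ++count_;
    }
    cv_.notify_one();
  }

  // Wait until the counter is positive or the timeout expires.
  // Returns true if the semaphore was acquired, false on timeout.
  bool TimedWait(std::chrono::milliseconds timeout) {
    std::unique_lock<std::mutex> lock(mu_);
    if (!cv_.wait_for(lock, timeout, [this] { return count_ > 0; })) {
      return false;
    }
    --count_;
    return true;
  }

 private:
  std::mutex mu_;
  std::condition_variable cv_;
  unsigned int count_;
};
```

Under this assumption, a call such as `sem_timedwait(&sem, &ts) == -1` would become `!sem.TimedWait(timeout)`. Note that the sketch takes a relative timeout, whereas `sem_timedwait` takes an absolute deadline, so call sites would need adjusting.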

Hello, the distributed training example of GraphSAGE at https://github.com/alibaba/graph-learn/blob/master/examples/tf/graphsage/dist_train.py seems to load the full graph (cora) on every machine separately, and then begin training using TensorFlow's ps and worker...

cmake reports the following errors: /git/alibaba/graph-learn/built/lib/libgraphlearn_shared.so: undefined reference to '_ULx86_64_step' /git/alibaba/graph-learn/built/lib/libgraphlearn_shared.so: undefined reference to '_ULx86_64_get_reg' /git/alibaba/graph-learn/built/lib/libgraphlearn_shared.so: undefined reference to '_Ux86_64_getcontext' /git/alibaba/graph-learn/built/lib/libgraphlearn_shared.so: undefined reference to 'gflags::FlagRegisterer::FlagRegisterer(char const*, char const*, char const*, char const*, void*, void*)' /git/alibaba/graph-learn/built/lib/libgraphlearn_shared.so: undefined reference to '_ULx86_64_init_local' collect2: error: ld returned 1 exit status make[2]: *** [../built/bin/service_unittest] Error 1 make[1]: ***...

As we know from the paper, graph-learn has implemented four built-in graph partition algorithms to minimize the number of crossing edges whose endpoints are in different workers, but there still...

If I use Deploy Mode 2, do the TensorFlow PS and workers have to be paired one-to-one, just like the server and client? Thank you for your answer! @baoleai @archwalker...

Hi, I was trying to run graph-learn in a distributed setting but ran into some problems: when I launch four clients and four servers, one of the clients will exit with...