webMAN-MOD

webMAN-MOD copied to clipboard

webMAN-MOD copied to clipboard

[Bug] FTP server disconnects and freezes

There seem to be issues with the current FTP server implementation existing for several years now, so I'm creating this thread to help with efforts to find and fix them. Most of them happen when transferring large amounts of files or using less widespread clients or libraries, such as Rclone, that enforce the protocol strictly.

From my observations, they can be categorized these groups:

- Server sends an invalid response, forcing the client to reconnect or ignore the unexpected response

- Server stops responding in the current connection, leading to timeouts and reconnects on client side

- Server closes the connection unexpectedly, forcing the client to reconnect or abort the operation

- Server stops receiving any connections, making it impossible to make a new connection

Looking into and debugging the code, I found 4 bugs so far:

Bug 1 - When entering passive mode, server never responds to the new connection

This happens when slisten() function fails to bind socket to requested port, but returns a valid socket handle anyways. This can happen for multiple reasons, such as the port being already used by another thread. Server then tells client to connect to an invalid port, resulting in timeout and disconnect.

Solution: Return error in slisten() when bind() or listen() fails.

Bug 2 - After entering passive mode and accepting connection, server stops responding

This sneaky one happens when accept() returns a socket handle with ID 0 (valid range is 0 - 1023), as the code incorrectly treats that as an invalid handle. Client eventually times out, but the server side thread seems to never exit, resulting in a thread buildup and server rejecting new connections. May even happen more often than every 1024 files, as WMM isn't the only one creating new sockets.

Solution: Take 0 as a valid socket, turning > 0 into >= 0.

Bug 3 - CWDing into non-existing directory returns error for a path never requested

For example:

Request: CWD /dev_hdd0/packages/aaa/bbb

Response: 550 File Error "///dev_hdd0/packages/aaa/bbb"

Request: CWD /dev_hdd0/packages/aaa/New folder

Response: 550 File Error "/dev_hdd0/packages/aaa/bbb//dev_hdd0/packages/aaa/New folder"

findPath() seems to treat absolute paths as relative sometimes, leading to concatenation of current working directory with the requested one. Some clients close connection after receiving this kind of response, in which case it's unusable.

Solution: findPath() should only do that when the requested path is relative and keep the original path intact otherwise

Also to note: sprintf() used for concatenation may overflow beyond the buffer limit and corrupt the stack contents, use of snprintf() with the correct buffer size would be better

Bug 4 - Under heavy FTP use, OS kills all connections with FIN packets, unexpectedly

This one is really annoying to debug since it also affects every network connection used sending logs and telemetry data. The only correlation I found is that it can be replicated with Rclone (only passive mode supported) sending large amounts of small files quickly, thus creating and closing sockets at a fast pace. Strangely enough, it always seems to die after 159th file and ~25 seconds since the start of the transfer (note: connections made by debugging may affect those values, but it's consistent for each version of source code). Filezilla set to forced active mode seems to bypass this issue. Logging into files on device may be required to trace what's going on.

Not-really a solution: Limitting files per second for passive mode under the magic value does bypass this issue, but it's impossible to calculate it on-the-fly.

Will post a pull request with fixes for bugs 1, 2 and 3 shortly.

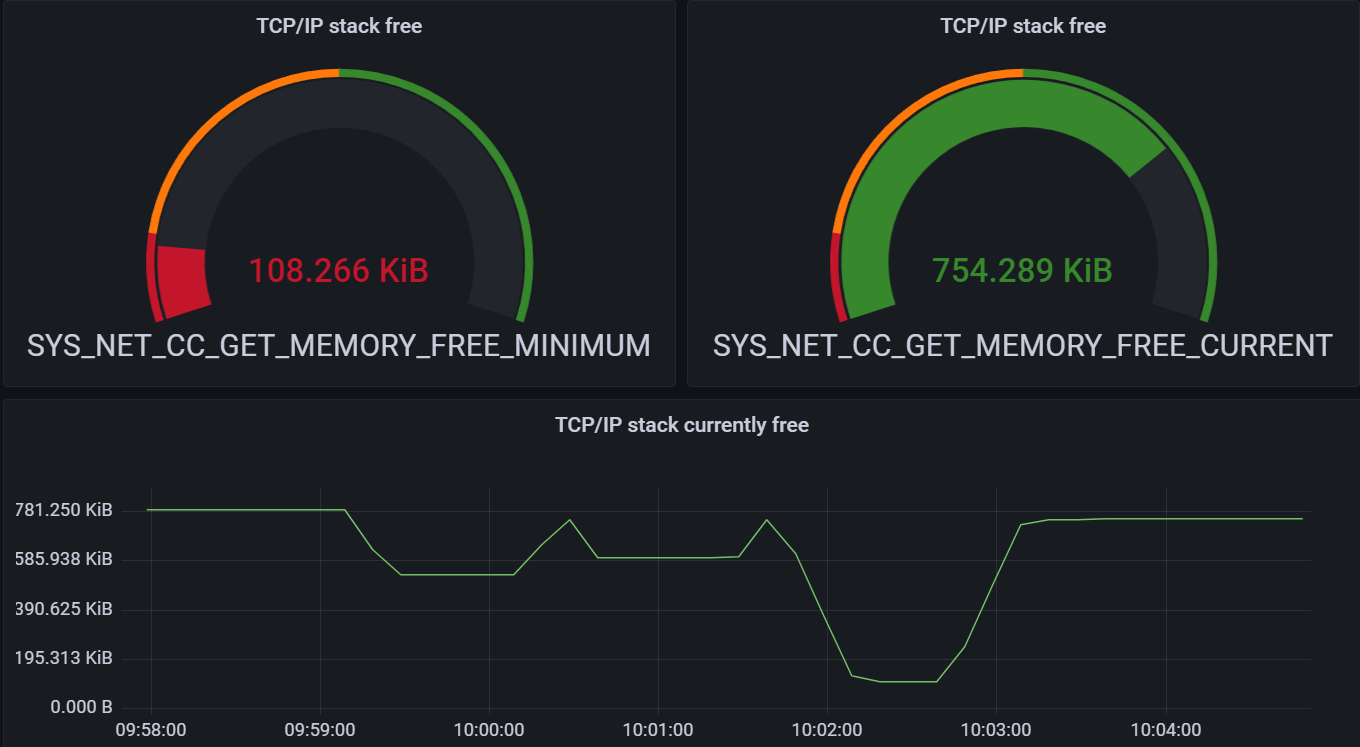

Bug 4 seems to be caused by "insufficient kernel work area" for sockets, as all network functions return SYS_NET_ENOBUFS when it occurs. Not sure if that happens because of too many sockets being open at the same time, or too many TCP connections in TIME_WAIT though. Monitoring SYS_NET_CC_GET_MEMORY_FREE_MINIMUM should hopefully reveal more...

Those are the values right after booting:

And after triggering bug 4, confirming that kernel connection table is full:

Things that were tried to decrease memory allocation, but failed:

Decreasing SO_SNDBUF and SO_RCVBUF buffers for every connection

Turns out this doesn't help at all. Even though packets are forced to be smaller (confirming the limited buffer size), the sockets itself still end up filling all of the space.

Enabling SO_LINGER and setting linger timeout to 0

In theory this should free kernel memory right after calling socket close functions, but side effect of TCP-RST to force close every connection means that every transfer fails. (TCP-FIN is EOF from client's point of view, TCP-RST signals network failure)

Enabling SO_LINGER and setting linger timeout to > 0

No perceivable difference, kernel still fills up.

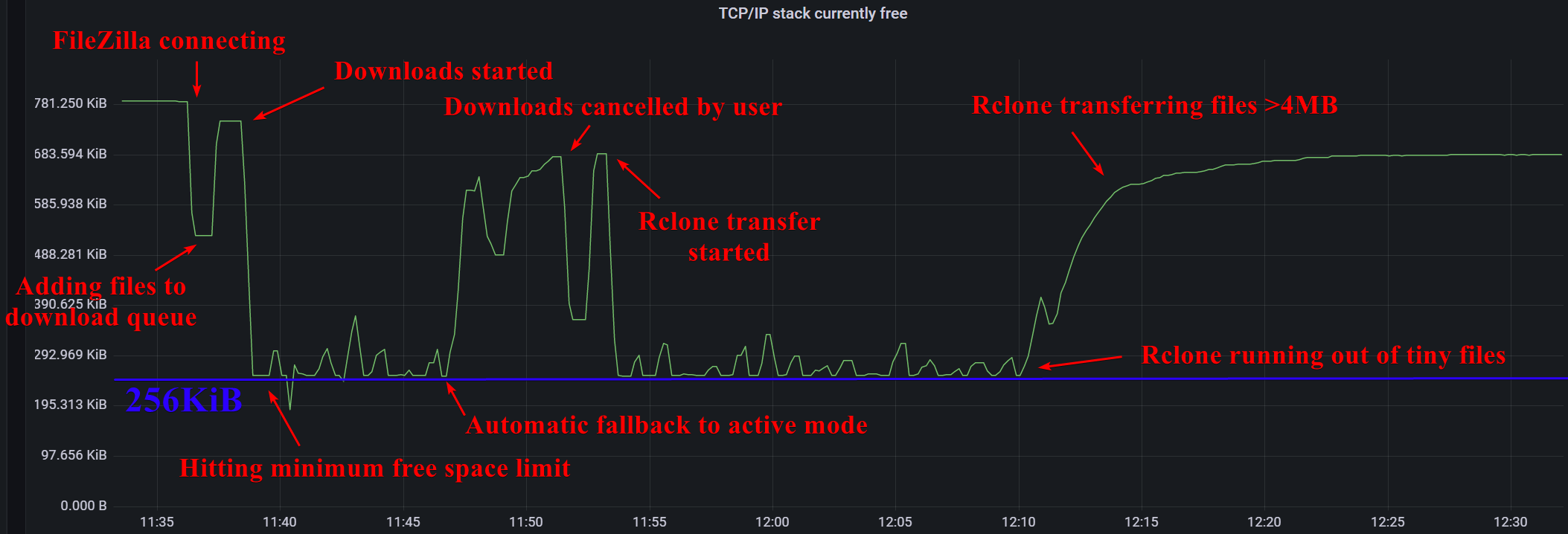

Also a chart of SYS_NET_CC_GET_MEMORY_FREE_CURRENT:

(lowest point is when sockets start to die due to SYS_NET_ENOBUFS)

@kostirez1 Thank you for your detailed information about the bug with FTP speed.

Have you tried changing the thread priority or limiting the number of threads? https://github.com/aldostools/webMAN-MOD/blob/master/include/ftp.h#L1412-L1432

Or maybe adding custom parameters to the function slisten() ? https://github.com/aldostools/webMAN-MOD/blob/master/include/init/socket.h#L67-L92

This line is commented. I don't remember if I tested uncommenting it. //setsockopt(s, SOL_SOCKET, SO_REUSEADDR, &optval, sizeof(optval));

There are more socket options that could be used also in the function connect_to_server() https://github.com/aldostools/webMAN-MOD/blob/master/include/init/socket.h#L8-L60

This issue has been discussed on PSX-PLACE forum. This bug not only affect webMAN's FTP server. It also affect other FTP servers like multiMAN or Rebug Toolbox. The conclusion obtained was that something changed in LV1 between 4.21 and 4.30 https://www.psx-place.com/threads/discussion-research-ftp-speed-changes-between-firmwares.3040/

Limitting files per second for passive mode under the magic value does bypass this issue, but it's impossible to calculate it on-the-fly.

This "magic number" is actually tied to SYS_NET_CC_GET_MEMORY_FREE_CURRENT, so allowing PASV to only open new connections when the free memory is over a set threshold does prevent systemwide TCP/IP lockups.

Below is a chart showcasing how it works in practice. FileZilla got a task to download ~6000 tiny files and used passive mode for the first few minutes, but after receiving "421 Could not create socket" (this happens when free memory is under the threshold for ~20s, client then automatically retries) a few times, it automatically switched to active mode, solving the issue with low ram.

Then I used Rclone to upload ~4500 tiny files (< 1KB) and few large ones (1MB - 5GB), sorted by size, lowest first. The same issue of hitting the minimum threshold can be observed and some small files time out from time to time, but there are no complete lockups of all connections. Also, after it transfered all of those tiny files and moved to large ones, the ram is slowly freeing up - no more PASV slowdowns, no more 421 timeouts 🥳.

Side note: In a real world scenario, file size distribution would be hopefully more even, so as long as there isn't a sequence of >150 tiny files, there should be no problems of activating the "slow down" mechanism.

Have you tried changing the thread priority or limiting the number of threads? https://github.com/aldostools/webMAN-MOD/blob/master/include/ftp.h#L1412-L1432

I'm not really sure if more time from CPU scheduler could help with kernel freeing it's memory faster and too low priority could make the buffers full all the time, as there would be not enough CPU time for the thread to process them.

Or maybe adding custom parameters to the function slisten() ? https://github.com/aldostools/webMAN-MOD/blob/master/include/init/socket.h#L67-L92

I tried to modify SO_SNDBUF, SO_RCVBUF and SO_LINGER there (and everywhere else, for good measure), but it did not help with anything unfortunately. I was able to confirm that they were set correctly too...

This line is commented. I don't remember if I tested uncommenting it. //setsockopt(s, SOL_SOCKET, SO_REUSEADDR, &optval, sizeof(optval));

This option could help with bug 1, but I don't all side effects, so opted for better error reporting instead.

There are more socket options that could be used also in the function connect_to_server() https://github.com/aldostools/webMAN-MOD/blob/master/include/init/socket.h#L8-L60

Not sure if there are any bugs related to outbound connections, so I didn't need to modified them yet.

This issue has been discussed on PSX-PLACE forum. This bug not only affect webMAN's FTP server. It also affect other FTP servers like multiMAN or Rebug Toolbox. The conclusion obtained was that something changed in LV1 between 4.21 and 4.30 https://www.psx-place.com/threads/discussion-research-ftp-speed-changes-between-firmwares.3040/

I haven't looked into optimizing large file speeds yet to be honest, only looked into connection problems related to hundreds of small files. The tables there are interesting though, I could test that with my current setup.

I've submitted a new commit f450445aeac71d69fe82ad6289a60da30c07630c to implement your changes in PS3MAPI server too.

Basically I moved your wait code to the module socket.h for reuse it in ps3mapi_server.h

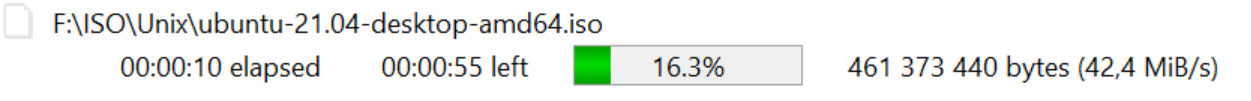

FTP avg upload speed: 16.3 MiB/s FTP avg download speed: 22.9 MiB/s

I don't think this is network limit, since if you remove call to cellFsWrite() here... 43.36 MiB/s for upload speed.

Maybe the disk IO got slower in between 4.21 and 4.30?

Maybe writing asynchronously could increase performance; that would require to implement a complex asynchronous function that write in a separated thread and that consume more memory.

Here is how Estwald implemented something like that in Iris Manager: https://github.com/aldostools/IRISMAN/blob/master/source/file_copy.h#L21-L51

It does indeed seem reading/writing in a separate thread would make a nice speedup:

When transferring 324MiB file Average recv() time: 2.970ms Average cellFsWrite() time: 4.218ms

This means there's around 3ms per 128KiB buffer fill of wasted time, which could be spent writing. Also, sending data to another thread won't take anywhere near that... I hope 😅.

So, after implementing a separate write thread for file uploads, I found out few things:

- The cost of thread synchronization with semaphores is practically immeasurable.

- Using 2 separate buffers - one for recv(), one for cellFsWrite() and switching them between them after each use does gain between 0% and 10% of speed. Not as much as I hoped. 😞

- Does that mean recv() and cellFsWrite() cannot run "in parallel" in the kernel, or is it something else slowing it down?

- By detaching those two threads from one another - recv() thread is throwing data away, cellFsWrite() fills the file with null buffer, I could transfer at ~45 MiB/s over the network, while only writing at around ~18 MiB/s.

- Is my 1TB 5400 RPM SMR HDD really that slow and therefore sabotaging the results? 😩

- Would switching to an SSD make it go faster? 🤔

- Writing two files in parallel is slower, I assume this is from additional seeks necessary to switch between two locations.

Edit: Thread with cellFsWrite() does in fact take twice as much time to execute compared to recv() one. This seems like bottleneck in HDD write speed and I don't like that. 😅

Maybe the thread priority could be impacting in the performance. That is if something a kernel level is not capping the speed.

How about allocating the disk space for the file before perform the cellFsWrite?

If at least one thread could improve the upload performance above 20MB/s it would be very helpful to upload large ISOs.

If the download is improved too it would be a plus. IMHO the upload is more important.

Maybe the thread priority could be impacting in the performance. That is if something a kernel level is not capping the speed.

I'm using priority 0 for the write thread already, so it shouldn't be that.

How about allocating the disk space for the file before perform the cellFsWrite?

Didn't think about this one, but the length of the file should unknown to the server anyway.

If at least one thread could improve the upload performance above 20MB/s it would be very helpful to upload large ISOs.

If the download is improved too it would be a plus. IMHO the upload is more important.

I agree with that.

Benchmarks for 250GB Samsung 860 Evo SSD:

Stock method: Download speed: ~24.47 MiB/s Upload speed: ~20.53 MiB/s

Two threads, two buffers: Upload speed: ~22.46 MiB/s

From function calls point of view (with 256KiB buffers):

- HDD

- recv() - ~6.1ms per call

- cellFsWrite() - ~12.0ms per call

- SSD

- recv() - ~6.0ms per call

- cellFsWrite() - ~8.7ms per call

I don't think those can get any faster. 🤔 One thing is interesting - while recv() for 256KiB buffer takes ~2x time of 128KiB buffer, cellFsWrite() is more expensive at ~2.4x time.

In a perfect scenario, that could mean: 256KiB * (1000ms / 6ms) = 42666.67 KiB/s of network receive throughput 256KiB * (1000ms / 8.7ms) = 29425.28 KiB/s of SSD write speed 128KiB * (1000ms / 3.7ms) = 34594.59 KiB/s of SSD write speed

I'm not sure why is the upload speed lower than 28.73 MiB/s for the SSD. It seems like it still takes around 11.13ms (256KiB *(1000ms / 22999 KiB/s) for the data to get from the network to the SSD. That's ~2.43ms longer than a perfect scenario. 🧐

Even by using CellFsAio (API for async IO in libfs), the speed is roughly the same as with the two thread method. I think that's all I can do for now, since I've got no experience outside of LV2 calls.

Also I found there's little to no benefit to transfer more than 2 - 3 files in parallel. Maybe the server could limit how many threads can enter STOR and RETR commands at the same tame? A semaphore would even make it possible to queue those requests as they come in.

I also have tried other things like:

- pre-allocate a larger file size using cellFsAllocateFileAreaWithoutZeroFill, then truncate it with the correct file size with cellFsFtruncate.

- Reduced the buffer size to 64KiB

- Changed the default buffer size using cellFsSetDefaultContainer

- Changed thread priority to 0 (THREAD_PRIO_HIGH)

In my tests I didn't notice any difference in the upload speed. However, my network setup it not good for tests since my PC is connected wireless using Wi-Fi 5 (802.11ac)