lucene-s3directory

lucene-s3directory copied to clipboard

lucene-s3directory copied to clipboard

ChecksumValidatingInputStream.read (ChecksumValidatingInputStream.java:102)

hi! this is a very cool project. I'm interested in using it to verify a remote lucene index stored in s3.

I'm running into this error (ChecksumValidatingInputStream.read (ChecksumValidatingInputStream.java:102)) when I try to use it to read a remote index.

Here are the steps I took:

After changing the lucene.version in pom.xml to 7.7.0, this is my script to test:

import java.nio.file.Path;

import com.erudika.lucene.store.s3.S3Directory;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.index.DirectoryReader;

import org.apache.lucene.index.IndexReader;

S3Directory remotedDir = new S3Directory("my-awesome-bucket-with-a-lucene-index");

remotedDir.listAll();

IndexReader remoteReader = DirectoryReader.open(remotedDir); // ERROR HERE

// works locally

FSDirectory localDir = FSDirectory.open(Path.of("/home/myusername/local-copy-of-the-lucene-index"));

localDir.listAll();

IndexReader localReader = DirectoryReader.open(localDir);

localReader.getDocCount("content");

I used the mvn plugin for jshell, to quickly run and test the above commands:

❯ mvn com.github.johnpoth:jshell-maven-plugin:1.3:run

[INFO] Scanning for projects...

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building lucene-s3directory 1.0.0-SNAPSHOT

[INFO] ------------------------------------------------------------------------

[INFO]

[INFO] --- jshell-maven-plugin:1.3:run (default-cli) @ lucene-s3directory ---

| Welcome to JShell -- Version 11.0.13

| For an introduction type: /help intro

jshell> import java.nio.file.Path;

...> import com.erudika.lucene.store.s3.S3Directory;

...> import org.apache.lucene.store.FSDirectory;

...> import org.apache.lucene.index.DirectoryReader;

...> import org.apache.lucene.index.IndexReader;

jshell> S3Directory remotedDir = new S3Directory("my-awesome-bucket-with-a-lucene-index");

remotedDir ==> S3Directory@278bb07e

jshell> remotedDir.listAll();

$7 ==> String[176] { "_2q8s.dii", "_2q8s.dim", "_2q8s.fdt", "_2q8s.fdx", "_2q8s.fnm", "_2q8s.nvd", "_2q8s.nvm", "_2q8s.si", "_2q8s_Lucene50_0.doc", "_2q8s_Lucene50_0.pos", "_2q8s_Lucene50_0.tim", "_2q8s_Lucene50_0.tip", "_2q8s_Lucene70_0.dvd", "_2q8s_Lucene70_0.dvm", "_2q8s_a.liv", "_3dtp.dii", "_3dtp.dim", "_3dtp.fdt", "_3dtp.fdx", "_3dtp.fnm", "_3dtp.nvd", "_3dtp.nvm", "_3dtp.si", "_3dtp_1.liv", "_3dtp_Lucene50_0.doc", "_3dtp_Lucene50_0.pos", "_3dtp_Lucene50_0.tim", "_3dtp_Lucene50_0.tip", "_3dtp_Lucene70_0.dvd", "_3dtp_Lucene70_0.dvm", "_46q2.dii", "_46q2.dim", "_46q2.fdt", "_46q2.fdx", "_46q2.fnm", "_46q2.nvd", "_46q2.nvm", "_46q2.si", "_46q2_1.liv", "_46q2 ... cene50_0.pos", "_k_Lucene50_0.tim", "_k_Lucene50_0.tip", "_k_Lucene70_0.dvd", "_k_Lucene70_0.dvm", "_l.dii", "_l.dim", "_l.fdt", "_l.fdx", "_l.fnm", "_l.nvd", "_l.nvm", "_l.si", "_l_Lucene50_0.doc", "_l_Lucene50_0.pos", "_l_Lucene50_0.tim", "_l_Lucene50_0.tip", "_l_Lucene70_0.dvd", "_l_Lucene70_0.dvm", "segments_17", "segments_3" }

jshell> IndexReader remoteReader = DirectoryReader.open(remotedDir);

| Exception java.lang.NullPointerException

| at ChecksumValidatingInputStream.read (ChecksumValidatingInputStream.java:102)

| at FilterInputStream.read (FilterInputStream.java:133)

| at SdkFilterInputStream.read (SdkFilterInputStream.java:66)

| at FetchOnBufferReadS3IndexInput.readInternal (FetchOnBufferReadS3IndexInput.java:126)

| at FetchOnBufferReadS3IndexInput.readInternal (FetchOnBufferReadS3IndexInput.java:112)

| at ConfigurableBufferedIndexInput.readBytes (ConfigurableBufferedIndexInput.java:129)

| at DataInput.readBytes (DataInput.java:87)

| at FetchOnBufferReadS3IndexInput$SlicedIndexInput.readInternal (FetchOnBufferReadS3IndexInput.java:195)

| at BufferedIndexInput.refill (BufferedIndexInput.java:342)

| at BufferedIndexInput.readByte (BufferedIndexInput.java:54)

| at BufferedChecksumIndexInput.readByte (BufferedChecksumIndexInput.java:41)

| at DataInput.readInt (DataInput.java:101)

| at CodecUtil.checkHeader (CodecUtil.java:194)

| at CodecUtil.checkIndexHeader (CodecUtil.java:255)

| at Lucene60FieldInfosFormat.read (Lucene60FieldInfosFormat.java:117)

| at SegmentCoreReaders.<init> (SegmentCoreReaders.java:108)

| at SegmentReader.<init> (SegmentReader.java:83)

| at StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:66)

| at StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:58)

| at SegmentInfos$FindSegmentsFile.run (SegmentInfos.java:688)

| at StandardDirectoryReader.open (StandardDirectoryReader.java:81)

| at DirectoryReader.open (DirectoryReader.java:63)

| at (#8:1)

❯ aws s3 ls s3://my-awesome-bucket-with-a-lucene-index --human-readable | grep "GiB"

2021-12-28 16:37:15 3.0 GiB _2q8s.fdt

2021-12-28 16:37:15 1.9 GiB _3dtp.fdt

2021-12-28 16:37:15 1.6 GiB _46q2.fdt

2021-12-28 16:37:15 3.4 GiB _4lbn.fdt

2021-12-28 16:37:15 1.0 GiB _4lbn_Lucene50_0.pos

2021-12-28 16:37:15 1.7 GiB _540o.fdt

2021-12-28 16:37:15 3.1 GiB _5pg8.fdt

Maybe its the large files on S3?

Do you have any idea what might be going on? Seems it works with FSDirectory.

Moved out of JShell into a script and found the suppressed exception in case its relevant:

Suppressed: org.apache.lucene.index.CorruptIndexException: checksum status indeterminate: unexpected exception (resource=BufferedChecksumIndexInput(FetchOnBufferReadS3IndexInput [slice=_5umk.fnm]))

Full Stack Trace:

Exception in thread "main" java.lang.NullPointerException

at software.amazon.awssdk.services.s3.checksums.ChecksumValidatingInputStream.read(ChecksumValidatingInputStream.java:102)

at java.base/java.io.FilterInputStream.read(FilterInputStream.java:133)

at software.amazon.awssdk.core.io.SdkFilterInputStream.read(SdkFilterInputStream.java:66)

at com.erudika.lucene.store.s3.index.FetchOnBufferReadS3IndexInput.readInternal(FetchOnBufferReadS3IndexInput.java:126)

at com.erudika.lucene.store.s3.index.FetchOnBufferReadS3IndexInput.readInternal(FetchOnBufferReadS3IndexInput.java:112)

at com.erudika.lucene.store.s3.index.ConfigurableBufferedIndexInput.readBytes(ConfigurableBufferedIndexInput.java:129)

at org.apache.lucene.store.DataInput.readBytes(DataInput.java:87)

at com.erudika.lucene.store.s3.index.FetchOnBufferReadS3IndexInput$SlicedIndexInput.readInternal(FetchOnBufferReadS3IndexInput.java:195)

at org.apache.lucene.store.BufferedIndexInput.refill(BufferedIndexInput.java:342)

at org.apache.lucene.store.BufferedIndexInput.readByte(BufferedIndexInput.java:54)

at org.apache.lucene.store.BufferedChecksumIndexInput.readByte(BufferedChecksumIndexInput.java:41)

at org.apache.lucene.store.DataInput.readInt(DataInput.java:101)

at org.apache.lucene.codecs.CodecUtil.checkHeader(CodecUtil.java:194)

at org.apache.lucene.codecs.CodecUtil.checkIndexHeader(CodecUtil.java:255)

at org.apache.lucene.codecs.lucene60.Lucene60FieldInfosFormat.read(Lucene60FieldInfosFormat.java:117)

at org.apache.lucene.index.SegmentCoreReaders.<init>(SegmentCoreReaders.java:108)

at org.apache.lucene.index.SegmentReader.<init>(SegmentReader.java:83)

at org.apache.lucene.index.StandardDirectoryReader$1.doBody(StandardDirectoryReader.java:66)

at org.apache.lucene.index.StandardDirectoryReader$1.doBody(StandardDirectoryReader.java:58)

at org.apache.lucene.index.SegmentInfos$FindSegmentsFile.run(SegmentInfos.java:688)

at org.apache.lucene.index.StandardDirectoryReader.open(StandardDirectoryReader.java:81)

at org.apache.lucene.index.DirectoryReader.open(DirectoryReader.java:63)

at com.erudika.lucene.store.s3.Main.main(Main.java:17)

Suppressed: org.apache.lucene.index.CorruptIndexException: checksum status indeterminate: unexpected exception (resource=BufferedChecksumIndexInput(FetchOnBufferReadS3IndexInput [slice=_5umk.fnm]))

at org.apache.lucene.codecs.CodecUtil.checkFooter(CodecUtil.java:471)

at org.apache.lucene.codecs.lucene60.Lucene60FieldInfosFormat.read(Lucene60FieldInfosFormat.java:176)

... 8 more

Caused by: java.lang.NullPointerException

at software.amazon.awssdk.services.s3.checksums.ChecksumValidatingInputStream.read(ChecksumValidatingInputStream.java:102)

at java.base/java.io.FilterInputStream.read(FilterInputStream.java:133)

at software.amazon.awssdk.core.io.SdkFilterInputStream.read(SdkFilterInputStream.java:66)

at com.erudika.lucene.store.s3.index.FetchOnBufferReadS3IndexInput.readInternal(FetchOnBufferReadS3IndexInput.java:126)

at com.erudika.lucene.store.s3.index.FetchOnBufferReadS3IndexInput.readInternal(FetchOnBufferReadS3IndexInput.java:112)

at com.erudika.lucene.store.s3.index.ConfigurableBufferedIndexInput.readBytes(ConfigurableBufferedIndexInput.java:129)

at org.apache.lucene.store.DataInput.readBytes(DataInput.java:87)

at com.erudika.lucene.store.s3.index.FetchOnBufferReadS3IndexInput$SlicedIndexInput.readInternal(FetchOnBufferReadS3IndexInput.java:195)

at org.apache.lucene.store.BufferedIndexInput.readBytes(BufferedIndexInput.java:160)

at org.apache.lucene.store.BufferedIndexInput.readBytes(BufferedIndexInput.java:116)

at org.apache.lucene.store.BufferedChecksumIndexInput.readBytes(BufferedChecksumIndexInput.java:49)

at org.apache.lucene.store.DataInput.readBytes(DataInput.java:87)

at org.apache.lucene.store.DataInput.skipBytes(DataInput.java:317)

at org.apache.lucene.codecs.CodecUtil.checkFooter(CodecUtil.java:458)

... 9 more

Still debugging...

Do you happen to know the Lucene version of the index you are trying to read from S3? The dependencies here haven't been updated in a while - maybe the AWS SDK needs an update as well.

I'm using Lucene 7.7.0 which I updated in the pom.xml before building. This change made the code stop having that exact error but it certainly didn't fix it.

diff --git a/src/main/java/com/erudika/lucene/store/s3/index/FetchOnBufferReadS3IndexInput.java b/src/main/java/com/erudika/lucene/store/s3/index/FetchOnBufferReadS3IndexInput.java

index efcb99d..438e00c 100644

--- a/src/main/java/com/erudika/lucene/store/s3/index/FetchOnBufferReadS3IndexInput.java

+++ b/src/main/java/com/erudika/lucene/store/s3/index/FetchOnBufferReadS3IndexInput.java

@@ -123,7 +123,9 @@ public class FetchOnBufferReadS3IndexInput extends S3BufferedIndexInput {

position = curPos;

}

res.skip(position);

- res.read(b, offset, length);

+ if (length > 0) {

+ res.read(b, offset, length);

+ }

position += length;

}

This is the new error in case it offers any clues: checksum failed (hardware problem?) : expected=24000000 actual=24407d9d

Exception in thread "main" org.apache.lucene.index.CorruptIndexException: checksum failed (hardware problem?) : expected=24000000 actual=24407d9d (resource=BufferedChecksumIndexInput(FetchOnBufferReadS3IndexInput))

at org.apache.lucene.codecs.CodecUtil.checkFooter(CodecUtil.java:419)

at org.apache.lucene.codecs.CodecUtil.checkFooter(CodecUtil.java:448)

at org.apache.lucene.codecs.lucene70.Lucene70SegmentInfoFormat.read(Lucene70SegmentInfoFormat.java:266)

at org.apache.lucene.index.SegmentInfos.readCommit(SegmentInfos.java:361)

at org.apache.lucene.index.SegmentInfos.readCommit(SegmentInfos.java:291)

at org.apache.lucene.index.StandardDirectoryReader$1.doBody(StandardDirectoryReader.java:61)

at org.apache.lucene.index.StandardDirectoryReader$1.doBody(StandardDirectoryReader.java:58)

at org.apache.lucene.index.SegmentInfos$FindSegmentsFile.run(SegmentInfos.java:688)

at org.apache.lucene.index.StandardDirectoryReader.open(StandardDirectoryReader.java:81)

at org.apache.lucene.index.DirectoryReader.open(DirectoryReader.java:63)

at com.erudika.lucene.store.s3.Main.main(Main.java:17)

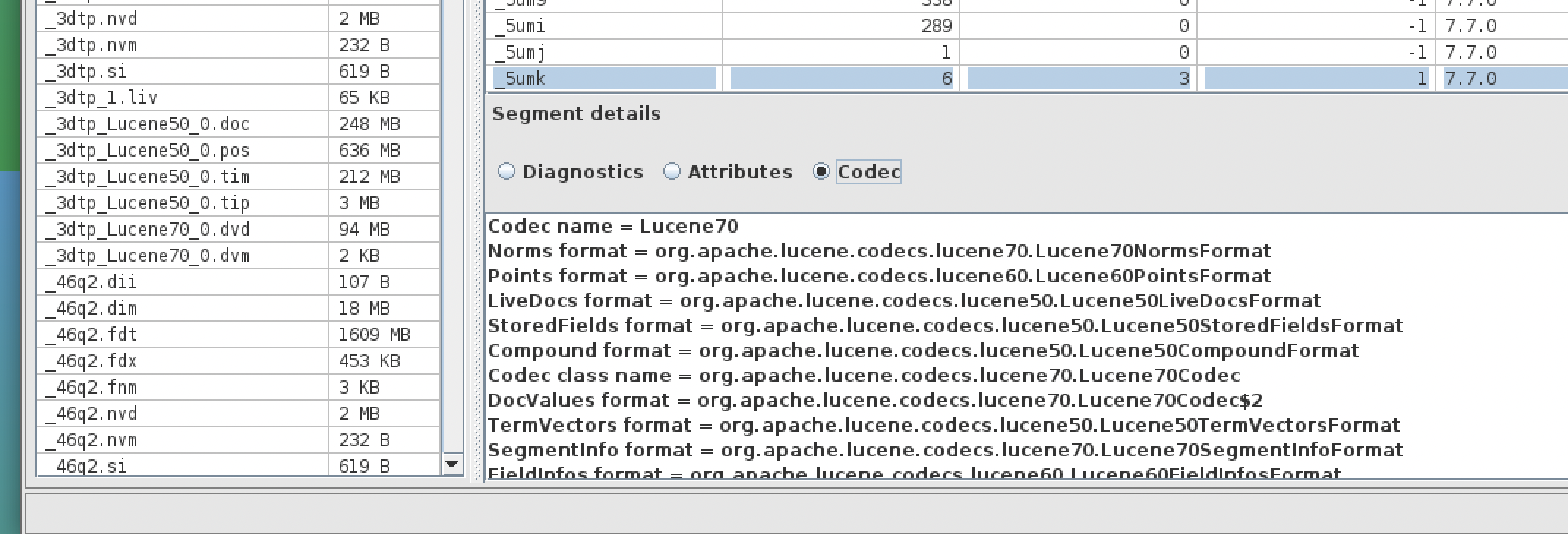

Given the error, I double checked that the local version of the index is okay using Luke's "Check Index" and got "No problems were detected with this index."

No, I mean the version of the actual index files on S3 - do you know that? Looks like it's version 6.x because I see calls to Lucene60FieldInfosFormat.read in the logs.

I think that's expected? I think the Lucene70 codec calls into the older classes for parts of the API that haven't changed, but don't quote me on that:

Perhaps the code for reading the objects from S3 contains a bug which only appears when the objects are large in size. I will have to review the code.

Okay, I'm 100% sure its Lucene 7.7.0. I just did a fresh download and ran it through Clue and the included demo program with Lucene 7.7.0.

# Downloading latest from S3

❯ mkdir debug-s3-lucene-issue

❯ cd debug-s3-lucene-issue

❯ aws s3 sync s3://my-bucket .

# Running Clue

❯ clue . info

readonly mode: true

Codec found: Lucene70

numdocs: 3545441

maxdoc: 4354259

num deleted docs: 808818

segment count: 23

number of fields: 32

# Download Lucene 7.7.0

❯ cd ~

❯ wget https://archive.apache.org/dist/lucene/java/7.7.0/lucene-7.7.0.tgz

❯ tar -xvf lucene-7.7.0.tgz

# Add Lucene 7.7.0 to CLASSPATH

❯ export CLASSPATH="$HOME/lucene-7.7.0/demo/*:$HOME/lucene-7.7.0/core/*:$HOME/lucene-7.7.0/queryparser/*:$HOME/lucene-7.7.0/analysis/common/*:$CLASSPATH"

# Running Lucene 7.7.0 Demo Program

❯ java org.apache.lucene.demo.SearchFiles -index debug-s3-lucene-issue -field content -query "hi"

Searching for: hi

975679 total matching documents

1. No path for this document

2. No path for this document

3. No path for this document

4. No path for this document

5. No path for this document

6. No path for this document

7. No path for this document

8. No path for this document

9. No path for this document

10. No path for this document

Perhaps the code for reading the objects from S3 contains a bug which only appears when the objects are large in size. I will have to review the code.

Sounds good! Thanks a ton for helping with debugging!

I will note that I found the error is occurring on relatively small files and intermittent on where it occurs although after a few runs it always settles on _5umk.cfs.

After adding a print statement:

diff --git a/src/main/java/com/erudika/lucene/store/s3/index/FetchOnBufferReadS3IndexInput.java b/src/main/java/com/erudika/lucene/store/s3/index/FetchOnBufferReadS3IndexInput.java

index efcb99d..8fc3570 100644

--- a/src/main/java/com/erudika/lucene/store/s3/index/FetchOnBufferReadS3IndexInput.java

+++ b/src/main/java/com/erudika/lucene/store/s3/index/FetchOnBufferReadS3IndexInput.java

@@ -70,6 +70,9 @@ public class FetchOnBufferReadS3IndexInput extends S3BufferedIndexInput {

if (logger.isDebugEnabled()) {

logger.info("refill({})", name);

}

+

+ System.out.println("Reading key: " + name);

+

ResponseInputStream<GetObjectResponse> res = s3Directory.getS3().

getObject(b -> b.bucket(s3Directory.getBucket()).key(name));

@@ -123,7 +126,9 @@ public class FetchOnBufferReadS3IndexInput extends S3BufferedIndexInput {

position = curPos;

}

res.skip(position);

- res.read(b, offset, length);

+ if (length > 0) {

+ res.read(b, offset, length);

+ }

position += length;

}

I found it was intermittent on where the actual bug occurred so perhaps its related to some network/buffering issue.

Reading key: _2q8s.si

Exception in thread "main" org.apache.lucene.index.CorruptIndexException: misplaced codec footer (file extended?): remaining=498, expected=16, fp=121 (resource=BufferedChecksumIndexInput(FetchOnBufferReadS3IndexInput))

at org.apache.lucene.codecs.CodecUtil.validateFooter(CodecUtil.java:497)

at org.apache.lucene.codecs.CodecUtil.checkFooter(CodecUtil.java:414)

at org.apache.lucene.codecs.CodecUtil.checkFooter(CodecUtil.java:448)

at org.apache.lucene.codecs.lucene70.Lucene70SegmentInfoFormat.read(Lucene70SegmentInfoFormat.java:266)

at org.apache.lucene.index.SegmentInfos.readCommit(SegmentInfos.java:361)

at org.apache.lucene.index.SegmentInfos.readCommit(SegmentInfos.java:291)

at org.apache.lucene.index.StandardDirectoryReader$1.doBody(StandardDirectoryReader.java:61)

at org.apache.lucene.index.StandardDirectoryReader$1.doBody(StandardDirectoryReader.java:58)

at org.apache.lucene.index.SegmentInfos$FindSegmentsFile.run(SegmentInfos.java:688)

at org.apache.lucene.index.StandardDirectoryReader.open(StandardDirectoryReader.java:81)

at org.apache.lucene.index.DirectoryReader.open(DirectoryReader.java:63)

at com.erudika.lucene.store.s3.Main.main(Main.java:17)

Reading key: _5umk.cfs

Exception in thread "main" org.apache.lucene.index.CorruptIndexException: codec header mismatch: actual header=0 vs expected header=1071082519 (resource=BufferedChecksumIndexInput(FetchOnBufferReadS3IndexInput [slice=_5umk.fnm]))

at org.apache.lucene.codecs.CodecUtil.checkHeader(CodecUtil.java:196)

at org.apache.lucene.codecs.CodecUtil.checkIndexHeader(CodecUtil.java:255)

at org.apache.lucene.codecs.lucene60.Lucene60FieldInfosFormat.read(Lucene60FieldInfosFormat.java:117)

at org.apache.lucene.index.SegmentCoreReaders.<init>(SegmentCoreReaders.java:108)

at org.apache.lucene.index.SegmentReader.<init>(SegmentReader.java:83)

at org.apache.lucene.index.StandardDirectoryReader$1.doBody(StandardDirectoryReader.java:66)

at org.apache.lucene.index.StandardDirectoryReader$1.doBody(StandardDirectoryReader.java:58)

at org.apache.lucene.index.SegmentInfos$FindSegmentsFile.run(SegmentInfos.java:688)

at org.apache.lucene.index.StandardDirectoryReader.open(StandardDirectoryReader.java:81)

at org.apache.lucene.index.DirectoryReader.open(DirectoryReader.java:63)

at com.erudika.lucene.store.s3.Main.main(Main.java:17)

Suppressed: org.apache.lucene.index.CorruptIndexException: checksum failed (hardware problem?) : expected=d8388aa0 actual=4ced1e38 (resource=BufferedChecksumIndexInput(FetchOnBufferReadS3IndexInput [slice=_5umk.fnm]))

at org.apache.lucene.codecs.CodecUtil.checkFooter(CodecUtil.java:419)

at org.apache.lucene.codecs.CodecUtil.checkFooter(CodecUtil.java:462)

at org.apache.lucene.codecs.lucene60.Lucene60FieldInfosFormat.read(Lucene60FieldInfosFormat.java:176)

... 8 more

❯ aws s3 ls --human-readable s3://my-bucket | egrep "_2q8s.si|_5umk.cfs"

2021-12-28 03:23:32 619 Bytes _2q8s.si

2021-12-28 03:23:32 41.3 KiB _5umk.cfs

^ as you can see the files are relatively small.

anyway, hope it helps!

I just pushed a few minor changes to the code, including updated dependencies - Lucene and AWS SDK. I noticed that your modification (that if (length > 0) check) breaks tests and causes the corruption exception. I ran the test without it and they passed.

Please note that all tests are executed against the actual S3 service, rather than a local S3 emulation environment.

Unfortunately this project is broken and I don't have the capacity to fix it. If you can debug - PRs are welcome.