airbyte


Postgres Source: Support JSONB datatype

What

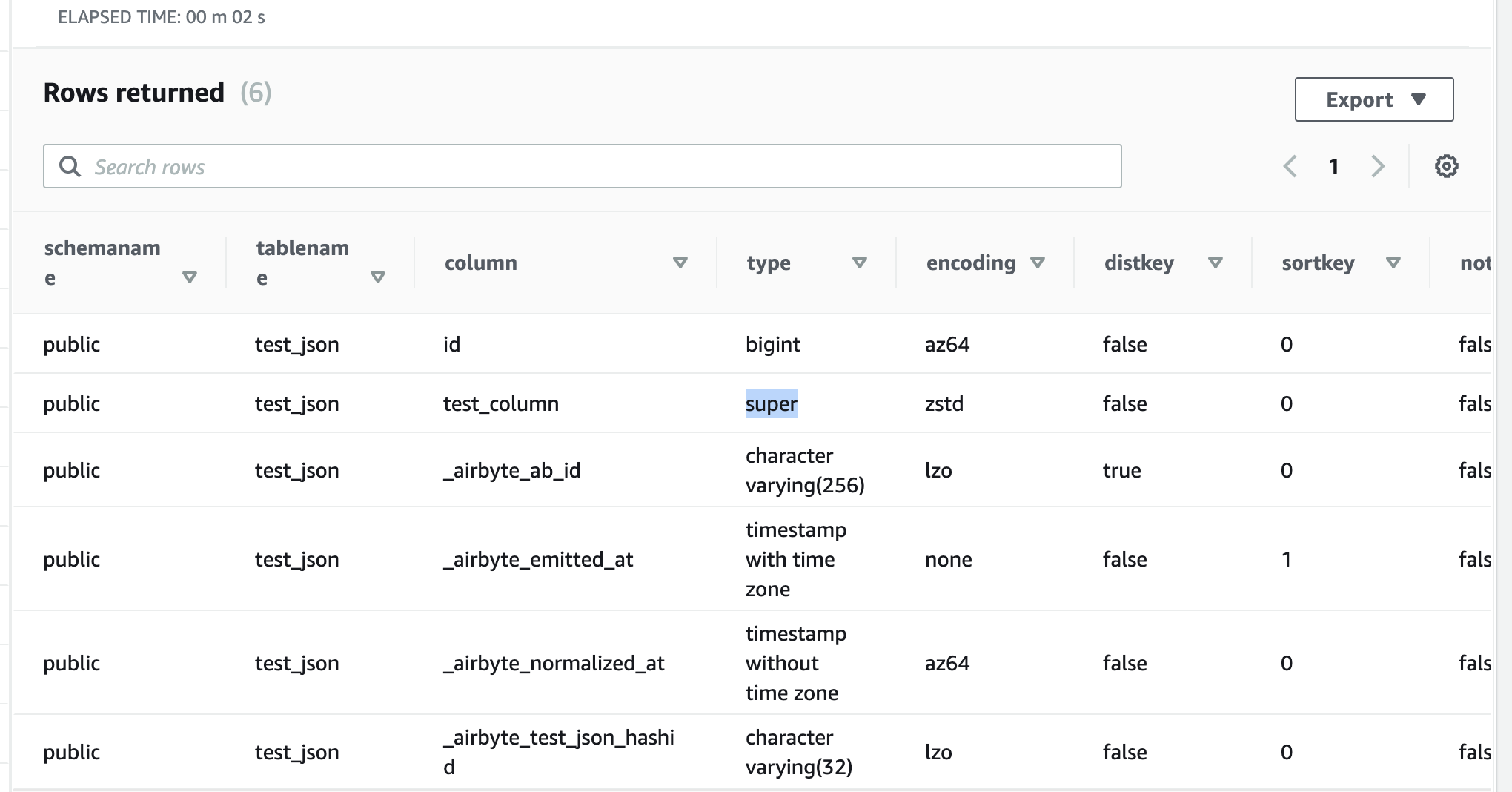

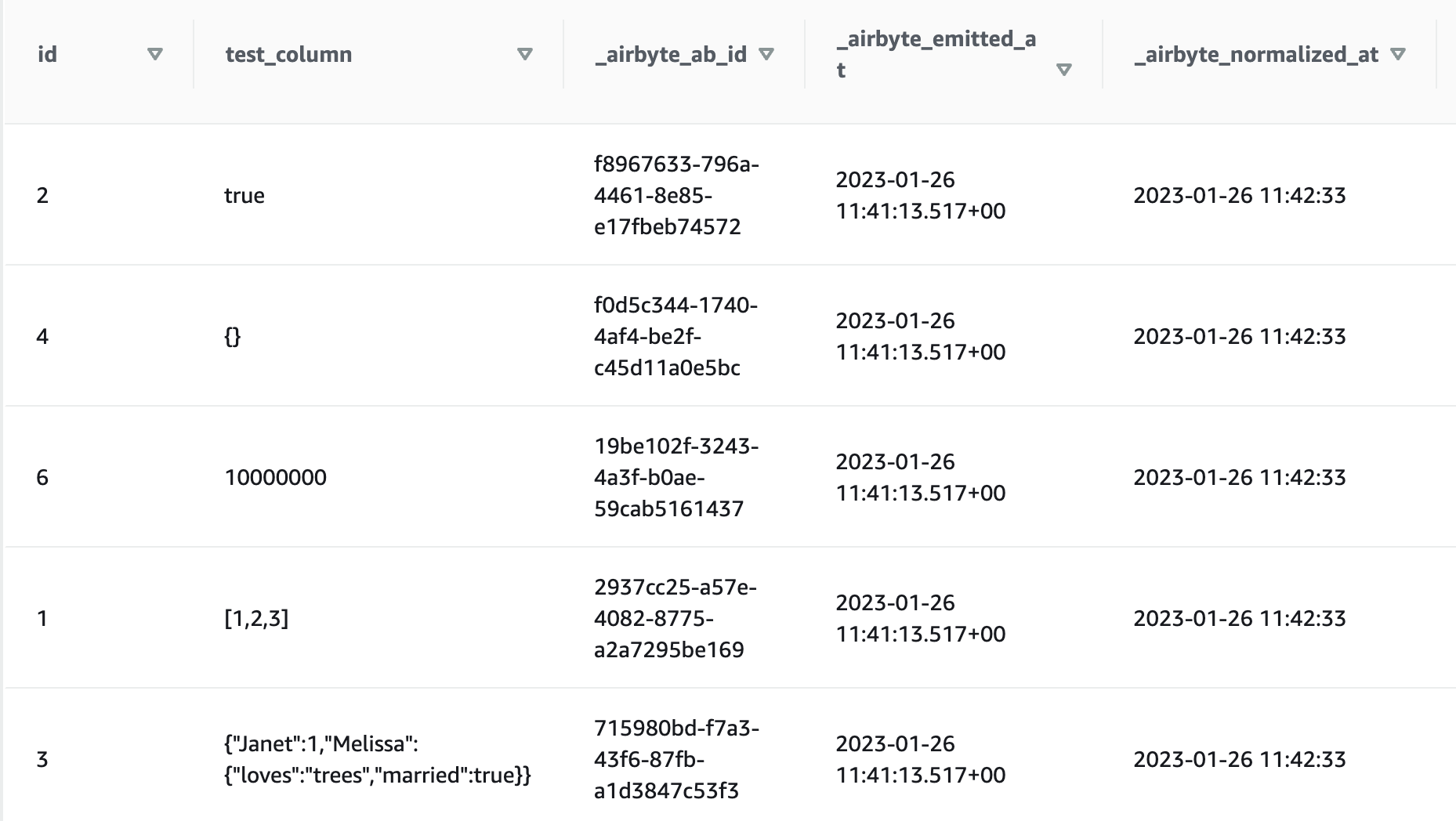

Postgres JSONB columns show up as strings in the destination. The field should instead be typed as VARIANT in Snowflake, SUPER in Redshift, JSONB in Postgres, etc.

How

Updated the JSON schema mapping to:

```json
"test_column": {
  "type": "object",
  "oneOf": [
    { "type": "array" },
    { "type": "object" },
    { "type": "string" },
    { "type": "number" },
    { "type": "boolean" }
  ]
}
```
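As a rough illustration of what the `oneOf` list accepts, here is a hypothetical Java helper (not part of the Airbyte codebase). It models decoded JSON with plain JDK types (`List` for arrays, `Map` for objects) and applies the JSON Schema `oneOf` rule that exactly one branch must match. It checks only the `oneOf` branches and deliberately ignores the sibling top-level `"type": "object"`; note that a JSON `null` value matches none of the five branches.

```java
import java.util.List;
import java.util.Map;

/**
 * Hypothetical helper, not part of the Airbyte codebase: checks a decoded JSON
 * value against the five oneOf branches of the JSONB column schema.
 * Decoded JSON is modelled with JDK types: List = array, Map = object.
 */
public class JsonbOneOfCheck {

  // Counts matching branches in schema order: array, object, string, number, boolean.
  static int matchingBranches(Object value) {
    int matches = 0;
    if (value instanceof List) matches++;
    if (value instanceof Map) matches++;
    if (value instanceof String) matches++;
    if (value instanceof Number) matches++;
    if (value instanceof Boolean) matches++;
    return matches;
  }

  // JSON Schema oneOf passes only when exactly one branch matches.
  // The sibling top-level "type": "object" is intentionally not checked here.
  static boolean satisfiesOneOf(Object value) {
    return matchingBranches(value) == 1;
  }

  public static void main(String[] args) {
    System.out.println(satisfiesOneOf(Map.of("a", 1))); // true
    System.out.println(satisfiesOneOf(List.of(1, 2)));  // true
    System.out.println(satisfiesOneOf("text"));         // true
    System.out.println(satisfiesOneOf(null));           // false: JSON null matches no branch
  }
}
```

Because the five JSON kinds are mutually exclusive, any non-null value matches exactly one branch, which is what keeps `oneOf` (rather than `anyOf`) satisfiable here.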

Recommended reading order

1. `JsonSchemaType.java`
2. `PostgresSourceOperations.java`

🚨 User Impact 🚨

Are there any breaking changes? What is the end result perceived by the user? If yes, please merge this PR with the 🚨🚨 emoji so changelog authors can further highlight this if needed.

Pre-merge Checklist

Expand the relevant checklist and delete the others.

New Connector

Community member or Airbyter

- [ ] Community member? Grant edit access to maintainers (instructions)

- [ ] Secrets in the connector's spec are annotated with `airbyte_secret`
- [ ] Unit & integration tests added and passing. Community members, please provide proof of success locally, e.g., a screenshot or copy-paste of unit, integration, and acceptance test output. To run acceptance tests for a Python connector, follow the instructions in the README. For Java connectors, run `./gradlew :airbyte-integrations:connectors:<name>:integrationTest`.
- [ ] Code reviews completed
- [ ] Documentation updated
    - [ ] Connector's `README.md`
    - [ ] Connector's `bootstrap.md`. See description and examples
    - [ ] `docs/integrations/<source or destination>/<name>.md` including changelog. See changelog example
    - [ ] `docs/integrations/README.md`
    - [ ] `airbyte-integrations/builds.md`

- [ ] PR name follows PR naming conventions

Airbyter

If this is a community PR, the Airbyte engineer reviewing this PR is responsible for the below items.

- [ ] Create a non-forked branch based on this PR and test the below items on it

- [ ] Build is successful

- [ ] If new credentials are required for use in CI, add them to GSM. Instructions.

- [ ] `/test connector=connectors/<name>` command is passing
- [ ] New Connector version released on Dockerhub by running the `/publish` command described here
- [ ] After the connector is published, connector added to connector index as described here

- [ ] Seed specs have been re-generated by building the platform and committing the changes to the seed spec files, as described here

Updating a connector

Community member or Airbyter

- [ ] Grant edit access to maintainers (instructions)

- [ ] Secrets in the connector's spec are annotated with `airbyte_secret`
- [x] Unit & integration tests added and passing. Community members, please provide proof of success locally, e.g., a screenshot or copy-paste of unit, integration, and acceptance test output. To run acceptance tests for a Python connector, follow the instructions in the README. For Java connectors, run `./gradlew :airbyte-integrations:connectors:<name>:integrationTest`.
- [ ] Code reviews completed
- [x] Documentation updated
    - [ ] Connector's `README.md`
    - [ ] Connector's `bootstrap.md`. See description and examples
    - [x] Changelog updated in `docs/integrations/<source or destination>/<name>.md` including changelog. See changelog example

- [x] PR name follows PR naming conventions

Airbyter

If this is a community PR, the Airbyte engineer reviewing this PR is responsible for the below items.

- [ ] Create a non-forked branch based on this PR and test the below items on it

- [ ] Build is successful

- [ ] If new credentials are required for use in CI, add them to GSM. Instructions.

- [ ] `/test connector=connectors/<name>` command is passing
- [ ] New Connector version released on Dockerhub and connector version bumped by running the `/publish` command described here

Connector Generator

- [ ] Issue acceptance criteria met

- [ ] PR name follows PR naming conventions

- [ ] If adding a new generator, add it to the list of scaffold modules being tested

- [ ] The generator test modules (all connectors with `-scaffold` in their name) have been updated with the latest scaffold by running `./gradlew :airbyte-integrations:connector-templates:generator:testScaffoldTemplates`, then checking in your changes
- [ ] Documentation which references the generator is updated as needed

Tests

Unit

Put your unit tests output here.

Integration

Put your integration tests output here.

Acceptance

Put your acceptance tests output here.

Affected Connector Report

NOTE ⚠️ Changes in this PR affect the following connectors. Make sure to do the following as needed:

- Run integration tests

- Bump connector or module version

- Add changelog

- Publish the new version

✅ Sources (6)

| Connector | Version | Changelog | Publish |
|---|---|---|---|
| source-alloydb | 1.0.49 | ✅ | ✅ |
| source-alloydb-strict-encrypt | 1.0.49 | 🔵 (ignored) | 🔵 (ignored) |
| source-mssql | 0.4.29 | ✅ | ✅ |
| source-mysql | 1.0.21 | ✅ | ✅ |
| source-postgres | 1.0.50 | ✅ | ✅ |
| source-postgres-strict-encrypt | 1.0.50 | 🔵 (ignored) | 🔵 (ignored) |

- See "Actionable Items" below for how to resolve warnings and errors.

✅ Destinations (0)

| Connector | Version | Changelog | Publish |
|---|---|---|---|

- See "Actionable Items" below for how to resolve warnings and errors.

✅ Other Modules (0)

Actionable Items


| Category | Status | Actionable Item |
|---|---|---|
| Version | ❌ mismatch | The version of the connector is different from its normal variant. Please bump the version of the connector. |
| | ⚠ doc not found | The connector does not seem to have a documentation file. This can be normal (e.g. basic connector like source-jdbc is not published or documented). Please double-check to make sure that it is not a bug. |
| Changelog | ⚠ doc not found | The connector does not seem to have a documentation file. This can be normal (e.g. basic connector like source-jdbc is not published or documented). Please double-check to make sure that it is not a bug. |
| | ❌ changelog missing | There is no changelog for the current version of the connector. If you are the author of the current version, please add a changelog. |
| Publish | ⚠ not in seed | The connector is not in the seed file (e.g. source_definitions.yaml), so its publication status cannot be checked. This can be normal (e.g. some connectors are cloud-specific, and only listed in the cloud seed file). Please double-check to make sure that it is not a bug. |
| | ❌ diff seed version | The connector exists in the seed file, but the latest version is not listed there. This usually means that the latest version is not published. Please use the /publish command to publish the latest version. |

/test connector=connectors/source-postgres-strict-encrypt

:clock2: connectors/source-postgres-strict-encrypt https://github.com/airbytehq/airbyte/actions/runs/3984975572

:white_check_mark: connectors/source-postgres-strict-encrypt https://github.com/airbytehq/airbyte/actions/runs/3984975572

No Python unittests run

Build Passed

Test summary info:

All Passed

/test connector=connectors/source-postgres

:clock2: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/3984976632

:white_check_mark: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/3984976632

Python tests coverage:

```
Name                                               Stmts  Miss Cover   Missing
----------------------------------------------------------------------------------
source_acceptance_test/base.py                        12     4   67%   16-19
source_acceptance_test/config.py                     141     5   96%   87, 93, 239, 243-244
source_acceptance_test/conftest.py                   211    95   55%   36, 42-44, 49, 54, 77, 83, 89-91, 110, 115-117, 123-125, 131-132, 137-138, 143, 149, 158-167, 173-178, 193, 217, 248, 254, 262-267, 275-285, 293-306, 311-317, 324-335, 342-358
source_acceptance_test/plugin.py                      69    25   64%   22-23, 31, 36, 120-140, 144-148
source_acceptance_test/tests/test_core.py            402   115   71%   53, 58, 93-104, 109-116, 120-121, 125-126, 308, 346-363, 376-387, 391-396, 402, 435-440, 478-485, 528-530, 533, 598-606, 618-621, 626, 682-683, 689, 692, 728-738, 751-776
source_acceptance_test/tests/test_incremental.py     160    14   91%   58-65, 70-83, 246
source_acceptance_test/utils/asserts.py               39     2   95%   62-63
source_acceptance_test/utils/common.py                94    10   89%   16-17, 32-38, 72, 75
source_acceptance_test/utils/compare.py               62    23   63%   21-51, 68, 97-99
source_acceptance_test/utils/connector_runner.py     133    33   75%   24-27, 46-47, 50-54, 57-58, 73-75, 78-80, 83-85, 88-90, 93-95, 124-125, 159-161, 208
source_acceptance_test/utils/json_schema_helper.py   107    13   88%   30-31, 38, 41, 65-68, 96, 120, 192-194
----------------------------------------------------------------------------------
TOTAL                                               1609   339   79%
```

Build Passed

Test summary info:

```
=========================== short test summary info ============================
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_core.py:94: The previous and actual specifications are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_core.py:377: The previous and actual discovered catalogs are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_incremental.py:22: `future_state` has a bypass reason, skipping.
================== 54 passed, 6 skipped in 715.83s (0:11:55) ===================
```

Airbyte Code Coverage

| File | Coverage [93.13%] | :green_apple: |
|---|---|---|
| JsonSchemaPrimitiveUtil.java | 98.15% | :green_apple: |
| JsonSchemaType.java | 90.31% | :green_apple: |
| Total Project Coverage | 24.68% | :x: |

Just tried this out (I hit a use case at the end of last week that requires JSONB to be handled correctly), and I'm still getting strings in the destination. The stream config is updated along with the source connector image.


@adam-bloom In which destinations did you get strings?


@VitaliiMaltsev We use Redshift as our destination. I ended up putting a workaround in a custom Redshift destination in the meantime, but I'm definitely willing to test this build again in the future. I'm on the Airbyte Slack as well if you want more details.


@adam-bloom I tested the Redshift destination, and the values were replicated as the SUPER datatype, not as String.


What is in the column, though? The column may be a SUPER, but a SUPER can hold any type of data (objects, arrays, strings, etc.). My testing showed that the data was being stored as a string inside the SUPER, not as an object, which limits the size of the data to 65 KB. Try a large JSONB row and you should see that it is currently (correctly) dropped by the Redshift destination as too large for a SUPER varchar field. Note that a SUPER has a limit of 1 MB (potentially going up to 16 MB soon; the docs aren't clear on this, but I just noticed them starting to use 16 MB).
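To make the size concern concrete, here is a minimal sketch: if a JSONB document lands in a SUPER column as a string, its byte length (not its character count) is what counts against the string limit. The 65,535-byte constant is an assumption based on Redshift's maximum VARCHAR length, and `fitsAsSuperString` is a hypothetical name, not an Airbyte or Redshift API.

```java
import java.nio.charset.StandardCharsets;

/**
 * Sketch of the size concern: a JSONB document stored as a string inside a
 * Redshift SUPER value is bounded by the string limit, not the SUPER limit.
 * The 65,535-byte figure is an assumption (Redshift's maximum VARCHAR length).
 */
public class SuperStringLimit {

  static final int MAX_SUPER_STRING_BYTES = 65_535; // assumed limit

  // Counts UTF-8 bytes rather than characters, since multi-byte characters
  // consume more of the limit than their character count suggests.
  static boolean fitsAsSuperString(String jsonText) {
    return jsonText.getBytes(StandardCharsets.UTF_8).length <= MAX_SUPER_STRING_BYTES;
  }

  public static void main(String[] args) {
    String small = "{\"id\": 1}";
    // Roughly 70 KB of JSON: {"payload":"xxxx..."}
    String large = "{\"payload\":\"" + "x".repeat(70_000) + "\"}";
    System.out.println(fitsAsSuperString(small)); // true
    System.out.println(fitsAsSuperString(large)); // false
  }
}
```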

I'm not using normalization, but that shouldn't impact this.

/test connector=connectors/source-postgres-strict-encrypt

:clock2: connectors/source-postgres-strict-encrypt https://github.com/airbytehq/airbyte/actions/runs/4014679023

:white_check_mark: connectors/source-postgres-strict-encrypt https://github.com/airbytehq/airbyte/actions/runs/4014679023

No Python unittests run

Build Passed

Test summary info:

All Passed

/test connector=connectors/source-postgres

:clock2: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4014679382

:white_check_mark: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4014679382

Python tests coverage:

```
Name                                               Stmts  Miss Cover   Missing
----------------------------------------------------------------------------------
source_acceptance_test/base.py                        12     4   67%   16-19
source_acceptance_test/config.py                     141     5   96%   87, 93, 239, 243-244
source_acceptance_test/conftest.py                   211    95   55%   36, 42-44, 49, 54, 77, 83, 89-91, 110, 115-117, 123-125, 131-132, 137-138, 143, 149, 158-167, 173-178, 193, 217, 248, 254, 262-267, 275-285, 293-306, 311-317, 324-335, 342-358
source_acceptance_test/plugin.py                      69    25   64%   22-23, 31, 36, 120-140, 144-148
source_acceptance_test/tests/test_core.py            402   115   71%   53, 58, 93-104, 109-116, 120-121, 125-126, 308, 346-363, 376-387, 391-396, 402, 435-440, 478-485, 528-530, 533, 598-606, 618-621, 626, 682-683, 689, 692, 728-738, 751-776
source_acceptance_test/tests/test_incremental.py     160    14   91%   58-65, 70-83, 246
source_acceptance_test/utils/asserts.py               39     2   95%   62-63
source_acceptance_test/utils/common.py                94    10   89%   16-17, 32-38, 72, 75
source_acceptance_test/utils/compare.py               62    23   63%   21-51, 68, 97-99
source_acceptance_test/utils/connector_runner.py     133    33   75%   24-27, 46-47, 50-54, 57-58, 73-75, 78-80, 83-85, 88-90, 93-95, 124-125, 159-161, 208
source_acceptance_test/utils/json_schema_helper.py   107    13   88%   30-31, 38, 41, 65-68, 96, 120, 192-194
----------------------------------------------------------------------------------
TOTAL                                               1609   339   79%
```

Build Passed

Test summary info:

```
=========================== short test summary info ============================
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_core.py:94: The previous and actual specifications are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_core.py:377: The previous and actual discovered catalogs are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_incremental.py:22: `future_state` has a bypass reason, skipping.
================== 54 passed, 6 skipped in 715.82s (0:11:55) ===================
```


@adam-bloom I have updated the values mapping.

Please pull the changes from this branch and check whether it works for you now.


@VitaliiMaltsev confirmed that it is behaving as expected now. Thanks!

My workaround was a custom Redshift destination build that coerced the value to JSON the same way :). So Debezium is outputting strings for JSONB then, and not objects?
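The difference being discussed, a JSONB value emitted as a JSON string versus embedded as a JSON object, can be seen in a small hypothetical sketch that builds the record text by hand. The class and method names are illustrative only; a real connector would use a JSON library such as Jackson's `ObjectMapper` rather than string concatenation, and the embedded variant assumes its input is already valid JSON (which Postgres guarantees for JSONB text).

```java
/**
 * Illustration only: hypothetical helpers contrasting a JSONB value emitted as a
 * JSON string with the same value embedded as a JSON object in the record.
 */
public class JsonbEmission {

  // Minimal escaping for this sketch: backslashes and double quotes only.
  static String escape(String s) {
    return s.replace("\\", "\\\\").replace("\"", "\\\"");
  }

  // The value is wrapped in quotes, so the destination sees a string.
  static String recordWithStringifiedJsonb(String column, String jsonbText) {
    return "{\"" + column + "\":\"" + escape(jsonbText) + "\"}";
  }

  // The value is inserted as-is (assumed valid JSON), so the destination sees an object or array.
  static String recordWithEmbeddedJsonb(String column, String jsonbText) {
    return "{\"" + column + "\":" + jsonbText + "}";
  }

  public static void main(String[] args) {
    String jsonb = "{\"a\":1}";
    System.out.println(recordWithStringifiedJsonb("test_column", jsonb)); // {"test_column":"{\"a\":1}"}
    System.out.println(recordWithEmbeddedJsonb("test_column", jsonb));   // {"test_column":{"a":1}}
  }
}
```

In the first form a destination such as Redshift can only store the value as a string inside SUPER; in the second it receives a structured object.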


@adam-bloom I tested Postgres JSONB <> Redshift destination in both modes (STANDARD and CDC). In both cases the results are the same: the SUPER datatype with object values.

Can you add a test (or provide an example) for what the catalog looks like for the JSONB type?

@akashkulk Here is an example of the JSON schema for JSONB:

```json
"test_column": {
  "type": "object",
  "oneOf": [
    { "type": "array" },
    { "type": "object" },
    { "type": "string" },
    { "type": "number" },
    { "type": "boolean" }
  ]
}
```

This construction allows successful syncs with normalization for JDBC destinations, as well as successful S3 Avro/Parquet conversions.

@akashkulk @edgao Please advise how to update this PR to support the v1 protocol as well.

@VitaliiMaltsev I agree that it works; my question was why `ObjectMapper` is even necessary. Where is the JSON being stringified in the first place? I ask because other data types (numeric data types) are written as strings too and have to be converted, which doesn't make much sense. I can open a separate issue for that.


@adam-bloom Got it. No, these issues are not related; we have a different mapping for each datatype. Thanks for creating a separate issue.

The Postgres source doesn't currently support v1. But in v1-land, the catalog should look like this:

```json
"test_column": {
  "type": "object",
  "oneOf": [
    { "type": "array" },
    { "type": "object" },
    { "type": "AirbyteTypes.yaml#definitions/String" },
    { "type": "AirbyteTypes.yaml#definitions/Integer" },
    { "type": "AirbyteTypes.yaml#definitions/boolean" }
  ]
}
```

/test connector=connectors/source-postgres-strict-encrypt

:clock2: connectors/source-postgres-strict-encrypt https://github.com/airbytehq/airbyte/actions/runs/4043603514

:white_check_mark: connectors/source-postgres-strict-encrypt https://github.com/airbytehq/airbyte/actions/runs/4043603514

No Python unittests run

Build Passed

Test summary info:

All Passed

/test connector=connectors/source-postgres

:clock2: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4043604627

:white_check_mark: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4043604627

Python tests coverage:

```
Name                                               Stmts  Miss Cover   Missing
----------------------------------------------------------------------------------
source_acceptance_test/base.py                        12     4   67%   16-19
source_acceptance_test/config.py                     141     5   96%   87, 93, 239, 243-244
source_acceptance_test/conftest.py                   211    95   55%   36, 42-44, 49, 54, 77, 83, 89-91, 110, 115-117, 123-125, 131-132, 137-138, 143, 149, 158-167, 173-178, 193, 217, 248, 254, 262-267, 275-285, 293-306, 311-317, 324-335, 342-358
source_acceptance_test/plugin.py                      69    25   64%   22-23, 31, 36, 120-140, 144-148
source_acceptance_test/tests/test_core.py            402   115   71%   53, 58, 93-104, 109-116, 120-121, 125-126, 308, 346-363, 376-387, 391-396, 402, 435-440, 478-485, 528-530, 533, 598-606, 618-621, 626, 682-683, 689, 692, 728-738, 751-776
source_acceptance_test/tests/test_incremental.py     160    14   91%   58-65, 70-83, 246
source_acceptance_test/utils/asserts.py               39     2   95%   62-63
source_acceptance_test/utils/common.py                94    10   89%   16-17, 32-38, 72, 75
source_acceptance_test/utils/compare.py               62    23   63%   21-51, 68, 97-99
source_acceptance_test/utils/connector_runner.py     133    33   75%   24-27, 46-47, 50-54, 57-58, 73-75, 78-80, 83-85, 88-90, 93-95, 124-125, 159-161, 208
source_acceptance_test/utils/json_schema_helper.py   107    13   88%   30-31, 38, 41, 65-68, 96, 120, 192-194
----------------------------------------------------------------------------------
TOTAL                                               1609   339   79%
```

Build Passed

Test summary info:

```
=========================== short test summary info ============================
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_core.py:94: The previous and actual specifications are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_core.py:377: The previous and actual discovered catalogs are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_incremental.py:22: `future_state` has a bypass reason, skipping.
================== 54 passed, 6 skipped in 715.85s (0:11:55) ===================
```

A couple of thoughts after chatting with Edward:

- Have you tested this against v1 normalization and platform, as Edward mentioned here? The upgrade to v1 has led to some issues at this point, so perhaps it's worth holding off on this until that is in?

- Is the top-level object required? Does having the test column as `test_column: {oneOf: [...], airbyte_type: jsonb}` work? The way you have `test_column` set up currently, it seems to kick off normalization's unknown-type logic, which defaults to jsonb.

> field should be as VARIANT in Snowflake, SUPER in Redshift, JSONB in Postgres

What here tells these destinations to create these types? What will happen for connectors that don't have a specialized JSON type?


I believe the dbt macros are responsible for putting values into the proper datatypes. You can find them in the `datatypes.sql` file.


@akashkulk

- Not yet. Will take a look.

- I agree that such a strange schema is a little confusing. This schema allows us to make successful syncs with normalization (which needs the top-level `"type": "object"`) and also to successfully convert Avro records (which needs the `oneOf` structure).

I arrived at exactly this JSON schema through numerous tests with various destinations, since it showed the best results so far.

Full test results:

- Redshift: JSONB -> SUPER
    - Standard inserts: ok
    - S3 staging: ok
- Snowflake: JSONB -> VARIANT
    - Internal staging: ok
    - AWS S3 staging (CSV): ok
    - GCS staging (CSV): ok
    - Azure Blob staging (CSV): ok
- BigQuery
    - Standard inserts: JSONB -> STRING (ok)
    - GCS staging: JSONB -> STRING (ok)
- S3: CSV ok, JSONL ok, Avro ok, Parquet ok
- MSSQL: JSONB -> VARCHAR (ok)
- Postgres: JSONB -> JSONB (ok)
- MySQL: JSONB -> JSON (ok)
- BigQuery Denormalized
    - Standard inserts: JSONB -> STRING (failed)
    - GCS staging: JSONB -> STRING (failed)

For BigQuery Denormalized, I created a separate issue.
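For reference, the destination types reported in the test results above can be collected into a small lookup table. This is a hypothetical summary, not how Airbyte selects column types (that is done by each destination's normalization macros), and it omits BigQuery Denormalized, whose syncs failed.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

/**
 * Hypothetical summary of the manual test results: the column type each
 * destination produced for a Postgres JSONB source column.
 */
public class JsonbDestinationTypes {

  // Destination -> observed column type. BigQuery Denormalized is omitted (failed).
  private static final Map<String, String> OBSERVED_TYPES = Map.of(
      "redshift", "SUPER",
      "snowflake", "VARIANT",
      "bigquery", "STRING",
      "mssql", "VARCHAR",
      "postgres", "JSONB",
      "mysql", "JSON");

  // Returns empty for destinations that were not part of the reported tests.
  static Optional<String> observedType(String destination) {
    return Optional.ofNullable(OBSERVED_TYPES.get(destination.toLowerCase(Locale.ROOT)));
  }

  public static void main(String[] args) {
    System.out.println(observedType("Redshift").orElse("not tested")); // SUPER
    System.out.println(observedType("Oracle").orElse("not tested"));   // not tested
  }
}
```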

/test connector=connectors/source-postgres

:clock2: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4077687911

:white_check_mark: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4077687911

Python tests coverage:

```
Name                                               Stmts  Miss Cover   Missing
----------------------------------------------------------------------------------
source_acceptance_test/base.py                        12     4   67%   16-19
source_acceptance_test/config.py                     141     5   96%   87, 93, 239, 243-244
source_acceptance_test/conftest.py                   211    95   55%   36, 42-44, 49, 54, 77, 83, 89-91, 110, 115-117, 123-125, 131-132, 137-138, 143, 149, 158-167, 173-178, 193, 217, 248, 254, 262-267, 275-285, 293-306, 311-317, 324-335, 342-358
source_acceptance_test/plugin.py                      69    25   64%   22-23, 31, 36, 120-140, 144-148
source_acceptance_test/tests/test_core.py            476   117   75%   53, 58, 97-108, 113-120, 124-125, 129-130, 380, 400, 438, 476-493, 506-517, 521-526, 532, 565-570, 608-615, 658-660, 663, 728-736, 748-751, 756, 812-813, 819, 822, 858-868, 881-906
source_acceptance_test/tests/test_incremental.py     160    14   91%   58-65, 70-83, 246
source_acceptance_test/utils/asserts.py               39     2   95%   62-63
source_acceptance_test/utils/common.py                94    10   89%   16-17, 32-38, 72, 75
source_acceptance_test/utils/compare.py               62    23   63%   21-51, 68, 97-99
source_acceptance_test/utils/connector_runner.py     133    33   75%   24-27, 46-47, 50-54, 57-58, 73-75, 78-80, 83-85, 88-90, 93-95, 124-125, 159-161, 208
source_acceptance_test/utils/json_schema_helper.py   114    13   89%   31-32, 39, 42, 66-69, 97, 121, 203-205
----------------------------------------------------------------------------------
TOTAL                                               1690   341   80%
```

Build Passed

Test summary info:

```
=========================== short test summary info ============================
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_core.py:98: The previous and actual specifications are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_core.py:507: The previous and actual discovered catalogs are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/source_acceptance_test/tests/test_incremental.py:22: `future_state` has a bypass reason, skipping.
================== 66 passed, 6 skipped in 713.69s (0:11:53) ===================
```

/test connector=connectors/source-postgres

:clock2: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4163431821

:x: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4163431821

:bug: https://gradle.com/s/nf2yq4lyc63u6

Build Failed

Test summary info:

```
=========================== short test summary info ============================
FAILED test_incremental.py::TestIncremental::test_two_sequential_reads[inputs0]
FAILED test_incremental.py::TestIncremental::test_two_sequential_reads[inputs1]
ERROR test_core.py::TestBasicRead::test_read[inputs0] - Failed: High strictne...
ERROR test_core.py::TestBasicRead::test_read[inputs1] - Failed: High strictne...
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/connector_acceptance_test/tests/test_core.py:98: The previous and actual specifications are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/connector_acceptance_test/tests/test_core.py:507: The previous and actual discovered catalogs are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/connector_acceptance_test/tests/test_incremental.py:22: `future_state` has a bypass reason, skipping.
======== 2 failed, 62 passed, 6 skipped, 2 errors in 406.29s (0:06:46) =========
```

Can we put something in the CDC value converter to parse these into JSON nodes? I think it would be surprising for users if we read different values based on whether we're in CDC or `SELECT *` mode.

@edgao Unfortunately, we cannot. This is a limitation of the Kafka Connect API used by Debezium, so the construction `registration.register(SchemaBuilder.string().optional(), x -> { ... })` is used for jsonb.

/test connector=connectors/source-postgres

:clock2: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4225430594

:x: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4225430594

:bug: https://gradle.com/s/2gx7hx4enlh5o

Build Failed

Test summary info:

```
=========================== short test summary info ============================
FAILED test_incremental.py::TestIncremental::test_two_sequential_reads[inputs0]
FAILED test_incremental.py::TestIncremental::test_two_sequential_reads[inputs1]
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/connector_acceptance_test/tests/test_core.py:98: The previous and actual specifications are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/connector_acceptance_test/tests/test_core.py:507: The previous and actual discovered catalogs are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/connector_acceptance_test/tests/test_incremental.py:22: `future_state` has a bypass reason, skipping.
============= 2 failed, 64 passed, 6 skipped in 413.77s (0:06:53) ==============
```

/test connector=connectors/source-postgres

:clock2: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4242312817

:x: connectors/source-postgres https://github.com/airbytehq/airbyte/actions/runs/4242312817

:bug: https://gradle.com/s/aptkqxnuiuwwk

Build Failed

Test summary info:

```
=========================== short test summary info ============================
FAILED test_incremental.py::TestIncremental::test_two_sequential_reads[inputs0]
FAILED test_incremental.py::TestIncremental::test_two_sequential_reads[inputs1]
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/connector_acceptance_test/tests/test_core.py:98: The previous and actual specifications are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/connector_acceptance_test/tests/test_core.py:507: The previous and actual discovered catalogs are identical.
SKIPPED [2] ../usr/local/lib/python3.9/site-packages/connector_acceptance_test/tests/test_incremental.py:22: `future_state` has a bypass reason, skipping.
============= 2 failed, 64 passed, 6 skipped in 472.86s (0:07:52) ==============
```